Cloudron 9.0 (beta) bug reports

-

I get "cp exited with code 1" errors on a backup via rsync to a locally attached USB-HDD:

Nov 11 00:16:14 box:backuptask upload: path snapshot/mail site 59742348-c3f1-490a-aa43-3dc1f1701f47 uploaded: {"transferred":24537785098,"size":24537785098,"fileCount":96084}
Nov 11 00:16:14 box:tasks updating task 21646 with: {"percent":91.47619047619045,"message":"Uploading integrity information to snapshot/mail.backupinfo (mail)"}
Nov 11 00:16:15 box:backupupload upload completed. error: null
Nov 11 00:16:15 box:backuptask runBackupUpload: result - {"result":{"stats":{"transferred":24537785098,"size":24537785098,"fileCount":96084},"integrity":{"signature":"72d32a4ba3105b1a7290323f430f85463992ce714e059553c3995f86bd8584364cf44b481149fe4731e4c2cde6174a7b2c07e19e2c938589594c04bae387550c"}}}
Nov 11 00:16:15 box:backuptask uploadMailSnapshot: took 671.27 seconds
Nov 11 00:16:15 box:backuptask backupMailWithTag: rotating mail snapshot of 59742348-c3f1-490a-aa43-3dc1f1701f47 to 2025-11-10-230001-644/mail_v9.0.7
Nov 11 00:16:15 box:tasks updating task 21646 with: {"percent":91.47619047619045,"message":"Copying /media/WD4TB/CloudronBackup/snapshot/mail to /media/WD4TB/CloudronBackup/2025-11-10-230001-644/mail_v9.0.7"}
Nov 11 00:16:15 box:shell filesystem: cp -aRl /media/WD4TB/CloudronBackup/snapshot/mail /media/WD4TB/CloudronBackup/2025-11-10-230001-644/mail_v9.0.7
Nov 11 00:16:17 box:shell filesystem: cp -aRl /media/WD4TB/CloudronBackup/snapshot/mail /media/WD4TB/CloudronBackup/2025-11-10-230001-644/mail_v9.0.7 errored BoxError: cp exited with code 1 signal null
Nov 11 00:16:17 at ChildProcess.<anonymous> (/home/yellowtent/box/src/shell.js:82:23)
Nov 11 00:16:17 at ChildProcess.emit (node:events:519:28)
Nov 11 00:16:17 at maybeClose (node:internal/child_process:1101:16)
Nov 11 00:16:17 at ChildProcess._handle.onexit (node:internal/child_process:304:5) {
Nov 11 00:16:17 reason: 'Shell Error',
Nov 11 00:16:17 details: {},
Nov 11 00:16:17 stdout: <Buffer >,
Nov 11 00:16:17 stdoutLineCount: 0,
Nov 11 00:16:17 stderr: <Buffer 63 70 3a 20 63 61 6e 6e 6f 74 20 63 72 65 61 74 65 20 68 61 72 64 20 6c 69 6e 6b 20 27 2f 6d 65 64 69 61 2f 57 44 34 54 42 2f 43 6c 6f 75 64 72 6f 6e ... 210 more bytes>,
Nov 11 00:16:17 stderrLineCount: 1,
Nov 11 00:16:17 code: 1,
Nov 11 00:16:17 signal: null,
Nov 11 00:16:17 timedOut: false,
Nov 11 00:16:17 terminated: false
Nov 11 00:16:17 }
Nov 11 00:16:17 box:backuptask copy: copy to 2025-11-10-230001-644/mail_v9.0.7 errored. error: cp exited with code 1 signal null
Nov 11 00:16:17 box:tasks setCompleted - 21646: {"result":null,"error":{"message":"cp exited with code 1 signal null","reason":"External Error"},"percent":100}
Nov 11 00:16:17 box:tasks updating task 21646 with: {"completed":true,"result":null,"error":{"message":"cp exited with code 1 signal null","reason":"External Error"},"percent":100}
Nov 11 00:16:17 box:taskworker Task took 975.553 seconds
Nov 11 00:16:17 BoxError: cp exited with code 1 signal null
Nov 11 00:16:17 at copyInternal (/home/yellowtent/box/src/storage/filesystem.js:217:26)
Nov 11 00:16:17 at process.processTicksAndRejections (node:internal/process/task_queues:105:5)
Nov 11 00:16:17 at async Object.copyDir (/home/yellowtent/box/src/storage/filesystem.js:235:12)
Nov 11 00:16:17 at async Object.copy (/home/yellowtent/box/src/backupformat/rsync.js:311:5)

EDIT: The error persists even after increasing RAM from 4 to 16 GB.

-

That hex buffer translates to "cp: cannot create hard link '/media/WD4TB/Cloudron ...".
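Node.js prints at most 50 bytes of a Buffer by default, which is why the log ends in "... 210 more bytes". A quick way to read the visible part of such a dump is to decode the hex pairs as UTF-8; the minimal Python sketch below does exactly that. The helper name decode_buffer_dump is just for illustration, and the missing 210 bytes of the message cannot be recovered this way.

```python
# Hex bytes copied from the truncated "stderr: <Buffer ...>" entry in the log.
STDERR_HEX = (
    "63 70 3a 20 63 61 6e 6e 6f 74 20 63 72 65 61 74 65 20 68 61 72 64 20 "
    "6c 69 6e 6b 20 27 2f 6d 65 64 69 61 2f 57 44 34 54 42 2f 43 6c 6f 75 64 72 6f 6e"
)


def decode_buffer_dump(hex_dump: str) -> str:
    """Turn a space-separated hex dump (as Node.js prints a Buffer) into text."""
    # bytes.fromhex() ignores the whitespace between byte pairs.
    raw = bytes.fromhex(hex_dump)
    # errors="replace" avoids a crash if truncation split a multi-byte character.
    return raw.decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(decode_buffer_dump(STDERR_HEX))
```

Running the failing cp by hand on the server shows the full, untruncated message.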

@necrevistonnezr can you try running

cp -aRl /media/WD4TB/CloudronBackup/snapshot/mail /media/WD4TB/CloudronBackup/2025-11-10-230001-644/mail_v9.0.7

manually on the server? It's failing for some reason. cp has a habit of failing when files disappear (as is the case with the mail directory, where dovecot creates temp files). If that manual cp works, can you try to make a new backup one or two more times?
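To illustrate the failure mode: cp -aRl recreates the directory tree and hard-links each file into it. If a single link fails, for instance because dovecot removed a temp file after cp listed the directory, cp typically reports the error, carries on, and exits with code 1 at the end, which is enough to fail the backup task. The Python sketch below shows one possible alternative, a hard-link copy that skips files vanishing mid-walk. It is not Cloudron's implementation; link_tree is a hypothetical name, and unlike cp -a it does not preserve directory ownership, permissions, or timestamps.

```python
import os
from pathlib import Path


def link_tree(src: str, dst: str) -> tuple[int, int]:
    """Mirror src under dst, hard-linking files; skip entries that vanish mid-copy.

    Returns (linked, skipped). Directory metadata is NOT preserved, and files
    that already exist in dst raise FileExistsError.
    """
    src_root, dst_root = Path(src), Path(dst)
    linked = skipped = 0
    for dirpath, dirnames, filenames in os.walk(src_root):
        here = Path(dirpath)
        target_dir = dst_root / here.relative_to(src_root)
        # Directories cannot be hard-linked, so they are recreated instead.
        target_dir.mkdir(parents=True, exist_ok=True)
        # os.walk lists symlinks to directories in dirnames without descending
        # into them, so link those symlinks like regular files.
        names = filenames + [d for d in dirnames if (here / d).is_symlink()]
        for name in names:
            try:
                # follow_symlinks=False links a symlink itself, not its target.
                os.link(here / name, target_dir / name, follow_symlinks=False)
                linked += 1
            except FileNotFoundError:
                # Listed by os.walk but deleted before linking (e.g. a temp file).
                skipped += 1
    return linked, skipped
```

Silently skipping vanished files is a trade-off: it is harmless for short-lived temp files, but retrying the whole copy after removing the partial destination is the more conservative option.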

@girish what is the first column described by the file cabinet/box icon there? How is it sorting the list when clicked on? Should it even be there, or somewhere above the list?

@robi said in Cloudron 9.0 (beta) bug reports:

@girish what is the first column described by the file cabinet/box icon there? How is it sorting the list when clicked on? Should it even be there, or somewhere above the list?

This is the indicator of whether a backup is archived or not. Archived backups are kept regardless of the retention policy of a backup site. We removed quite a few tooltips to be a bit more mobile-friendly; I guess it isn't obvious enough, though.
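As a rough illustration of that rule, here is a Python sketch of a retention pass in which archived backups are never selected for deletion. The Backup record and the keep-latest-N policy are hypothetical simplifications, not Cloudron's actual retention model; in particular, this sketch assumes archived backups do not count toward the N that are kept.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Backup:
    # Hypothetical, simplified backup record.
    id: str
    created: datetime
    archived: bool = False


def backups_to_delete(backups: list[Backup], keep_latest: int) -> list[Backup]:
    """Return what a keep-latest-N policy would remove, never touching archived backups."""
    # Only non-archived backups take part in retention; archived ones are exempt.
    candidates = sorted(
        (b for b in backups if not b.archived),
        key=lambda b: b.created,
        reverse=True,  # newest first
    )
    return candidates[keep_latest:]
```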

-

@girish Thanks! After running

sudo -u yellowtent cp -aRlv /media/WD4TB/CloudronBackup/snapshot/mail /media/WD4TB/CloudronBackup/2025-11-10-230001-644/mail_v9.0.7

without error, backups went through without error, as well.

Is there something that can be done to prevent such an error?

-

@WiseMetalhead yes, but which service or setup specifically (or is this confidential)? How can we reproduce this?

@girish said in Cloudron 9.0 (beta) bug reports:

which service or set up specifically?

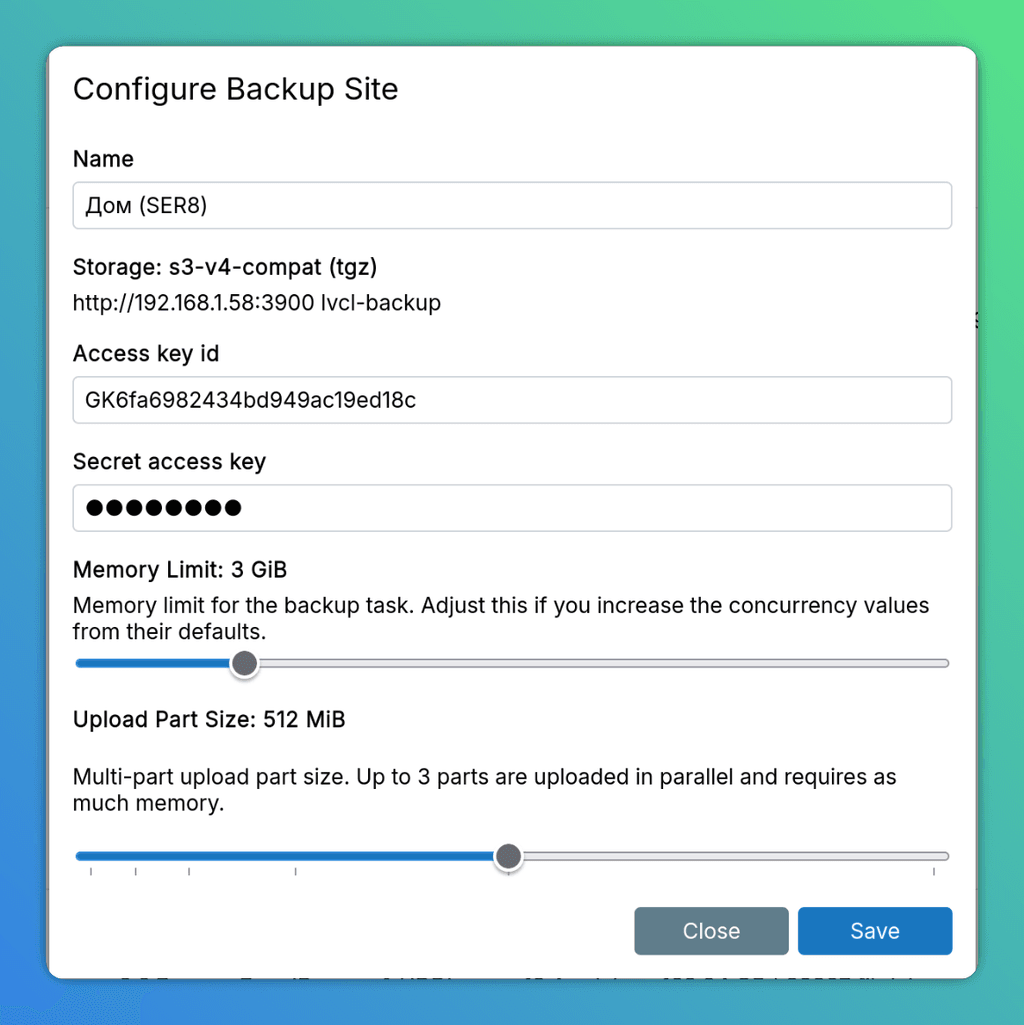

It seems the issue isn’t with my local provider after all. As an experiment, I set up Garage on a server in my local network and configured it as an s3-v4-compatible provider for backups.

I adjusted the Memory Limit and Upload Part Size settings, then started a backup task. In the logs, I once again noticed that each part was 10 MB instead of the 512 MB specified in the configuration. Additionally, I wasn’t able to set a custom S3 Region.

After saving the configuration, the region kept reverting to us-east-1, so I had to configure Garage to use that region identifier instead.

2025-11-11T14:57:13.843Z box:taskworker Starting task 2171. Logs are at /home/yellowtent/platformdata/logs/tasks/2171.log
2025-11-11T14:57:13.865Z box:taskworker Running task of type backup
2025-11-11T14:57:13.893Z box:tasks updating task 2171 with: {"percent":5.761904761904762,"message":"Backing up ***.***.ru (1/18). Waiting for lock"}
2025-11-11T14:57:13.899Z box:locks write: current locks: {"full_backup_task_a1cb8769-ccfb-4107-b574-ab4c7afef2b6":null,"app_backup_07fd2189-9378-4a35-b18d-5cef77461fb1":"2171"}
2025-11-11T14:57:13.899Z box:locks acquire: app_backup_07fd2189-9378-4a35-b18d-5cef77461fb1
2025-11-11T14:57:13.900Z box:tasks updating task 2171 with: {"percent":5.761904761904762,"message":"Snapshotting app ***.***.ru"}
2025-11-11T14:57:13.901Z box:services backupAddons
2025-11-11T14:57:13.901Z box:services backupAddons: backing up ["localstorage","postgresql","sendmail","oidc","redis"]
2025-11-11T14:57:13.902Z box:services Backing up postgresql
2025-11-11T14:57:14.139Z box:services pipeRequestToFile: connected with status code 200
2025-11-11T14:57:17.617Z box:services Backing up redis
2025-11-11T14:57:17.672Z box:services pipeRequestToFile: connected with status code 200
2025-11-11T14:57:17.682Z box:backuptask snapshotApp: ***.***.ru took 3.782 seconds
2025-11-11T14:57:17.697Z box:tasks updating task 2171 with: {"percent":5.761904761904762,"message":"Uploading app snapshot ***.***.ru"}
2025-11-11T14:57:17.697Z box:backuptask runBackupUpload: adjusting heap size to 2816M
2025-11-11T14:57:17.697Z box:shell backuptask: /usr/bin/sudo --non-interactive -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz a1cb8769-ccfb-4107-b574-ab4c7afef2b6 {"localRoot":"/home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1","layout":[{"localDir":"/mnt/md0/IApps/photos","remoteDir":"data"}]}
2025-11-11T14:57:18.216Z box:backupupload Backing up {"localRoot":"/home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1","layout":[{"localDir":"/mnt/md0/IApps/photos","remoteDir":"data"}]} to snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz
2025-11-11T14:57:18.218Z box:backuptask upload: path snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz site a1cb8769-ccfb-4107-b574-ab4c7afef2b6 dataLayout {"localRoot":"/home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1","layout":[{"localDir":"/mnt/md0/IApps/photos","remoteDir":"data"}]}
2025-11-11T14:57:18.357Z box:backuptask checkPreconditions: mount point status is {"state":"active"}
2025-11-11T14:57:18.357Z box:backuptask checkPreconditions: getting disk usage of /home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1
2025-11-11T14:57:18.357Z box:shell backuptask: du --dereference-args --summarize --block-size=1 --exclude=*.lock --exclude=dovecot.list.index.log.* /home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1
2025-11-11T14:57:18.365Z box:backuptask checkPreconditions: getting disk usage of /mnt/md0/IApps/photos
2025-11-11T14:57:18.365Z box:shell backuptask: du --dereference-args --summarize --block-size=1 --exclude=*.lock --exclude=dovecot.list.index.log.* /mnt/md0/IApps/photos
2025-11-11T14:57:19.311Z box:backuptask checkPreconditions: total required=125243674624 available=Infinity
2025-11-11T14:57:19.317Z box:backupformat/tgz upload: uploading to site a1cb8769-ccfb-4107-b574-ab4c7afef2b6 path snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz (encrypted: false) dataLayout {"localRoot":"/home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1","layout":[{"localDir":"/mnt/md0/IApps/photos","remoteDir":"data"}]}
2025-11-11T14:57:19.318Z box:tasks updating task 2171 with: {"percent":5.761904761904762,"message":"Uploading backup snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz (***.***.ru)"}
2025-11-11T14:57:19.323Z box:backupformat/tgz tarPack: processing /home/yellowtent/appsdata/07fd2189-9378-4a35-b18d-5cef77461fb1
2025-11-11T14:57:19.330Z box:backupformat/tgz addToPack: added ./config.json file
2025-11-11T14:57:19.385Z box:backupformat/tgz addToPack: added ./dump.rdb file
2025-11-11T14:57:19.396Z box:backupformat/tgz addToPack: added ./fsmetadata.json file
2025-11-11T14:57:20.874Z box:storage/s3 Upload progress: {"loaded":10485760,"part":1,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:22.826Z box:storage/s3 Upload progress: {"loaded":20971520,"part":2,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:24.909Z box:storage/s3 Upload progress: {"loaded":31457280,"part":3,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:26.524Z box:storage/s3 Upload progress: {"loaded":41943040,"part":4,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:27.785Z box:storage/s3 Upload progress: {"loaded":52428800,"part":5,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:29.329Z box:tasks updating task 2171 with: {"percent":5.761904761904762,"message":"Uploading backup 59M@6MBps (***.***.ru)"}
2025-11-11T14:57:29.652Z box:storage/s3 Upload progress: {"loaded":62914560,"part":6,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:31.626Z box:storage/s3 Upload progress: {"loaded":73400320,"part":7,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:33.614Z box:storage/s3 Upload progress: {"loaded":83886080,"part":8,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
2025-11-11T14:57:35.396Z box:storage/s3 Upload progress: {"loaded":94371840,"part":9,"Key":"snapshot/app_07fd2189-9378-4a35-b18d-5cef77461fb1.tar.gz","Bucket":"lvcl-backup"}
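The Upload progress lines make the part size easy to verify: "loaded" grows by 10485760 bytes per part, i.e. 10 MiB rather than 512 MiB. The part size matters because Amazon S3 allows at most 10,000 parts per multipart upload (Garage or other S3-compatible servers may use different limits). The Python sketch below does the arithmetic, assuming the compressed archive ends up roughly as large as the 125243674624 bytes reported by checkPreconditions, which is plausible for photos since they compress poorly. The helper names are hypothetical.

```python
MIB = 1024 * 1024
S3_MAX_PARTS = 10_000  # Amazon S3 limit per multipart upload; other servers may differ


def part_size_from_progress(loaded: list[int]) -> int:
    """Infer the part size from consecutive "loaded" values in the Upload progress lines."""
    steps = {b - a for a, b in zip([0] + loaded, loaded)}
    if len(steps) != 1:
        raise ValueError(f"parts are not all the same size: {sorted(steps)}")
    return steps.pop()


def parts_needed(total_bytes: int, part_size: int) -> int:
    """Parts needed for total_bytes; the last part may be smaller (ceiling division)."""
    return -(-total_bytes // part_size)


if __name__ == "__main__":
    # "loaded" values from the Upload progress lines in the log.
    loaded = [10485760, 20971520, 31457280, 41943040, 52428800,
              62914560, 73400320, 83886080, 94371840]
    observed = part_size_from_progress(loaded)
    total = 125243674624  # "total required" from checkPreconditions
    for size in (observed, 512 * MIB):
        n = parts_needed(total, size)
        print(f"{size // MIB} MiB parts: {n} parts, within {S3_MAX_PARTS}: {n <= S3_MAX_PARTS}")
```

With the logged numbers this gives 11945 parts at 10 MiB, above the S3 limit, versus 234 parts at the configured 512 MiB. If the 10 MiB part size really is in effect, an upload of this size could fail near the end on a strictly S3-compliant server.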
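Presumably the region has to match because of how S3 request signing works: with AWS Signature Version 4, the region is part of the credential scope and is mixed into the signing key, so a server that checks the scope against its own configured region will reject requests signed for a different one. The Python sketch below shows the standard SigV4 signing-key derivation with the hmac module. It is a generic illustration of the algorithm, not Cloudron or Garage code; the secret and the region name "garage" are placeholders.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date_stamp: str, region: str, service: str = "s3") -> bytes:
    """Derive the SigV4 signing key (date_stamp is YYYYMMDD).

    Chain: HMAC("AWS4" + secret, date) -> region -> service -> "aws4_request".
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def credential_scope(date_stamp: str, region: str, service: str = "s3") -> str:
    """Scope string that appears in the Credential field of the Authorization header."""
    return f"{date_stamp}/{region}/{service}/aws4_request"


if __name__ == "__main__":
    secret = "EXAMPLE-SECRET"  # placeholder, not a real key
    for region in ("us-east-1", "garage"):  # "garage": hypothetical custom region
        key = sigv4_signing_key(secret, "20251111", region)
        print(credential_scope("20251111", region), key.hex()[:16])
```

Because each region yields a different signing key, a client that always signs for us-east-1 only works against a server that also uses us-east-1, which matches the workaround described above.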

-

Mostly stable, yes. We are still busy fixing small things, and the next patch release is just around the corner. After that, we will likely start slowly rolling it out as stable via automatic updates.

-

@firmansi said in What's coming in Cloudron 9:

@nebulon Please don't forget bugs in reset password for LDAP sign in too yah

Not sure I get what you mean by that. Is there a bug somewhere, and if so, do you have more information on it?

@nebulon I think @firmansi is referring to this https://forum.cloudron.io/topic/14359/cloudron-9.0-beta-bug-reports/87?_=1762939432122 (not that that is particularly clear to me)

-

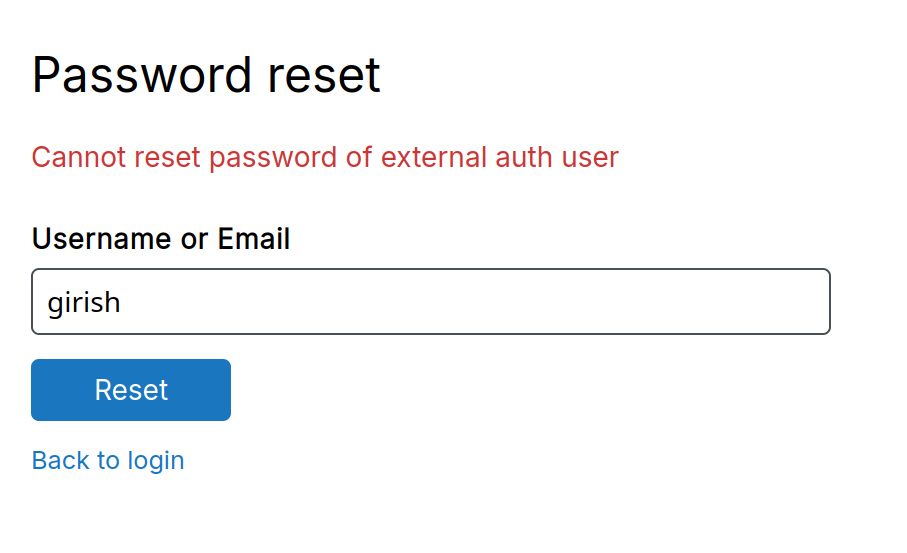

@jdaviescoates thanks for the citation; yes, that's what I'm referring to. I’ve already upgraded to the latest Cloudron version, 9.0.7. Cloudron A pulls its user data from Cloudron B via LDAP. I tested the password reset function on Cloudron A by clicking “Reset Password.” The password reset window opened as expected. However, after I entered the email and clicked the reset button, I never received the password reset email, even though the interface displayed a success message stating that the email had been sent.

Previously, in Cloudron version 8, when I clicked the “Reset Password” button for a user authenticated via LDAP, the system correctly responded with an error message indicating that the user’s authentication source is external (e.g., “The authentication provider for this user is not managed by this Cloudron instance”). Now, in version 9.0.7, that check seems to be missing or bypassed: instead of rejecting the request early, it proceeds and falsely reports success, even though no email is actually sent.

-

Ah, thanks for the clarification. We haven't looked into this yet. Given that LDAP has no such built-in feature, we would probably need some extra API to trigger a password reset on the directory provider (which would of course only work if the provider is also a Cloudron). For that case, though, I think the better approach would be to make this work with OpenID instead of LDAP, but no code has been written for that either.

-

Possibly a bug, or maybe more of a specification/documentation issue.

I updated one of our servers from v8.3.2 to v9.0.7 yesterday. The overnight S3 backup to a local device failed with the following error:

Backup endpoint is not active: Error listing objects. code: undefined message: self-signed certificate HTTP: undefined

Up until now, the server was configured to back up to an s3-v4 compatible storage location using https, with self-signed certificates allowed. While troubleshooting, I changed the S3 endpoint from https to http and updated the Cloudron config accordingly, which resolved the issue and allowed the backup to proceed.

To note:

- The (local) address and port of the S3 endpoint have not changed.
- I regenerated the self-signed certificate and made another attempt, but had no luck there either.

I am not clear whether https is part of the S3 specification or not. However, https with S3 worked fine with 8.3.2, so this is either:

- a bug in v9.0.7, or
- a change/normalization within Cloudron, for which a more meaningful error message (or form validation) and/or documentation would be helpful.

Hopefully this helps.
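For anyone debugging the same symptom: "self-signed certificate" is the error text OpenSSL-based TLS clients (Node.js included) typically report when the server's certificate is not signed by any trusted CA. Instead of falling back to plain http, a client can either trust that specific certificate or turn verification off. The Python sketch below shows both options with the standard ssl module. It only illustrates the client-side TLS setting and makes no claim about how Cloudron's S3 client applies its "allow self-signed certificate" option; make_tls_context is a hypothetical helper.

```python
import ssl
from typing import Optional


def make_tls_context(ca_file: Optional[str] = None, allow_self_signed: bool = False) -> ssl.SSLContext:
    """Build a client TLS context for a local S3-compatible endpoint.

    ca_file: PEM file with the endpoint's self-signed certificate. Trusting it
        explicitly keeps verification on (the safer option); its subjectAltName
        must still match the host name in the endpoint URL.
    allow_self_signed: disable verification entirely (accepts any certificate).
    """
    # With cafile=None this loads the system's default CA certificates.
    ctx = ssl.create_default_context(cafile=ca_file)
    if allow_self_signed:
        # check_hostname must be turned off before verify_mode can be CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx
```

Passing such a context to an HTTPS client, e.g. urllib.request.urlopen(url, context=ctx), against the endpoint is a quick way to check whether the certificate itself, rather than Cloudron, is the problem.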

-

@firmansi I have fixed this now in https://git.cloudron.io/platform/box/-/commit/29e2be47d0da86e77105db47d6c73b601c0fc472 . I think what you meant is shown below, correct?

-

@nebulon I’ve actually suggested this before: what if the Cloudron team added a field where Cloudron admins like me could include a link or instructions telling users how to reset their LDAP password wherever their user data is stored? I assume not everyone using Cloudron uses Cloudron itself as their LDAP server; some may use a separate external LDAP server. While adding an extra API to trigger password changes or resets on the external LDAP server is indeed a good idea, it might take some time to implement. So I was just thinking of a more practical, immediate solution: letting each Cloudron admin take responsibility for providing their own reset link or instructions.
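The two behaviours discussed in this exchange, the v8-style early rejection for externally managed users plus the suggested admin-provided reset link, could look roughly like the Python sketch below. Everything in it is hypothetical: the User fields, the external_reset_url setting, and the ResetResult type are illustrations, not Cloudron's API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class User:
    # Hypothetical user record: source is "local" or e.g. "ldap" for directory users.
    email: str
    source: str


@dataclass
class ResetResult:
    sent: bool
    message: str


def request_password_reset(
    user: User,
    send_email: Callable[[str], None],
    external_reset_url: Optional[str] = None,  # hypothetical admin-configured link
) -> ResetResult:
    """Send a reset email only for locally managed users.

    Externally managed users are refused early instead of receiving a success
    message for an email that is never sent.
    """
    if user.source != "local":
        hint = f" Reset it at {external_reset_url}." if external_reset_url else ""
        return ResetResult(False, "This user's password is managed by an external directory." + hint)
    send_email(user.email)
    return ResetResult(True, "Password reset email sent.")
```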
