Seems to be a superset problems by your expertise so ? I thought it would be related to the cloudron Security manager handling in addition to superset api...

I'll look into it when i'm in front of the problem i guess, maybe trying to override the security part of the api like a comment in the issues

Charles_Nkdl

Posts

-

Superset API access through Cloudron Oauth -

Superset API access through Cloudron OauthHello Cloudron Team and users,

Sorry to bother you again since last time. I come here for help and guidance.

I'm currently trying to fiddle something with Superset and embedding through the Superset API in local, which is ok.

Now that i want to try it out on my Cloudron Superset Instance i'm quite stuck, as i can't get a hold of my JWT token through the Superset API :

https://superset.apache.org/docs/api/ => /api/v1/security/login

Which is i think related to the fact cloudron introduced a custom security manager in the superset_config.py filefrom cloudron_security_manager import CloudronSecurityManager CUSTOM_SECURITY_MANAGER = CloudronSecurityManager AUTH_TYPE = AUTH_OAUTH AUTH_ROLE_ADMIN = 'Admin' AUTH_ROLE_PUBLIC = 'Public' AUTH_USER_REGISTRATION = True AUTH_USER_REGISTRATION_ROLE = "Admin" OAUTH_PROVIDERS = [ { 'name':'Cloudron', 'token_key':'access_token', 'icon':'fa-address-card', 'remote_app': { 'client_id': os.environ["CLOUDRON_OIDC_CLIENT_ID"], 'client_secret': os.environ["CLOUDRON_OIDC_CLIENT_SECRET"], 'client_kwargs': {'scope': 'openid email profile'}, 'server_metadata_url': f"{CLOUDRON_OIDC_ISSUER}/.well-known/openid-configuration", 'api_base_url': f"{CLOUDRON_OIDC_ISSUER}/", 'access_token_url': f"{CLOUDRON_OIDC_TOKEN_ENDPOINT}", 'authorize_url': f"{CLOUDRON_OIDC_AUTH_ENDPOINT}" } } ]Now more security is ok, but i don't know how i can access the JWT token going through cloudron security first, if someone have experience about this, i'm quite sure it's supposed to be this way but i may be wrong.

Thank you -

Metadata DB, Caching Redis and Celery ExecutorWorks fine thank you for your work !

-

Metadata DB, Caching Redis and Celery Executorgosu cloudron bash -c "source /app/pkg/env.sh && celery --app=superset.tasks.celery_app:app status" Loaded your LOCAL configuration at [/app/pkg/superset_config.py] 2024-07-04 14:43:03,737:INFO:superset.utils.logging_configurator:logging was configured successfully 2024-07-04 14:43:03,739:INFO:root:Configured event logger of type <class 'superset.utils.log.DBEventLogger'> /app/code/superset/flask_limiter/extension.py:333: UserWarning: Using the in-memory storage for tracking rate limits as no storage was explicitly specified. This is not recommended for production use. See: https://flask-limiter.readthedocs.io#configuring-a-storage-backend for documentation about configuring the storage backend. warnings.warn( -> celery@d4fc71d0-b0cc-4acc-a9f8-ec7dd74b5096: OK 1 node online.It does give me some feedback

But the redis cache results are different as if not each data (dashboards , query, filters ....) was correctly cached in the results

I won't lie i'm not good enough to decipher these redis logs and what they corresponds to exactly, but the loading speed between the reload of dashboards lacks something in the config file

Sadly, i don't have the expertise to pinpoint which components is lacking something as i don't have much experience in celery nor redis... And superset documentation is quite obscure at some points,

Sorry

-

Metadata DB, Caching Redis and Celery ExecutorHi Girish !

I don't see any error, there is just no logs present in the redis cache from superset as if it wasnt connected to cache the results from dashboards and datasets, i think some flags are missing in the super config

Redis cache 0 handles the pidbox and queue of celery workers so not a lot of things,

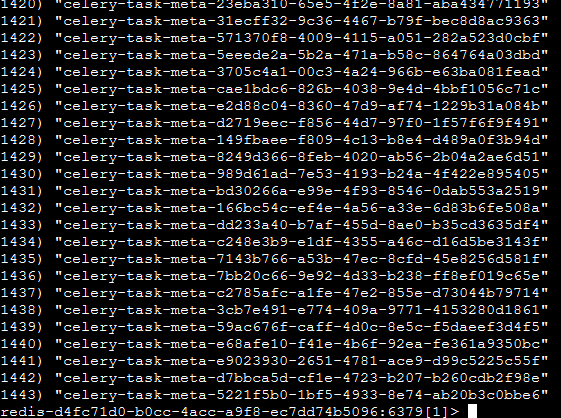

cache 1 is this :

There is only celery task metadata, but sadly i can't connect to celery logs directly as it give me an error :celery -A superset status Traceback (most recent call last): File "/app/code/superset/kombu/connection.py", line 472, in _reraise_as_library_errors yield File "/app/code/superset/kombu/connection.py", line 459, in _ensure_connection return retry_over_time( File "/app/code/superset/kombu/utils/functional.py", line 318, in retry_over_time return fun(*args, **kwargs) File "/app/code/superset/kombu/connection.py", line 934, in _connection_factory self._connection = self._establish_connection() File "/app/code/superset/kombu/connection.py", line 860, in _establish_connection conn = self.transport.establish_connection() File "/app/code/superset/kombu/transport/pyamqp.py", line 203, in establish_connection conn.connect() File "/app/code/superset/amqp/connection.py", line 324, in connect self.transport.connect() File "/app/code/superset/amqp/transport.py", line 129, in connect self._connect(self.host, self.port, self.connect_timeout) File "/app/code/superset/amqp/transport.py", line 184, in _connect self.sock.connect(sa) ConnectionRefusedError: [Errno 111] Connection refused The above exception was the direct cause of the following exception: Traceback (most recent call last): File "/app/code/superset/bin/celery", line 8, in <module> sys.exit(main()) File "/app/code/superset/celery/__main__.py", line 15, in main sys.exit(_main()) File "/app/code/superset/celery/bin/celery.py", line 236, in main return celery(auto_envvar_prefix="CELERY") File "/app/code/superset/click/core.py", line 1157, in __call__ return self.main(*args, **kwargs) File "/app/code/superset/click/core.py", line 1078, in main rv = self.invoke(ctx) File "/app/code/superset/click/core.py", line 1688, in invoke return _process_result(sub_ctx.command.invoke(sub_ctx)) File "/app/code/superset/click/core.py", line 1434, in invoke return ctx.invoke(self.callback, **ctx.params) File "/app/code/superset/click/core.py", line 783, in invoke return __callback(*args, **kwargs) File "/app/code/superset/click/decorators.py", line 33, in new_func return f(get_current_context(), *args, **kwargs) File "/app/code/superset/celery/bin/base.py", line 135, in caller return f(ctx, *args, **kwargs) File "/app/code/superset/celery/bin/control.py", line 133, in status callback=callback).ping() File "/app/code/superset/celery/app/control.py", line 294, in ping return self._request('ping') File "/app/code/superset/celery/app/control.py", line 106, in _request return self._prepare(self.app.control.broadcast( File "/app/code/superset/celery/app/control.py", line 776, in broadcast return self.mailbox(conn)._broadcast( File "/app/code/superset/kombu/pidbox.py", line 330, in _broadcast chan = channel or self.connection.default_channel File "/app/code/superset/kombu/connection.py", line 953, in default_channel self._ensure_connection(**conn_opts) File "/app/code/superset/kombu/connection.py", line 458, in _ensure_connection with ctx(): File "/usr/lib/python3.10/contextlib.py", line 153, in __exit__ self.gen.throw(typ, value, traceback) File "/app/code/superset/kombu/connection.py", line 476, in _reraise_as_library_errors raise ConnectionError(str(exc)) from exc kombu.exceptions.OperationalError: [Errno 111] Connection refusedI tried supercharging another dashboard following the docs and trying to adapt to your env: https://superset.apache.org/docs/configuration/cache

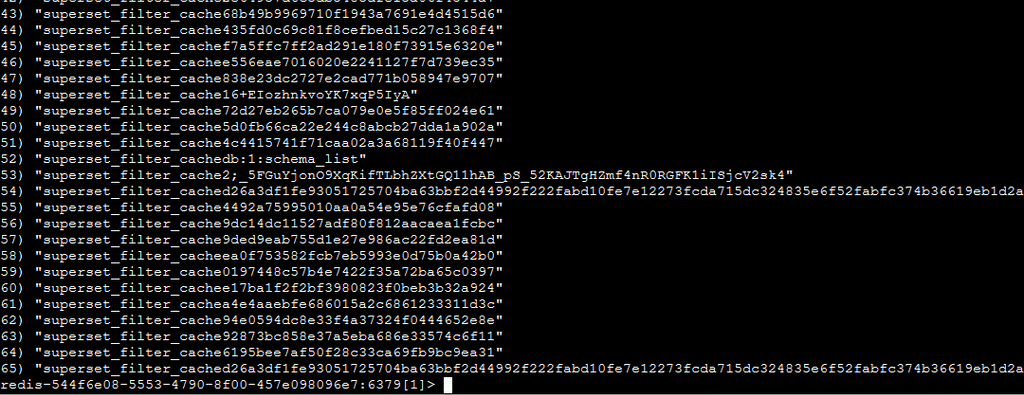

Here is the result :

So i can manage to get cache on my viz, i think it just lacked some flags

here is my config_file :import os import sys REDIS_HOST = os.environ["CLOUDRON_REDIS_HOST"] REDIS_PORT = os.environ["CLOUDRON_REDIS_PORT"] REDIS_CELERY_DB = "0" REDIS_RESULTS_DB = "1" FILTER_STATE_CACHE_CONFIG = { 'CACHE_TYPE': 'RedisCache', 'CACHE_DEFAULT_TIMEOUT': 86400, 'CACHE_KEY_PREFIX': 'superset_filter_cache', 'CACHE_REDIS_URL': f"redis://{REDIS_HOST}:{REDIS_PORT}/{REDIS_RESULTS_DB}" } EXPLORE_FORM_DATA_CACHE_CONFIG = { 'CACHE_TYPE': 'RedisCache', 'CACHE_DEFAULT_TIMEOUT': 86400, 'CACHE_KEY_PREFIX': 'superset_filter_cache', 'CACHE_REDIS_URL': f"redis://{REDIS_HOST}:{REDIS_PORT}/{REDIS_RESULTS_DB}" } CACHE_CONFIG = { 'CACHE_TYPE': 'RedisCache', 'CACHE_DEFAULT_TIMEOUT': 86400, 'CACHE_KEY_PREFIX': 'superset_filter_cache', 'CACHE_REDIS_URL': f"redis://{REDIS_HOST}:{REDIS_PORT}/{REDIS_RESULTS_DB}" } DATA_CACHE_CONFIG = { 'CACHE_TYPE': 'RedisCache', 'CACHE_DEFAULT_TIMEOUT': 86400, 'CACHE_KEY_PREFIX': 'superset_filter_cache', 'CACHE_REDIS_URL': f"redis://{REDIS_HOST}:{REDIS_PORT}/{REDIS_RESULTS_DB}" } class CeleryConfig(object): broker_url = f"redis://{REDIS_HOST}:{REDIS_PORT}/{REDIS_CELERY_DB}" imports = ( "superset.sql_lab", "superset.tasks.scheduler", ) result_backend = f"redis://{REDIS_HOST}:{REDIS_PORT}/{REDIS_CELERY_DB}" worker_prefetch_multiplier = 10 task_acks_late = True task_annotations = { "sql_lab.get_sql_results": { "rate_limit": "100/s", }, } CELERY_CONFIG = CeleryConfigI don't know if you think it is ok...

But sadly i couldn't get celery access to logs or see the tasks results to follow activity -

Metadata DB, Caching Redis and Celery ExecutorHello Cloudron,

I'm currently using Superset for dashboarding from various datasources. Some of these queries takes a lot of times and ressources actually and i wanted to optimize this.

Some easy solutions to optimize these times in Superset is modifying multiples things in the config, which i want to do as i did in my local environment but sadly, i'm not too acquainted with Cloudron environnement so i wanted to ask for your feedback.

1 / Changing the Sqlite Metadatabase of Superset

When you initialize Superset, it does create a Sqlite DB but it does limit its potential. Superset handles and tested a postgreSQL or MySQL metadata backend. I checked the dockerfile from your git superset and the superset_config and a postgre metadb was already setup in the config file

2/ Same thing with Redis and Celery, the config is present in the git of cloudron implementation (https://git.cloudron.io/cloudron/superset-app/-/blob/main/superset_config.py?ref_type=heads)

Sadly, even if redis and celery is configured in the super superset_config in the git, it doesnt work as checking logs of celery and cache of redis using the connection port

I tried fidgetting it with my local config but i do miss something to make it work, do you think you could help me on this point ???

Thank you !