Nextcloud Text and Image Generation using LLM Failures

-

Hi everyone, I have found the fix but couldn't apply it to the Cloudron app. Can you please assist me with this one?

This is the exact link for the fix I need to apply: https://help.nextcloud.com/t/ai-assistant-failing-resolved/172705

Just like he did i ran :

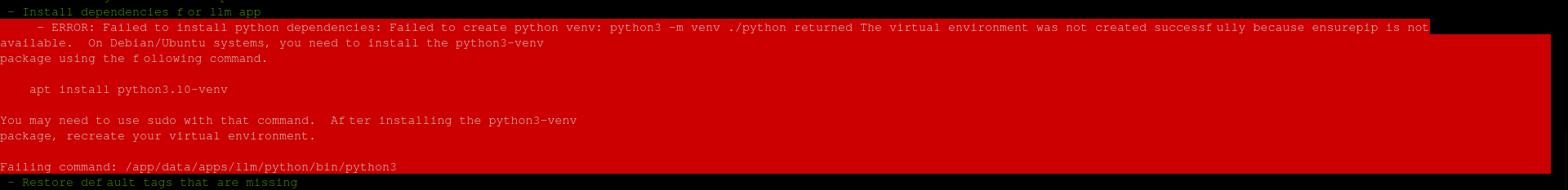

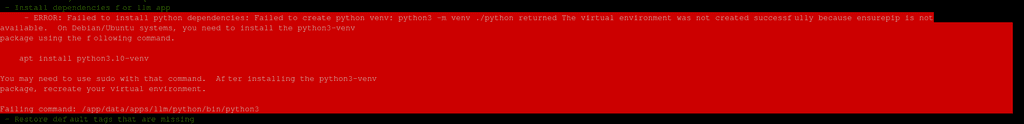

occ maintenance:repairand got the same error:

I tried executing the command:

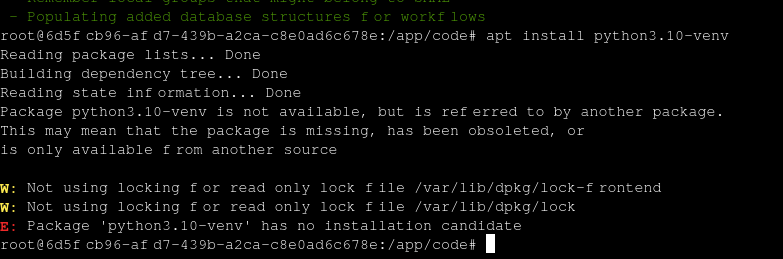

apt install python3.10-venvbut got this error:

If anyone can guide me on how to install the python3.10-venv package within the Nextcloud Cloudron application/container, I would really appreciate it!
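For anyone hitting the same error, a quick way to confirm what is actually missing is to ask Python itself. The sketch below is only a diagnostic, not a fix. It assumes the failure comes from the `ensurepip` module, which Debian and Ubuntu ship in the separate python3.X-venv package. The function name `venv_support` is made up for illustration.

```python
import importlib.util
import sys


def venv_support() -> dict:
    """Report whether the stdlib modules used by `python3 -m venv` are importable.

    Assumption: on Debian/Ubuntu images, `ensurepip` is the part that is
    missing when the python3.X-venv package has not been installed.
    """
    return {
        name: importlib.util.find_spec(name) is not None
        for name in ("venv", "ensurepip")
    }


if __name__ == "__main__":
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    for name, present in venv_support().items():
        print(f"{name}: {'available' if present else 'missing'}")
```

Running it with the container's own `python3` (for example from the app's web terminal) should show whether `ensurepip` is the missing piece before spending time on anything else.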

-

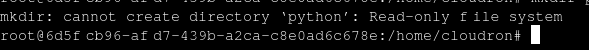

- Do you use the Cloudron package of Nextcloud? It's Docker-based and read-only (except /app/data), so you can't just install packages into it.

- Nextcloud Assistant is very far from ready for daily use by self-hosters. It is super (and I mean super) slow (see the 2-minute answering time for the surface temperature of the sun in your link), as an LLM is currently not something you want to run on your standard server.

- Installing the Assistant has many hurdles (https://forum.cloudron.io/post/87427), as usual with Nextcloud's dreaded "app" system.
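To see the read-only limitation for yourself, a small probe like the one below can help. It is a minimal sketch: it simply tries to create a temporary file in each directory. Only /app/data comes from the description above; the other paths and the helper name `is_writable` are illustrative assumptions.

```python
import os
import tempfile


def is_writable(directory: str) -> bool:
    """Return True if a temporary file can actually be created in `directory`."""
    try:
        # The file is deleted automatically when the context manager exits.
        with tempfile.TemporaryFile(dir=directory):
            return True
    except OSError:
        # Covers read-only filesystems, missing directories and permission errors.
        return False


if __name__ == "__main__":
    # /app/data is the writable location mentioned above; the rest are
    # example paths chosen for illustration only.
    for path in ("/app/data", "/usr/lib", "/tmp"):
        state = "writable" if is_writable(path) else "read-only or missing"
        print(f"{path}: {state}")
```

Anything a package manager needs to write outside the writable locations will fail in the same way, which is why `apt install` does not work inside the app container.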

-

- Yes, I just found out.

- It's not just for self-hosting; I need it for prototyping for a prospective client. I saw the results, so I wouldn't mind upgrading beyond "your standard server". We don't need Context Chat, just the LLMs. I want to do exactly what he did here: https://help.nextcloud.com/t/ai-assistant-failing-resolved/172705

So I would appreciate it if the answer could be one of:

- The fix stated above is not possible in the current setup, or

- We should wait for Cloudron to include python3.10-venv within the Docker container?

-

AFAIK it’s not possible at the moment (unless building a custom Cloudron package)

-

bvxzee marked this topic as a question

-

bvxzee has marked this topic as solved

-

Appreciate it! For the meantime, I won't be able to present the AI capabilities of Nextcloud. On another note, when will the Nextcloud Hub 8 package be available within Cloudron?

-

@bvxzee Usually not before the first bugfix version has been released by Nextcloud (i.e. 29.0.1)