"Unlock instructions" email due to brute force attack on gitlab users

-

I found this thread which implies that it is a known issue in gitlab: https://gitlab.com/gitlab-org/gitlab/-/issues/297473

-

I found the logs - they were inside the container at `/home/git/gitlab/log`.

Running `grep -i "failed"` revealed that the attack started in the early morning of 20th July. Somehow the list of usernames was known (probably related to the issue linked in my previous post), and sign-in requests are being made from random IP addresses. The first 5 entries are shown below (this pattern has continued since):

    ./application_json.log:{"severity":"INFO","time":"2025-07-20T03:17:13.349Z","correlation_id":"xxx","meta.caller_id":"SessionsController#create","meta.feature_category":"system_access","meta.organization_id":1,"meta.remote_ip":"156.146.59.50","meta.client_id":"ip/156.146.59.50","message":"Failed Login: username=xxx1 ip=156.146.59.50"}
    ./application_json.log:{"severity":"INFO","time":"2025-07-20T03:18:20.163Z","correlation_id":"xxx","meta.caller_id":"SessionsController#create","meta.feature_category":"system_access","meta.organization_id":1,"meta.remote_ip":"193.176.84.35","meta.client_id":"ip/193.176.84.35","message":"Failed Login: username=xxx2 ip=193.176.84.35"}
    ./application_json.log:{"severity":"INFO","time":"2025-07-20T03:18:39.636Z","correlation_id":"xxx","meta.caller_id":"SessionsController#create","meta.feature_category":"system_access","meta.organization_id":1,"meta.remote_ip":"20.205.138.223","meta.client_id":"ip/20.205.138.223","message":"Failed Login: username=xxxx3 ip=20.205.138.223"}
    ./application_json.log:{"severity":"INFO","time":"2025-07-20T03:19:04.255Z","correlation_id":"xxx","meta.caller_id":"SessionsController#create","meta.feature_category":"system_access","meta.organization_id":1,"meta.remote_ip":"98.152.200.61","meta.client_id":"ip/98.152.200.61","message":"Failed Login: username=xxx4 ip=98.152.200.61"}
    ./application_json.log:{"severity":"INFO","time":"2025-07-20T03:21:03.314Z","correlation_id":"xxx","meta.caller_id":"SessionsController#create","meta.feature_category":"system_access","meta.organization_id":1,"meta.remote_ip":"200.34.32.138","meta.client_id":"ip/200.34.32.138","message":"Failed Login: username=xxx5 ip=200.34.32.138"}

-

So it appears that unauthenticated users (or attackers) can brute-force usernames because the corresponding API endpoints do not require authentication: https://gitlab.com/gitlab-org/gitlab/-/issues/297473

Furthermore, the GitLab team does not plan to fix the issue:

- https://gitlab.com/gitlab-org/gitlab/-/issues/16179

- https://gitlab.com/gitlab-org/gitlab/-/issues/336601

To mitigate the risk from such attacks in the future we took the following measures:

Actions taken on the server:

- Installed Fail2ban

Actions taken on the platform (cloudron):

- Removed several platform apps that were not being used

- Restricted visibility of (and access to) the gitlab instance to just those who need it

- Removed several users

Actions taken on the gitlab instance (cloudron container):

- Enabled 2FA (we had it natively on cloudron / OIDC but not for guest users)

- Removed OAuth apps that are no longer used

- Enabled "Deactivate dormant users after a period of inactivity" (90 days)

- Enabled "Enable unauthenticated API request rate limit" (1 per second)

- Enabled "Enable unauthenticated web request rate limit" (1 per second)

- Enabled "Enable authenticated API request rate limit" (2 per second)

- Enabled "Enable authenticated web request rate limit" (2 per second)

- Deleted 3 inactive runners and removed one active but no longer needed runner

- Removed dormant users
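For anyone who would rather script these settings than click through the admin UI, here is a minimal sketch. It assumes the GitLab application settings API (`PUT /api/v4/application/settings`) and attribute names as I understand them from the GitLab docs; please verify them against your GitLab version before relying on this. `build_settings_payload`, `build_settings_request`, the base URL and the token are all hypothetical names for illustration, and "N per second" is expressed as N requests per 1-second period.

```python
import json
import urllib.request


def build_settings_payload():
    """Settings mirroring the list above (values are the ones quoted in the post).

    Attribute names are assumptions taken from the GitLab application
    settings API and should be checked against your GitLab version.
    """
    return {
        "deactivate_dormant_users": True,
        "deactivate_dormant_users_period": 90,  # days
        "throttle_unauthenticated_api_enabled": True,
        "throttle_unauthenticated_api_requests_per_period": 1,
        "throttle_unauthenticated_api_period_in_seconds": 1,
        "throttle_unauthenticated_web_enabled": True,
        "throttle_unauthenticated_web_requests_per_period": 1,
        "throttle_unauthenticated_web_period_in_seconds": 1,
        "throttle_authenticated_api_enabled": True,
        "throttle_authenticated_api_requests_per_period": 2,
        "throttle_authenticated_api_period_in_seconds": 1,
    }


def build_settings_request(base_url, admin_token, payload):
    """Prepare (but do not send) a PUT to the application settings endpoint."""
    url = base_url.rstrip("/") + "/api/v4/application/settings"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={
            "PRIVATE-TOKEN": admin_token,  # needs an administrator token
            "Content-Type": "application/json",
        },
    )
```

The request is only built here, not sent; passing it to `urllib.request.urlopen` would apply the settings, so it is worth trying on a test instance first.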

Suggestions (to the packaging team) for improvement:

- A hardened Gitlab configuration "out of the box" in cloudron

- Updates to the documentation (eg that the logs location is under `/home/git/gitlab/log`). Maybe even putting that location in the file explorer, to make log capture / analysis easier.

- Options within the cloudron platform itself to more aggressively reject IP addresses. It was noted that some attacker IPs were re-used after some time.
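On the log analysis point, a minimal sketch of how the "Failed Login" entries could be summarised per IP and per username (which also makes re-used attacker IPs easy to spot). It assumes the `message` format shown in the log excerpt earlier in this thread; `summarise_failed_logins` is a hypothetical helper name, not something shipped with GitLab or Cloudron.

```python
import json
import re
from collections import Counter

# Matches the "message" field format seen in the application_json.log excerpt.
FAILED_LOGIN = re.compile(r"Failed Login: username=(?P<user>\S+) ip=(?P<ip>\S+)")


def summarise_failed_logins(lines):
    """Count failed logins per IP and per username from log lines."""
    by_ip, by_user = Counter(), Counter()
    for line in lines:
        # grep output prefixes entries with "./application_json.log:",
        # so skip ahead to where the JSON object starts.
        start = line.find("{")
        if start == -1:
            continue
        try:
            entry = json.loads(line[start:])
        except json.JSONDecodeError:
            continue  # ignore truncated or non-JSON lines
        if not isinstance(entry, dict):
            continue
        match = FAILED_LOGIN.search(str(entry.get("message", "")))
        if match:
            by_ip[match["ip"]] += 1
            by_user[match["user"]] += 1
    return by_ip, by_user
```

Usage would be along the lines of opening `/home/git/gitlab/log/application_json.log` inside the container, passing the file object to `summarise_failed_logins`, and printing `by_ip.most_common(10)`.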

-

@allanbowe said in "Unlock instructions" email due to brute force attack on gitlab users:

Suggestions (to the packaging team) for improvement:

- A hardened Gitlab configuration "out of the box" in cloudron

- Updates to the documentation (eg that the logs location is under `/home/git/gitlab/log`). Maybe even putting that location in the file explorer, to make log capture / analysis easier.

- Options within the cloudron platform itself to more aggressively reject IP addresses. It was noted that some attacker IPs were re-used after some time.

Agreed.

I'd add: copy the salient bits of the above post (or at least link to it) into the docs too.

-

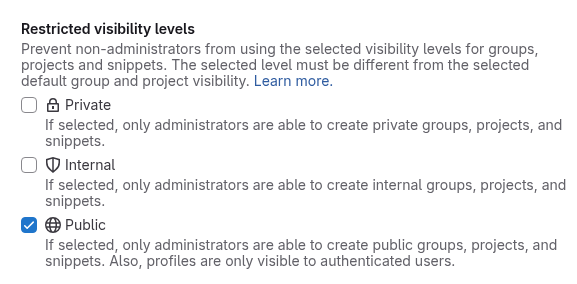

Just discovered a setting at the following path: /admin/application_settings/general#js-visibility-settings

Section: Restricted visibility levels

Setting: Public - If selected, only administrators are able to create public groups, projects, and snippets. Also, profiles are only visible to authenticated users.

After checking this and testing with curl, the `/api/v4/users/XXX` endpoints now consistently return a 404, whether authenticated or not!
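For repeating that check later (for example after a GitLab update), a minimal Python sketch of the same probe. `user_endpoint_url` and `probe_status` are hypothetical helper names, the base URL and user id are placeholders, and sending the token via a `PRIVATE-TOKEN` header is my assumption about how the authenticated test would be done.

```python
import urllib.error
import urllib.request


def user_endpoint_url(base_url, user_id):
    """Build the /api/v4/users/<id> URL for a given instance."""
    return f"{base_url.rstrip('/')}/api/v4/users/{user_id}"


def probe_status(url, token=None, timeout=10):
    """Return the HTTP status code of a GET, with or without a token."""
    headers = {"PRIVATE-TOKEN": token} if token else {}
    request = urllib.request.Request(url, headers=headers)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx/5xx responses; the code is what we want here.
        return error.code
```

Calling `probe_status(user_endpoint_url("https://gitlab.example.com", 1))` once without and once with a token should, per the observation above, give 404 in both cases when the "Public" visibility level is restricted.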

I suspect this is the fix, but will wait and see if there are any more "Unlock Instructions" emails tonight / tomorrow.

Weirdly, after checking this checkbox and hitting save, it gets unchecked immediately after - but refreshing the page shows that it was indeed checked.

Another side note - we saw in our email logs that we were getting a large number of requests from a subdomain of https://academyforinternetresearch.org/

So it seems that this could be an issue on their radar.

-

So it turns out this does NOT stop the "Unlock Instructions" email being sent. They even continue after forcing 2FA for all users.

What is more, we even get the emails for internal staff, who don't even have a password - because they authenticate using OIDC in cloudron.

Any suggestions?

-

Hello @allanbowe

I would assume this is a temporary automated attack.

Maybe it would be a good idea to only allow access to Cloudron or GitLab from a VPN for some days.

This way the bots will notice that action was taken and will either pause for a while or stop completely.

-

Hello @allanbowe

Not by default from Cloudron. (Maybe in the future)

I would advise temporarily editing the GitLab NGINX file to only allow certain IP addresses.

This manual change will get reset with every Cloudron / Server / App restart.

So it is really temporary.

Example for APP ID `682ca768-93e5-4bcb-a760-677daa9a8e3b`:

Go into the application NGINX config folder:

    cd /home/yellowtent/platformdata/nginx/applications/682ca768-93e5-4bcb-a760-677daa9a8e3b

Edit the `sub.domain.tld.conf` file, in this case `dokuwiki.cloudron.dev`:

    nano dokuwiki.cloudron.dev.conf

Inside this section, add:

    # https server
    server {
        [...]
        # allow localhost
        allow 127.0.0.1;
        # allow cloudron proxy
        allow 172.18.0.1;
        # allow this servers public ipv4
        allow REDACTED-IPV4;
        # allow this servers public ipv6
        allow REDACTED-IPV6;
        # Allow some other specific IPv4 e.g VPN
        allow VPN-IP;
        # deny all other
        deny all;
        [...]
    }

Reload the NGINX service:

    systemctl reload nginx.service

Any IP that is not explicitly allowed will then get a `403 Forbidden`.

Keep in mind, every Cloudron / Server / App Restart will reset this change!
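Since the change has to be re-applied after every restart, a small helper for regenerating the `allow` / `deny` lines may save some typing. This is only a sketch: `nginx_allowlist` is a hypothetical name, it just renders text to paste into the `server { ... }` block, and it uses Python's `ipaddress` module to reject mistyped addresses before they reach the NGINX config.

```python
import ipaddress


def nginx_allowlist(addresses, indent="    "):
    """Render an allow line per address, followed by a final deny all."""
    lines = []
    for raw in addresses:
        # ip_network accepts single IPv4/IPv6 addresses and CIDR ranges,
        # and raises ValueError on anything malformed.
        network = ipaddress.ip_network(raw, strict=False)
        if network.num_addresses == 1:
            # Single hosts are written without the /32 or /128 suffix.
            target = str(network.network_address)
        else:
            target = str(network)
        lines.append(f"{indent}allow {target};")
    lines.append(f"{indent}deny all;")
    return "\n".join(lines)
```

For example, `print(nginx_allowlist(["127.0.0.1", "172.18.0.1", "10.8.0.0/24"]))` prints three `allow` lines and a closing `deny all;`, where `10.8.0.0/24` stands in for a VPN range.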