@girish Thank you very much for the info. I didn’t manage to reply earlier, but I saved the backup configuration again and the morning backup completed successfully. I’ll also try upgrading to 9.0.15.

archos

Posts

-

After auto update to 9.0.14: backup errors -

After auto update to 9.0.14: backup errors

Hi, our server was updated to version 9.0.14 on Friday, and yesterday the backup failed with this error:

box:storage/filesystem SSH remote rm failed, trying sshfs rm Dec 15 05:06:26 box:shell filesystem: rm -rf /mnt/managedbackups/0a6effb5-6b5d-48f6-9e8d-2a0bd2f3e610/oscloud/snapshot/app_283b2bb3-155e-4d7b-9f9b-410e24888efb/data/data/tymoty/files_trashbin/versions/Shared (zkopírovat).d1763126576 Dec 15 05:06:27 box:shell filesystem: ssh -o "StrictHostKeyChecking no" -i /tmp/identity_file-mnt-managedbackups-0a6effb5-6b5d-48f6-9e8d-2a0bd2f3e610 -p 23 u430632@u430632.your-storagebox.de rm -rf oscloud/snapshot/app_283b2bb3-155e-4d7b-9f9b-410e24888efb/data/data/prvosienka/files_encryption/keys/files_trashbin/files/25-11-10 10-52-51 4362.jpg.d1763121179 errored BoxError: ssh exited with code 255 signal null Dec 15 05:06:27 at ChildProcess.<anonymous> (/home/yellowtent/box/src/shell.js:82:23) Dec 15 05:06:27 at ChildProcess.emit (node:events:519:28) Dec 15 05:06:27 at maybeClose (node:internal/child_process:1101:16) Dec 15 05:06:27 at Socket.<anonymous> (node:internal/child_process:456:11) Dec 15 05:06:27 at Socket.emit (node:events:519:28) Dec 15 05:06:27 at Pipe.<anonymous> (node:net:346:12) { Dec 15 05:06:27 reason: 'Shell Error', Dec 15 05:06:27 details: {}, Dec 15 05:06:27 stdout: <Buffer >, Dec 15 05:06:27 stdoutLineCount: 0, Dec 15 05:06:27 stderr: <Buffer 6e 6f 20 73 75 63 68 20 69 64 65 6e 74 69 74 79 3a 20 2f 74 6d 70 2f 69 64 65 6e 74 69 74 79 5f 66 69 6c 65 2d 6d 6e 74 2d 6d 61 6e 61 67 65 64 62 61 ... 224 more bytes>, Dec 15 05:06:27 stderrLineCount: 4, Dec 15 05:06:27 code: 255, Dec 15 05:06:27 signal: null, Dec 15 05:06:27 timedOut: false, Dec 15 05:06:27 terminated: false Dec 15 05:06:27 } Dec 15 05:06:27 box:backupformat/rsync sync: done processing deletes. 
error: BoxError: SSH connection error: ssh exited with code 255 signal null at Object.removeDir (/home/yellowtent/box/src/storage/filesystem.js:286:47) at async processSyncerChange (/home/yellowtent/box/src/backupformat/rsync.js:110:13) at async /home/yellowtent/box/src/backupformat/rsync.js:128:92 { reason: 'External Error', details: {} } Dec 15 05:06:27 box:backupupload upload completed. error: BoxError: SSH connection error: ssh exited with code 255 signal null at Object.removeDir (/home/yellowtent/box/src/storage/filesystem.js:286:47) at async processSyncerChange (/home/yellowtent/box/src/backupformat/rsync.js:110:13) at async /home/yellowtent/box/src/backupformat/rsync.js:128:92 { reason: 'External Error', details: {} } Dec 15 05:06:27 box:backuptask runBackupUpload: result - {"errorMessage":"SSH connection error: ssh exited with code 255 signal null"} Dec 15 05:06:27 box:shell backuptask: /usr/bin/sudo --non-interactive -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_283b2bb3-155e-4d7b-9f9b-410e24888efb 0a6effb5-6b5d-48f6-9e8d-2a0bd2f3e610 {"localRoot":"/home/yellowtent/appsdata/283b2bb3-155e-4d7b-9f9b-410e24888efb","layout":[]} errored BoxError: /usr/bin/sudo exited with code 50 signal null Dec 15 05:06:27 at ChildProcess.<anonymous> (/home/yellowtent/box/src/shell.js:82:23) Dec 15 05:06:27 at ChildProcess.emit (node:events:519:28) Dec 15 05:06:27 at maybeClose (node:internal/child_process:1101:16) Dec 15 05:06:27 at Socket.<anonymous> (node:internal/child_process:456:11) Dec 15 05:06:27 at Socket.emit (node:events:519:28) Dec 15 05:06:27 at Pipe.<anonymous> (node:net:346:12) { Dec 15 05:06:27 reason: 'Shell Error', Dec 15 05:06:27 details: {}, Dec 15 05:06:27 stdout: '', Dec 15 05:06:27 stdoutLineCount: 0, Dec 15 05:06:27 stderr: '', Dec 15 05:06:27 stderrLineCount: 0, Dec 15 05:06:27 code: 50, Dec 15 05:06:27 signal: null, Dec 15 05:06:27 timedOut: false, Dec 15 05:06:27 terminated: false Dec 15 05:06:27 } Dec 15 05:06:27 
box:backuptask fullBackup: app next.oscloud.cz backup finished. Took 51.625 seconds Dec 15 05:06:27 box:locks write: current locks: {"full_backup_task_0a6effb5-6b5d-48f6-9e8d-2a0bd2f3e610":null} Dec 15 05:06:27 box:locks release: app_backup_283b2bb3-155e-4d7b-9f9b-410e24888efb Dec 15 05:06:27 box:tasks setCompleted - 9744: {"result":null,"error":{"message":"SSH connection error: ssh exited with code 255 signal null","reason":"External Error"},"percent":100} Dec 15 05:06:27 box:tasks updating task 9744 with: {"completed":true,"result":null,"error":{"message":"SSH connection error: ssh exited with code 255 signal null","reason":"External Error"},"percent":100} Dec 15 05:06:27 box:taskworker Task took 127.881 seconds Dec 15 05:06:27 BoxError: SSH connection error: ssh exited with code 255 signal null Dec 15 05:06:27 at runBackupUpload (/home/yellowtent/box/src/backuptask.js:204:15) Dec 15 05:06:27 at async uploadAppSnapshot (/home/yellowtent/box/src/backuptask.js:370:34) Dec 15 05:06:27 at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:393:26) Dec 15 05:06:27 Exiting with code 0

Quick datapoint: our backup to Hetzner Storage Box (SSH port 23) fails in the delete phase with "no such identity: /tmp/identity_file-mnt-managedbackups-..." and "ssh exited with code 255". This started after the upgrade to 9.0.14.
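The stderr field in this log is a Node.js Buffer printed as hex bytes, which is where the "no such identity" message comes from. A minimal Python sketch for turning such a dump back into text; the hex below is copied from the log, and because Node.js prints only the first 50 bytes of a Buffer by default, the remaining "224 more bytes" cannot be recovered from it:

```python
# Decode the hex dump that Node.js printed for the ssh process's stderr.
# STDERR_HEX is copied from the "stderr: <Buffer ...>" field of the log;
# it holds only the 50 bytes Node.js shows, not the full message.
STDERR_HEX = (
    "6e 6f 20 73 75 63 68 20 69 64 65 6e 74 69 74 79 3a 20 2f 74 6d 70 2f "
    "69 64 65 6e 74 69 74 79 5f 66 69 6c 65 2d 6d 6e 74 2d 6d 61 6e 61 67 "
    "65 64 62 61"
)


def decode_buffer_dump(hex_dump: str) -> str:
    """Convert a space-separated hex dump into text (bytes.fromhex skips whitespace)."""
    raw = bytes.fromhex(hex_dump)
    # errors="replace" keeps going if the dump was cut in the middle of a UTF-8 sequence.
    return raw.decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(decode_buffer_dump(STDERR_HEX))
    # prints: no such identity: /tmp/identity_file-mnt-managedba
```

The decoded text suggests ssh could not find the temporary identity file passed with -i; the other stderr lines are cut off, so the exact reason for exit code 255 is not visible in this excerpt.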

-

[GUIDE] Move PeerTube video storage to Hetzner S3

@stoccafisso DigitalOcean Spaces should work fine, since it's a standard S3-compatible storage service.

The setup is basically the same as in the guide.

Just use your DO endpoint and access keys. -

Old system backups are not listed

@joseph said in Old system backups are not listed:

wonder if the backupcleaner removed the entries from the database by mistake. Have you tried searching for the old backup in the eventlog? Maybe it hits one of the backupcleaner tasks. Just a thought.

Thanks, Joseph. I checked the Event Log, but I don’t see any entries from backupcleaner or anything indicating that older backups were removed or pruned.

All older backup folders are still present on the storage, but Cloudron simply doesn't list the system backups created before the upgrade to 9.x.

To clarify:

- Older app backups are visible under each app,

- but older system backups are missing entirely,

- and restoring an older app backup fails with the fsmetadata.json ENOENT error.

So it doesn't look like backupcleaner deleted the files – it just seems Cloudron no longer recognizes the old backups after the upgrade.

Let me know if there is anything else I should check.

-

Old system backups are not listed

@ekevu123 said in Old system backups are not listed:

Did this occur while upgrading from Cloudron 8 into the 9 series?

Yes, it happened when upgrading from Cloudron 8 to the 9.x series.

Everything was working fine on Cloudron 8. -

Old system backups are not listed

Hi, just a quick follow-up.

Even after updating to 9.0.13, the issue is still not resolved.

The older backups are visible under each app, but the apps cannot be restored from them.

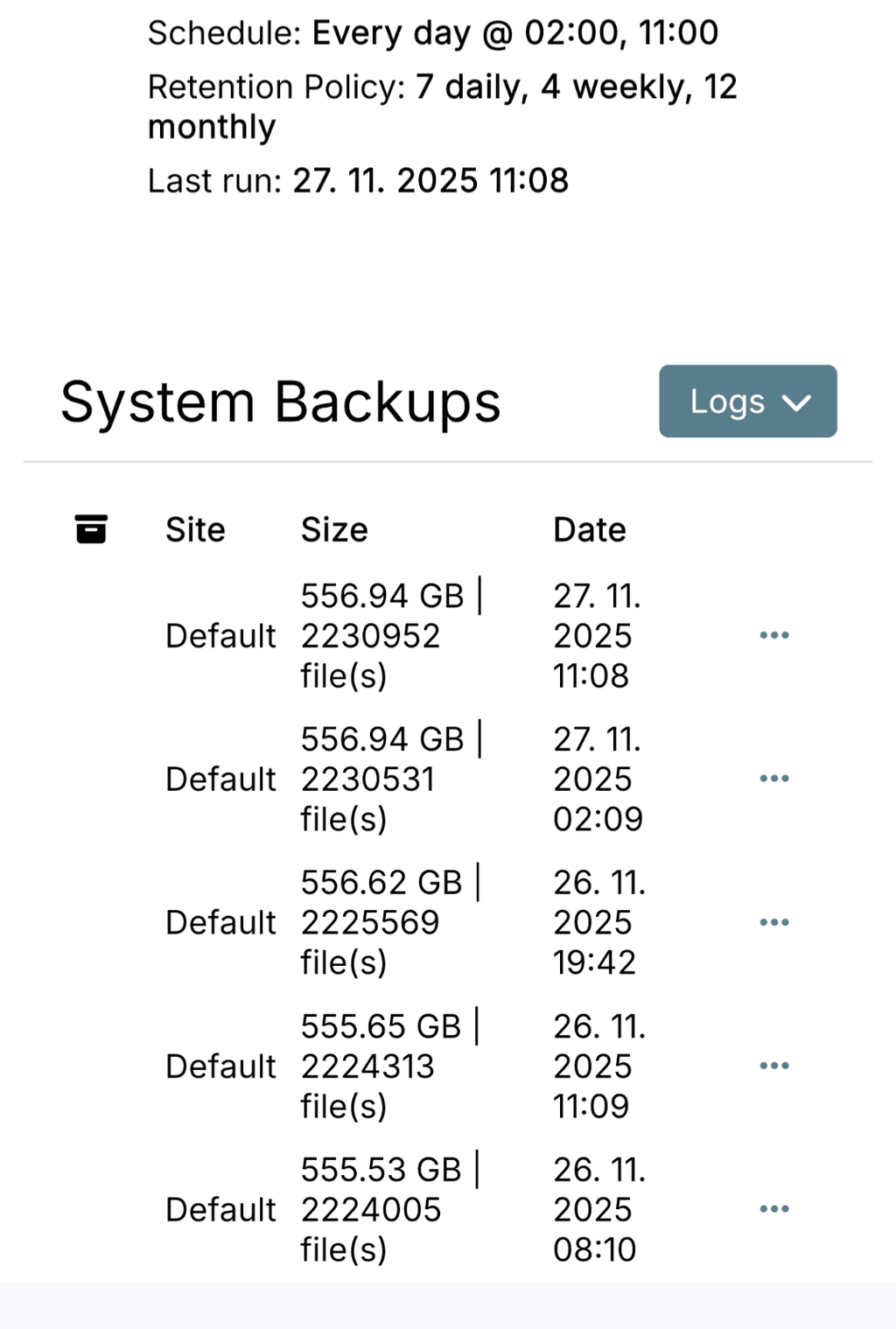

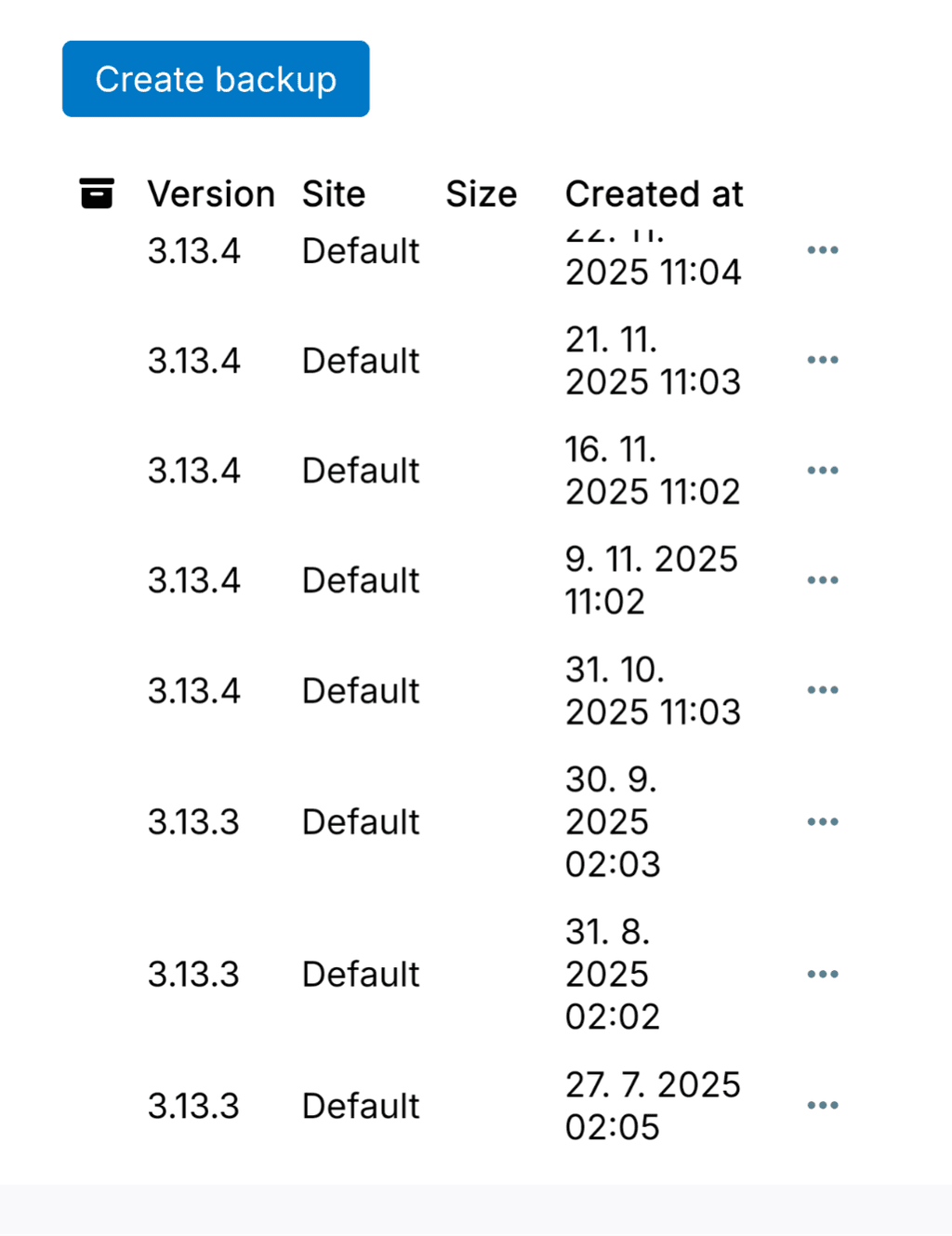

And in Backups / Sites, I only see the backups created after updating to version 9.0.12; all older system backups are still missing. The backup folders do physically exist on the storage.

-

Old system backups are not listed

Thanks, but my situation is a bit different.

I keep 7 daily, 4 weekly and 12 monthly backups.

The older system backups from before the Cloudron update do not appear in Backup Sites at all, even though:

- the backup folders are physically present on the storage (I checked),

- the older app backups do show up under each app,

- but the system backups are completely missing from Backup Sites.

So the backups exist on the storage, but Cloudron does not list the older system backups anymore.

-

Old system backups are not listed

I have the same issue after updating Cloudron.

I had to re-attach the backup storage, and new backups are working, but:

- the older backups are physically present on the Storage Box,

- I can see them in each app’s backup list,

- but they do not appear under Backup Sites,

- and trying to restore them results in this error: "An error occurred during the restore operation: External Error: Error loading fsmetadata.json:ENOENT: no such file or directory, open '/home/yellowtent/appsdata/bebc848c-f9a9-41f9-aeb1-b1d8bf200d73/fsmetadata.json'" -

Pixelfed – is it possible to add a custom logo & favicon on Cloudron?

Thanks for the response.

Pixelfed does have a Custom CSS option in the admin panel, but even with that it's currently not possible to override the logo.

I'm also noticing other things not working. I have filtered registrations enabled, but I'm not receiving any emails about new signup requests. And when I try to edit /site/about, the changes don't show up at all.

So it seems this is just the current state of Pixelfed.

Hopefully the developers will fix these issues in future releases. -

Pixelfed – is it possible to add a custom logo & favicon on Cloudron?

Hi,

I’m hosting Pixelfed on Cloudron and I’d like to add a custom logo and favicon.

I’m not sure if this is even possible without modifying the app code, and I don’t want to break anything or make unsupported changes.

Is there an official or recommended way on Cloudron to brand Pixelfed (logo, favicon, small UI changes)?

Or is this considered a code modification that Cloudron doesn’t allow?

Any advice would be greatly appreciated.

Thank you! -

Gitea update notification missing

@joseph Ah, got it.

I’m not on Cloudron 9.0.0 yet, so that’s why the update didn’t show up.

Looks like I panicked for nothing again.

Thanks! -

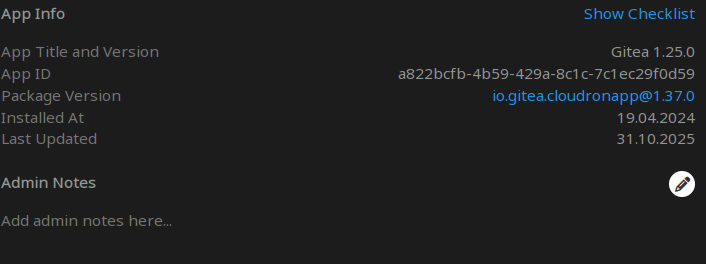

Gitea update notification missing

Hi,

on November 5th the Gitea app was updated to version 1.25.1, but on my Cloudron instance I still don’t see any update notification.

I’m not sure if I’ve missed something again (like I did earlier with Tiny Tiny RSS), or if there’s an issue with the update checks this time. Has anyone else run into the same problem?

Thanks a lot for any info.

-

Tiny Tiny RSS – updates not showing up

@james Yeah, that makes sense. I must have completely missed that note during the manual update back then.

Thanks for double-checking and explaining — and good idea to make such notices more visible in the future. -

Tiny Tiny RSS – updates not showing up

@james Yeah, that would definitely help.

A short note in the changelog or a small notice in the app UI would make it much clearer for users — though I admit I should also keep a closer eye on release notes myself.

Thanks for taking it seriously! -

Tiny Tiny RSS – updates not showing up

@james Thanks a lot for the quick reply; I totally missed that note about the migration.

Sorry for the unnecessary question! -

Tiny Tiny RSS – updates not showing up

Hi,

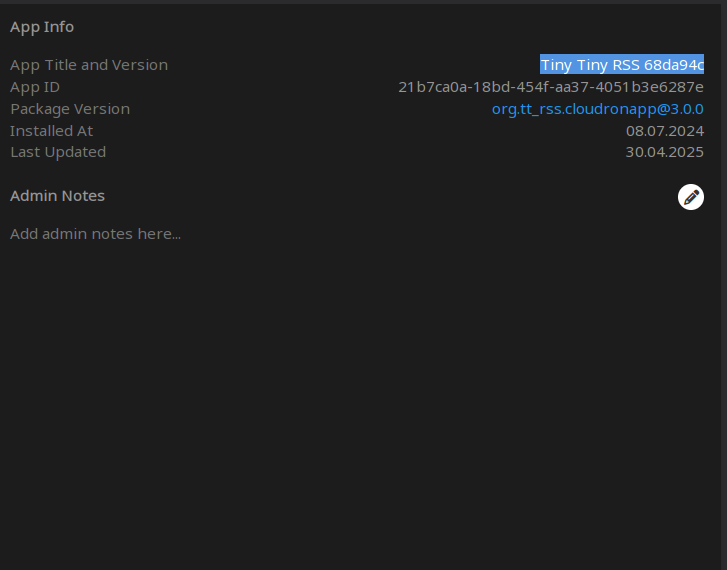

I’ve noticed that Tiny Tiny RSS on my Cloudron doesn’t show any available updates.

I also tried checking for updates manually, but nothing appears.

I’m currently on version 68da94c, and according to the app info, the last update was on April 30, 2025.

However, I’m quite sure I updated the app about a month ago. Other apps show updates normally.

Thanks a lot for any information.

-

Freescout – SQLSTATE[HY000] [2002] Connection refused

Hello @james

Thanks for the suggestion!

I checked the MySQL service: it has more than enough memory (20 GiB total, only about 40% used).

So it doesn’t look like a memory issue.

There are no errors or warnings in the MySQL logs so far, so I think it should be fine.

I’ll keep an eye on it for a few days just to be sure.

Anyway, thank you very much for your help and your time!

-

Freescout – SQLSTATE[HY000] [2002] Connection refused

@james said in Freescout – SQLSTATE[HY000] [2002] Connection refused:

Hello @archos

this error states Connection refused for the PDOConnection.php which would mean, when it tries to connect to the database it can't.

Please open your web terminal of the Freescout app and press the MYSQL button on the top and press enter.

You should then be connected to the database.

Please report if there is anything going wrong when doing so.

Thank you very much for the quick reply!

I tried running the MySQL command in the terminal, and everything seems to be working fine:

root@70233011-1d9f-4b7f-abe2-fdf99594b834:/app/code# mysql --user=${CLOUDRON_MYSQL_USERNAME} --password=${CLOUDRON_MYSQL_PASSWORD} --host=${CLOUDRON_MYSQL_HOST} ${CLOUDRON_MYSQL_DATABASE} mysql: [Warning] Using a password on the command line interface can be insecure. Reading table information for completion of table and column names You can turn off this feature to get a quicker startup with -A Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 6823475 Server version: 8.0.41-0ubuntu0.24.04.1 (Ubuntu) Copyright (c) 2000, 2025, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql>

Maybe something was just stuck after an update.

Thanks again for your help!
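Since "[2002] Connection refused" means the TCP connection to the database server was rejected, while the manual mysql session above worked, the refusals may be intermittent. A minimal Python sketch that checks whether the MySQL port accepts a TCP connection at a given moment, e.g. to run now and then while watching the logs. It assumes a Python 3 interpreter is available wherever you run it; CLOUDRON_MYSQL_HOST comes from the mysql command above, while CLOUDRON_MYSQL_PORT and the 3306 fallback are assumptions:

```python
import os
import socket


def mysql_port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # Only opens and closes a TCP connection; no MySQL handshake or login is done.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # ConnectionRefusedError (the "[2002] Connection refused" case), timeouts
        # and DNS resolution errors are all subclasses of OSError.
        return False


if __name__ == "__main__":
    # Assumption: these variables are set inside the app container; adjust if not.
    host = os.environ.get("CLOUDRON_MYSQL_HOST", "localhost")
    port = int(os.environ.get("CLOUDRON_MYSQL_PORT", "3306"))
    print(f"{host}:{port} reachable: {mysql_port_reachable(host, port)}")
```

A successful probe only shows that the port was open at that moment; it does not rule out the refusals coming back later.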

-

Freescout – SQLSTATE[HY000] [2002] Connection refused

Hello,

in the last few days I’ve been seeing the following error repeatedly in my Freescout app logs:

E[HY000] [2002] Connection refused at /app/code/overrides/doctrine/dbal/lib/Doctrine/DBAL/Driver/PDOConnection.php:38) [stacktrace] #0 /app/code/overrides/doctrine/dbal/lib/Doctrine/DBAL/Driver/PDOConnection.php(42): Doctrine\\DBAL\\Driver\\PDO\\Exception::new() #1 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connectors/Connector.php(64): Doctrine\\DBAL\\Driver\\PDOConnection->__construct() #2 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connectors/Connector.php(97): Illuminate\\Database\\Connectors\\Connector->createPdoConnection() #3 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connectors/Connector.php(46): Illuminate\\Database\\Connectors\\Connector->tryAgainIfCausedByLostConnection() #4 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connectors/MySqlConnector.php(24): Illuminate\\Database\\Connectors\\Connector->createConnection() #5 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connectors/ConnectionFactory.php(183): Illuminate\\Database\\Connectors\\MySqlConnector->connect() #6 [internal function]: Illuminate\\Database\\Connectors\\ConnectionFactory->Illuminate\\Database\\Connectors\\{closure}() #7 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connection.php(915): call_user_func() #8 /app/code/vendor/laravel/framework/src/Illuminate/Database/DatabaseManager.php(248): Illuminate\\Database\\Connection->getPdo() #9 /app/code/vendor/laravel/framework/src/Illuminate/Database/DatabaseManager.php(234): Illuminate\\Database\\DatabaseManager->refreshPdoConnections() #10 /app/code/vendor/laravel/framework/src/Illuminate/Database/DatabaseManager.php(168): Illuminate\\Database\\DatabaseManager->reconnect() #11 [internal function]: Illuminate\\Database\\DatabaseManager->Illuminate\\Database\\{closure}() #12 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connection.php(753): call_user_func() 
#13 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connection.php(767): Illuminate\\Database\\Connection->reconnect() #14 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connection.php(616): Illuminate\\Database\\Connection->reconnectIfMissingConnection() #15 /app/code/vendor/laravel/framework/src/Illuminate/Database/Connection.php(317): Illuminate\\Database\\Connection->run() #16 /app/code/overrides/laravel/framework/src/Illuminate/Database/Query/Builder.php(1718): Illuminate\\Database\\Connection->select() #17 /app/code/overrides/laravel/framework/src/Illuminate/Database/Query/Builder.php(1704): Illuminate\\Database\\Query\\Builder->runSelect() #18 /app/code/overrides/laravel/framework/src/Illuminate/Database/Eloquent/Builder.php(483): Illuminate\\Database\\Query\\Builder->get() #19 /app/code/overrides/laravel/framework/src/Illuminate/Database/Eloquent/Builder.php(467): Illuminate\\Database\\Eloquent\\Builder->getModels() #20 /app/code/overrides/laravel/framework/src/Illuminate/Database/Eloquent/Model.php(360): Illuminate\\Database\\Eloquent\\Builder->get() #21 /app/code/app/Module.php(29): Illuminate\\Database\\Eloquent\\Model::all() #22 /app/code/app/Module.php(144): App\\Module::getCached() #23 /app/code/app/Module.php(40): App\\Module::getByAlias() #24 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(231): App\\Module::isActive() #25 /app/code/overrides/laravel/framework/src/Illuminate/Cache/Repository.php(327): Nwidart\\Modules\\Repository->Nwidart\\Modules\\{closure}() #26 /app/code/vendor/laravel/framework/src/Illuminate/Cache/CacheManager.php(304): Illuminate\\Cache\\Repository->remember() #27 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(224): Illuminate\\Cache\\CacheManager->__call() #28 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(181): Nwidart\\Modules\\Repository->getCached() #29 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(263): 
Nwidart\\Modules\\Repository->all() #30 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(291): Nwidart\\Modules\\Repository->getByStatus() #31 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(333): Nwidart\\Modules\\Repository->enabled() #32 /app/code/overrides/nwidart/laravel-modules/src/Repository.php(365): Nwidart\\Modules\\Repository->getOrdered() #33 /app/code/vendor/nwidart/laravel-modules/src/Providers/BootstrapServiceProvider.php(22): Nwidart\\Modules\\Repository->register() #34 /app/code/overrides/laravel/framework/src/Illuminate/Foundation/Application.php(590): Nwidart\\Modules\\Providers\\BootstrapServiceProvider->register() #35 /app/code/vendor/nwidart/laravel-modules/src/ModulesServiceProvider.php(38): Illuminate\\Foundation\\Application->register() #36 /app/code/vendor/nwidart/laravel-modules/src/LaravelModulesServiceProvider.php(15): Nwidart\\Modules\\ModulesServiceProvider->registerModules() #37 [internal function]: Nwidart\\Modules\\LaravelModulesServiceProvider->boot() #38 /app/code/overrides/laravel/framework/src/Illuminate/Container/BoundMethod.php(28): call_user_func_array() #39 /app/code/overrides/laravel/framework/src/Illuminate/Container/BoundMethod.php(87): Illuminate\\Container\\BoundMethod::Illuminate\\Container\\{closure}() #40 /app/code/overrides/laravel/framework/src/Illuminate/Container/BoundMethod.php(27): Illuminate\\Container\\BoundMethod::callBoundMethod() #41 /app/code/overrides/laravel/framework/src/Illuminate/Container/Container.php(549): Illuminate\\Container\\BoundMethod::call() #42 /app/code/overrides/laravel/framework/src/Illuminate/Foundation/Application.php(796): Illuminate\\Container\\Container->call() #43 /app/code/overrides/laravel/framework/src/Illuminate/Foundation/Application.php(779): Illuminate\\Foundation\\Application->bootProvider() #44 [internal function]: Illuminate\\Foundation\\Application->Illuminate\\Foundation\\{closure}() #45 
/app/code/overrides/laravel/framework/src/Illuminate/Foundation/Application.php(778): array_walk() #46 /app/code/vendor/laravel/framework/src/Illuminate/Foundation/Bootstrap/BootProviders.php(17): Illuminate\\Foundation\\Application->boot() #47 /app/code/overrides/laravel/framework/src/Illuminate/Foundation/Application.php(213): Illuminate\\Foundation\\Bootstrap\\BootProviders->bootstrap() #48 /app/code/vendor/laravel/framework/src/Illuminate/Foundation/Console/Kernel.php(296): Illuminate\\Foundation\\Application->bootstrapWith() #49 /app/code/vendor/laravel/framework/src/Illuminate/Foundation/Console/Kernel.php(119): Illuminate\\Foundation\\Console\\Kernel->bootstrap() #50 /app/code/artisan(60): Illuminate\\Foundation\\Console\\Kernel->handle() #51 {main} "}

Do you know what might be causing this issue?

Thank you very much for any information.

-

[GUIDE] Move PeerTube video storage to Hetzner S3

@girish said in [GUIDE] Move PeerTube video storage to Hetzner S3:

@archos should I use the email on this forum?

Yes, please use the same email I use for this forum.