Mention and live install+demo of ESS during a FOSDEM talk: https://fosdem.org/2026/schedule/event/BRRQYU-sustainable-matrix-at-element/

mononym

Posts

-

Element Server Suite -

Element Server Suite

That's great! Thank you very much. I would like to see if my wish from https://forum.cloudron.io/post/119520 could already be implemented in the package? No pressure.

-

OIDC customization settings not persistent

Yes, this makes perfect sense to me. That's also why I only want to change two specific parameters (`localpart_template` and `display_name_template`) and not the whole OIDC setup, which should be immutable, so to speak. In my case, I also wanted to ensure that `email_template` is kept in sync with the Cloudron account email, only giving freedom to set a desired handle and display name (although that one can be modified afterwards by the user).

P.S.: I have not yet tested whether other settings are persistent, as I intend to set a retention policy for Synapse as well.

-

OIDC customization settings not persistent

@james Yes, that would be perfect. The upper part of the `start.sh` script checks whether `homeserver.yaml` exists, but the OIDC settings are not in that block.

-

Remotely (remote desktop management)

@marcusquinn Sorry, I forgot to delete my post when I came across https://forum.cloudron.io/post/119487

-

OIDC customization settings not persistent

I guess it has something to do with `app/pkg/start.sh`:

    # oidc
    if [[ -n "${CLOUDRON_OIDC_ISSUER:-}" ]]; then
        echo " ==> Configuring OIDC auth"
        yq eval -i ".oidc_providers[0].idp_id=\"cloudron\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].idp_name=\"${CLOUDRON_OIDC_PROVIDER_NAME:-Cloudron}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].issuer=\"${CLOUDRON_OIDC_ISSUER}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].client_id=\"${CLOUDRON_OIDC_CLIENT_ID}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].client_secret=\"${CLOUDRON_OIDC_CLIENT_SECRET}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].scopes=[\"openid\", \"email\", \"profile\"]" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].authorization_endpoint=\"${CLOUDRON_OIDC_AUTH_ENDPOINT}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].token_endpoint=\"${CLOUDRON_OIDC_TOKEN_ENDPOINT}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].userinfo_endpoint=\"${CLOUDRON_OIDC_PROFILE_ENDPOINT}\"" /app/data/configs/homeserver.yaml
        # https://s3lph.me/ldap-to-oidc-migration-3-matrix.html
        yq eval -i ".oidc_providers[0].allow_existing_users=true" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].skip_verification=true" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].user_mapping_provider.config.localpart_template=\"{{ user.sub }}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].user_mapping_provider.config.display_name_template=\"{{ user.name }}\"" /app/data/configs/homeserver.yaml
        yq eval -i ".oidc_providers[0].user_mapping_provider.config.email_template=\"{{ user.email }}\"" /app/data/configs/homeserver.yaml
    else

Basically, the script should not update the `localpart_template` key in `homeserver.yaml` if its value was set (manually) to `null` (changing it to `null` is maybe easier to detect than just commenting it out).

-

OIDC customization settings not persistent

Hello,

I want to comment out the following entries in `homeserver.yaml` so that users can define their Matrix usernames.

    user_mapping_provider:
      config:
        #localpart_template: '{{ user.sub }}'
        #display_name_template: '{{ user.name }}'
        email_template: '{{ user.email }}'

But when restarting the app, the two lines are added again, uncommented, overwriting my changes.
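A minimal sketch of how the startup script could respect a manual edit, as suggested elsewhere in this thread. It assumes the mikefarah `yq` v4 that the `yq eval -i` calls in `start.sh` imply. The helper name `set_if_absent` is hypothetical and not part of the Cloudron package. It writes a default only when the key is missing, so a key that was set by hand (including to `null`) would survive a restart:

```shell
# Hypothetical helper, not part of the Cloudron package.
# Writes a default only when KEY is absent under PARENT in FILE.
# A key that exists, even with the value null, is left untouched.
set_if_absent() {
    local file="$1" parent="$2" key="$3" value="$4"
    local present

    # has() prints "true" when the key exists. If the parent map itself is
    # missing, the check does not print "true" and the default is written.
    present="$(yq eval "${parent} | has(\"${key}\")" "$file" 2>/dev/null)"

    if [ "$present" = "true" ]; then
        echo " ==> Keeping existing ${key}"
    else
        yq eval -i "${parent}.${key} = \"${value}\"" "$file"
    fi
}
```

In `start.sh`, the unconditional line for `localpart_template` could then become `set_if_absent /app/data/configs/homeserver.yaml '.oidc_providers[0].user_mapping_provider.config' localpart_template '{{ user.sub }}'`. Whether Synapse treats a `null` template the same as an unset one is an assumption here and should be checked against the Synapse documentation.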

-

ControlR - remote control and remote access

- Main Page: https://controlr.app/

- Git: https://github.com/bitbound/ControlR

- Licence: MIT

- Dockerfile: Yes (https://hub.docker.com/r/bitbound/controlr)

- Demo: https://demo.controlr.app/

- Summary: Remote access, without the strings. Self-hosted remote access and device management that puts you in control. No paywalls, no rug-pulling, no surprises.

- Notes: This is where the energy from Remotely moved, and the package seems in good hands with the help of a sponsorship: https://github.com/bitbound/ControlR/discussions/83

- Alternative to: Rustdesk, Remotely, DWService

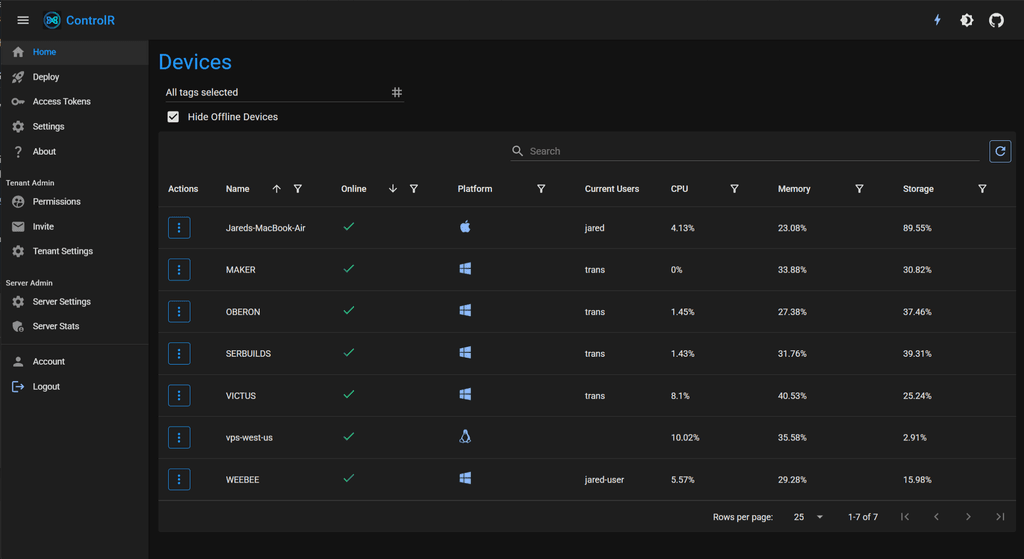

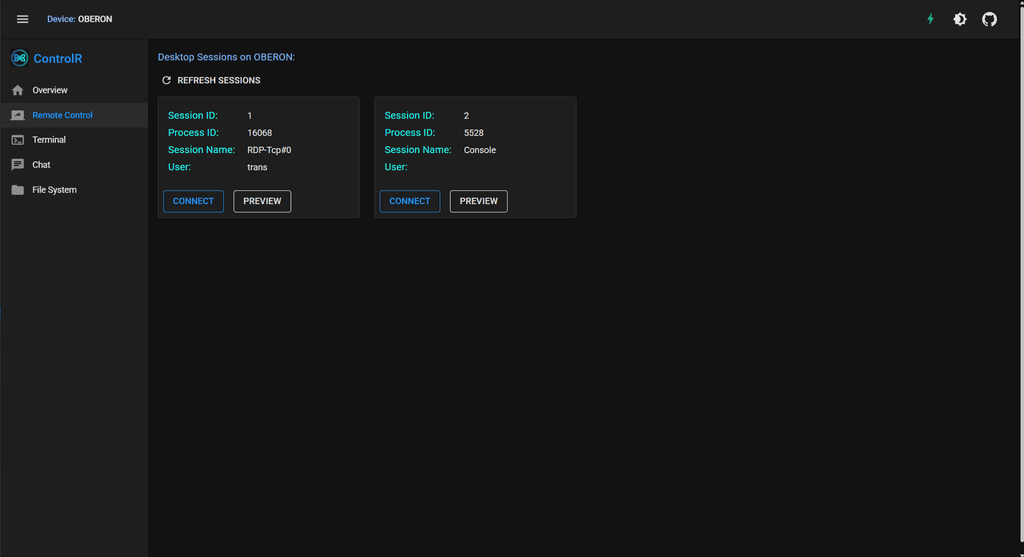

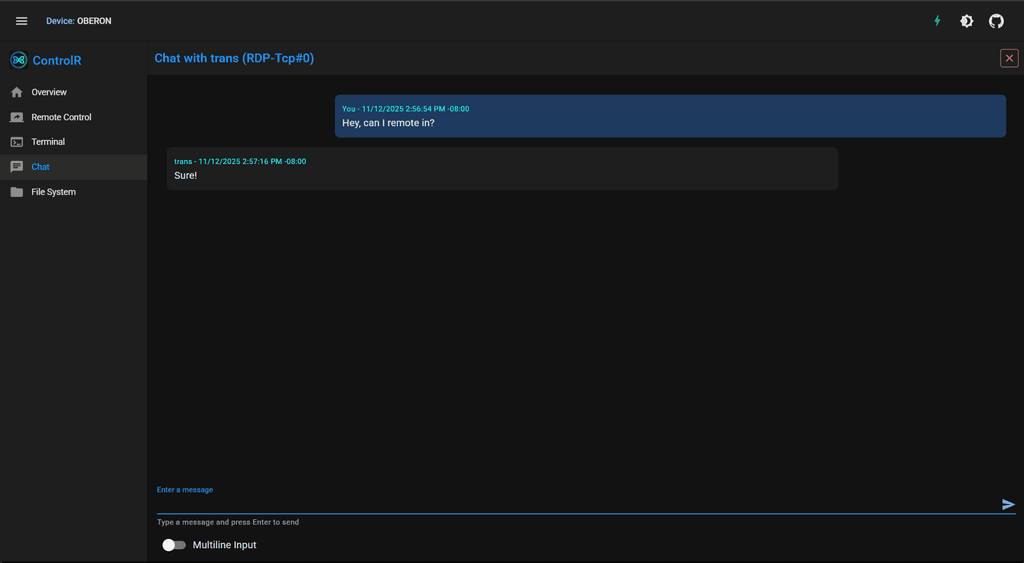

Device Dashboard

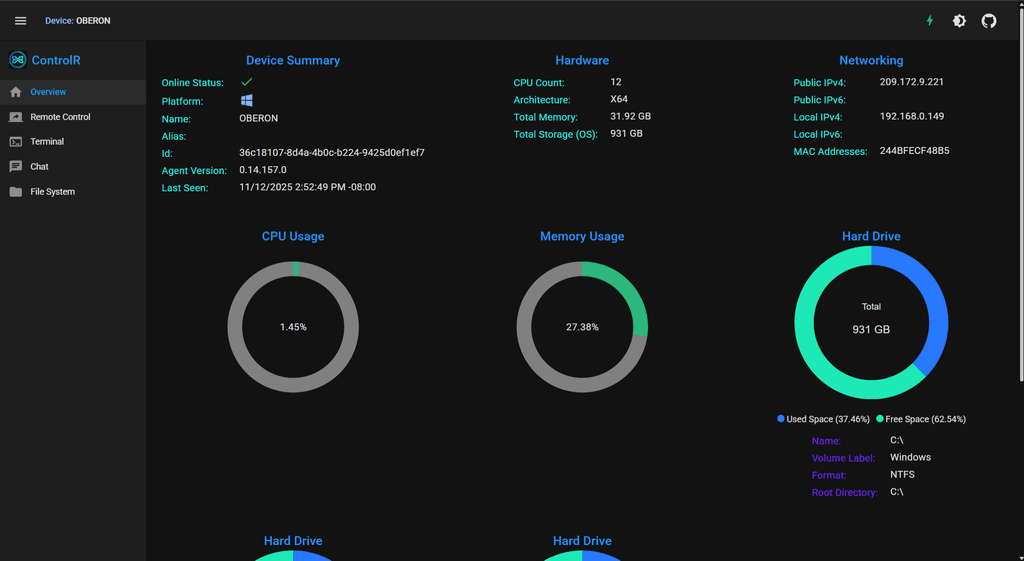

Device Details

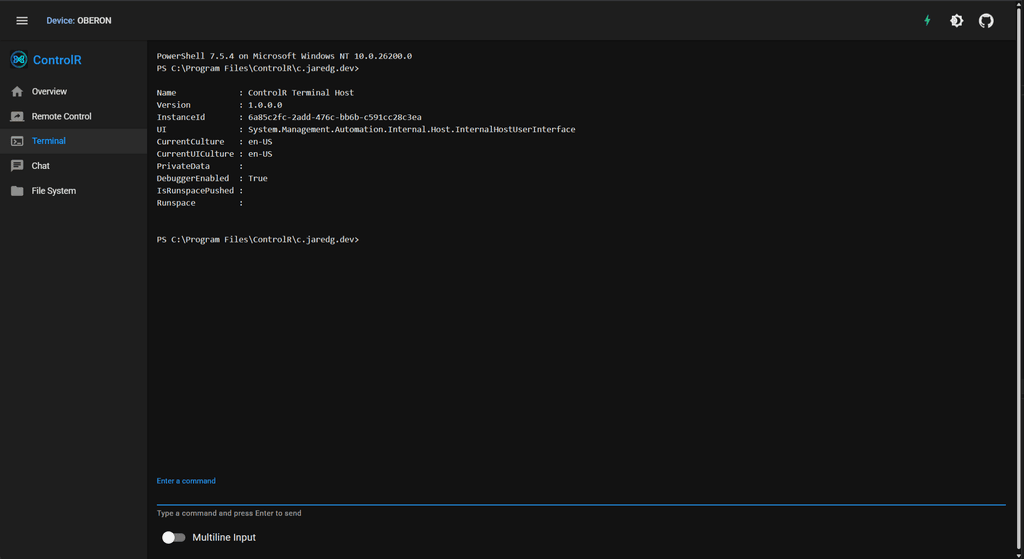

Remote Terminal

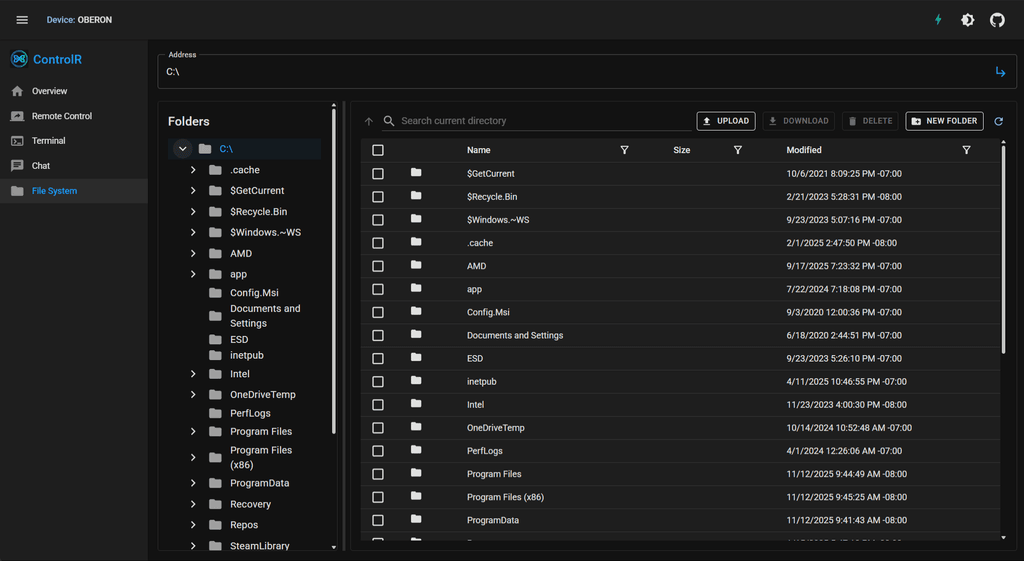

File Manager

Session Selection

Real-Time Chat

-

Link (emoji) assets to app/data

Thank you @james!

I'll maybe open an upstream issue for the missing images. I guess the expected behaviour would be that at least the emoji picker simply omits the images not present in the emoji folder. For existing posts, it's understandable that a broken image is shown.

-

Link (emoji) assets to app/data

@mononym said in Link (emoji) assets to app/data:

> replace the 6 standard reaction emojis manually with custom images from within the Discourse admin UI. One has to set the name heart, +1, etc. before uploading the file. The new images show up as reactions, but now, the original set is not coherent anymore as 6 images differ in style.

Once I had done the steps above, I thought it was all set. Now I have discovered that many more emoji are present in quick access, at least inside the chat interface. @staff would it be possible to move `app/code/public/images/emoji/` into `app/data/.../emoji` so that one could remove entire emoji sets or make customizations at the source, please?
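The request above follows a common packaging pattern: seed a writable copy of the bundled assets once, then point the path the application reads at that copy. A rough sketch, in which the helper name `link_assets` and all paths are hypothetical and do not describe how the Discourse package is actually built:

```shell
# Hypothetical helper: make bundled assets editable by keeping them in a
# writable directory and pointing the original path at that copy.
# Assumes the link path does not already exist as a real directory.
link_assets() {
    local bundled="$1" writable="$2" link="$3"

    # Seed the writable copy once; later starts keep the admin's changes.
    if [ ! -d "$writable" ]; then
        mkdir -p "$(dirname "$writable")"
        cp -R "$bundled" "$writable"
    fi

    # Point the path the application reads at the writable copy.
    ln -sfn "$writable" "$link"
}
```

Since the app code directory on Cloudron is typically read-only at runtime, the symlink itself would probably have to be created when the image is built, with only the seeding step running at startup.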

-

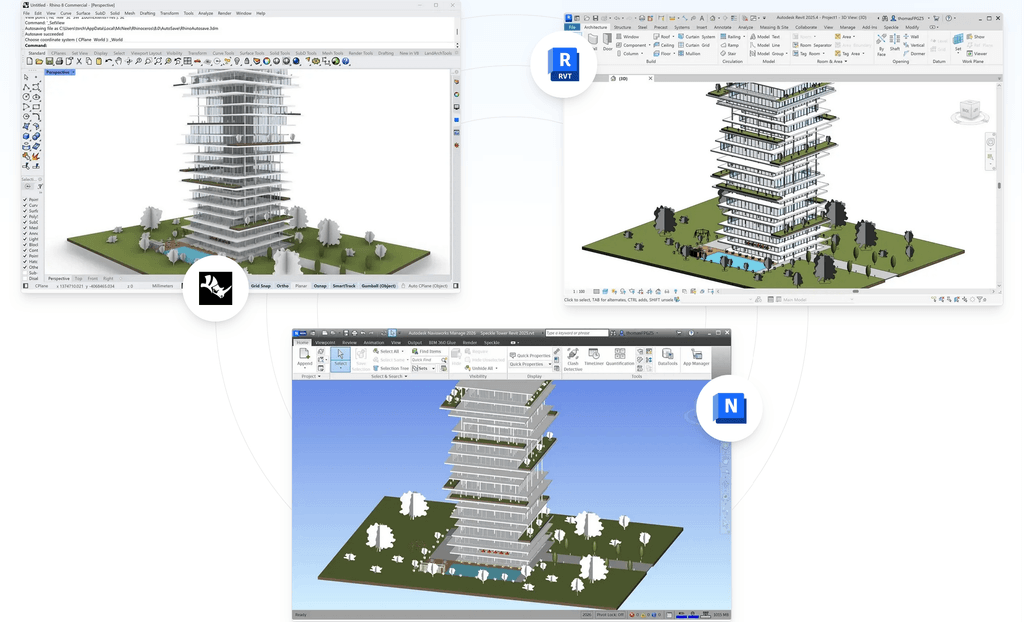

Speckle - sharing models, feedback, and insights across all your CAD & BIM tools.

- Main Page: https://speckle.systems/

- Git: https://github.com/specklesystems/speckle-server

- Licence: Apache + “The Speckle Enterprise Edition (EE) license”

- Dockerfile: Yes; https://docs.speckle.systems/developers/server/getting-started

- Demo:

- Summary: Exchange Design Data. Watch models flow freely across tools and teams. Forget file exports. Share only what's needed to easily coordinate with other disciplines. Server = The Speckle Server, Frontend, 3D Viewer, & other JS utilities.

- Notes: Needs an S3 storage, but hopes are high to get this onto Cloudron.

-

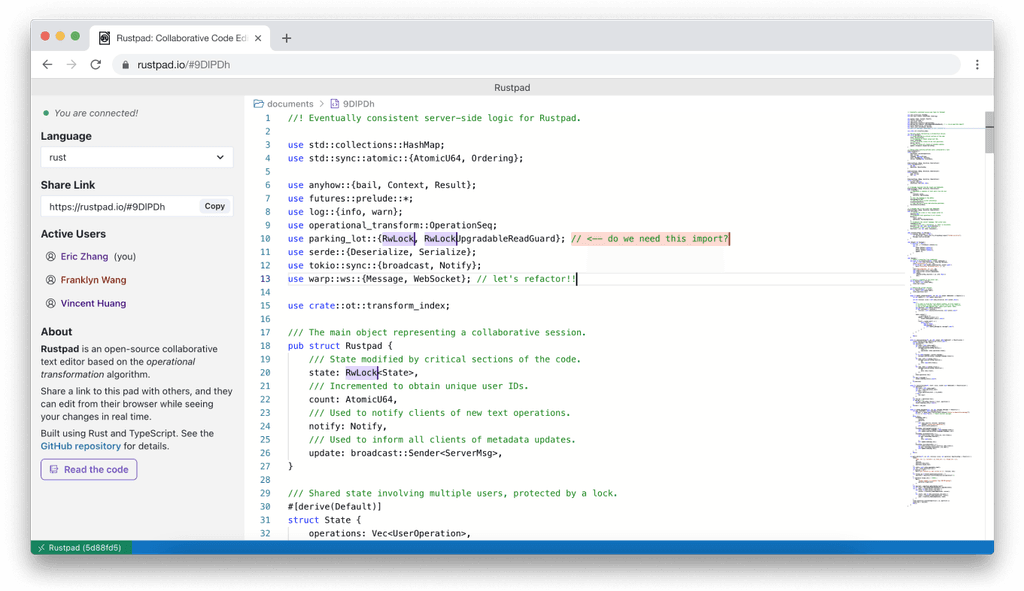

Rustpad on Cloudron - Efficient and minimal collaborative code editor, self-hosted, no database required

- Git: https://github.com/ekzhang/rustpad

- Licence: MIT

- Dockerfile: Yes

- Demo: https://rustpad.io

- Summary: Rustpad is an efficient and minimal open-source collaborative text editor based on the operational transformation algorithm. It lets users collaborate in real time while writing code in their browser. Rustpad is completely self-hosted and fits in a tiny Docker image, no database required.

- Notes: I was looking for a classroom tool in order to code together in real time.

- Alternative to: codepad (abandoned), etherpad (not for code), cryptopad/code (heavy)

-

Add UnifiedPush settings in documentation

@joseph said in Add UnifiedPush settings in documentation:

> Maybe I am missing something but isn't that rule giving anon access to channels starting with 'up'.

I had the same concern, but in the end it made sense. It is write-only access, which certain application servers need to have. For example, I set up Element to use UnifiedPush with the ntfy app on my device. The tests all worked except "Test Push loop back". No idea what that does, but it did work once I configured `"everyone:up*:write-only"` like the suggested settings.

When choosing ntfy for UnifiedPush, the app created a random string (i.e. the ntfy topic), and it starts with `up`. In the end, it is difficult to guess the topic name as it is random, and as long as you're not subscribed to that topic, you won't see the messages either. Basically, write-only access is not enough to use the ntfy server like a public instance, as credentials are necessary to read the topic. So I think this is not really a concern here.

I guess there's more about this on: https://unifiedpush.org
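To illustrate which topics the rule covers, here is a small sketch in which shell globbing stands in for ntfy's own pattern matching. The function name, the sample topic names, and the `deny` fallback (a server that otherwise refuses anonymous access) are assumptions made for the illustration:

```shell
# Illustration only: shell globbing stands in for ntfy's topic-pattern
# matching. The "deny" fallback is an assumption about a locked-down server.
anonymous_permission() {
    case "$1" in
        up*) echo "write-only" ;;   # matched by "everyone:up*:write-only"
        *)   echo "deny" ;;
    esac
}

anonymous_permission "upQ4rT9xZ2"   # made-up topic in the style described above
anonymous_permission "alerts"       # does not start with "up"
```

The pattern only matches at the start of the topic name, so a topic such as `backup` would not be opened up by this rule either.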

-

Add UnifiedPush settings in documentation

Hello.

I suggest adding an entry to the ntfy docs to explain how to make UnifiedPush work. Basically, one needs to add these lines to `app/data/config/server.yml`:

    auth-access:
      - "everyone:up*:write-only"

c.f. https://docs.ntfy.sh/config/#example-unifiedpush

It could also be a setting applied by default.

-

branding.xml not persistent

Hello.

When restarting Jellyfin, the custom CSS rules disappear. One can set them in the app's Dashboard/Branding menu. They then appear in the `app/data/jellyfin/config/branding.xml` file. But after a restart, that file is reset to some standard `branding.xml` file (integrating the SSO button etc., I think).

What's the workaround here?

Thanks!

-

be-BOP on Cloudron - Your Sales. Your Rules.

- Main Page: https://be-bop.io/home

- Git: https://github.com/be-BOP-io-SA/be-BOP

- Licence: AGPL-3.0 license

- Dockerfile: https://github.com/be-BOP-io-SA/be-BOP?tab=readme-ov-file#docker

- Summary: Unifying all your sales channels, free from the middleman. Libre and open-source.

- Notes: Sparse information about the actual thing. I don't care about Bitcoin but for some it could be a motivation to look into it.

- Alternative to: Gumroad

-

Mostlymatter (i.e. FOSS fork of Mattermost)

- Git: https://framagit.org/framasoft/framateam/mostlymatter

- Licence: Apache License 2.0

- Dockerfile: Yes (Mattermost install procedure)

- Demo: https://framateam.org

- Summary: Mostlymatter is a fork of Mattermost without the user limit.

- Notes: YunoHost switched out their package: https://github.com/YunoHost-Apps/mattermost_ynh/issues/506#issuecomment-3697695195

-

Link (emoji) assets to app/data

My first attempt was to replace the 6 standard reaction emojis manually with custom images from within the Discourse admin UI. One has to set the name `heart`, `+1`, etc. before uploading the file. The new images show up as reactions, but now the original set is not coherent anymore, as 6 images differ in style.

My second try was to upload the new emoji under a different name and set the new emoji as the 6 reaction choices. That works at first, but then the reaction plugin refers to the original set when displaying the bundled reactions under a post.

I went back to the first option, but I think the best solution here is still to be able to remove and replace the different emoji folders in `app/data/`.

-

Deploying Anubis (AI Crawler Filtering) on a Cloudron Server

Another project of this sort is iocaine: https://iocaine.madhouse-project.org/documentation/3/getting-started/containers/ I was wondering if there's any chance to get that running.