How do I configure LibreChat to talk to a secured, self-hosted Ollama?

-

Hi

I've installed LibreChat and Ollama on Cloudron and I've followed the checklists for each app.

LibreChat talks to Mistral without any trouble using my API key.

However, I can't make it work with my own Ollama instance, and I don't know what I'm doing wrong.

I get this error from LibreChat:

```
Something went wrong. Here's the specific error message we encountered: An error occurred while processing the request: <html> <head><title>401 Authorization Required</title></head> <body> <center><h1>401 Authorization Required</h1></center> <hr><center>nginx/1.24.0 (Ubuntu)</center> </body> </html>
```

I guess a Bearer token is missing, but even when I add it to the librechat.yaml configuration, the problem remains.

Any shared experience or example would help. I found the documentation very light on this issue, and the examples I've seen of configuring LibreChat for Ollama in other contexts show various base URLs for Ollama; some use 'ollama' as the API key, others provide a Bearer token.

My librechat.yaml config:

```yaml
version: 1.2.8
endpoints:
  custom:
    - name: "Ollama"
      apiKey: "ollama"
      baseURL: "https://ollama-api.<REDACTED>/v1"
      models:
        default:
          - "llama3:latest"
          - "mistral"
          - "gemma:7b"
          - "phi3:mini"
        fetch: false
      headers:
        Authorization: 'Bearer ${OLLAMA_BEARER_TOKEN}'
      titleConvo: true
      titleModel: "current_model"
      summarize: false
      summaryModel: "current_model"
      forcePrompt: false
      modelDisplayLabel: "Ollama"
```

Does it look correct? The `OLLAMA_BEARER_TOKEN` variable is defined in the .env file.

Thanks in advance

-

S SansGuidon marked this topic as a question on

-

Correct. I also tested that I can reach the Ollama API hosted on Cloudron using that same Bearer token:

```shell
curl -v https://ollama-api.<REDACTED>/v1/models -H "Authorization: Bearer <REDACTED>"
```

It doesn't return anything more useful than `{"object":"list","data":null}`, but at least it's a 200, which is a good enough test for me.
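
A 200 carrying `{"object":"list","data":null}` means the token was accepted but the server has no models yet, as pointed out in the next reply. The following is a minimal POSIX shell sketch that makes that distinction explicit. It assumes `OLLAMA_BEARER_TOKEN` is exported and that the reply is compact JSON like the ones quoted in this thread; `list_models` and the script name are hypothetical.

```shell
#!/bin/sh
# Sketch: call /v1/models with a bearer token and list the model ids returned.
# Assumptions: OLLAMA_BEARER_TOKEN is exported, and the reply is compact JSON
# such as {"object":"list","data":null} or {"object":"list","data":[{"id":...}]}.

# Prints one model id per line; returns non-zero when there are none.
list_models() {
  printf '%s\n' "$1" | grep -o '"id":"[^"]*"' | cut -d'"' -f4 | grep .
}

main() {
  base_url="$1"  # e.g. https://ollama-api.<REDACTED>/v1
  # -f makes curl fail on HTTP errors such as the 401 page from nginx.
  body=$(curl -fsS \
    -H "Authorization: Bearer ${OLLAMA_BEARER_TOKEN:?set OLLAMA_BEARER_TOKEN first}" \
    "${base_url%/}/models") || exit 1
  if ! list_models "$body"; then
    echo "Token accepted, but no models are installed yet." >&2
    exit 1
  fi
}

# Only hit the network when a base URL is passed on the command line.
if [ "$#" -gt 0 ]; then
  main "$@"
fi
```

Usage: `sh check-models.sh "https://ollama-api.<REDACTED>/v1"`. If it reports no models, one can be added from the Ollama app's web terminal, e.g. with `ollama pull tinyllama`, which downloads the model without opening an interactive chat the way `ollama run` does.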

-

Hello @SansGuidon

Your API request returned `{"object":"list","data":null}`, which indicates that no models were found. For testing, I ran this command in the web terminal of the Ollama app:

```shell
ollama run tinyllama
```

Once that was done, I now get:

```
curl https://ollama-api.cloudron.dev/v1/models -H "Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiJhZG1pbiIsImlhdCI6MTc2MTgxNjM1OSwiZXhwIjoxNzYxODE5OTU5fQ.YLZtAuIjqnApthTBfuoPyyyJ5a7N2wywn2GW9dTqUeU"
{"object":"list","data":[{"id":"tinyllama:latest","object":"model","created":1761817528,"owned_by":"library"}]}
```

After reproducing your setup, I also get the HTTP 401 error.

I suspect that the `apiKey:` setting is wrong, since we use bearer auth.

And yes, that was it. Here is my working config for LibreChat:

```yaml
version: 1.2.8
endpoints:
  custom:
    - name: "Ollama"
      baseURL: "https://ollama-api.cloudron.dev/v1/"
      models:
        default: [ "tinyllama:latest" ]
        fetch: true
      titleConvo: true
      titleModel: "current_model"
      summarize: false
      summaryModel: "current_model"
      forcePrompt: false
      modelDisplayLabel: "Ollama"
      headers:
        Authorization: "Bearer ${OLLAMA_BEARER_TOKEN}"
```

Always happy to help.

-

The LibreChat logs show this on restart:

```
Oct 30 11:23:19 2025-10-30 10:23:19 error: [indexSync] error fetch failed
```

But in the Ollama logs I see no request at that timestamp.

But when I try to send a prompt, I do see requests:

```
Oct 30 11:24:15 172.18.0.1 - - [30/Oct/2025:10:24:15 +0000] "GET /api/tags HTTP/1.1" 401 188 "-" "axios/1.12.1"
Oct 30 11:24:17 172.18.0.1 - - [30/Oct/2025:10:24:17 +0000] "POST /api/chat HTTP/1.1" 401 188 "-" "ollama-js/0.5.14 (x64 linux Node.js/v22.14.0)"
```

So something is astray here.
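
Judging by these log lines, LibreChat's Ollama endpoint talks to Ollama's native `/api/tags` and `/api/chat` routes rather than the `/v1` routes in the `baseURL`, so a curl test against `/v1/models` alone does not exercise the same path. The following is a POSIX shell sketch that checks both routes with and without the bearer header. It assumes `OLLAMA_BEARER_TOKEN` is exported; the script and function names are hypothetical.

```shell
#!/bin/sh
# Sketch: print the HTTP status of the OpenAI-compatible route (/v1/models)
# and the native route (/api/tags), with and without the bearer header.
# Assumption: OLLAMA_BEARER_TOKEN holds the token used in the curl tests.

# Strip a trailing slash and a /v1 suffix to get the server root.
ollama_root() {
  base="${1%/}"
  printf '%s\n' "${base%/v1}"
}

# Print "<status> <url>" for a GET request; extra args are passed to curl.
status_of() {
  url="$1"
  shift
  curl -sS -o /dev/null -w '%{http_code} %{url_effective}\n' "$@" "$url"
}

main() {
  root=$(ollama_root "$1")
  auth="Authorization: Bearer ${OLLAMA_BEARER_TOKEN:?set OLLAMA_BEARER_TOKEN first}"
  for path in /v1/models /api/tags; do
    status_of "$root$path"             # without the Authorization header
    status_of "$root$path" -H "$auth"  # with the Authorization header
  done
}

# Only hit the network when a base URL is passed on the command line.
if [ "$#" -gt 0 ]; then
  main "$@"
fi
```

Usage: `sh check-routes.sh "https://ollama-api.<REDACTED>/v1"`. If `/api/tags` answers 200 with the header and 401 without it, the proxy is behaving and the remaining question is whether the client actually sends the header.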

-

Thanks @james

-

I was able to capture the headers that are sent to Ollama.

```
// request made when re-trying the prompt ⚠️ the auth bearer header is missing
POST /mirror HTTP/1.1
X-Original-URI: /api/chat
Host: 127.0.0.1:6677
Connection: close
Content-Length: 114
Content-Type: application/json
Accept: application/json
User-Agent: ollama-js/0.5.14 (x64 linux Node.js/v22.14.0)
accept-language: *
sec-fetch-mode: cors
accept-encoding: br, gzip, deflate

// manual curl request - header is there
GET /mirror HTTP/1.1
X-Original-URI: /v1/models
Host: 127.0.0.1:6677
Connection: close
user-agent: curl/8.5.0
accept: */*
authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiJhZG1pbiIsImlhdCI6MTc2MTgxNjM1OSwiZXhwIjoxNzYxODE5OTU5fQ.YLZtAuIjqnApthTBfuoPyyyJ5a7N2wywn2GW9dTqUeU
```

So for some reason the header is not sent.

-

Thanks @james for confirming my suspicion. But Ollama does accept the header, so I guess the problem is on the LibreChat side, right?

-

There was just a comment.

> Thanks, this is specific to Ollama as it still uses some legacy code where headers are not expected.
>
> You can already work around it by changing the custom endpoint name from "ollama" to something else, but I'm pushing a simple fix.

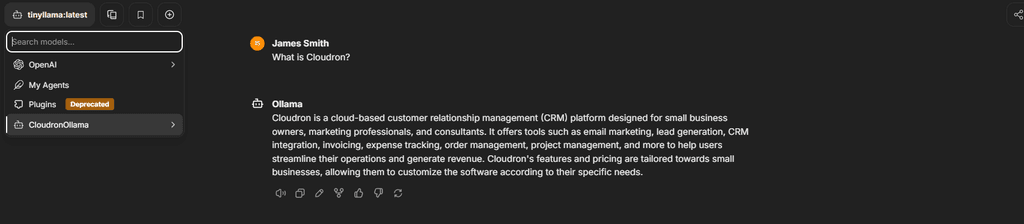

I tested this with the following config:

```yaml
version: 1.2.8
endpoints:
  custom:
    - name: "CloudronOllama"
      apiKey: "ollama"
      baseURL: "https://ollama-api.cloudron.dev/v1/"
      models:
        default: ["tinyllama:latest"]
        fetch: true
      titleConvo: true
      titleModel: "current_model"
      summarize: false
      summaryModel: "current_model"
      forcePrompt: false
      modelDisplayLabel: "Ollama"
      headers:
        Content-Type: "application/json"
        Authorization: "Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiJhZG1pbiIsImlhdCI6MTc2MTgxNjM1OSwiZXhwIjoxNzYxODE5OTU5fQ.YLZtAuIjqnApthTBfuoPyyyJ5a7N2wywn2GW9dTqUeU"
```

And indeed, this worked! But danny-avila already created a PR to fix this issue.

So, to get it working right now, just change `- name: "Ollama"` to e.g. `- name: "CloudronOllama"`.
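
Until a LibreChat build with that fix is deployed, a quick pre-flight check can catch the problematic name before a restart. This POSIX shell sketch only greps `librechat.yaml` (it is not a YAML parser), assumes one `name:` entry per line as in a normal multi-line config, and matches case-insensitively because the thread's `"Ollama"` was affected as well as `"ollama"`. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: flag a custom endpoint named "ollama" (any letter case) in
# librechat.yaml, the name that hit the legacy code path which does not send
# custom headers. A plain grep, not a YAML parser; expects one "name:" per line.

# Returns 0 when the file contains a line like:  - name: "Ollama"
uses_legacy_name() {
  grep -Eiq "^[[:space:]-]*name:[[:space:]]*[\"']?ollama[\"']?[[:space:]]*\$" "$1"
}

# Only inspect a file when a path is passed on the command line.
if [ "$#" -gt 0 ]; then
  if uses_legacy_name "$1"; then
    echo "$1: rename the \"ollama\" endpoint (e.g. to CloudronOllama) so custom headers are sent." >&2
    exit 1
  fi
  echo "$1: no endpoint named \"ollama\" found."
fi
```

Usage: `sh check-name.sh /path/to/librechat.yaml`. The other fields in the config, including `modelDisplayLabel: "Ollama"`, are not matched, since the check only looks at `name:` keys.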

-

Changing the name works for me too! Thanks @james

-

J joseph marked this topic as a regular topic on