Installing Whisper

-

Out of curiosity, since a quick search didn't turn up what I was looking for: is it possible to install Whisper in OpenWebUI? It doesn't seem so.

-

@firmansi I mean running the OpenAI Whisper model for transcription.

-

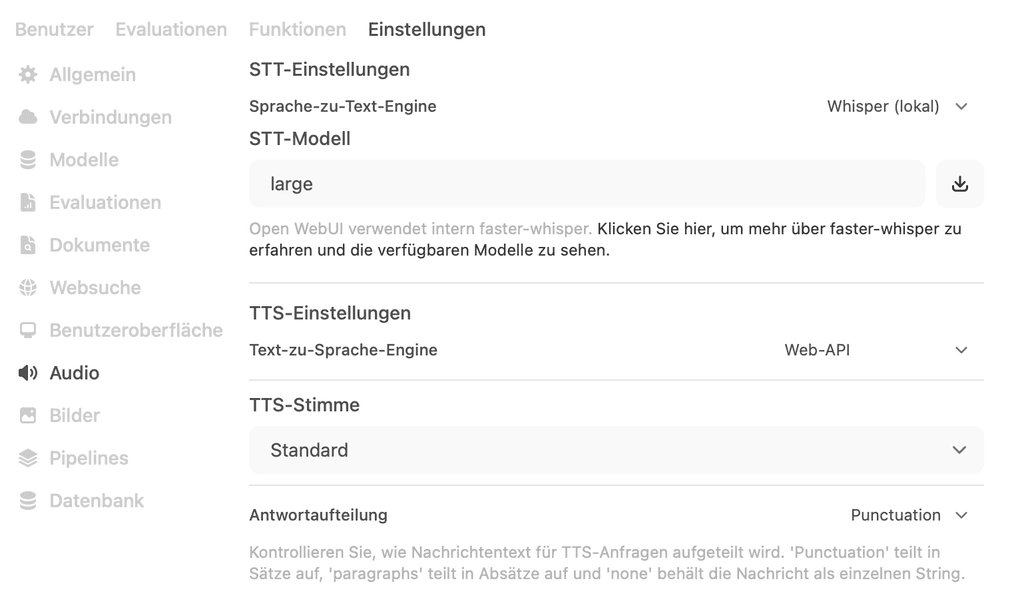

You mean speech to text? If so, you can enable it under Admin Settings > Audio.

@firmansi Hmm, I see. But how do I then select it in the interface? For example, say I have an audio file. What are the steps in OpenWebUI to get a transcription of that file?

-

The steps are:

- Choose the OpenAI STT setting

- Enter the OpenAI API base URL

- Enter your API key

- Set the STT model to whisper-large-v3 or any other model you prefer
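To check the base URL, key and model from those settings outside OpenWebUI, here is a minimal Python sketch that builds (but does not send) a request to an OpenAI-compatible transcription endpoint. It assumes the endpoint is `{base_url}/audio/transcriptions` and takes the multipart form fields `model` and `file`, as OpenAI's API does. The function name `build_transcription_request` and the example values are hypothetical, this is not how OpenWebUI itself makes the call, and whether `whisper-large-v3` is accepted depends on the provider behind the base URL.

```python
import urllib.request
import uuid


def build_transcription_request(base_url: str, api_key: str, model: str,
                                filename: str, audio: bytes) -> urllib.request.Request:
    """Build (without sending) a multipart/form-data POST to an
    OpenAI-compatible /audio/transcriptions endpoint."""
    boundary = uuid.uuid4().hex
    crlf = b"\r\n"
    body = b"".join([
        # Text field: the STT model name, e.g. "whisper-large-v3"
        f"--{boundary}".encode(), crlf,
        b'Content-Disposition: form-data; name="model"', crlf, crlf,
        model.encode(), crlf,
        # File field: the raw audio bytes
        f"--{boundary}".encode(), crlf,
        f'Content-Disposition: form-data; name="file"; filename="{filename}"'.encode(), crlf,
        b"Content-Type: application/octet-stream", crlf, crlf,
        audio, crlf,
        # Closing boundary marks the end of the form
        f"--{boundary}--".encode(), crlf,
    ])
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/audio/transcriptions",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

Sending the request with `urllib.request.urlopen(req)` against OpenAI's API should return JSON with the transcript in a `text` field; an authentication or "model not found" error at this point usually points to the key or model name rather than to OpenWebUI.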

@firmansi Yes, I've gotten that far. But when I start a new chat, I can't select the Whisper models.

-

Seems like I'm not the only one struggling to understand the Whisper implementation: https://github.com/open-webui/open-webui/issues/2248

-

Do you have a GPU for this? Otherwise it will be very slow.

There have been speedups using JAX, but those still run on GPUs.

Once you get it working, let me know how long it takes for x minutes of audio.

@robi Nope, just a CPU. But it seems very manageable. The Faster Whisper repo says that transcribing 13 minutes of audio on 8 threads of an Intel Core i7-12700K CPU takes roughly a minute.
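That benchmark works out to a real-time factor of about 13x (13 minutes of audio in about 1 minute), so a rough answer to "how long for x minutes of audio" is x / 13 minutes. The Python sketch below makes that linear estimate; it assumes the same model, hardware and settings as the benchmark and constant throughput, which real runs only approximate. The function name is hypothetical.

```python
def estimate_transcription_minutes(audio_minutes: float,
                                   ref_audio_minutes: float = 13.0,
                                   ref_wall_minutes: float = 1.0) -> float:
    """Linear estimate of wall-clock transcription time from one benchmark point.

    Assumes throughput stays constant, which real workloads only approximate.
    """
    speedup = ref_audio_minutes / ref_wall_minutes  # ~13x faster than real time
    return audio_minutes / speedup


for minutes in (13, 30, 60):
    print(f"{minutes} min of audio -> ~{estimate_transcription_minutes(minutes):.1f} min")
```

Plugging in your own measured run as `ref_audio_minutes` and `ref_wall_minutes` gives a more realistic estimate for your hardware than the repo's figure.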

-

BTW, it seems to automatically recognize any audio file you throw at it, whether from the microphone or a file upload, and it runs the speech-to-text (STT) step locally before passing the text on to the selected model. The logs and graphs are pretty clear about this. The documentation, however, is not, lol.