Wiby Personal Search Engine

-

With the advent of private local AI and the need to source fresh information for it, a personal search engine like this is essential for building your own private, uncensored index of the internet.

https://github.com/wibyweb/wiby/

Build Your Own Search Engine

(Wiby Install Guide)

Overview

Installation

Controlling

Scaling

Overview

Wiby is a search engine for the World Wide Web. The source code is now free as of July 8, 2022 under the GPLv2 license. I have been longing for this day! You can watch a quick demo. It includes a web interface allowing guardians to control where, how far, and how often it crawls websites and follows hyperlinks. The search index is stored inside of an InnoDB full-text index.

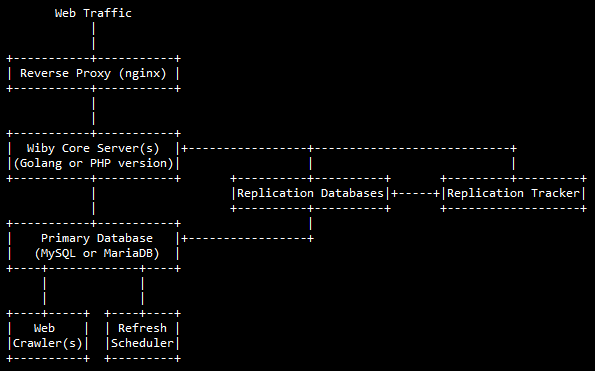

Fast queries are maintained by concurrently searching different sections of the index across multiple replication servers or across duplicate server connections, returning a list of top results from each connection, then searching the combined list to ensure correct ordering. Replicas that fail are automatically excluded; new replicas are easy to include. As new pages are crawled, they are stored randomly across the index, ensuring each search section can obtain relevant results.
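To make the fan-out and merge idea concrete, here is a minimal Go sketch. It is not Wiby's actual code: the Page and Hit types, the searchSection and Search functions, and the score (a simple count of how often the query appears) are all assumptions made for illustration. The in-memory slices stand in for index sections that Wiby would query over database connections, and failed replicas are not modelled.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
)

// Page is a hypothetical index row; Hit is a hypothetical scored result.
type Page struct {
	URL  string
	Body string
}

type Hit struct {
	URL   string
	Score int
}

// sortHits orders hits by descending score, breaking ties by URL.
func sortHits(hits []Hit) {
	sort.Slice(hits, func(i, j int) bool {
		if hits[i].Score != hits[j].Score {
			return hits[i].Score > hits[j].Score
		}
		return hits[i].URL < hits[j].URL
	})
}

// searchSection returns the top k hits from one section of the index.
// The score is an assumed stand-in: the number of times the query occurs.
func searchSection(section []Page, query string, k int) []Hit {
	var hits []Hit
	q := strings.ToLower(query)
	for _, p := range section {
		if n := strings.Count(strings.ToLower(p.Body), q); n > 0 {
			hits = append(hits, Hit{p.URL, n})
		}
	}
	sortHits(hits)
	if len(hits) > k {
		hits = hits[:k]
	}
	return hits
}

// Search queries every section concurrently, combines the per-section
// top lists, then re-orders the combined list and keeps the top k.
func Search(sections [][]Page, query string, k int) []Hit {
	results := make([][]Hit, len(sections))
	var wg sync.WaitGroup
	for i, s := range sections {
		wg.Add(1)
		go func(i int, s []Page) {
			defer wg.Done()
			results[i] = searchSection(s, query, k)
		}(i, s)
	}
	wg.Wait()

	var merged []Hit
	for _, r := range results {
		merged = append(merged, r...)
	}
	sortHits(merged)
	if len(merged) > k {
		merged = merged[:k]
	}
	return merged
}

func main() {
	sections := [][]Page{
		{{"a.example", "retro computers and more retro computers"}, {"b.example", "gardening"}},
		{{"c.example", "a page about computers"}, {"d.example", "cooking"}},
	}
	for _, h := range Search(sections, "computers", 2) {
		fmt.Println(h.URL, h.Score)
	}
}
```

Each section only has to return its own top k, because a page outside a section's top k cannot appear in the overall top k.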

The search engine is not meant to index the entire web and then sort it with a ranking algorithm. It prefers to seed its index through human submissions made by guests, or by the guardian(s) of the search engine.

The software is designed for anyone with some extra computers (even a Pi) to host their own search engine catering to whatever niche matters to them. The search engine includes a simple API for meta search engines to harness.

I hope this will enable anyone with a love of computers to cheaply build and maintain a search engine of their own. I hope it can cultivate free and independent search engines, ensuring accessibility of ideas and information across the World Wide Web.

-

Installation

I can only provide manual install instructions at this time.

Note that while the software is functionally complete, it is still in beta. Anticipate that some bugs will be discovered now that the source is released. Ensure that you isolate the search engine from your other important services, and if you are running parts of it out of your home, keep the servers on a separate VLAN. Make sure this VLAN cannot access your router or switch interface. Continue this practice even when the software reaches "1.0".

If you have created a "LAMP", or rather a "LEMP" server before, this isn't much more complicated. If you've never done that, I suggest you find a "LEMP" tutorial.

Build a LEMP server

DigitalOcean tutorials are usually pretty good, so here are links to one for Ubuntu 20 and one for Ubuntu 22.

For the sake of simplicity, assume all instructions are for Ubuntu 20 or 22. If you are on a different distro, adapt the install steps to suit it.

If you don't have a physical server, you can rent computing space by looking for a "VPS provider". This virtual computer will be your reverse proxy, and if you want, it can host everything else too.

Install the following additional packages:

apt install build-essential php-gd libcurl4-openssl-dev libmysqlclient-dev golang git

Get Wiby Source Files

Download the source directly from Wiby here, or from GitHub. The source is released under the GPLv2 license. Copy the source files for Wiby to your server.

Compile the crawler (cr), refresh scheduler (rs), replication tracker (rt):

gcc cr.c -o cr -lmysqlclient -lcurl -std=c99 -O3

gcc rs.c -o rs -lmysqlclient -std=c99 -O3

gcc rt.c -o rt -lmysqlclient -std=c99 -O3

If you get any compile errors, it is likely due to the path of the mysql or libcurl header files. This could happen if you are not using Ubuntu. You might have to locate the correct path for curl.h, easy.h, mysql.h, then edit the #include paths in the source files.

Build the core server application:

The core application is located inside the go folder. Run the following commands after copying the files over to your preferred location:

For Ubuntu 20:

go get -u github.com/go-sql-driver/mysql

For Ubuntu 22 OR latest Golang versions:

go install github.com/go-sql-driver/mysql@latest

go mod init mysql

go get github.com/go-sql-driver/mysql

go build core.go

go build 1core.go

If you are just starting out, you can use '1core'. If you are going to set up replication servers or you are using a computer with a lot of available cores, you can use 'core', but make sure to read the scaling section.

If you want to use 1core on a server separate from your reverse proxy server, modify line 37 of 1core.go: replace 'localhost' with '0.0.0.0' so that it accepts connections over your VPN from your reverse proxy.
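The effect of swapping 'localhost' for '0.0.0.0' can be seen with a small Go sketch. It does not reproduce line 37 of 1core.go: openListener is a hypothetical helper, and port 0 (which asks the operating system for any free port) is used only so the sketch runs anywhere.

```go
package main

import (
	"fmt"
	"net"
)

// openListener is a hypothetical helper that binds a TCP listener on host:port.
// "localhost" accepts connections only from the same machine;
// "0.0.0.0" accepts connections arriving on any network interface.
func openListener(host, port string) (net.Listener, error) {
	return net.Listen("tcp", net.JoinHostPort(host, port))
}

func main() {
	for _, host := range []string{"localhost", "0.0.0.0"} {
		ln, err := openListener(host, "0")
		if err != nil {
			fmt.Println("listen failed:", err)
			continue
		}
		// Print the address the listener actually bound to.
		fmt.Printf("host %q is bound to %s\n", host, ln.Addr())
		ln.Close()
	}
}
```

With 'localhost', only processes on the same machine can connect, which is why a reverse proxy running on another server needs the second form.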

You can also use index.php in the root of the www directory and skip the Go version entirely, though the PHP version is used mainly for prototyping.