Services and all apps down due to cgroups error

-

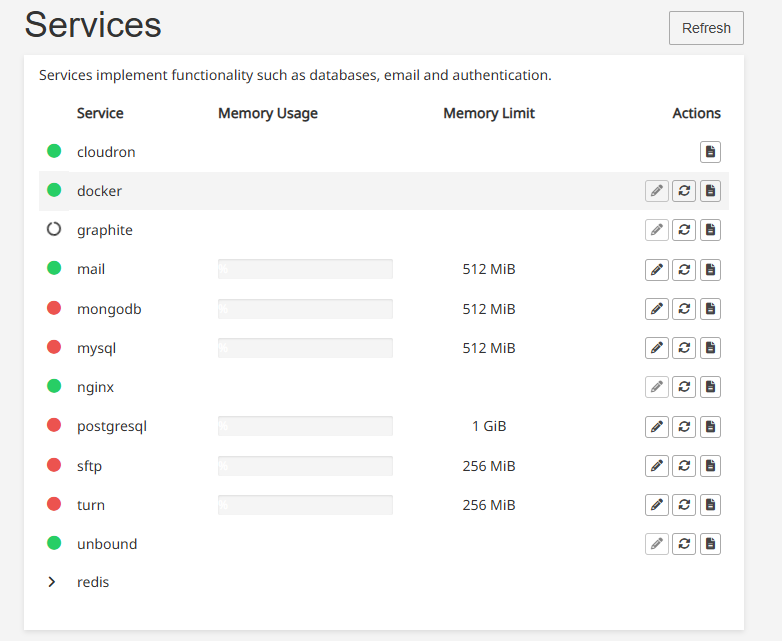

I'm getting an error in a bunch of my services and all apps.

When I try to restart a service, the web UI shows an error message like this:

(HTTP code 500) server error - Cannot restart container mysql: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error setting cgroup config for procHooks process: cannot set memory limit: container could not join or create cgroup: unknown

I rebooted to apply the latest Ubuntu security updates, but the error persists.

-

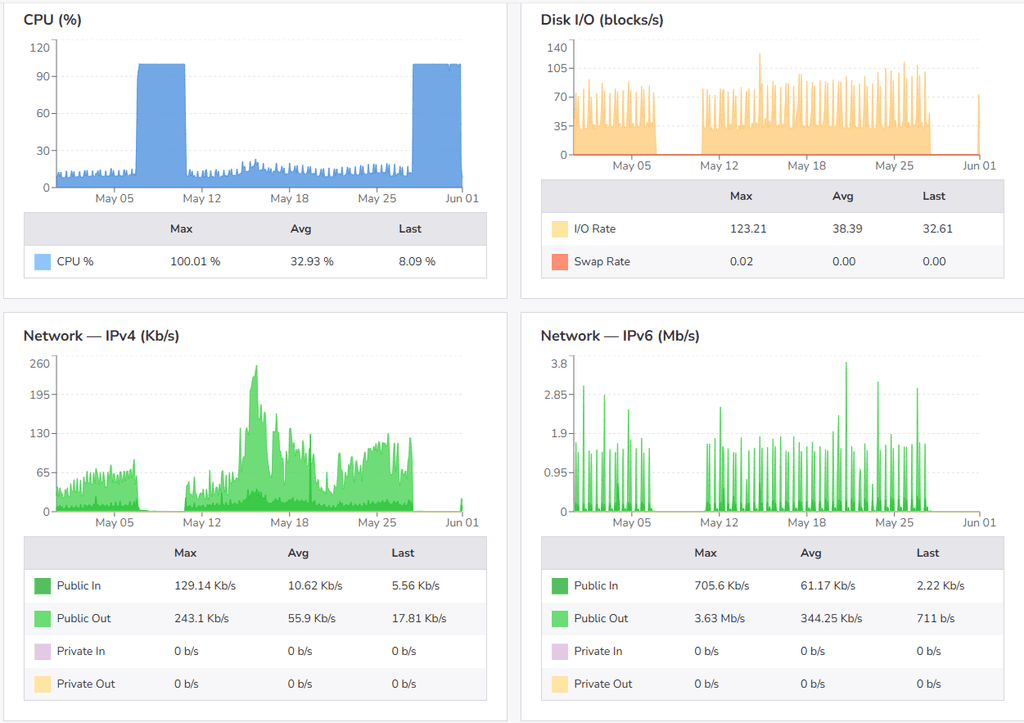

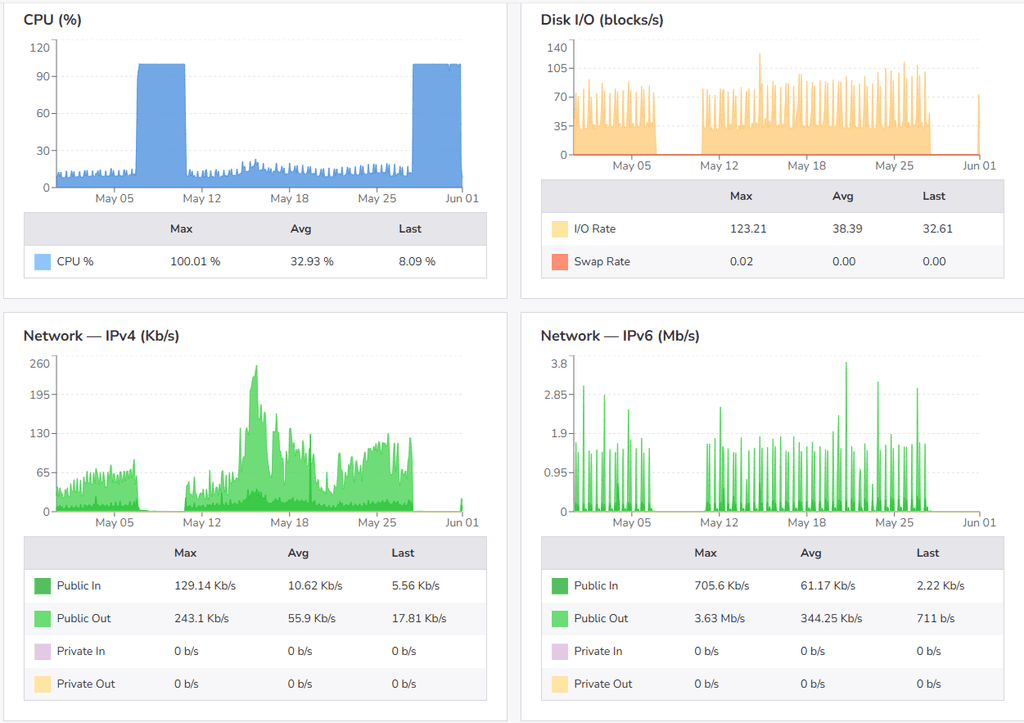

The following pattern occurs often: the server becomes completely unresponsive, I check the cloud dashboard and the server is using 100% CPU for something (I've never figured out what), so I reboot it and it goes back to normal. This cycle has repeated for over a year; I recall that I reported it before, but it's still an issue. Here's what it looked like in May:

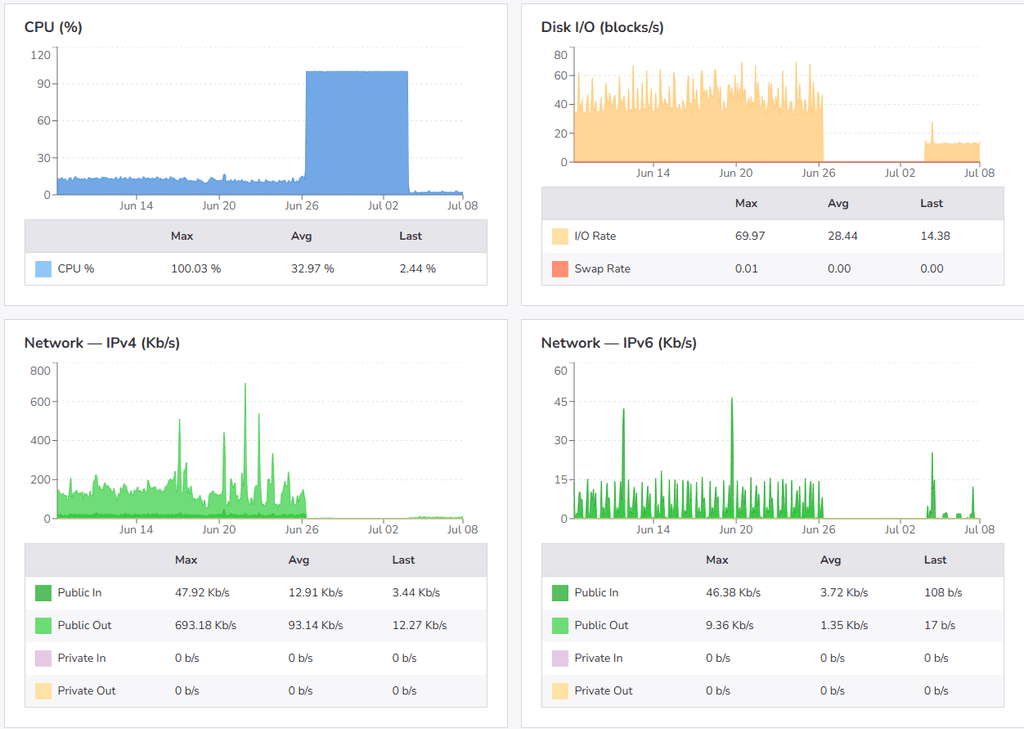

I did this again on July 4th, but this time it looks like it didn't come back ok:

I have plenty of disk space left (> 50 GB).

-

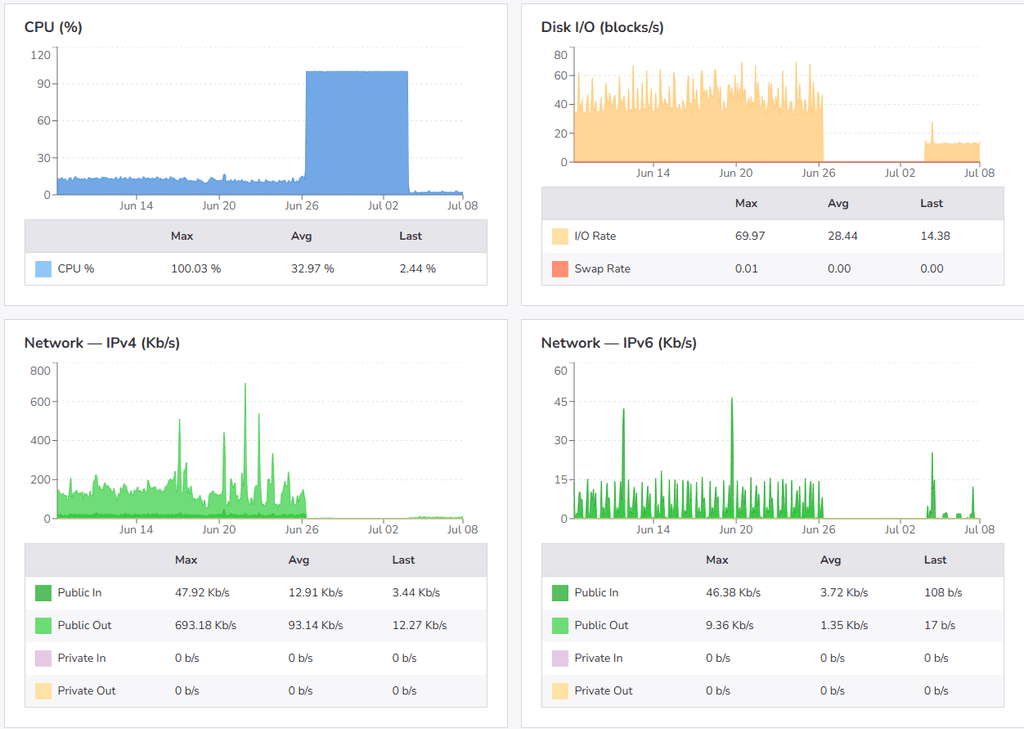


@infogulch out of interest, which VPS provider?

-

Something in the underlying system is off if containers cannot be rebuilt due to cgroup issues. Maybe Docker got updated unexpectedly? I think we would have to take a closer look at the server to avoid too much back and forth. Please enable remote SSH support for us (https://docs.cloudron.io/support/#remote-support) and send another mail to support@cloudron.io with your dashboard domain. We can take a look at it tomorrow.

If you need to get up and running faster, you may also consider restoring to a new server to be on known ground: https://docs.cloudron.io/backups/#restore-cloudron

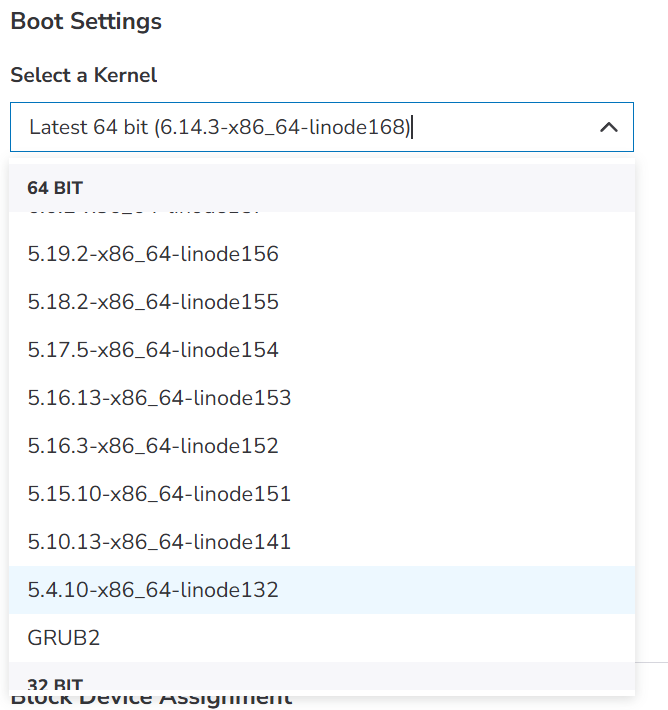

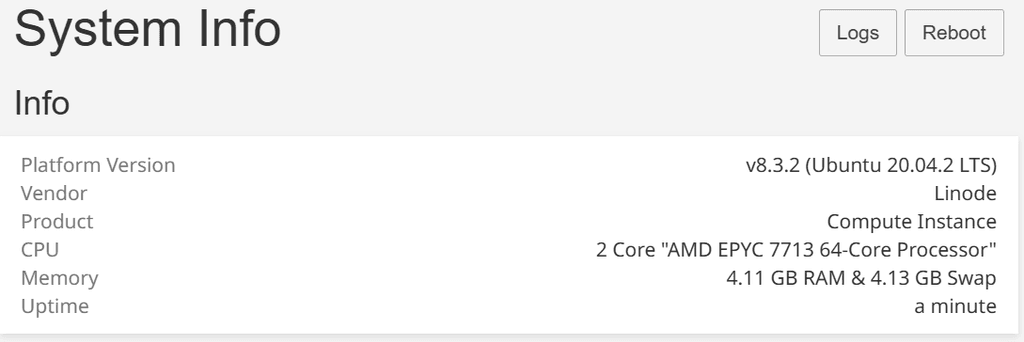

We were able to inspect the server this morning, and after some debugging it turns out the system was booting a kernel that doesn't have memory cgroups enabled. In fact, that kernel wasn't even installed on the system; apparently Linode provides a way to boot the system with other kernels. This appears to be why the containers couldn't start anymore.
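For anyone who wants to check this on their own server, here is a minimal sketch, not necessarily how the inspection above was done. It assumes the kernel exposes `/proc/cgroups` in its usual four-column layout (`subsys_name`, `hierarchy`, `num_cgroups`, `enabled`); the function name `memory_cgroup_enabled` is made up for illustration.

```python
from pathlib import Path


def memory_cgroup_enabled(proc_cgroups_text: str) -> bool:
    """Return True if the 'memory' controller is listed and enabled.

    Expects the text of /proc/cgroups, whose data rows are assumed to be:
    subsys_name  hierarchy  num_cgroups  enabled
    """
    for line in proc_cgroups_text.splitlines():
        # Skip blank lines and the '#subsys_name ...' header row.
        if not line.strip() or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) >= 4 and fields[0] == "memory":
            return fields[3] == "1"
    # No 'memory' row at all: the controller is not available.
    return False


if __name__ == "__main__":
    try:
        text = Path("/proc/cgroups").read_text()
    except OSError:
        print("/proc/cgroups is not readable on this system")
    else:
        print("memory cgroup enabled:", memory_cgroup_enabled(text))
```

If this reports `False`, container runtimes that set memory limits will likely fail in a way similar to the error in the first post.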

-

Everything seems to be back up and running now. Thank you!

Any advice on which kernel version I should be using? There are many options...

Edit: Oh, maybe I should upgrade from Ubuntu 20.04 as a first step...

https://docs.cloudron.io/guides/upgrade-ubuntu-22/

https://docs.cloudron.io/guides/upgrade-ubuntu-24/


-

I think the kernel version is determined by the Ubuntu release. I found a list here: https://askubuntu.com/questions/517136/list-of-ubuntu-versions-with-corresponding-linux-kernel-version and another at https://en.wikipedia.org/wiki/Ubuntu_version_history#Table_of_versions

-

Thank you, that is helpful.

So maybe the correct option is to use the "Direct Disk" option, which boots the kernel installed on the disk, so that OS updates manage the kernel version. This should head off any future issues, and I won't have to coordinate kernel versions when I upgrade to 22.04 and 24.04.
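As a rough way to confirm that the running kernel is one the OS actually installed, something like the sketch below could work. It assumes the usual Ubuntu convention of storing kernel images as `/boot/vmlinuz-<release>`; the helper name `kernel_image_present` is hypothetical, and other distributions may lay out `/boot` differently.

```python
import os
import platform
from typing import Iterable


def kernel_image_present(release: str, boot_files: Iterable[str]) -> bool:
    """Return True if a kernel image for `release` is among the given file names.

    Assumes the Ubuntu naming convention vmlinuz-<release> in /boot.
    """
    return f"vmlinuz-{release}" in set(boot_files)


if __name__ == "__main__":
    release = platform.release()  # release string of the running kernel
    try:
        boot_files = os.listdir("/boot")
    except OSError:
        print("could not list /boot on this system")
    else:
        if kernel_image_present(release, boot_files):
            print(f"running kernel {release} has an image in /boot")
        else:
            print(f"running kernel {release} has no image in /boot; "
                  "it may have been supplied by the hosting provider")
```

A mismatch does not prove anything by itself, but it is a hint that the boot configuration in the provider's dashboard is worth a look.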

-

