Models keep loading indefinitely

-

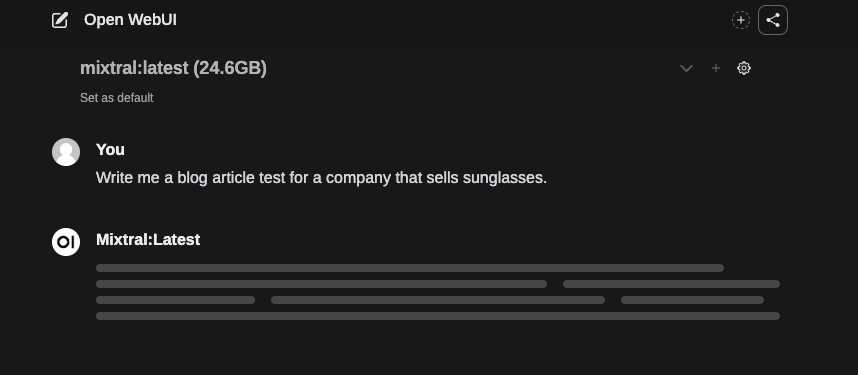

I'm not sure whether this is a real bug, so when in doubt I'd rather bring it up here. I downloaded three different models and the problem occurs with all of them. I have 100 GB of RAM allocated to OpenWebUI, and my infrastructure has 12 cores. Even after restarting the application, it sometimes works, but very often the AI keeps loading without ever answering, leaving me stuck on this page indefinitely:

I don't know whether this is normal, but OpenWebUI doesn't seem to use any RAM at all; it only uses my CPU. According to htop, the application uses less than 1 GB of RAM while it is running.

Also, again according to htop: if I leave the application loading indefinitely and then, after a while, close the chat or the tab, the cores continue to be heavily used.

At one point I tried the Llama2 70B model and let it run for about 40 or even 50 minutes. Then I closed or refreshed the page (I don't remember which), and the answer appeared like magic. I had never seen the response being written; it was as if the interface had stopped updating and kept showing the loading indicator. But the answer had already been generated, and when I refreshed, it appeared.

The problem is that Llama2 70B was probably too big for my infrastructure, but the 7B models... I don't understand why they take so long to load, given that sometimes they load almost instantly.
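As a rough sanity check on "too big": the memory needed just for a model's weights is about parameters × bits per weight ÷ 8 bytes. The sketch below applies that arithmetic. The 4-bit and 16-bit precisions are assumptions, since the thread doesn't say which quantization was downloaded, and real model files plus the context (KV cache) need somewhat more than this lower bound.

```python
def estimate_weight_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Lower-bound size of the model weights alone, in GB (10^9 bytes).

    Ignores quantization scale factors, the KV cache and runtime overhead.
    """
    bytes_total = n_params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Assumed precisions: 4-bit (typical quantized download) and 16-bit (full fp16).
for name, params in [("Llama2 7B", 7), ("Llama2 70B", 70)]:
    for bits in (4, 16):
        print(f"{name} @ {bits}-bit: ~{estimate_weight_memory_gb(params, bits):.1f} GB")
```

By this estimate a 4-bit 70B model (~35 GB) would fit in 100 GB of RAM, which hints that the slowness on a 12-core CPU comes more from CPU throughput or memory bandwidth than from running out of RAM; that is an inference, not something measured in this thread.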

-

-

Okay @girish, thanks for your answer. If you find a server on which they perform well, I'd be very interested.

-

Out of curiosity, regarding current usage: I know that storing the models locally takes up a lot of space, and that this can be handled by using volumes. I'm having the same issue: when receiving responses, it loads as if I were back on dial-up internet. There doesn't seem to be enough logging or metrics, so we're limited in trying to find the cause. I assume I should be looking at CPU usage?
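If the models are served by Ollama behind OpenWebUI (an assumption; the thread doesn't name the backend), the final response of Ollama's `/api/generate` endpoint with `"stream": false` includes timing fields such as `load_duration`, `prompt_eval_duration`, `eval_count` and `eval_duration`, with all durations in nanoseconds. A minimal sketch that turns them into load time and tokens per second, which can say more than CPU usage alone. The helper names `fetch_final_response` and `generation_stats` and the sample numbers are hypothetical.

```python
import json
import urllib.request

NS_PER_S = 1_000_000_000  # Ollama reports durations in nanoseconds


def fetch_final_response(model: str, prompt: str, host: str = "http://localhost:11434") -> dict:
    """POST a non-streaming request to Ollama's /api/generate (assumed default host/port)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        f"{host}/api/generate", data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def generation_stats(resp: dict) -> dict:
    """Convert the timing fields of a final response into seconds and tokens/second."""
    load_s = resp.get("load_duration", 0) / NS_PER_S          # time spent loading the model
    prompt_s = resp.get("prompt_eval_duration", 0) / NS_PER_S  # time spent reading the prompt
    eval_s = resp["eval_duration"] / NS_PER_S                  # time spent generating tokens
    tokens_per_s = resp["eval_count"] / eval_s if eval_s > 0 else 0.0
    return {"load_s": load_s, "prompt_eval_s": prompt_s, "eval_s": eval_s, "tokens_per_s": tokens_per_s}


# Hypothetical sample response (made-up numbers), so this runs without a server.
sample = {
    "load_duration": 3_000_000_000,
    "prompt_eval_duration": 1_500_000_000,
    "eval_count": 120,
    "eval_duration": 24_000_000_000,
}
print(generation_stats(sample))
```

Against a live server one would call `generation_stats(fetch_final_response("<model name>", "Hello"))`. A large `load_s` points at the model being (re)loaded from disk, while a low `tokens_per_s` with the CPU pegged points at raw generation speed.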

-

@JLX89 @Dont-Worry have a look at this thread
