Should (or can) Cloudron check whether the disk has sufficient space before running an app update?

-

I just ran into an issue similar to this one when the Rallly app tried to update today.

My server has about 8 GB of free disk space, but that's apparently not enough to run the update.

The main issue isn't so much the update failing; Cloudron can't do much about that.

The issue is rather that Cloudron keeps attempting the update every day, even though it already failed yesterday for the same reason (not enough disk space on the device).

When it failed yesterday, I didn't have monitoring on the server: I noticed the Rallly app was broken, but didn't understand why. Now I understand that it tried to update yesterday, failed, and did the same again today.

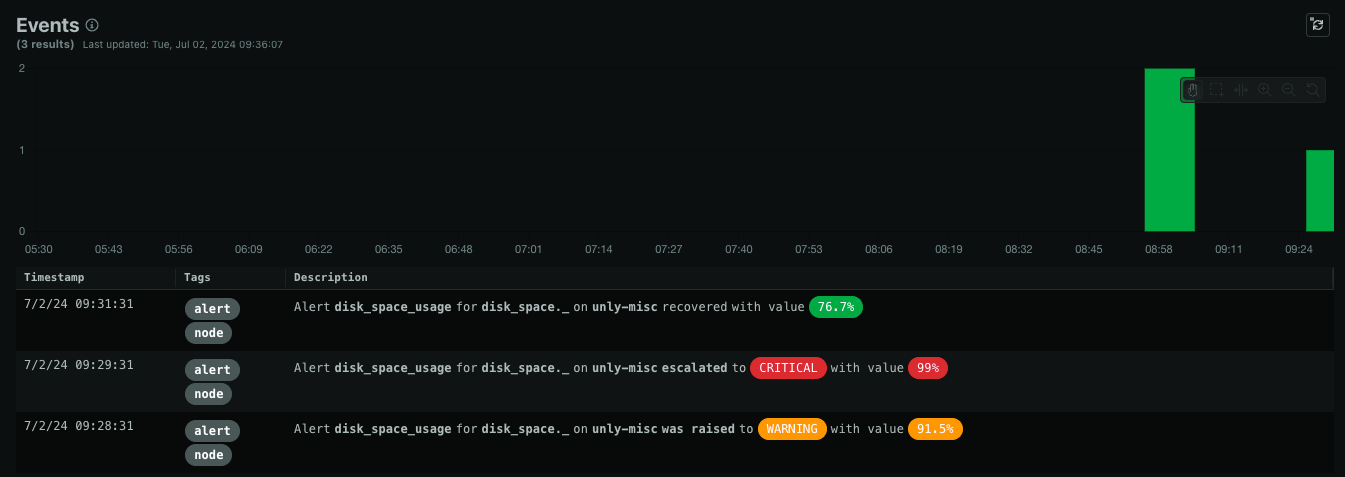

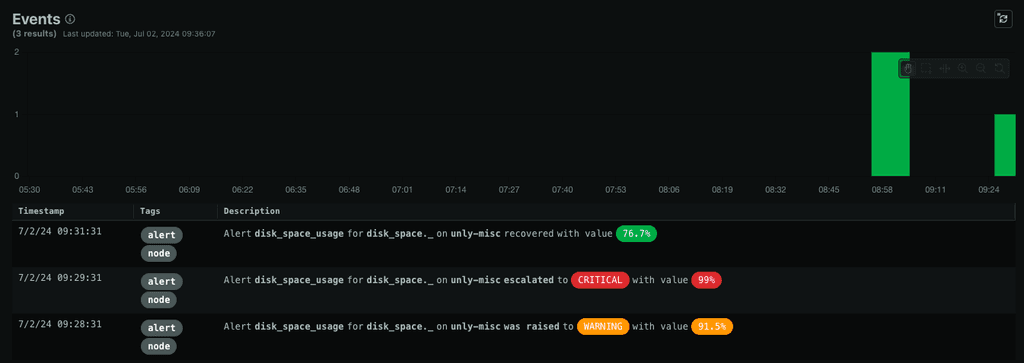

Now that I understand the issue better (thanks to my Netdata monitoring), I wonder about:

- Could Cloudron know beforehand (roughly) how much space an update is going to take? I believe it could know the size of the Docker image it will download, compare that with the remaining disk space, and then raise an alert and/or skip the automatic update if disk space is clearly insufficient.

- If Cloudron tries the update and it fails, could it avoid retrying automatically, knowing it will fail again?
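A rough sketch of both suggestions as shell helpers. The names `enough_space`, `should_retry` and `free_bytes` are hypothetical and not part of Cloudron; the sketch assumes the updater knows the image size in bytes, uses an arbitrary safety factor of 2, and assumes a failed attempt records an error code (`ENOSPC` here) along with the free space at the time. `free_bytes` requires GNU `df`.

```shell
#!/usr/bin/env bash
# Hypothetical pre-update guard; NOT Cloudron's actual updater logic.

# Succeeds if AVAIL_BYTES >= NEEDED_BYTES * FACTOR.
# FACTOR (default 2) is an arbitrary safety margin for extraction and temp files.
enough_space() {
    local avail=$1 needed=$2 factor=${3:-2}
    (( avail >= needed * factor ))
}

# Free bytes on the filesystem holding PATH (requires GNU coreutils df).
free_bytes() {
    df --output=avail -B1 "$1" | tail -n 1 | tr -d ' '
}

# Decide whether an automatic retry makes sense.
# LAST_ERROR: error code recorded for the previous attempt (assumed, e.g. ENOSPC).
# AVAIL_THEN: free bytes when that attempt failed; AVAIL_NOW: free bytes now.
# Skips the retry if the last failure was "disk full" and nothing was freed since.
should_retry() {
    local last_error=$1 avail_then=$2 avail_now=$3
    if [[ $last_error == ENOSPC ]] && (( avail_now <= avail_then )); then
        return 1
    fi
    return 0
}
```

For example, `enough_space "$(free_bytes /var/lib/docker)" 10200000000` fails on a server with about 8 GB free, since a 10.2 GB image exceeds the free space even before the safety margin. `/var/lib/docker` is Docker's default data directory; adjust it if yours differs.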

I know I can disable automatic updates for this app, but that's a manual workaround. I think Cloudron could help us avoid this kind of issue (which might take down other apps in the process).

-

Also, in my case, the issue happened while updating Rallly.

I looked up the size of the Docker image, and it's about 500 MB:

```
root@unly-misc:~# curl -s https://hub.docker.com/v2/repositories/lukevella/rallly/tags/latest/ | jq '.full_size'
510832205
```

So I don't understand why the update fails with 8 GB of disk space left.

Edit: looking at the local Docker images directly, the image is 10.2 GB.

That's odd; I can't explain why it weighs so much:

```
root@unly-misc:~# docker images --format "{{.Repository}}:{{.Tag}} {{.ID}} {{.Size}}"
cloudron/co.rallly.cloudronapp:20240620-132420-727aea519 6724902697a3 10.2GB
...
```

-

We don't use upstream Docker images. Cloudron builds its own image for every app. This is required to meet our deployment requirements (like a read-only filesystem), addon support, etc.

We build it as in https://git.cloudron.io/cloudron/rallly-app. Maybe some optimizations are possible, but the image size is a combination of the base image (which is quite big and includes all the tools needed to debug apps) and the app built on top of it.
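One way to look for those optimizations is to check which layers account for most of the size. `docker history --human=false` prints layer sizes in bytes; the hypothetical `top_layers` helper below only sorts that output and shows the largest entries. It is a diagnostic sketch, not part of Cloudron's tooling.

```shell
#!/usr/bin/env bash
# Reads "SIZE_BYTES<TAB>CREATED_BY" lines on stdin, as produced by
#   docker history --human=false --format '{{.Size}}\t{{.CreatedBy}}' IMAGE
# and prints the N largest layers (default 5), biggest first, sizes in GB.
top_layers() {
    local n=${1:-5}
    sort -t $'\t' -k1,1nr |
        head -n "$n" |
        awk -F '\t' '{ printf "%.2f GB\t%s\n", $1 / 1e9, substr($2, 1, 80) }'
}
```

For example: `docker history --no-trunc --human=false --format '{{.Size}}\t{{.CreatedBy}}' cloudron/co.rallly.cloudronapp:20240620-132420-727aea519 | top_layers 5`. The history also lists the layers inherited from the base image, so a large entry is not necessarily app-specific.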

-

I think something might be wrong with how the Rallly app has been set up, then. The upstream image is 500 MB and Cloudron's is 10 GB, 20 times larger.

I don't see this behavior with other apps:

```
root@unly-misc:~# docker images --format "{{.Repository}}:{{.Tag}} {{.ID}} {{.Size}}"
cloudron/com.metabase.cloudronapp:20240628-191401-64473d75c 702ac40462e0 2.79GB
cloudron/co.rallly.cloudronapp:20240620-132420-727aea519 6724902697a3 10.2GB
cloudron/io.changedetection.cloudronapp:20240617-125108-019d2ff03 b23079c56084 3.92GB
cloudron/tech.ittools.cloudron:20240515-092352-014c36070 3fccf9f15005 2.22GB
registry.docker.com/cloudron/postgresql:5.2.1 333f887a27f7 2.75GB
registry.docker.com/cloudron/sftp:3.8.6 b735f2120189 2.23GB
registry.docker.com/cloudron/mail:3.12.1 ea18fc4dd1c7 2.96GB
registry.docker.com/cloudron/mongodb:6.0.0 4b95d24318a2 2.69GB
registry.docker.com/cloudron/graphite:3.4.3 dbd026164ada 2.28GB
cloudron/it.kutt.cloudronapp:20231017-142419-29446a137 847c1860e3df 2.94GB
registry.docker.com/cloudron/redis:3.5.2 80e7a4079e6b 2.22GB
registry.docker.com/cloudron/mysql:3.4.2 c7085a52532b 2.53GB
registry.docker.com/cloudron/turn:1.7.2 152b1fb9690e 2.22GB
registry.docker.com/cloudron/base:4.2.0 6ec7c1ab3983 2.21GB
```

Other apps are 2-4 GB.
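Note that `docker images` reports each image's full size, including layers shared with other images, so the roughly 2.21 GB `cloudron/base:4.2.0` image is likely counted in every row above (assuming each app image is built on that base; `docker system df -v` shows shared vs. unique size per image). The hypothetical helpers `to_bytes` and `extra_over_base` below convert Docker's decimal size strings to bytes and print how much each image weighs beyond a given base size, as a rough comparison only.

```shell
#!/usr/bin/env bash
# Convert a Docker human-readable size (decimal units as printed by
# `docker images`, e.g. 512B, 3.4kB, 2.79GB) to bytes. Fails on unknown units.
to_bytes() {
    awk -v s="$1" 'BEGIN {
        n = s + 0                              # leading numeric part
        unit = s; sub(/^[0-9.]+/, "", unit)    # remaining unit suffix
        m["B"] = 1; m["kB"] = 1e3; m["MB"] = 1e6; m["GB"] = 1e9; m["TB"] = 1e12
        if (!(unit in m)) exit 1
        printf "%.0f\n", n * m[unit]
    }'
}

# Reads "NAME ID SIZE" lines (the --format used above) and prints how much
# each image weighs beyond BASE_SIZE, largest first. Assumes every image
# shares all of the base image's layers, which is only an approximation.
extra_over_base() {
    local base name _id size bytes
    base=$(to_bytes "$1") || return 1
    while read -r name _id size; do
        bytes=$(to_bytes "$size") || continue
        awk -v n="$name" -v b="$bytes" -v a="$base" \
            'BEGIN { printf "%.2f GB\t%s\n", (b - a) / 1e9, n }'
    done | sort -t $'\t' -k1,1nr
}
```

For example, piping the `docker images --format "{{.Repository}}:{{.Tag}} {{.ID}} {{.Size}}"` output into `extra_over_base 2.21GB` puts Rallly at about 7.99 GB above the base, versus well under 2 GB for the other apps listed.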