App ran out of memory, related to PHP settings?

-

Hey folks,

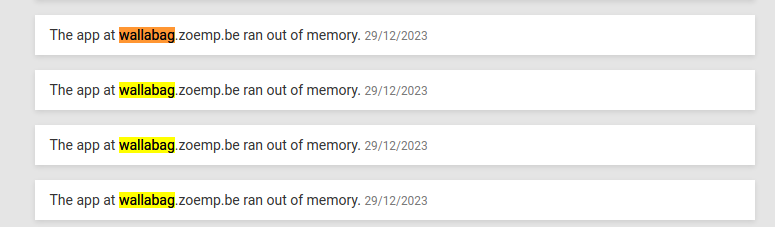

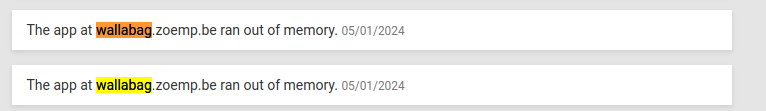

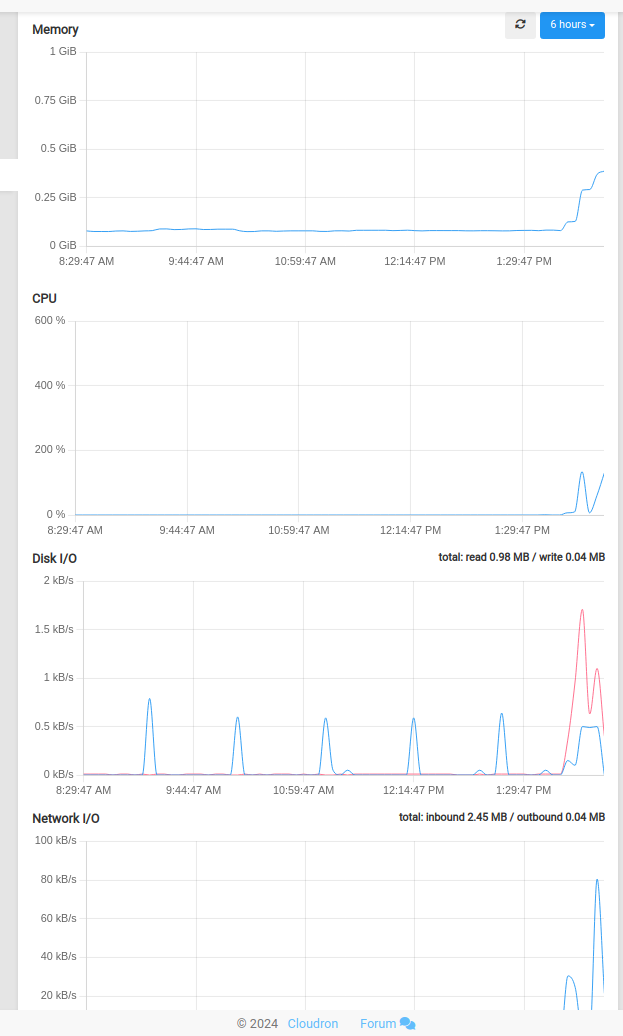

I have noticed recurring memory issues with Wallabag ever since I started using it.

...

Most often the app just restarts. I have also increased the memory a few times without noticing any improvement.

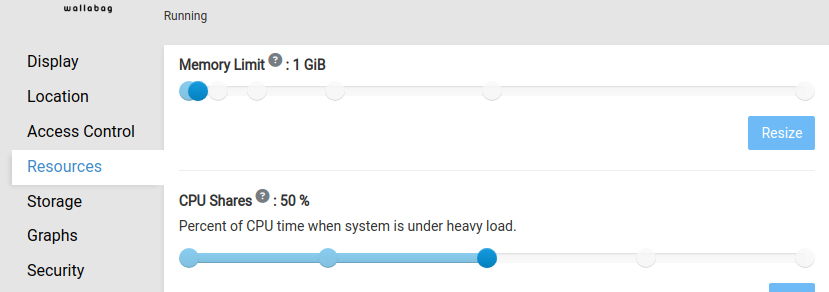

For example, when I save a link to a large file to read later, Wallabag does not seem to have enough memory (even with 1 GB allocated in Cloudron) to handle a 20 MB PDF, so it crashes.

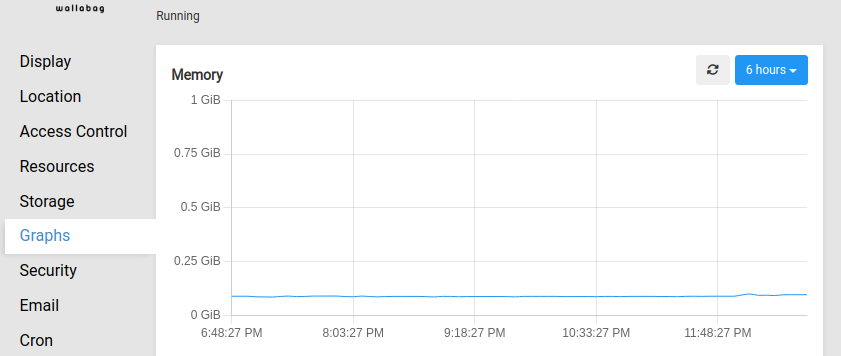

I have no idea why the allocated memory is not reflected in the memory graph under Resources: the app keeps crashing, yet the graph shows nothing close to hitting the limit. When I add a link from the Wallabag mobile client to the Wallabag server, the server sometimes simply refuses it (500 error).

So I added my case to a Wallabag issue at https://github.com/wallabag/android-app/issues/905, and someone pointed out, based on my application logs, that the PHP process was capped at 256 MB despite the 1 GB I had allocated. The PHP settings seem to be hardcoded (https://git.cloudron.io/cloudron/wallabag-app/-/blob/master/Dockerfile#L41), and I suspect this could be the cause of these issues, but I'm not sure.
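To put the numbers in this post side by side (a 256 MB PHP `memory_limit` inside a 1 GB Cloudron allocation), PHP's shorthand sizes can be converted to bytes and compared. This is a minimal sketch: `php_size_to_bytes` and `compare_limits` are hypothetical helpers, not part of PHP or Cloudron. It assumes PHP's shorthand convention, where K, M and G are powers of 1024 and `-1` means no limit, and it applies the same convention to the Cloudron figure, which may not match how the dashboard rounds.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a PHP shorthand size ("256M", "1G", "512K",
# or a plain byte count) to bytes. K/M/G are treated as powers of 1024,
# and "-1" (PHP's "no limit") is passed through unchanged.
php_size_to_bytes() {
  local value="${1// /}"            # drop stray spaces
  local number="${value%[KkMmGg]}"  # strip one trailing unit suffix, if any
  local unit="${value:${#number}}"  # the stripped suffix (may be empty)
  case "$unit" in
    K|k) echo $(( number * 1024 )) ;;
    M|m) echo $(( number * 1024 * 1024 )) ;;
    G|g) echo $(( number * 1024 * 1024 * 1024 )) ;;
    *)   echo $(( number )) ;;
  esac
}

# Hypothetical helper: report the PHP memory_limit as a share of the memory
# allocated to the app. Both arguments use PHP-style shorthand.
compare_limits() {
  local php_bytes alloc_bytes
  php_bytes=$(php_size_to_bytes "$1")
  alloc_bytes=$(php_size_to_bytes "$2")
  if (( php_bytes < 0 )); then
    echo "PHP memory_limit is unlimited; only the app allocation applies"
  else
    echo "PHP memory_limit is $(( php_bytes * 100 / alloc_bytes ))% of the app allocation"
  fi
}
```

For example, `compare_limits 256M 1G` prints `PHP memory_limit is 25% of the app allocation`, and `compare_limits 768M 1G` reports 75%.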

Should we make these parameters more dynamic? Or is there something else that should be investigated first?

And why does the resources graph seem incorrect?

I'm now overriding the PHP memory limit in File Manager and will keep you posted.
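To confirm that an override like this is picked up, the PHP CLI can list the ini files it loads and print the effective values. A minimal sketch, assuming it is run from the app's terminal with `php` on the PATH; `show_php_limits` is a hypothetical helper, and the directives beyond `memory_limit` are included as an assumption because they commonly matter for large saves and uploads. Caveat: this queries the CLI, and Apache's PHP module can be configured with a different php.ini, so treat the result as a strong hint rather than proof of what web requests see.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show which ini files the PHP CLI loads and the
# effective values of a few limits relevant to large saves.
show_php_limits() {
  # --ini lists the main php.ini plus any additional scanned .ini files,
  # which can explain default values that reappear after a restart.
  php --ini
  # ini_get() returns the value PHP actually uses after all ini files are read.
  php -r 'foreach (["memory_limit", "upload_max_filesize", "post_max_size", "max_execution_time"] as $k) {
    echo $k, " = ", ini_get($k), PHP_EOL;
  }'
}
```

Running `show_php_limits` after each restart shows whether the override survived.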

EDIT: How can I double-check from the terminal whether the setting is taken into account? Some default values still appear in php.ini after restarts.

-

Thank you @robi, so far it seems consistent with my new settings.

    root@b1d427fa-7054-4a74-b56f-88b51113fa2c:/app/code/wallabag# php -ini | grep memory
    memory_limit => 768M => 768M
    Collecting memory statistics => No
    opcache.memory_consumption => 128 => 128
    opcache.preferred_memory_model => no value => no value
    opcache.protect_memory => Off => Off

-

@MorganGeek Maybe increase the memory to, say, 2 GB? Note that since these are Apache apps, the 1 GB (of which only 500 MB is RAM) is shared between the Apache processes. If there are 5 Apache processes, that doesn't leave much for each one. Many app frameworks also store the full file upload in memory before saving it somewhere.
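To see how much memory the Apache workers are actually holding, the resident set size (RSS) of each process can be summed. A minimal sketch, assuming procps `ps` and that the workers are named `apache2` (the Debian/Ubuntu name; other images may use `httpd`); `apache_memory_summary` is a hypothetical helper. Summing RSS overcounts, because pages shared between workers are counted once per process, so treat the total as an upper estimate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count processes with a given command name and sum
# their resident set size. Defaults to "apache2".
apache_memory_summary() {
  local name="${1:-apache2}"
  # -C selects by command name; "rss=" prints RSS in KiB with no header.
  # When nothing matches, ps prints nothing and the totals stay at 0.
  ps -C "$name" -o rss= | awk '
    { count++; kib += $1 }
    END { printf "%d processes, %d MiB RSS\n", count, kib / 1024 }'
}
```

Usage: `apache_memory_summary` for the default name, or `apache_memory_summary httpd`.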

-

Thanks @girish. Needing 2 GB seems very heavy to me for something like Wallabag, which shouldn't be doing much except when adding links. Or maybe I misunderstand how the app works, but if it's a memory hog I would look for an alternative to Wallabag.

-

@MorganGeek The memory limit is just an upper bound for the app (https://docs.cloudron.io/apps/#memory-limit). The app probably needs that much only in bursts; when it isn't using the memory, it's available to other apps. It's not "reserved" for the app as such.
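From inside the app container, the limit and usage the kernel actually enforces can be read from the cgroup filesystem, which helps when the dashboard graph and the crashes don't seem to agree. A sketch assuming the container's own cgroup is mounted at `/sys/fs/cgroup`, as Docker typically does; the file names differ between cgroup v2 (`memory.max`, `memory.current`) and cgroup v1 (`memory/memory.limit_in_bytes`, `memory/memory.usage_in_bytes`), and `cgroup_memory` is a hypothetical helper. Note also that a PHP request hitting its own `memory_limit` fails inside PHP with an "Allowed memory size ... exhausted" error long before the container limit is reached, which may be why the graph never shows the app near 1 GB.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the memory limit and current usage of the cgroup
# mounted at /sys/fs/cgroup. Values are in bytes; "max" (v2) means no limit.
cgroup_memory() {
  local base=/sys/fs/cgroup
  if [ -r "$base/memory.max" ]; then
    # cgroup v2 (unified hierarchy)
    echo "limit: $(cat "$base/memory.max")"
    echo "usage: $(cat "$base/memory.current")"
  elif [ -r "$base/memory/memory.limit_in_bytes" ]; then
    # cgroup v1 (separate memory controller hierarchy)
    echo "limit: $(cat "$base/memory/memory.limit_in_bytes")"
    echo "usage: $(cat "$base/memory/memory.usage_in_bytes")"
  else
    echo "no cgroup memory files found under $base"
  fi
}
```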

-

Thank you for the clarification! I'll probably abandon Wallabag anyway in favor of lighter apps, as most of the features I like in Wallabag seem to cause crashes or are poorly optimized. That would be an opportunity for me to try porting an app to Cloudron. Have a great day!

Wallabag clearly doesn't seem to be tuned at all. A link/bookmark management app should be as snappy as Shaarli (just saving plain links, or at least offering the option to).

Saving a link to a PDF or to a web page should be fast.

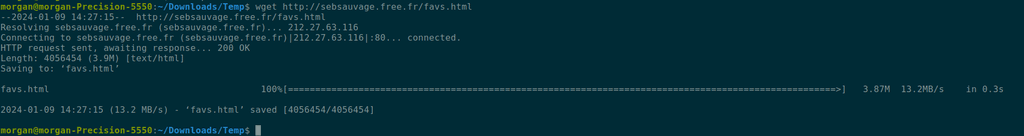

Example with http://sebsauvage.free.fr/favs.html:

A curl request run locally is super fast.

But Wallabag is unable to save the same URL and just times out after 200 seconds.
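To put numbers on the curl-versus-Wallabag comparison, curl can report the status code, download size and total time of a fetch through its `-w` (`--write-out`) option. A minimal sketch; `time_fetch` is a hypothetical helper, and the `--max-time 200` cap is an assumption chosen to mirror the roughly 200-second timeout seen in Wallabag.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch a URL, discard the body, and report what curl saw.
time_fetch() {
  local url="$1"
  # -s hides progress, -L follows redirects, -o /dev/null discards the body,
  # --max-time gives up after 200 s, -w prints the listed transfer variables.
  curl -sL -o /dev/null --max-time 200 \
    -w 'http_code=%{http_code} size=%{size_download} time=%{time_total}s\n' \
    "$url"
}
```

Usage: `time_fetch http://sebsauvage.free.fr/favs.html`, run both locally and from the server's terminal, shows whether the slowness comes from the network fetch or from Wallabag's own processing.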

I liked the app, so it's really a pity.

-

I frequently run into pages that take longer and longer to load into Wallabag. There is also the PDF I mentioned in the GitHub issue, which takes quite some time to load. I would love to try the other open-source bookmark management tools, including those available on Cloudron, and see how they compare on the same URLs.

I'll also follow your advice and open an issue upstream (though they have hundreds of issues that have been open for a long time, including several slowness/performance issues open for 7 years, so I'm not sure they are interested in solving new performance issues).

-

@MorganGeek Totally unrelated to Cloudron, but related to Linux servers hosting PHP applications: I ran into an issue where a Laravel application failed because of a lack of MySQL resources. While researching the specific error message, I found, many layers deep, a comment about an insufficient swap file. The server had plenty of RAM available, so this seemed unlikely, but my swap file turned out to be small and almost full (I'm not even sure why it was needed at all). I increased the swap file size and, miraculously, the problem disappeared; the application is now running. I would check the swap file and its utilization. I don't know why this seemed to matter, but I wound up doing this across all my Linux servers. Many had 512 MB swap files and one had no swap file at all, all from legacy images at various cloud providers. This may be worth checking and, if your swap file is not as large as your RAM, increasing its size.
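A quick way to follow this advice on a generic Linux host (not inside an app container) is to read the swap totals from `/proc/meminfo`; `swapon --show` and `free -h` give the same picture. This is a hypothetical sketch: `swap_summary` only reads, while `make_swapfile` shows the common steps for adding a swap file, which need root and are not run here. Adapt them to your system: `fallocate`-created swap files are not supported on every filesystem (`dd` is the usual fallback), and the swap file needs an `/etc/fstab` entry to survive a reboot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report total and free swap from /proc/meminfo (kB -> MiB).
swap_summary() {
  awk '/^SwapTotal:/ { total = $2 } /^SwapFree:/ { free = $2 }
       END { printf "SwapTotal: %d MiB, SwapFree: %d MiB\n", total / 1024, free / 1024 }' /proc/meminfo
}

# Hypothetical helper: create and enable a swap file. Requires root; not called here.
# Usage: make_swapfile /swapfile 2G
make_swapfile() {
  local path="$1" size="$2"
  fallocate -l "$size" "$path" &&  # reserve the space on disk
    chmod 600 "$path" &&           # restrict access to the owner (root)
    mkswap "$path" &&              # write the swap signature
    swapon "$path"                 # enable it until the next reboot
}
```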