Voicechat on Cloudron - A fast, fully local AI Voicechat using WebSockets


- Main Site:

- Git: https://github.com/lhl/voicechat2

- Licence: Apache 2.0

- Demo: https://private-user-images.githubusercontent.com/2581/353759826-498ce979-18b6-4225-b0da-01b6910e2bd7.webm

On a 7900-class AMD RDNA3 card, voice-to-voice latency is in the 1-second range using:

- Whisper large-v2 (Q5)

- Llama 3 8B (Q4_K_M)

- tts_models/en/vctk/vits (Coqui TTS default VITS models)

On a 4090, using Faster Whisper with faster-distil-whisper-large-v2, latency can be cut to as low as 300 ms.
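To make "voice-to-voice latency" concrete: it is the time from the end of the user's speech to the reply audio, spanning speech-to-text (Whisper), response generation (Llama 3) and text-to-speech (Coqui VITS). Below is a minimal, stdlib-only sketch of timing each stage of one turn. The functions `transcribe`, `generate_reply` and `synthesize` are hypothetical stubs, not voicechat2's actual code or API. This sketch runs the stages strictly in sequence, so its total is the sum of the stages; a design that streams and overlaps stages would report less than that sum.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical stub stages (not voicechat2's real API). In a real setup these
# would call Whisper (speech-to-text), Llama 3 8B (reply) and Coqui VITS (TTS).
def transcribe(audio: bytes) -> str:
    return "hello there"

def generate_reply(text: str) -> str:
    return f"You said: {text}"

def synthesize(text: str) -> bytes:
    return text.encode("utf-8")

def timed(stage: Callable, arg, timings: Dict[str, float], name: str):
    """Run one stage and record its wall-clock duration in seconds."""
    start = time.perf_counter()
    result = stage(arg)
    timings[name] = time.perf_counter() - start
    return result

def voice_turn(audio: bytes) -> Tuple[bytes, Dict[str, float]]:
    """One sequential voice-to-voice turn: ASR -> LLM -> TTS."""
    timings: Dict[str, float] = {}
    text = timed(transcribe, audio, timings, "asr")
    reply = timed(generate_reply, text, timings, "llm")
    speech = timed(synthesize, reply, timings, "tts")
    # Sequential pipeline: total latency is simply the sum of the stages.
    timings["total"] = timings["asr"] + timings["llm"] + timings["tts"]
    return speech, timings

if __name__ == "__main__":
    speech, timings = voice_turn(b"\x00" * 16000)
    for stage, seconds in timings.items():
        print(f"{stage}: {seconds * 1000:.2f} ms")
```

Swapping a stub for a real model call (for example a Faster Whisper transcriber) shows which stage dominates the budget on a given GPU.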


@LoudLemur What advantage would this have over Open WebUI? As far as I can see, it can also do voice chat, among other things, and it uses the same technologies, Whisper and Llama 3.


Hi, micmc!

This is what Llama 3.1 405b has to say about the two:

"VoiceChat (lhl/voicechat2):

Features:

Local AI voice chat system

Uses WebSockets for communication

Fully local (voice-to-voice) implementation

Uses Whisper large-v2 (Q5) for speech recognition

Utilizes Llama 3 8B (Q4_K_M) for language processing

Employs tts_models/en/vctk/vits (Coqui TTS default VITS models) for text-to-speech

Pros:

Fast performance: Voice-to-voice latency is in the 1-second range on high-end GPUs

Fully local: Doesn't require internet connection for core functionality

Open-source: Available on GitHub for customization and community contributions

Utilizes state-of-the-art AI models for speech recognition and language processing

Cons:

Requires powerful hardware: Optimal performance seems to be on high-end GPUs (e.g., an AMD RDNA3 card)

May have limited features compared to more established voice chat solutions

Potentially complex setup for non-technical users

Limited documentation available

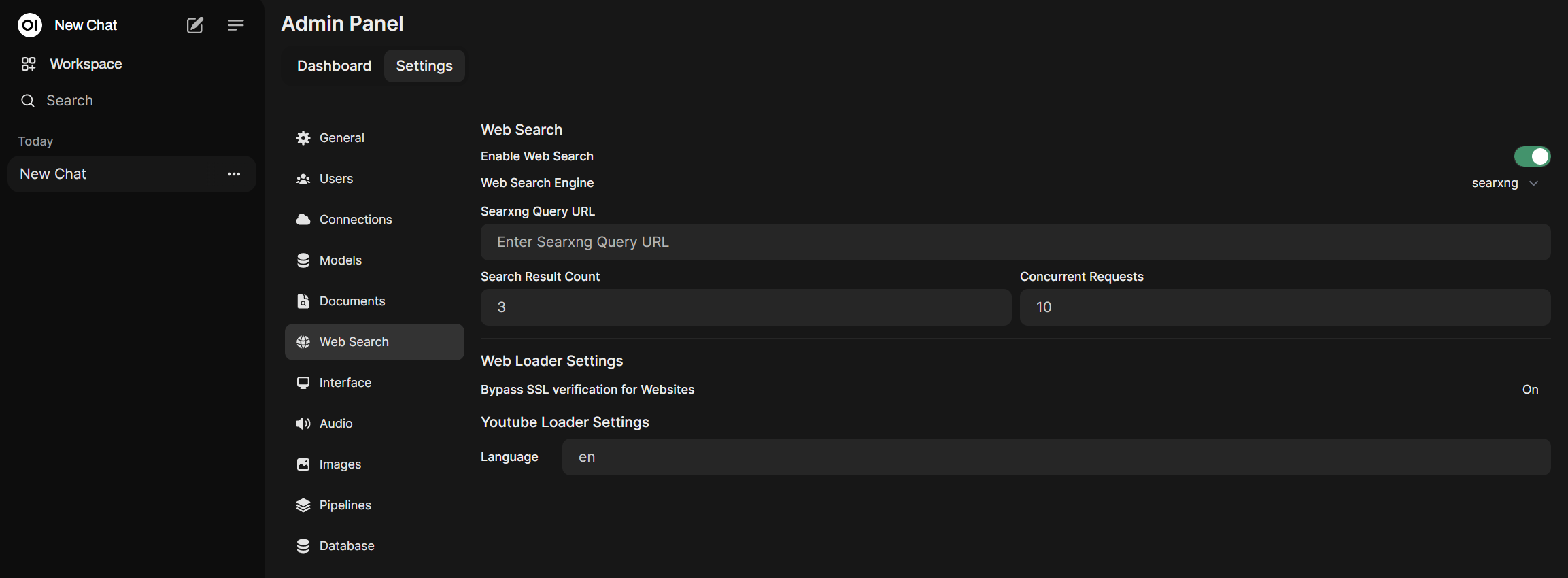

OpenWebUI:

Features:

Web-based user interface for AI interactions

Docker and Kubernetes support for easy deployment

Integration with OpenAI-compatible APIs

Customizable OpenAI API URL

Support for both Ollama and CUDA-tagged images

Functions and pipeline support for extended capabilities

Pros:

Effortless setup using Docker or Kubernetes

Flexible API integration (OpenAI, LMStudio, GroqCloud, Mistral, etc.)

Web-based interface for easy access

Extensible with functions and pipelines

Active development and community support

Supports multiple deployment options (pip, Docker, Kubernetes)

Cons:

Documentation may be lacking in some areas, making integration challenging

Primarily focused on text-based interactions, unlike VoiceChat's voice-to-voice approach

May require more setup for voice capabilities (if needed)

Potential learning curve for utilizing all features and integrations

Comparison:

Focus:

VoiceChat is specifically designed for voice-to-voice AI interactions.

OpenWebUI is a more general-purpose web interface for AI interactions, primarily text-based.

Deployment:

VoiceChat seems to require local installation and configuration.

OpenWebUI offers multiple deployment options, including Docker and Kubernetes, making it potentially easier to set up and scale.

Flexibility:

VoiceChat is focused on local, offline use with specific AI models.

OpenWebUI offers more flexibility in terms of API integrations and customization options.

User Interface:

VoiceChat likely has a minimal interface focused on voice interactions.

OpenWebUI provides a web-based interface that can be accessed from various devices.

Community and Development:

Both are open-source, but OpenWebUI appears to have more active development and a larger community.

Use Case:

VoiceChat is ideal for users needing offline, voice-based AI interactions with low latency.

OpenWebUI is better suited for users who need a flexible, web-based interface for various AI interactions and integrations.

In conclusion, the choice between VoiceChat and OpenWebUI depends on the specific needs of the user. VoiceChat is more specialized for voice-based AI interactions, while OpenWebUI offers a more versatile platform for general AI interactions with easier deployment options. Users prioritizing voice capabilities and offline use might prefer VoiceChat, while those needing a flexible, web-based solution with various integrations might find OpenWebUI more suitable."