-

Hi all,

I've been playing around with data visualisation options for some work. Right now it's all "somewhere on the internet" using different dataviz services.

I know Cloudron packages a lot of different dataviz tools, but I (possibly mistakenly) think they're all DB-driven: get your data into a DB, then the tool can visualise it.

Most of the data sources I'm looking at are file-based (CSV etc.). I know this isn't so efficient, but the data isn't real time, e.g. world population to date, average global income, etc.

(Also, I've kept away from DBs thus far because they scare the crap out of me. I had an Exchange DB failure and data loss when I was a sysadmin about 15 years ago, and it was the worst experience of my life!)

I'm looking for some advice on the "engineering" side of dataviz.

What I'm looking for

Recommendations on which packages currently available on Cloudron I can use to achieve the following:

I can put data sources (CSV, TSV, XLS) somewhere hosted on Cloudron, then use one of the visualisation packages to visualise that data.

I may not be explaining my use case clearly enough, so will clarify as best I can.

Thanks!

-

@ei8fdb Kaggle is good as the data there is used for Machine Learning:

https://www.kaggle.com/datasets

DataUSA:

https://datausa.io/

Politics:

https://data.fivethirtyeight.com/

If you can abide proprietary, Big Data, Google:

https://www.google.com/publicdata/directory

The census office provides updates periodically. The UK's came out a few months ago, so is quite current.

-

Hi @LoudLemur thanks for the data source links.

What I'm looking for is Cloudron packages I can use.

-

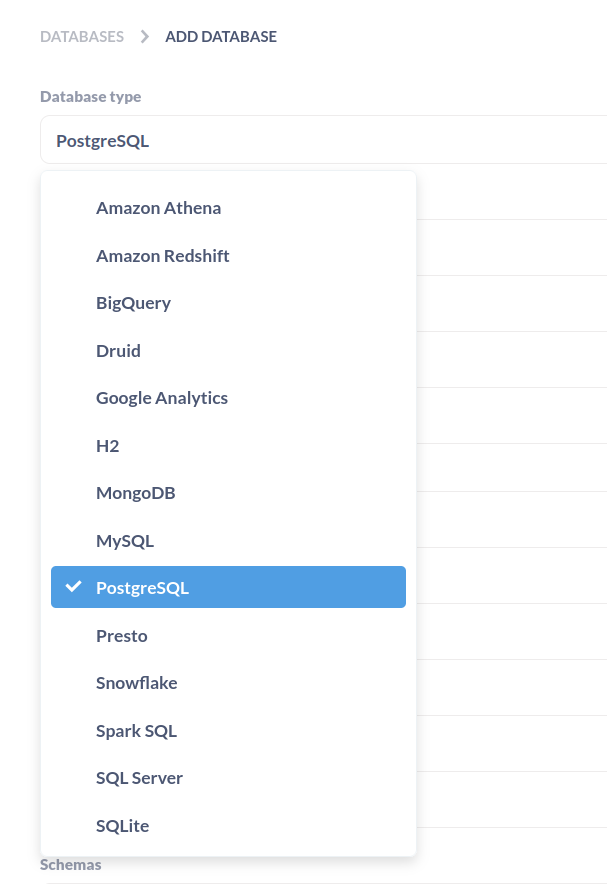

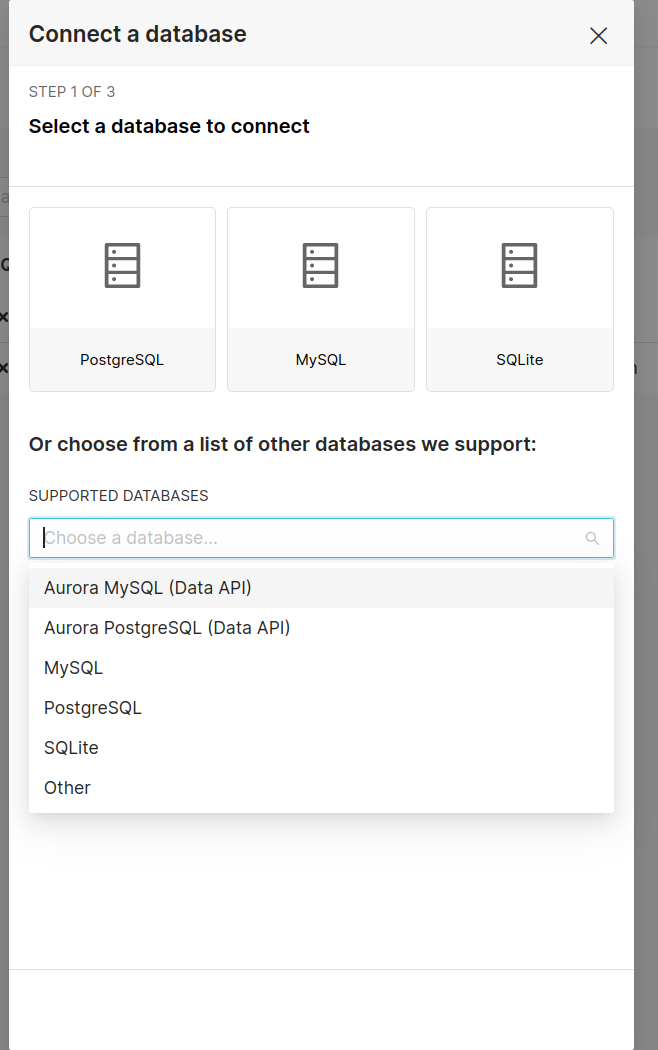

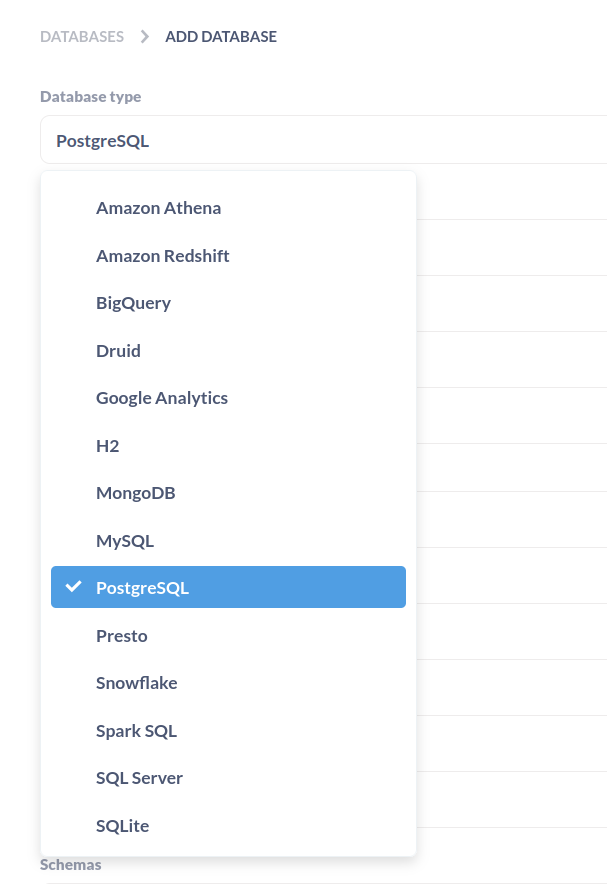

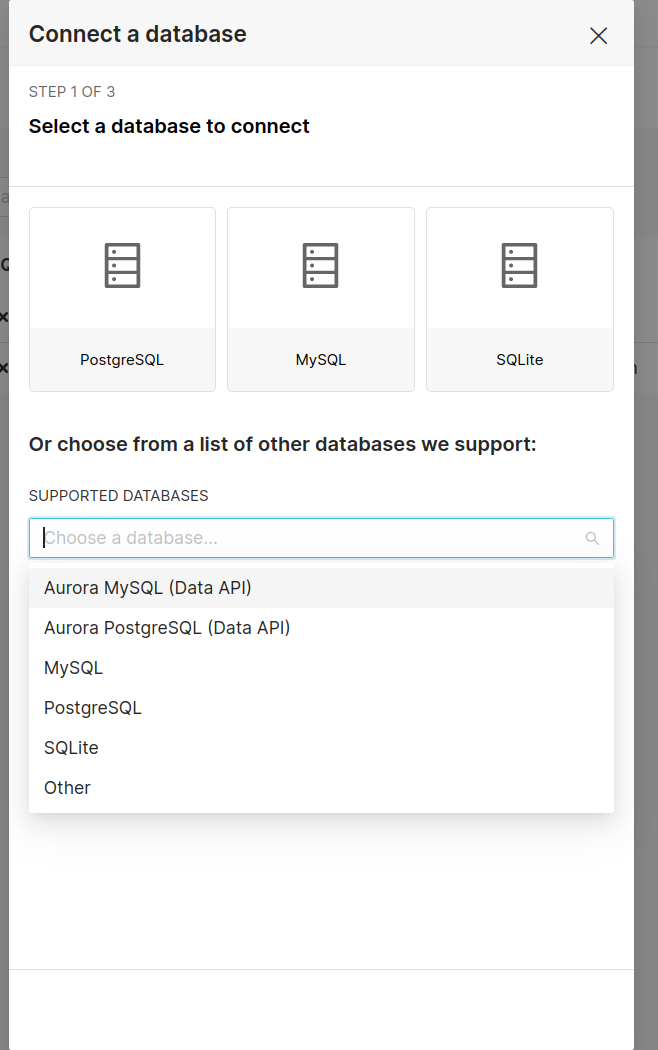

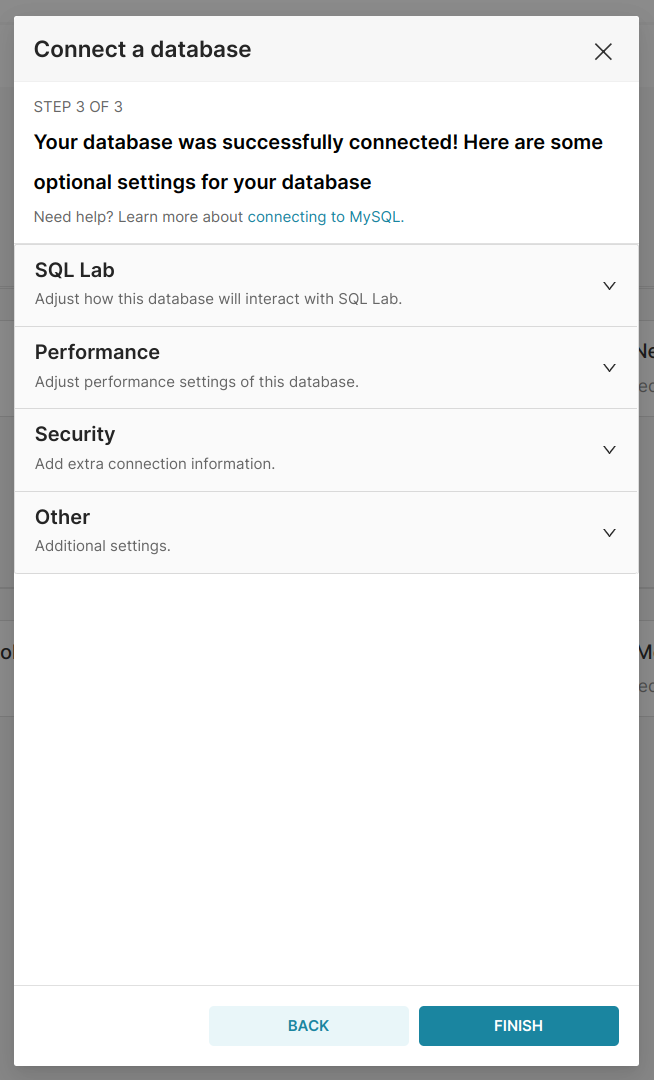

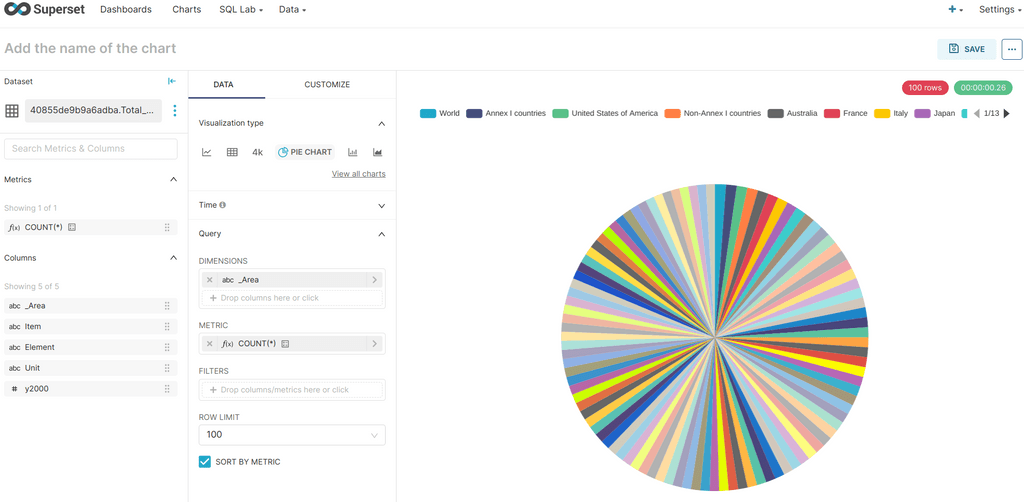

@ei8fdb To be fair, this is what Metabase supports by default:

And this is superset

Without community add-ons (such as CSV plugins), you need to find a way to get your data into a supported database. MySQL and Postgres are the preferred choices if you want to store your data on your local Cloudron instance.

To start with (just to try out the Cloudron apps), open an existing Cloudron app from the dashboard and look in its env file (if there is no env file, open the terminal and run 'env' on the console). Look for the credentials for the database addon and use that information in Superset or Metabase.
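A minimal sketch of how those credentials could be turned into a connection string, assuming the app uses the MySQL addon and exposes variables named `CLOUDRON_MYSQL_USERNAME`, `CLOUDRON_MYSQL_PASSWORD`, `CLOUDRON_MYSQL_HOST`, `CLOUDRON_MYSQL_PORT` and `CLOUDRON_MYSQL_DATABASE`. Those names are an assumption here, so check them against what `env` actually prints. The helper name `mysql_uri_from_env` is made up for illustration.

```python
import os
from urllib.parse import quote_plus


def mysql_uri_from_env(env=os.environ):
    """Build a SQLAlchemy-style MySQL URI from database addon variables.

    The variable names below are assumptions; compare them with the
    output of `env` in the app's terminal before relying on them.
    """
    user = env["CLOUDRON_MYSQL_USERNAME"]
    password = env["CLOUDRON_MYSQL_PASSWORD"]
    host = env["CLOUDRON_MYSQL_HOST"]
    port = env.get("CLOUDRON_MYSQL_PORT", "3306")  # fall back to MySQL's default port
    database = env["CLOUDRON_MYSQL_DATABASE"]
    # Percent-encode the credentials so characters such as '@' or '/'
    # cannot be mistaken for URI delimiters.
    return f"mysql://{quote_plus(user)}:{quote_plus(password)}@{host}:{port}/{database}"
```

Calling `mysql_uri_from_env()` inside the app's terminal would give a string of the kind Superset asks for as a SQLAlchemy URI. Metabase asks for host, port, user, password and database name as separate fields, so there the individual values can be copied over directly.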

Later, install the LAMP app (which has the MySQL addon enabled) and try to figure out how to import your data directly into the database. https://docs.cloudron.io/apps/lamp/

One of my favorite tools for cleaning messy data, https://openrefine.org/, has a SQL exporter (I have never used that part myself): https://openrefine.org/docs/manual/exporting#sql-statement-exporter

Maybe that will help.

-

SQLite works very well for all this. Our database is MySQL but we don't want to give the dataviz apps access to the database, if we can avoid it. So, we have a script that creates a sqlite database with the values we need from MySQL. And then use sqlite database in the apps.
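For file-based sources the same idea can be sketched with Python's standard library alone. This is only an illustration, not the script mentioned above: the function name `csv_to_sqlite` and the file, database and table names in the usage note are made up, and every column is stored as untyped text taken straight from the CSV header.

```python
import csv
import sqlite3


def quote_ident(name):
    """Quote a table or column name for SQLite (double quotes, doubled inside)."""
    return '"' + name.replace('"', '""') + '"'


def csv_to_sqlite(csv_path, db_path, table):
    """Load a CSV file with a header row into a fresh SQLite table.

    Returns the number of rows inserted. Rows whose length does not
    match the header are skipped rather than guessed at.
    """
    with open(csv_path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader)
        rows = [row for row in reader if len(row) == len(header)]

    columns = ", ".join(quote_ident(c) for c in header)
    placeholders = ", ".join("?" for _ in header)
    con = sqlite3.connect(db_path)
    try:
        with con:  # commits on success, rolls back on error
            con.execute(f"DROP TABLE IF EXISTS {quote_ident(table)}")
            con.execute(f"CREATE TABLE {quote_ident(table)} ({columns})")
            con.executemany(
                f"INSERT INTO {quote_ident(table)} VALUES ({placeholders})", rows
            )
    finally:
        con.close()
    return len(rows)
```

Something like `csv_to_sqlite("population.csv", "dataviz.db", "population")` would then produce a single `.db` file to point a dataviz app at. Because the values arrive as text, numeric columns may need a cast in the queries.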


-

Thanks for the helpful answer @luckow.

@luckow said in Lots of dataviz apps, but what to use for data sources?:

you need to find a way to transform your data to a supported database

Am I right that all the dataviz packages available on Cloudron require a database? This isn't a criticism, I only want to make sure I've not missed something.

If my assumption is correct, I guess the next question is:

From the packages/options available on Cloudron now, what is the easiest way to create a DB of whatever type (MySQL, Postgres, SQLite, whatever)?

Any suggestions are welcome!

And yes, on OpenRefine: this is one of the tools I am using. I would like the data I'm visualising to be available online, and possibly interactive in some way, hence my search for something available via Cloudron.

-


@girish said in Lots of dataviz apps, but what to use for data sources?:

SQLite works very well for all this.

Thanks @girish. It is this step, creating a database, that I'm unfamiliar with.

Can I use a LAMP stack app to create a DB server, as (I think) @luckow was suggesting? Sorry for the very basic question!

-

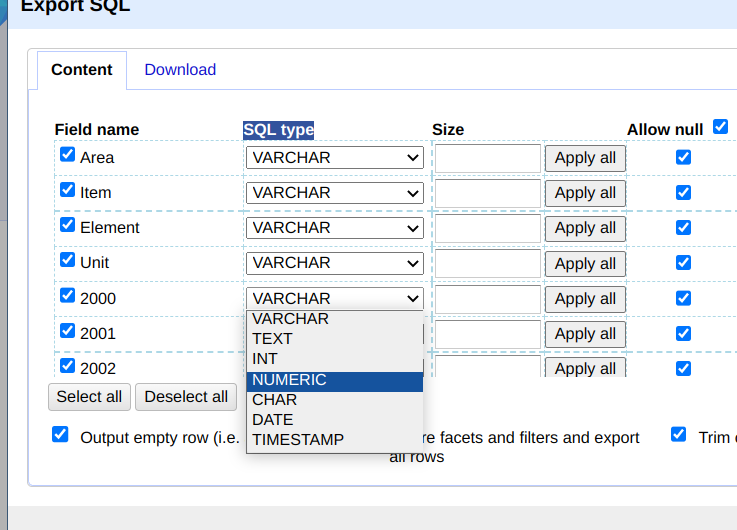

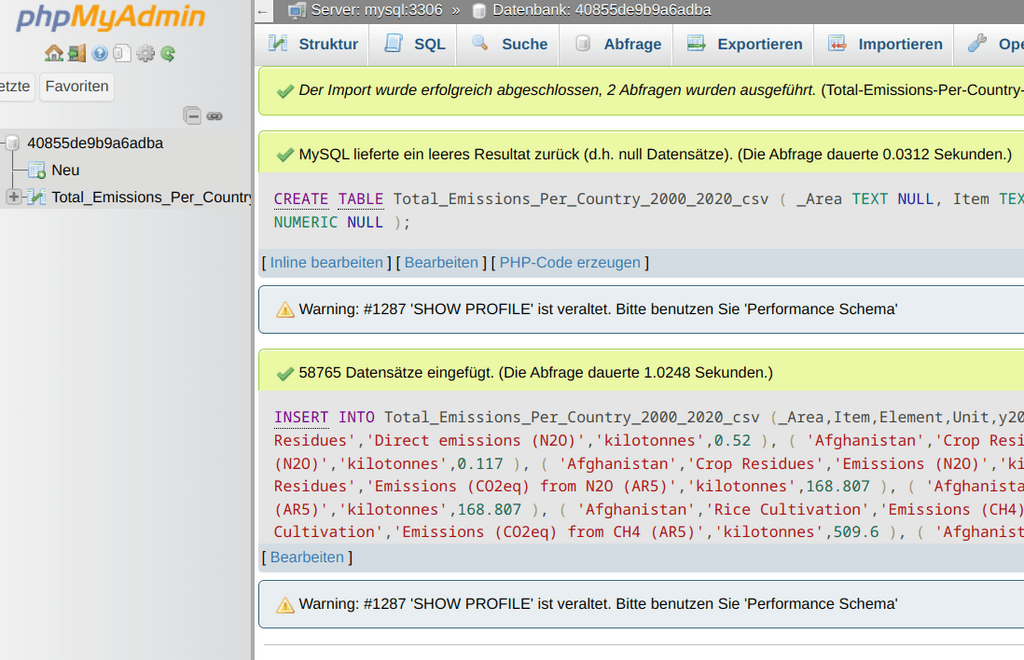

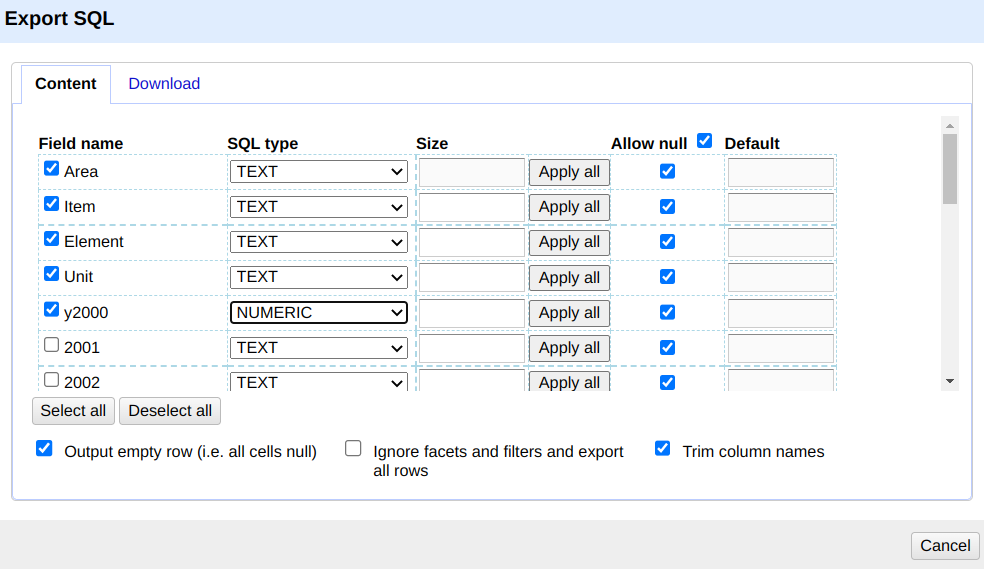

ok. a quick try.

- Download https://www.kaggle.com/datasets/justin2028/total-emissions-per-country-2000-2020

- Install LAMP (important: give more RAM)

- Import the CSV into OpenRefine

- Export SQL from OpenRefine

- Import the SQL export into LAMP via phpMyAdmin

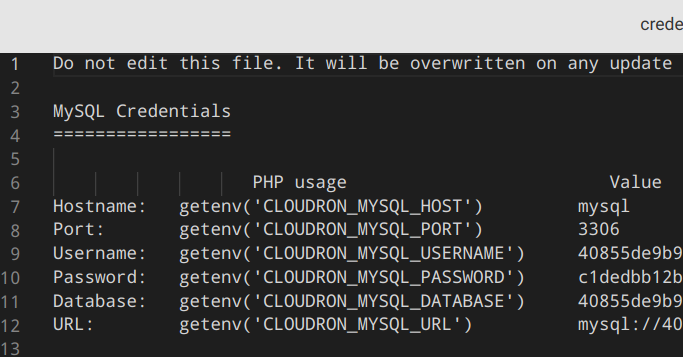

- fire up the filemanager in lamp and look for credentials.txt

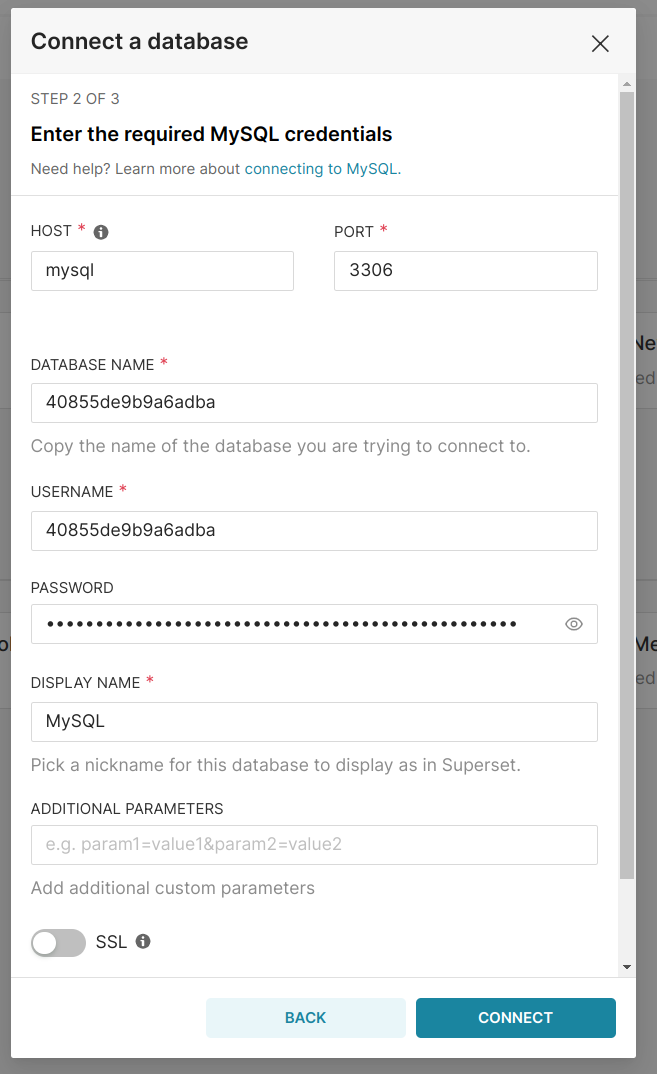

- Install Superset or Metabase.

- In my case, I added the MySQL credentials to Superset

- a click on connect brings

- et voilà

Without being a pro in mysql, this worked for me
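If OpenRefine isn't to hand, the "CSV to SQL file" step can be roughly sketched in plain Python. This is a hand-rolled stand-in, not what OpenRefine's exporter produces: the function names are made up, every column is declared as `TEXT`, and empty cells are written as `NULL`. The resulting text can be saved to a `.sql` file and imported through phpMyAdmin in the same way as above.

```python
import csv


def quote_ident(name):
    """Quote a MySQL identifier with backticks (a literal backtick is doubled)."""
    return "`" + name.replace("`", "``") + "`"


def quote_value(value):
    """Turn a CSV cell into a MySQL literal: NULL for empty, else a quoted string."""
    if value == "":
        return "NULL"
    # Double backslashes and single quotes so they survive MySQL's string parsing.
    return "'" + value.replace("\\", "\\\\").replace("'", "''") + "'"


def csv_to_sql(csv_path, table):
    """Return CREATE TABLE and INSERT statements for a CSV file with a header row."""
    with open(csv_path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader)
        columns = ", ".join(f"{quote_ident(c)} TEXT" for c in header)
        statements = [f"CREATE TABLE {quote_ident(table)} ({columns});"]
        for row in reader:
            values = ", ".join(quote_value(v) for v in row)
            statements.append(
                f"INSERT INTO {quote_ident(table)} VALUES ({values});"
            )
    return "\n".join(statements) + "\n"
```

phpMyAdmin also has its own CSV import, so a script like this is mainly useful when the data needs some cleaning or reshaping before it goes into the database.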

-

N nebulon moved this topic from Apps on
