Firstly, thank you @girish and @martinv for being far more useful than a compass at the South Pole! Still nothing useful from the Rocket.Chat forum.

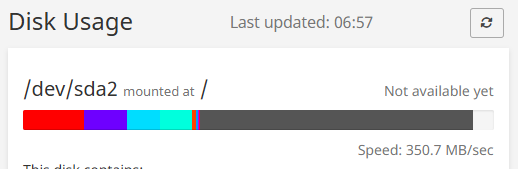

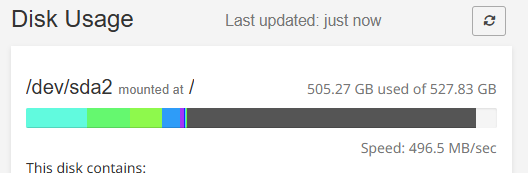

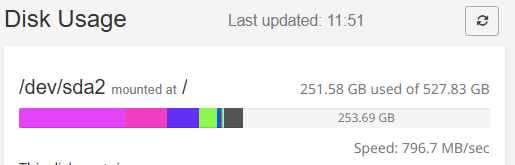

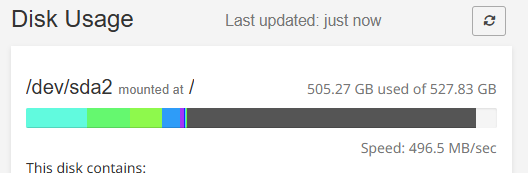

I have set up that cron task to run every 15 minutes, since our log file seemed to be growing very quickly. (After we freed up 40 GB of disk space from other apps yesterday, our Cloudron server filled up again overnight.) However, the cron task seems to be a workaround rather than a solution...

Also, in very strange news, the rocketchat_apps_logs.bson file no longer seems to be growing, even between cron task runs. I'm still not sure what's in that data (I haven't been able to look at it yet), but I'm guessing it's log data for third-party Rocket.Chat apps.
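
In case it helps with looking inside that file without restoring it into MongoDB: a .bson file written by mongodump is BSON documents stored back to back, and each BSON document starts with a 4-byte little-endian length that covers the whole document. A minimal stdlib-only sketch that relies on that layout can count the documents and report their sizes without decoding them. The helper name `summarize_bson_dump` is hypothetical, and this only answers "how many entries, how big", not what is in them.

```python
import os
import struct
import sys


def summarize_bson_dump(path):
    """Count the documents in a mongodump-style .bson file (hypothetical helper).

    Assumes the file is BSON documents stored back to back, each starting with
    a little-endian int32 giving the document's total size in bytes (including
    those 4 bytes and the trailing 0x00 terminator). Document bodies are
    skipped rather than parsed, so even a very large file is cheap to scan.
    """
    count = 0
    offset = 0
    largest = 0
    with open(path, "rb") as f:
        while True:
            header = f.read(4)
            if not header:
                break  # clean end of file
            if len(header) < 4:
                raise ValueError(f"truncated length prefix at offset {offset}")
            (size,) = struct.unpack("<i", header)
            if size < 5:
                raise ValueError(f"invalid document size {size} at offset {offset}")
            # Skip to the last byte of the document and check its terminator.
            f.seek(size - 5, os.SEEK_CUR)
            if f.read(1) != b"\x00":
                raise ValueError(f"truncated or malformed document at offset {offset}")
            count += 1
            offset += size
            largest = max(largest, size)
    return {"documents": count, "bytes": offset, "largest_document": largest}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 summarize_bson.py /path/to/rocketchat_apps_logs.bson
    print(summarize_bson_dump(sys.argv[1]))
```

To see the actual contents, a few documents could then be decoded with the bson package that ships with PyMongo.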

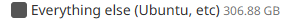

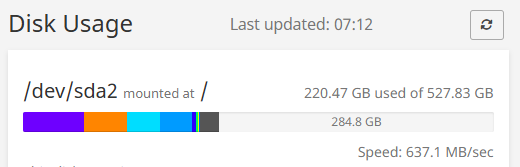

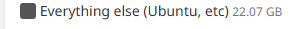

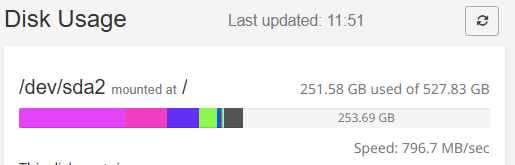

Something else is now filling up the disk very quickly, about 1 GB every few minutes. I haven't figured out what yet, but it does seem to coincide with Rocket.Chat running.
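
Since the source of the new growth isn't known yet, one way to narrow it down is to measure which directories grow between two snapshots taken a minute or so apart. Below is a minimal stdlib-only sketch of that idea. The function names are hypothetical, and the directory to scan is whatever you suspect (or / for everything on that filesystem). Note that it only sees files that still have a directory entry, so it would miss the kind of usage that du couldn't account for, described further down.

```python
import os
import sys
import time


def _on_device(path, dev):
    """True if path (not following symlinks) lives on filesystem `dev`."""
    try:
        return os.lstat(path).st_dev == dev
    except OSError:
        return False


def dir_sizes(root, depth=2):
    """Allocated bytes under root, grouped by directory up to `depth` levels deep.

    Counts st_blocks * 512 (allocated space, roughly what du reports, though
    hard-linked files are counted once per link) and, like `du -x`, does not
    cross into other mounted filesystems.
    """
    root = os.path.abspath(root)
    root_dev = os.lstat(root).st_dev
    sizes = {}
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune subdirectories that sit on a different mount.
        dirnames[:] = [d for d in dirnames
                       if _on_device(os.path.join(dirpath, d), root_dev)]
        rel = os.path.relpath(dirpath, root)
        parts = [] if rel == "." else rel.split(os.sep)
        key = os.path.join(root, *parts[:depth])
        for name in filenames:
            try:
                st = os.lstat(os.path.join(dirpath, name))
            except OSError:
                continue  # file vanished between listing and stat
            sizes[key] = sizes.get(key, 0) + st.st_blocks * 512
    return sizes


def fastest_growing(root, interval=60, depth=2, top=10):
    """Snapshot dir_sizes twice, `interval` seconds apart; return the top growers."""
    before = dir_sizes(root, depth)
    time.sleep(interval)
    after = dir_sizes(root, depth)
    deltas = {k: after.get(k, 0) - before.get(k, 0)
              for k in before.keys() | after.keys()}
    return sorted(deltas.items(), key=lambda kv: kv[1], reverse=True)[:top]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 growth.py /some/data/dir [seconds]
    wait = int(sys.argv[2]) if len(sys.argv) > 2 else 60
    for path, delta in fastest_growing(sys.argv[1], interval=wait):
        print(f"{delta / 1e6:12.1f} MB  {path}")
```

Running it while Rocket.Chat is up, and again with it stopped, would show whether the growth really follows the app.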

I noticed some other strange things and potentially useful notes while working through it:

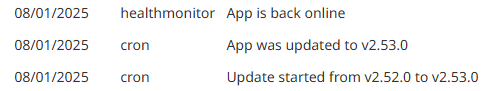

Firstly, the disk space problem must be very new or we would've seen it ages ago.

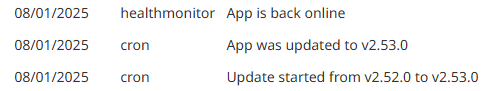

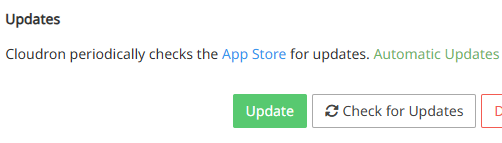

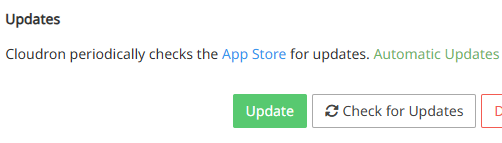

I see there was an auto-update to the 2.53.0 package last week.

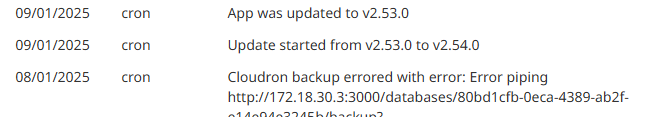

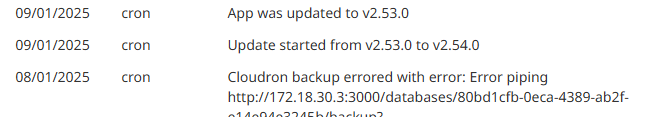

Then the next day, an update to 2.54.0... Or was there?! (I think there was.)

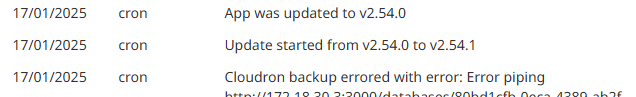

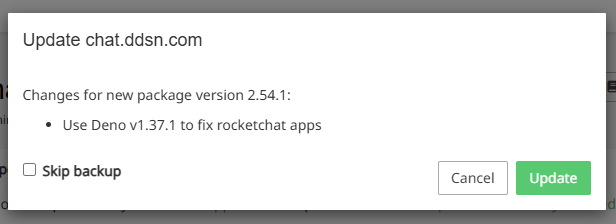

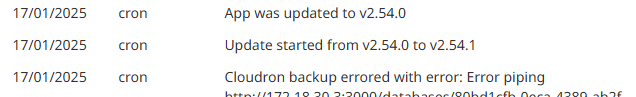

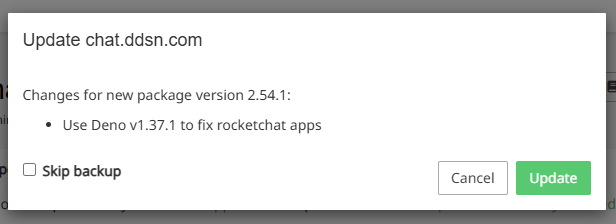

I'm now seeing this behavior on each update, and it wasn't happening before. 2.54.1 landed a few days ago with the same backup error and the same strange event log behavior. I think this is most likely when the problem started.

I still see the Update button after the update, and clicking it offers to upgrade to the same version again. I notice this is not the latest package (which is 2.54.2).

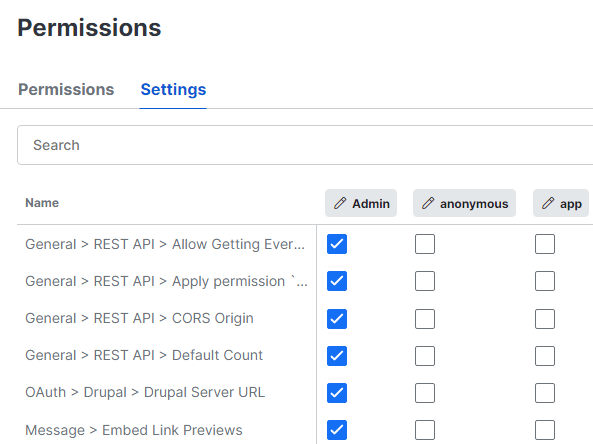

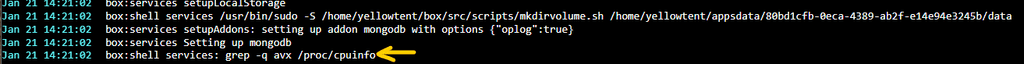

I noticed the 2.54.x series of updates does contain changes to the apps management system. Could that be related?

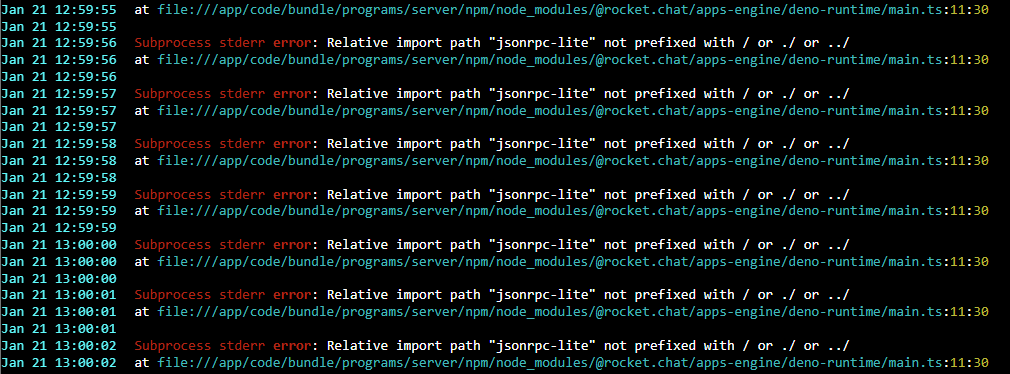

I noticed that the rocketchat_apps_logs.bson file seemed to shrink when I manually ran the update: it was no longer hundreds of GB afterwards. But I also noticed this:

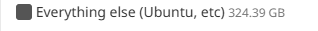

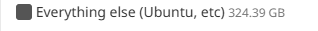

Strangely, I couldn't find that data anywhere on the file system by digging through du reports: the disk usage summed across all directories did not add up to the usage reported by df.

After a reboot, it seemed to disappear. Is this something strange about the overlay file system that I don't understand?
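
One possibility worth ruling out (not confirmed as the cause here): on Linux, a file that has been deleted while a process still has it open keeps occupying disk space. df counts that space, but du can't see it because the file no longer has a directory entry, and the space is only released when the process closes the file, for example on an app restart or a reboot. That matches the symptoms and can happen on any filesystem, overlay or not. `lsof +L1` lists such files. The same check as a stdlib-only sketch that scans `/proc/<pid>/fd` is below. The function name is hypothetical, it is Linux-only, and it needs to run as root to see other users' processes.

```python
import os


def find_deleted_open_files():
    """Return (pid, fd, target, size_bytes) for open files that were deleted.

    Linux-only: relies on /proc/<pid>/fd symlinks, whose targets the kernel
    suffixes with " (deleted)" once the file has no directory entry left.
    Results are sorted largest first.
    """
    results = []
    for pid in filter(str.isdigit, os.listdir("/proc")):
        fd_dir = os.path.join("/proc", pid, "fd")
        try:
            fds = os.listdir(fd_dir)
        except OSError:
            continue  # process exited, or no permission (run as root)
        for fd in fds:
            link = os.path.join(fd_dir, fd)
            try:
                target = os.readlink(link)
                if not target.endswith(" (deleted)"):
                    continue
                # os.stat follows the /proc link to the still-open file.
                size = os.stat(link).st_size
            except OSError:
                continue  # fd closed or process exited while scanning
            results.append((int(pid), int(fd), target, size))
    return sorted(results, key=lambda r: r[3], reverse=True)


if __name__ == "__main__":
    for pid, fd, target, size in find_deleted_open_files():
        print(f"{size / 1e6:12.1f} MB  pid={pid} fd={fd}  {target}")
```

If a Rocket.Chat or MongoDB process shows up holding tens of GB this way, restarting that app (rather than the whole server) should be enough to release the space.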

Something that may or may not be relevant: our app backups go to a Backblaze data store; we don't keep them locally.

I'm going to try re-running the app update to see if I can get to 2.54.2, and whether our rapidly growing data disappears again as part of the update.