Ollama: permissions issue when using volume storage

-

@ntnsndr the supervisor config file in the package sets additional env vars like HOME and NTLK_DATA. Have you tried restarting the app (which ends up restarting supervisor)?

-

@joseph I was only trying to run ollama manually to understand why it is not starting on its own when the app boots.

So, when I reboot the app with the /media/ollama-vol directory at 777 permissions, here is what I get:

    Apr 15 08:31:05 2025-04-15 14:31:05,744 CRIT Supervisor is running as root. Privileges were not dropped because no user is specified in the config file. If you intend to run as root, you can set user=root in the config file to avoid this message.
    Apr 15 08:31:05 2025-04-15 14:31:05,744 INFO Included extra file "/etc/supervisor/conf.d/ollama.conf" during parsing
    Apr 15 08:31:05 2025-04-15 14:31:05,744 INFO Included extra file "/etc/supervisor/conf.d/openwebui.conf" during parsing
    Apr 15 08:31:05 2025-04-15 14:31:05,748 INFO RPC interface 'supervisor' initialized
    Apr 15 08:31:05 2025-04-15 14:31:05,748 CRIT Server 'unix_http_server' running without any HTTP authentication checking
    Apr 15 08:31:05 2025-04-15 14:31:05,749 INFO supervisord started with pid 1
    Apr 15 08:31:06 2025-04-15 14:31:06,752 INFO spawned: 'ollama' with pid 25
    Apr 15 08:31:06 2025-04-15 14:31:06,755 INFO spawned: 'openwebui' with pid 26
    Apr 15 08:31:06 2025/04/15 14:31:06 routes.go:1231: INFO server config env="map[CUDA_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:2048 OLLAMA_DEBUG:false OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_INTEL_GPU:false OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/media/ollama-vol/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://* vscode-file://*] OLLAMA_SCHED_SPREAD:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"
    Apr 15 08:31:06 Error: mkdir /media/ollama-vol/models/blobs: permission denied
    Apr 15 08:31:06 2025-04-15 14:31:06,790 WARN exited: ollama (exit status 1; not expected)
    Apr 15 08:31:07 2025-04-15 14:31:07,792 INFO spawned: 'ollama' with pid 33
    Apr 15 08:31:07 2025-04-15 14:31:07,793 INFO success: openwebui entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)
    Apr 15 08:31:07 2025/04/15 14:31:07 routes.go:1231: INFO server config env="map[CUDA_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:2048 OLLAMA_DEBUG:false OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_INTEL_GPU:false OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/media/ollama-vol/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://* vscode-file://*] OLLAMA_SCHED_SPREAD:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"
    Apr 15 08:31:07 Error: mkdir /media/ollama-vol/models/blobs: permission denied
    Apr 15 08:31:07 2025-04-15 14:31:07,823 WARN exited: ollama (exit status 1; not expected)
    Apr 15 08:31:09 2025-04-15 14:31:09,828 INFO spawned: 'ollama' with pid 43
    Apr 15 08:31:09 2025/04/15 14:31:09 routes.go:1231: INFO server config env="map[CUDA_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:2048 OLLAMA_DEBUG:false OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_INTEL_GPU:false OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/media/ollama-vol/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://* vscode-file://*] OLLAMA_SCHED_SPREAD:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"
    Apr 15 08:31:09 Error: mkdir /media/ollama-vol/models/blobs: permission denied
    Apr 15 08:31:09 2025-04-15 14:31:09,862 WARN exited: ollama (exit status 1; not expected)
    Apr 15 08:31:10 => Healtheck error: Error: connect ECONNREFUSED 172.18.16.191:8080

-

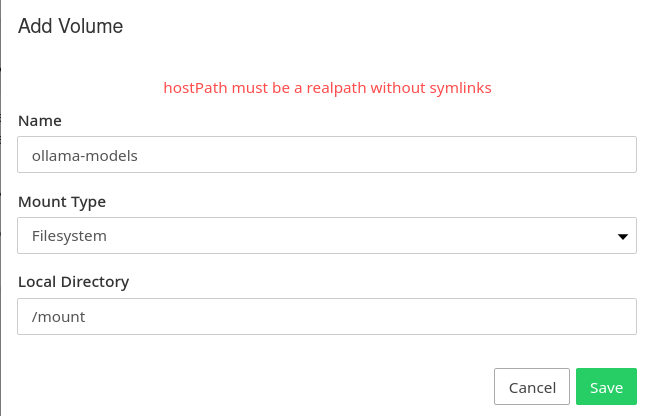

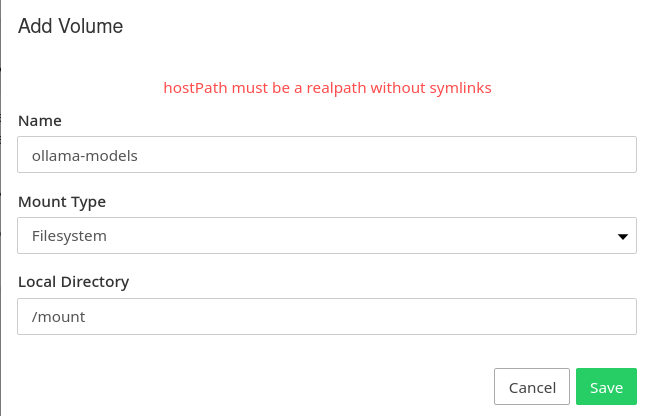

Relatedly (I am trying again with another volume), does anyone see whether I'm doing something wrong here?

To create the /media/ollama-vol directory, I previously had to mkdir it manually before adding the volume. Could there be something screwy with my filesystem permissions in general?

-

Did you ever try it without the volume to see whether it works at all?

If not, that should be done first to narrow down the possible sources of error.

-

@BrutalBirdie yes, as noted above: "The problem does not occur under the default app settings. But it does when I use the recommended path of creating a separate volume for the models."

-

Huh

Then there must be something really strange going on.

Either permissions on the server itself or some other ghost in the shell.

-

> Relatedly (I am trying again with another volume), does anyone see whether I'm doing something wrong here?
>
> To create the /media/ollama-vol directory, I previously had to mkdir it manually before adding the volume. Could there be something screwy with my filesystem permissions in general?

@ntnsndr the Local Directory is something like /opt/ollama-models. You have to create this directory on the server manually. This prevents admins from mounting random paths (the idea being that only a person with SSH access can "create" paths).
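For the "mkdir /media/ollama-vol/models/blobs: permission denied" error in the log above, it may help to look at every directory along the path rather than only the top one, since 777 on the volume root says nothing about a models subdirectory created earlier by another user. Below is a minimal diagnostic sketch, not something taken from the package: the function name `describe_path_chain` is made up for illustration, and the result is only meaningful if the script runs as the same user that the ollama process runs as.

```python
import os
import stat
from pathlib import Path


def describe_path_chain(target: str) -> list:
    """Report owner, group, and mode for target and each of its ancestors, root first.

    Hypothetical helper for illustration only.
    """
    path = Path(target)
    report = []
    for component in [*reversed(path.parents), path]:
        if not component.exists():
            # Missing components are the ones the process would have to mkdir.
            report.append({"path": str(component), "exists": False})
            continue
        info = component.stat()
        report.append({
            "path": str(component),
            "exists": True,
            "uid": info.st_uid,
            "gid": info.st_gid,
            "mode": stat.filemode(info.st_mode),
            # Creating an entry in a directory needs write and search (execute)
            # permission on it; os.access checks this for the real uid/gid.
            "writable": os.access(component, os.W_OK | os.X_OK),
        })
    return report


if __name__ == "__main__":
    # Path taken from the error message in the log above.
    for entry in describe_path_chain("/media/ollama-vol/models/blobs"):
        print(entry)
```

The last existing entry in the output is the directory in which the failing mkdir would happen, so its uid, gid, and mode are the ones to compare against the user running ollama.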

In your initial post, what kind of volume are you using? I wonder if it's CIFS and it doesn't support file permissions?
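One way to answer the volume-type question is to check which filesystem backs the directory. The sketch below is only an illustration under a few assumptions: it reads the Linux /proc/mounts table, the helper names `parse_mounts` and `filesystem_type` are made up, symlinks in the path are not resolved, and /media/ollama-vol is simply the path from the log above.

```python
import os
from pathlib import Path
from typing import Optional


def parse_mounts(mounts_text: str) -> list:
    """Return (mount_point, fs_type) pairs from /proc/mounts-style text."""
    entries = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3:
            # /proc/mounts escapes spaces inside mount points as \040.
            entries.append((fields[1].replace("\\040", " "), fields[2]))
    return entries


def filesystem_type(path: str, mounts_text: str) -> Optional[str]:
    """Return the fs type of the deepest mount point that contains path."""
    target = Path(path)
    best = None
    for mount_point, fs_type in parse_mounts(mounts_text):
        mount = Path(mount_point)
        if target == mount or mount in target.parents:
            # ">=" lets a later mount over the same point win.
            if best is None or len(mount.parts) >= len(best[0].parts):
                best = (mount, fs_type)
    return best[1] if best else None


if __name__ == "__main__":
    if os.path.exists("/proc/mounts"):
        with open("/proc/mounts") as handle:
            print(filesystem_type("/media/ollama-vol", handle.read()))
```

If this prints cifs, ownership and mode are typically fixed by mount options rather than by chmod, which would fit a directory that shows 777 but still refuses the mkdir.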