LiteLLM on Cloudron: Simplify model access, spend tracking and fallbacks across 100+ LLMs

App Wishlist


- Main Page: https://www.litellm.ai/

- Git: https://github.com/BerriAI/litellm

- Licence: MIT (except the `enterprise/` directory, which has its own license) https://github.com/BerriAI/litellm?tab=License-1-ov-file#readme

- Dockerfile: Yes https://github.com/BerriAI/litellm/blob/main/Dockerfile

- Demo: YouTube video on the main page

- Summary:

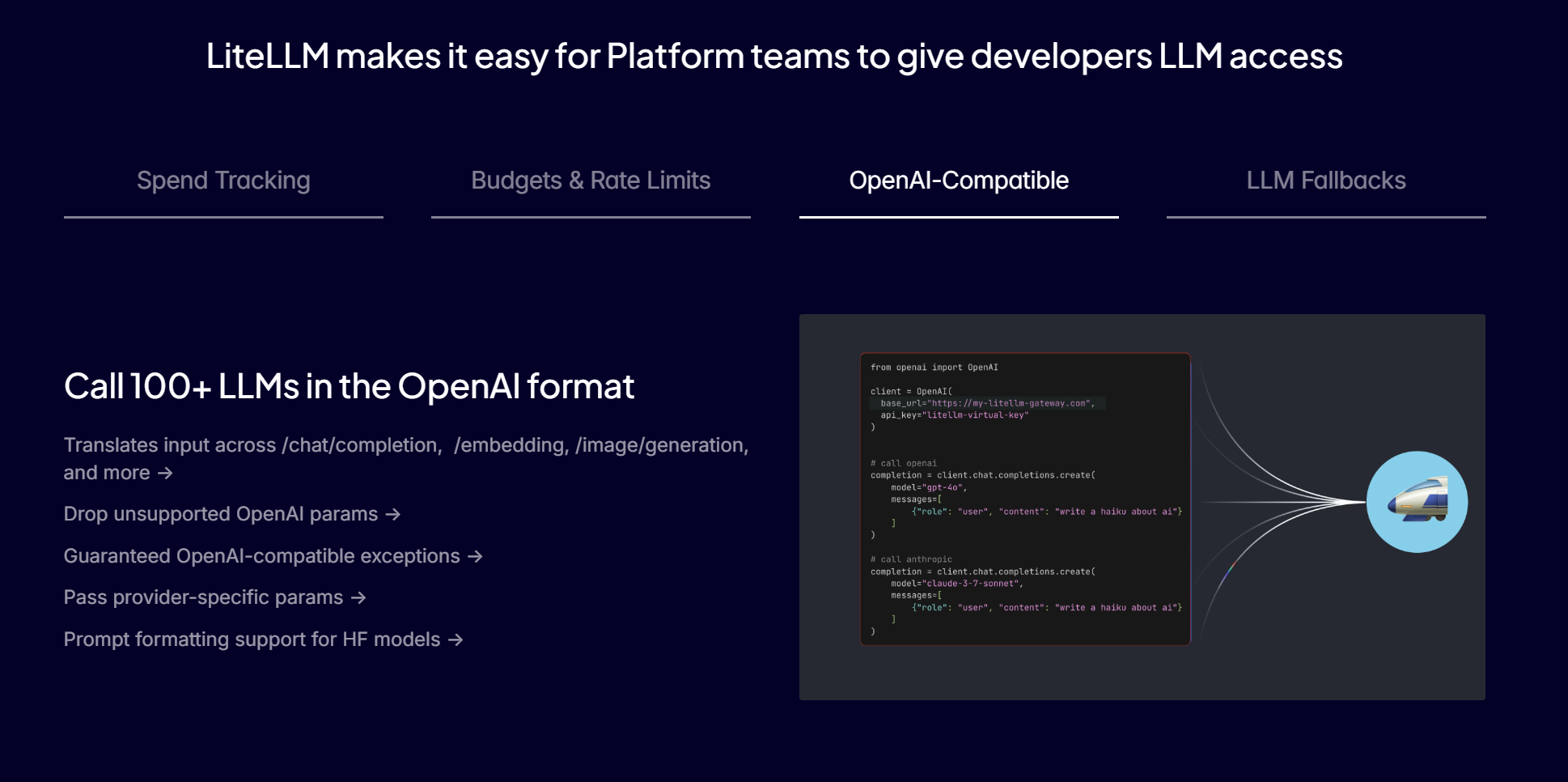

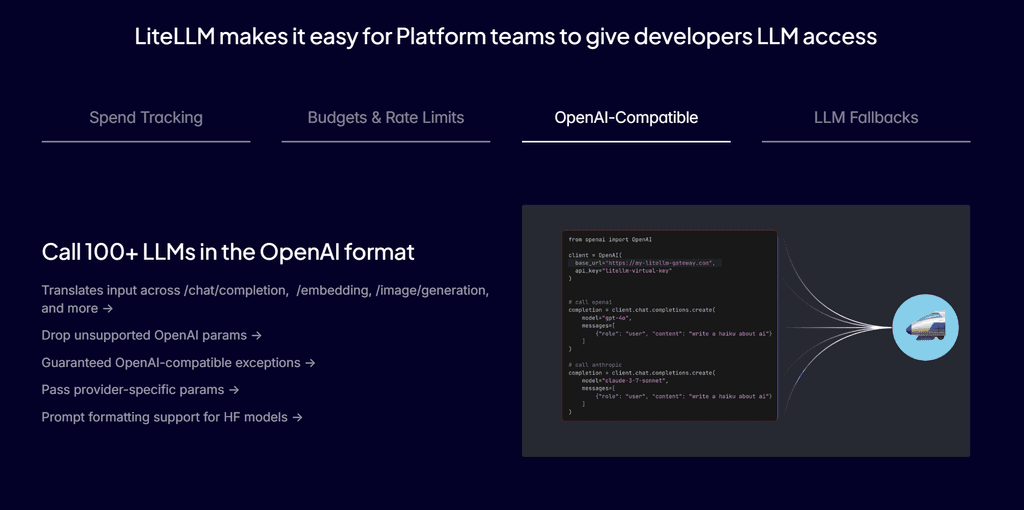

- Call 100+ LLMs using the OpenAI Input/Output Format

- Translate inputs to each provider's completion, embedding, and image_generation endpoints

- Consistent output: text responses are always available at `['choices'][0]['message']['content']`

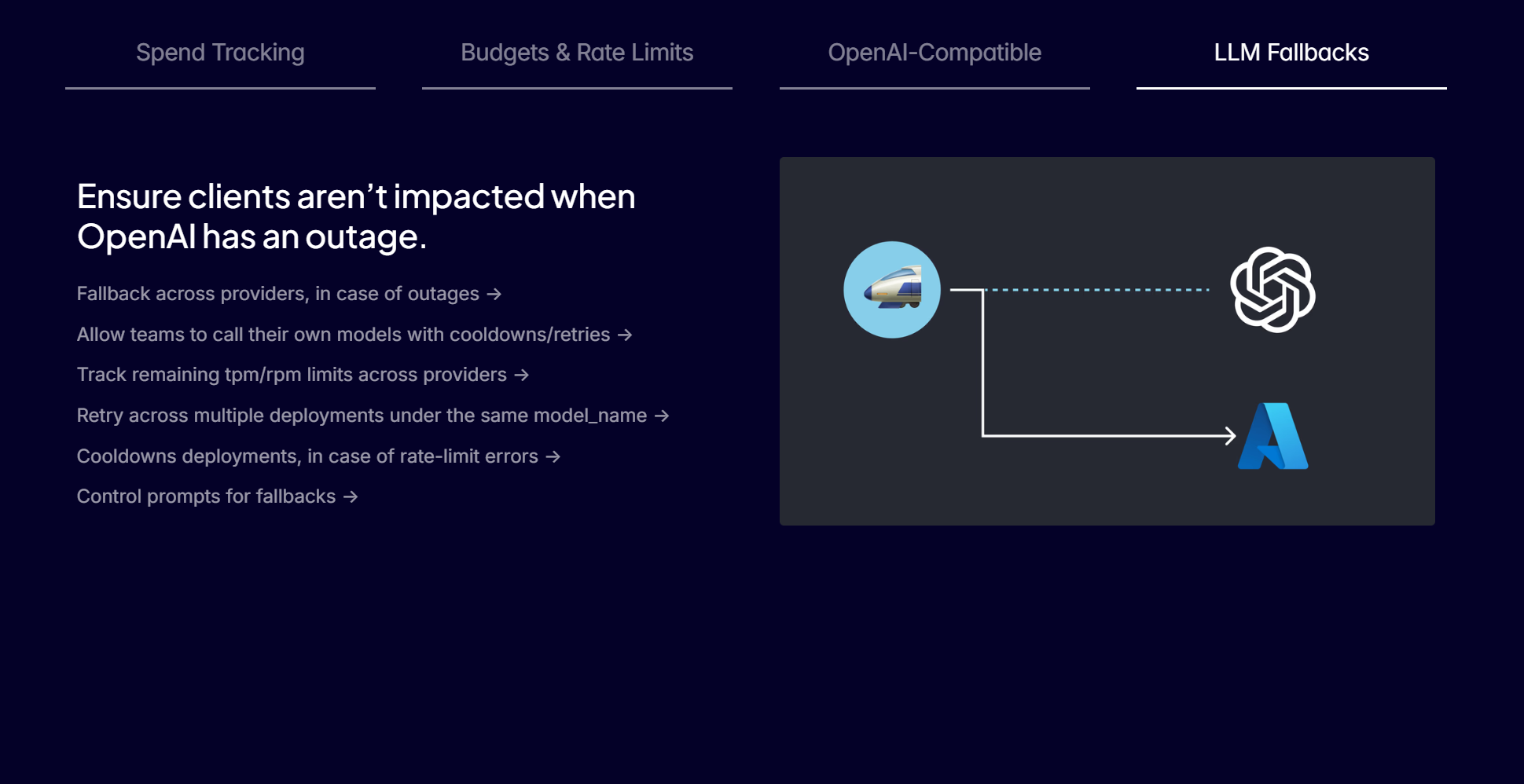

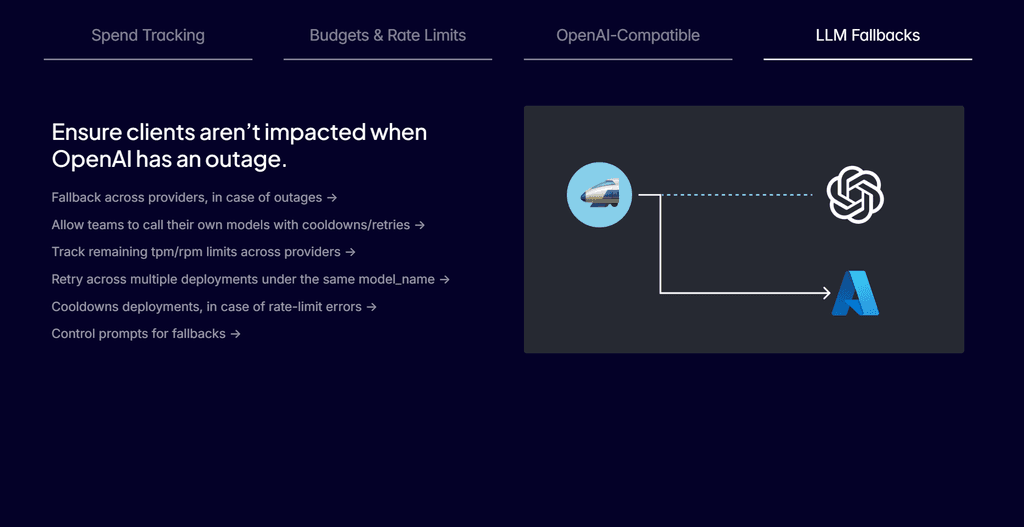

- Retry/fallback logic across multiple deployments (e.g. Azure/OpenAI) - Router

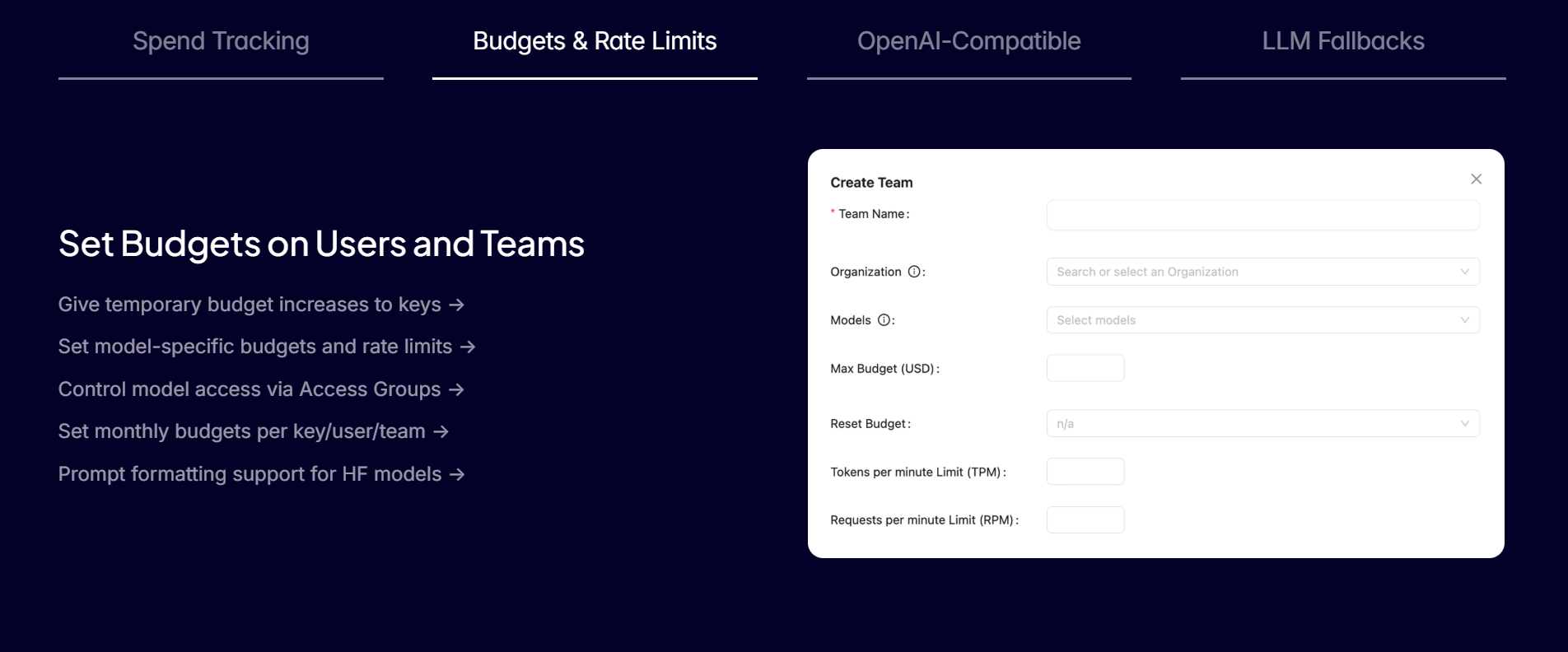

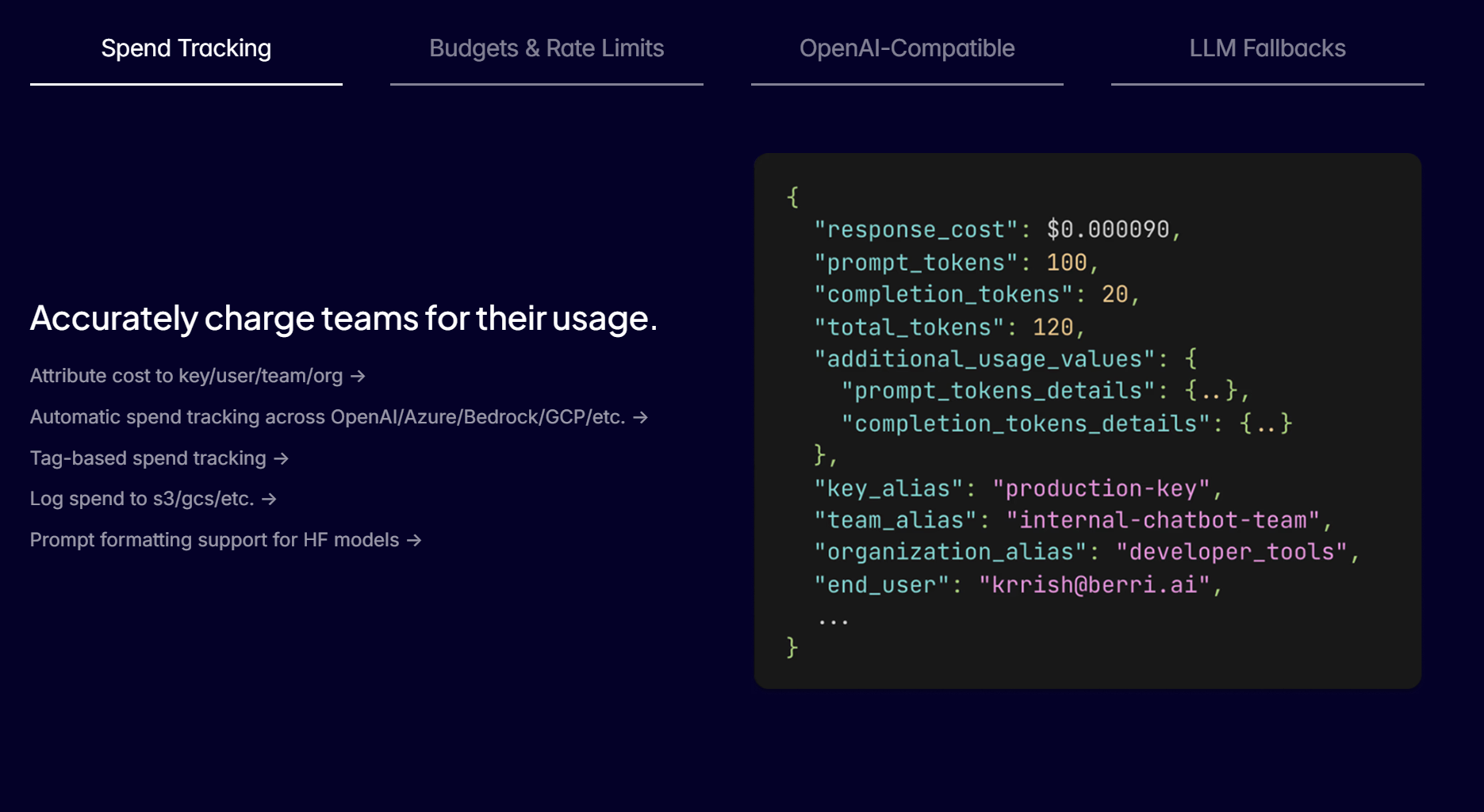

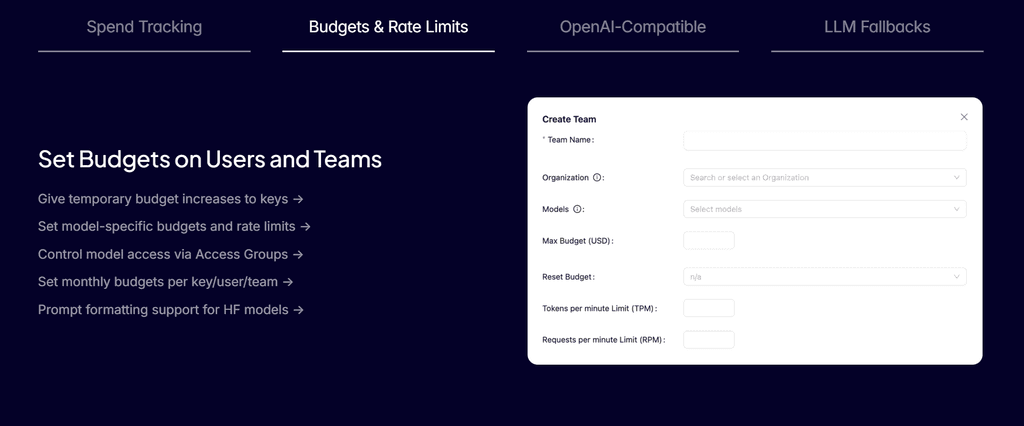

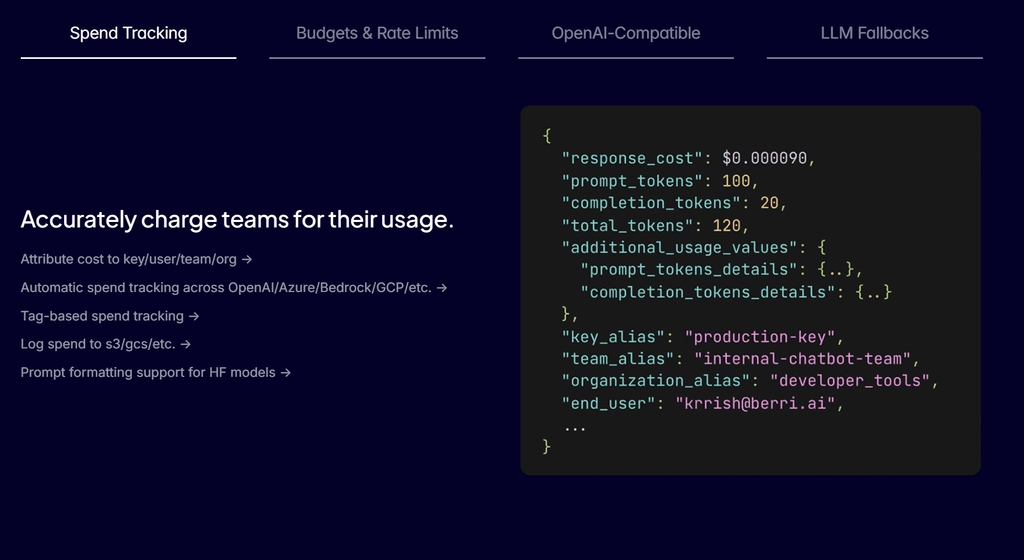

- Track spend and set budgets per project - LiteLLM Proxy Server

- Notes: Supported LLM providers: https://docs.litellm.ai/docs/providers

- Alternative to / Libhunt link: OpenRouter (https://alternativeto.net/software/litellm/)

- Screenshots: images, brand logo

- Other posts on this:
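The retry/fallback bullet above can be pictured with a small pure-Python sketch. It is not LiteLLM's code: `Deployment`, `complete_with_fallbacks` and `AllDeploymentsFailed` are hypothetical names, and each deployment is a stand-in callable that returns an OpenAI-format dict, so the caller reads `['choices'][0]['message']['content']` no matter which deployment answered. In LiteLLM itself the equivalent is configured on `litellm.Router` (its `model_list`, `num_retries` and `fallbacks` arguments); check the Router docs for the exact format.

```python
# Minimal sketch of retry-then-fallback routing across LLM deployments.
# NOT LiteLLM's Router: Deployment, AllDeploymentsFailed and
# complete_with_fallbacks are hypothetical names for illustration only.
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Message = Dict[str, str]
Response = Dict[str, Any]


class AllDeploymentsFailed(Exception):
    """Raised when every deployment has used up its attempts."""


@dataclass
class Deployment:
    name: str  # illustrative label, e.g. "azure/gpt-4o"
    call: Callable[[List[Message]], Response]  # returns an OpenAI-format dict


def complete_with_fallbacks(
    deployments: List[Deployment],
    messages: List[Message],
    num_retries: int = 1,
) -> Response:
    """Try each deployment in order, retrying each one before falling back."""
    errors: List[str] = []
    for deployment in deployments:
        # One initial attempt plus `num_retries` retries on the same deployment.
        for attempt in range(1 + num_retries):
            try:
                return deployment.call(messages)
            except Exception as exc:  # a real router would filter retryable errors
                errors.append(f"{deployment.name} attempt {attempt + 1}: {exc}")
    raise AllDeploymentsFailed("; ".join(errors))


if __name__ == "__main__":
    def azure_down(messages: List[Message]) -> Response:
        raise TimeoutError("simulated Azure timeout")

    def openai_ok(messages: List[Message]) -> Response:
        return {"choices": [{"message": {"role": "assistant", "content": "Hello!"}}]}

    response = complete_with_fallbacks(
        [Deployment("azure/gpt-4o", azure_down), Deployment("openai/gpt-4o", openai_ok)],
        [{"role": "user", "content": "Hi"}],
        num_retries=2,
    )
    # Same access path whichever deployment answered.
    print(response["choices"][0]["message"]["content"])  # Hello!
```

Retrying the same deployment before moving on absorbs transient errors cheaply; a production router would also separate retryable errors (timeouts, rate limits) from permanent ones such as bad credentials.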

@marcusquinn Thanks for that. It looks like my post is a duplicate of:

https://forum.cloudron.io/topic/13141/litellm-openrouter-self-hosted-alternative-proxy-provides-access-to-openai-bedrock-anthropic-gemini-etc

(I posted 3 apps, checked the first one, and then forgot to check whether the other two had already been requested!)

@girish What is the procedure to merge this duplicate with @jagan's earlier request here?

I can see the Merge option under the topic tools, but it is not clear how to paste or include jagan's thread.