Feedback: Cloudron Update 6.2.4

-

One of a few Cloudron instances has a problem with mysql after the update. I lost some data, and every time I restore the app from a backup I end up with "Error : Addons Error - Error restoring mysql: read ECONNRESET". Just saying.

-

@luckow Were you updating from 6.1.2? Did I understand correctly that one app out of all your Cloudron instances had an update issue? What app was this (built-in or custom)? Also, can you provide the last few lines of the logs of the mysql service when you trigger a restore?

-

@girish Custom app (staging environment; here: Drupal 9.x). It hits 2 of 9 staging apps. No clue why only 2.

Mar 12 19:19:46 box:tasks 6843: {"percent":65,"message":"Download LALA.jpg finished"}
Mar 12 19:19:46 box:backups Recreating empty directories in {"localRoot":"/home/yellowtent/appsdata/7ef8d8c0-3c51-45c5-b0af-a98bcb43da98","layout":[]}
Mar 12 19:19:46 box:backups downloadApp: time: 11.72
Mar 12 19:19:46 box:services URL restoreAddons
Mar 12 19:19:46 box:services URL restoreAddons: restoring ["localstorage","mysql","sendmail"]
Mar 12 19:19:46 box:services URL restoreMySql
Mar 12 19:19:53 box:apptask URL error installing app: BoxError: Error restoring mysql: read ECONNRESET
  at Request._callback (/home/yellowtent/box/src/services.js:1354:40)
  at self.callback (/home/yellowtent/box/node_modules/request/request.js:185:22)
  at Request.emit (events.js:327:22)
  at Request.EventEmitter.emit (domain.js:467:12)
  at Request.onRequestError (/home/yellowtent/box/node_modules/request/request.js:877:8)
  at ClientRequest.emit (events.js:315:20)
  at ClientRequest.EventEmitter.emit (domain.js:467:12)
  at TLSSocket.socketErrorListener (_http_client.js:469:9)
  at TLSSocket.emit (events.js:315:20)
  at TLSSocket.EventEmitter.emit (domain.js:467:12) {
  reason: 'Addons Error',
  details: {}

6.1.2 -> 6.2.4

-

@luckow I think this is just MySQL wanting more memory. If you go to services -> mysql -> logs, do you see it restarting? Sometimes importing dumps can take a lot of memory.

@girish Nope. Same error with doubled RAM. The problem is a duplicate entry in my app backup.

Mar 12 22:03:57 restore: restoring database 9e21f4c46c93e8f9
Mar 12 22:04:05 Stderr from db import: ERROR 1062 (23000) at line 4144: Duplicate entry 'masse' for key 'search_total.PRIMARY'
Mar 12 22:04:05 events.js:292
Mar 12 22:04:05 throw er; // Unhandled 'error' event
Mar 12 22:04:05 ^
Mar 12 22:04:05 Error: write EPIPE
Mar 12 22:04:05 at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:94:16)
Mar 12 22:04:05 Emitted 'error' event on Socket instance at:
Mar 12 22:04:05 at Socket.onerror (internal/streams/readable.js:760:14)
Mar 12 22:04:05 at Socket.emit (events.js:315:20)
Mar 12 22:04:05 at emitErrorNT (internal/streams/destroy.js:106:8)
Mar 12 22:04:05 at emitErrorCloseNT (internal/streams/destroy.js:74:3)
Mar 12 22:04:05 at processTicksAndRejections (internal/process/task_queues.js:80:21) {
Mar 12 22:04:05 errno: -32,
Mar 12 22:04:05 code: 'EPIPE',
Mar 12 22:04:05 syscall: 'write'
Mar 12 22:04:05 }
Mar 12 22:04:05 2021-03-12 21:04:05,178 INFO exited: mysql-service (exit status 1; not expected)

-
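For anyone hitting the same `read ECONNRESET` on restore: the box log only shows the connection reset, while the `ERROR 1062` line in the mysql service log above is what names the dump line, the duplicate value and the key. A minimal sketch for pulling that out of a saved log, assuming the error text has the same shape as in the log above (the function name `find_duplicate_errors` is made up for illustration):

```python
import re

# Matches the duplicate-key error in the shape seen in the mysql service log above.
DUPLICATE_RE = re.compile(
    r"ERROR 1062 \(23000\) at line (?P<line>\d+): "
    r"Duplicate entry '(?P<entry>[^']*)' for key '(?P<key>[^']*)'"
)


def find_duplicate_errors(log_text):
    """Return a list of (dump_line, entry, key) tuples found in log_text."""
    return [
        (int(m.group("line")), m.group("entry"), m.group("key"))
        for m in DUPLICATE_RE.finditer(log_text)
    ]


# Sample line copied from the log above.
sample = (
    "Mar 12 22:04:05 Stderr from db import: ERROR 1062 (23000) at line 4144: "
    "Duplicate entry 'masse' for key 'search_total.PRIMARY'"
)
print(find_duplicate_errors(sample))
# prints [(4144, 'masse', 'search_total.PRIMARY')]
```

The reported line number refers to the SQL dump inside the backup, so that is where the duplicate row would have to be looked up.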

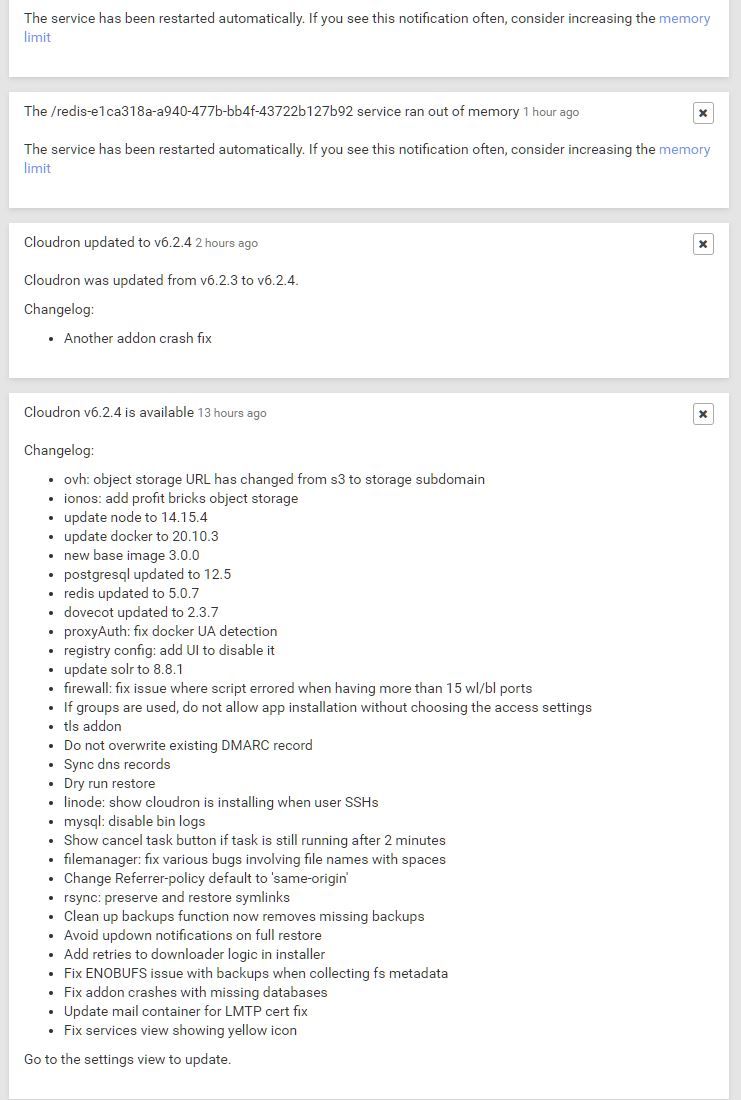

After updating, my redis is complaining ...

Any ideas what I should do? This looks like a regression.

Also looking at the graphite logs:

Mar 12 23:07:05 12/03/2021 22:07:05 :: [tagdb] Error tagging collectd.localhost.table-0e95e7bb-2ffb-4b5c-af67-64484fe51e33-memory.gauge-cache: Error requesting http://127.0.0.1:80/tags/tagMultiSeries: HTTPConnectionPool(host='127.0.0.1', port=80): Max retries exceeded with url: /tags/tagMultiSeries (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fdc0f856af0>: Failed to establish a new connection: [Errno 111] Connection refused'))
Mar 12 23:07:26 12/03/2021 22:07:26 :: [tagdb] Tagging collectd.localhost.table-e1ca318a-a940-477b-bb4f-43722b127b92-memory.gauge-hierarchical_memory_limit, collectd.localhost.table-d03dd73e-4045-42f7-8e6d-7b6e36b2caba-memory.gauge-total_active_file, collectd.localhost.table-9cea909a-e860-4a20-8d38-bd7820088c93-memory.gauge-inactive_anon, collectd.localhost.table-4d6310dd-104d-4eae-bdd2-025ad353bc45-memory.gauge-total_pgmajfault, collectd.localhost.table-42c605b3-e603-43a2-bfa7-f24fc238c899-memory.gauge-pgpgin, collectd.localhost.table-33db8df1-0687-4415-b5a2-d1ffc5a1339c-memory.gauge-total_swap
Mar 12 23:07:26 12/03/2021 22:07:26 :: [tagdb] Error tagging collectd.localhost.table-e1ca318a-a940-477b-bb4f-43722b127b92-memory.gauge-hierarchical_memory_limit, collectd.localhost.table-d03dd73e-4045-42f7-8e6d-7b6e36b2caba-memory.gauge-total_active_file, collectd.localhost.table-9cea909a-e860-4a20-8d38-bd7820088c93-memory.gauge-inactive_anon, collectd.localhost.table-4d6310dd-104d-4eae-bdd2-025ad353bc45-memory.gauge-total_pgmajfault, collectd.localhost.table-42c605b3-e603-43a2-bfa7-f24fc238c899-memory.gauge-pgpgin, collectd.localhost.table-33db8df1-0687-4415-b5a2-d1ffc5a1339c-memory.gauge-total_swap: Error requesting http://127.0.0.1:80/tags/tagMultiSeries: HTTPConnectionPool(host='127.0.0.1', port=80): Max retries exceeded with url: /tags/tagMultiSeries (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fdc0e772700>: Failed to establish a new connection: [Errno 111] Connection refused'))
Mar 12 23:07:26 12/03/2021 22:07:26 :: [tagdb] Tagging collectd.localhost.table-0ea62e64-2569-4095-942e-81f50c4c93a3-memory.gauge-shmem
Mar 12 23:07:26 12/03/2021 22:07:26 :: [tagdb] Error tagging collectd.localhost.table-0ea62e64-2569-4095-942e-81f50c4c93a3-memory.gauge-shmem: Error requesting http://127.0.0.1:80/tags/tagMultiSeries: HTTPConnectionPool(host='127.0.0.1', port=80): Max retries exceeded with url: /tags/tagMultiSeries (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fdc0e7b18e0>: Failed to establish a new connection: [Errno 111] Connection refused'))
Mar 12 23:07:26 12/03/2021 22:07:26 :: [tagdb] Tagging collectd.localhost.aggregation-cpu-max.cpu-system
Mar 12 23:07:26 12/03/2021 22:07:26 :: [tagdb] Error tagging collectd.localhost.aggregation-cpu-max.cpu-system: Error requesting http://127.0.0.1:80/tags/tagMultiSeries: HTTPConnectionPool(host='127.0.0.1', port=80): Max retries exceeded with url: /tags/tagMultiSeries (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fdc0e789b20>: Failed to establish a new connection: [Errno 111] Connection refused'))

and redis gets oom:

Mar 12 22:54:34 2021-03-12 21:54:34,993 INFO exited: redis (terminated by SIGKILL; not expected)
Mar 12 22:54:35 2021-03-12 21:54:35,003 INFO spawned: 'redis' with pid 577
Mar 12 22:54:35 2021-03-12 21:54:35,003 INFO reaped unknown pid 576
Mar 12 22:54:35 577:C 12 Mar 2021 21:54:35.037 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
Mar 12 22:54:35 577:C 12 Mar 2021 21:54:35.037 # Redis version=5.0.7, bits=64, commit=00000000, modified=0, pid=577, just started
Mar 12 22:54:35 577:C 12 Mar 2021 21:54:35.037 # Configuration loaded
Mar 12 22:54:35 577:M 12 Mar 2021 21:54:35.039 * Running mode=standalone, port=6379.
Mar 12 22:54:35 577:M 12 Mar 2021 21:54:35.040 # Server initialized
Mar 12 22:54:35 577:M 12 Mar 2021 21:54:35.040 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.
Mar 12 22:54:35 577:M 12 Mar 2021 21:54:35.611 * DB loaded from disk: 0.571 seconds

-

@drpaneas Just give that redis more memory. "e1ca318a" is the app id. If you put it into the search in the apps view, you will see which app's redis needs a bit more memory. It was reported earlier that pixelfed's redis needs more memory after the update. I guess this is because of the update to Redis 5.
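If the log is long, the app ids can be collected with a few lines of Python instead of reading them off by eye. This is only a sketch: it assumes the metric names always look like `collectd.localhost.table-<uuid>-...` as in the graphite log above, and the helper name `app_id_prefixes` is made up. It lists every app id that appears in the log; it does not tell you which of those apps has the redis that was killed.

```python
import re

# In the graphite log, per-app metric names embed the app id as a UUID after "table-".
APP_ID_RE = re.compile(
    r"collectd\.localhost\.table-"
    r"([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})-"
)


def app_id_prefixes(log_text):
    """Return the sorted, unique 8-character app id prefixes found in log_text."""
    return sorted({m.group(1)[:8] for m in APP_ID_RE.finditer(log_text)})


# Sample shortened from the graphite log above.
sample = (
    "[tagdb] Tagging "
    "collectd.localhost.table-e1ca318a-a940-477b-bb4f-43722b127b92-memory.gauge-hierarchical_memory_limit, "
    "collectd.localhost.table-d03dd73e-4045-42f7-8e6d-7b6e36b2caba-memory.gauge-total_active_file"
)
print(app_id_prefixes(sample))
# prints ['d03dd73e', 'e1ca318a']
```

Each prefix can then be pasted into the search in the apps view to see which app it belongs to.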