Optimal settings for Backblaze or other s3 backup services

-

Hi guys,

I'm playing around with Backblaze for backups instead of Vultr Object Storage, mostly because the same price gets me 1 TB instead of 250 GB, and upload performance for a tarball seems to be approximately the same. (I also wanted to use my home NAS but I'm having issues with that for now; that's a project for another day, lol.)

This got me thinking... what are the optimal settings to ensure the speediest upload to Backblaze? Whatever we find here will likely apply to other S3 services too, but I'm thinking of Backblaze specifically in this case.

What I've learned so far from Backblaze's docs: they seem to recommend a max part size of 128 MB (source: https://help.backblaze.com/hc/en-us/articles/1260806302690-Quickstart-Guide-for-Synology-Cloud-Sync-and-B2-Cloud-Storage). However, I've also heard that we should instead query their API, which returns a recommendedPartSize value for each account. I can't see why that would differ between people, so I'm not sure how that value comes to be.
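To get a feel for what a 128 MB part size means in practice, here is a rough sketch that counts how many multipart parts a file would be split into. The function name and the example file sizes are just illustrative, and the 10,000-part ceiling is the commonly documented S3 multipart limit, which I'm assuming applies here too.

```python
import math

MIB = 1024 * 1024
GIB = 1024 * MIB

# Assumption: the usual S3-style cap on parts per multipart upload.
MAX_PARTS = 10_000


def parts_needed(file_size_bytes: int, part_size_bytes: int) -> int:
    """Return how many parts a file is split into for a given part size."""
    if part_size_bytes <= 0:
        raise ValueError("part size must be positive")
    # Even an empty or tiny file takes at least one upload.
    return max(1, math.ceil(file_size_bytes / part_size_bytes))


if __name__ == "__main__":
    part_size = 128 * MIB
    for size_gib in (1, 4):
        count = parts_needed(size_gib * GIB, part_size)
        ok = "within" if count <= MAX_PARTS else "over"
        print(f"{size_gib} GiB file -> {count} parts ({ok} the part limit)")
```

With 128 MB parts, even multi-GB files come out to only a few dozen parts, so the part-count limit shouldn't be the bottleneck at these sizes; the part size mostly trades memory per upload against the number of requests.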

Some questions I want to try and answer (either myself for others or if others have already done the homework please chime in):

- What is the optimal format when most files are small vs. large? I have a mix: some large 1-4 GB files on my Cloudron, but most are of course much smaller, KB-sized files. So for me, would it make sense to use rsync or tgz as the format?

- If using rsync, what are the optimal settings for max part size (I believe it's 128 MB from Backblaze's docs, though I want to confirm this), copy parallel, download parallel, and upload parallel?

The answers to the above will assume memory isn't a limitation on the Cloudron server, because of course those with low memory won't be able to get the best speeds without the backup process being killed for using too much memory.

I have roughly 60 GB of data to back up, which is why I was thinking rsync may be better. However, I've seen rsync create other issues with S3 buckets, where the limiting factor is how many files you can delete at once when cleaning up your account or removing a particular backup. With more than 50,000 files per backup, I seem to hit a limit when trying to remove them later in the Vultr web GUI, for example.

I'm going to play around with this myself for now and will report back if I find any particular method much better than the other, though I kind of suspect they'll all be similar.

-

Would be great to see your results! One thing to note is that each region also often has different configuration settings. On a side note, B2 download can be quite slow if that matters (at least back when they launched; my understanding is that this is by design since they target "archival" use like AWS Glacier).

-

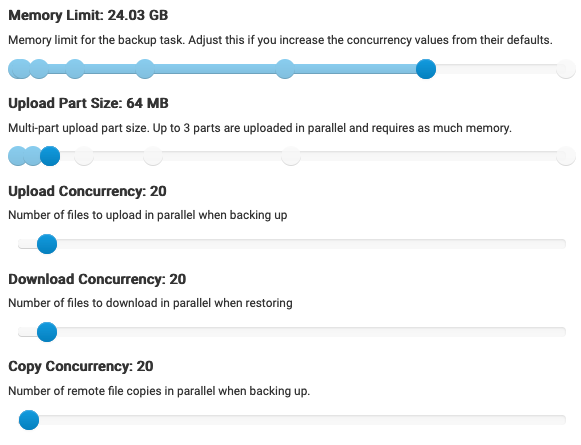

Finally managed to get backups stable with Backblaze for a ~1 TB Cloudron with these settings (using encryption and rsync):

Some notes:

- During initial backups, running out of memory was by far the most common failure. The amount needed was higher than I first expected for a given upload part size and concurrency. I found that (upload part size) x (upload concurrency) x 4 was roughly the amount needed, e.g. 64 MB x 20 x 4 = ~5120 MB.

- Scheduling backups at a time when server load is low and allotting as much memory as comfortable helped stability a lot. I ended up keeping my memory limit much higher than strictly necessary. This could probably be reduced, but stability is more important to me at the moment.

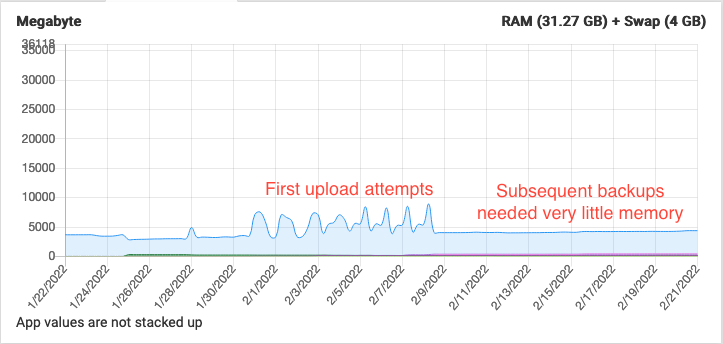

- In the graph above, memory tended to spike once per backup. @girish, maybe this is an issue that could be optimized?

- After the initial upload, the memory required for subsequent rsyncs dropped off significantly, as expected.

- Uploading ~1 TB of data with the above settings took approx. 24 h.
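The memory rule of thumb from the notes above can be sketched as a quick calculation. This is only my observed estimate, not an official Cloudron or Backblaze formula, and the function names and the 4096 MB example limit are made up for illustration.

```python
# Rule of thumb observed above: part size x concurrency x 4.
# The factor of 4 is empirical, not a documented constant.
OVERHEAD_FACTOR = 4


def estimate_backup_memory_mb(part_size_mb: int, upload_concurrency: int) -> int:
    """Rough memory (in MB) a backup needs for the given upload settings."""
    return part_size_mb * upload_concurrency * OVERHEAD_FACTOR


def max_concurrency_for_memory(memory_limit_mb: int, part_size_mb: int) -> int:
    """Highest upload concurrency that fits in the memory limit under the same rule."""
    return memory_limit_mb // (part_size_mb * OVERHEAD_FACTOR)


if __name__ == "__main__":
    # The settings from this post: 64 MB parts, 20 concurrent uploads.
    print(estimate_backup_memory_mb(64, 20))      # 5120
    # Hypothetical 4096 MB backup memory limit with the same part size.
    print(max_concurrency_for_memory(4096, 64))   # 16
```

Working backwards from the memory limit like this is probably the safer way to pick a concurrency if your server is tight on RAM.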

Curious to see what others find from optimizing these further, but glad to have a working baseline in the meantime.

I'm also curious to see what people's monthly bills tally up to. I'm waiting for the first month's bill to come in, but B2 seems more cost-effective than Wasabi so far!