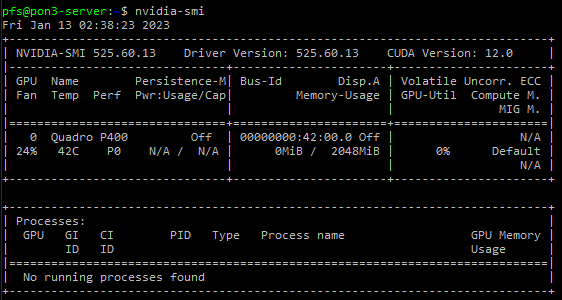

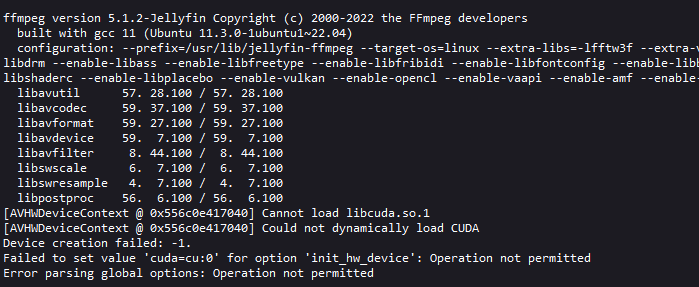

CUDA not permitted

-

N nebulon marked this topic as a question on

-

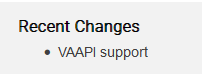

`vaapi` is supported with the last package update. Regarding CUDA, I guess we simply have no devices to test this with easily.

-

@nebulon I can provide access to mine if you think that will help to implement it? I'm sure a fair amount of people would love to use CUDA features with it.

-

@natzilla that might indeed be helpful. If you don't mind us installing a jellyfin test instance on your system, then please enable remote SSH support for us and send us a mail with the dashboard domain to support@cloudron.io

-

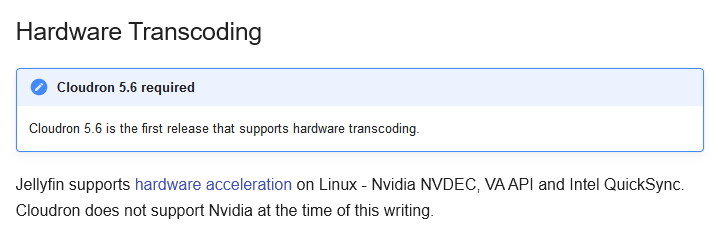

After some investigation, it turns out that one has to use a custom docker version provided by nvidia to add support for this. https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#getting-started and https://github.com/NVIDIA/nvidia-docker

So this means that we have to somehow add support on the Cloudron platform side, and make sure things work in both the nvidia and the non-nvidia case, given that the docker parts have to be compatible with whatever hardware the server currently provides.

So this is a bit out of scope for the coming release but hopefully we can get this supported afterwards.

-

I'm surprised I missed that, but I'm also surprised Nvidia had to make this difficult. Going forward, I'm willing to provide you the access you need for testing the implementation whenever that can happen. I'll have to live with no hardware acceleration for now.

-

@nebulon this appears to use a docker configuration in privileged mode to get access to the hardware device, which goes against the cloudron use case and security posture.

A better approach would be to use a different `runc`, such as sysbox-runc, that can solve that issue without requiring privileged mode.

-

@nebulon it looks like nvidia-docker has been superseded by the NVIDIA Container Toolkit:

https://github.com/NVIDIA/nvidia-container-toolkit

Does that make the issue go away or change anything at all?

I'm also happy to allow you access to my cloudron server with an nvidia GPU to try it out. I've got the nvidia drivers installed, and the nvidia container toolkit installed also, but...

In this guide

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

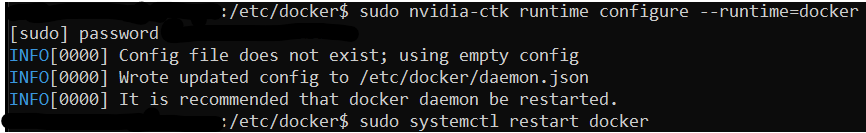

I stopped at running the configuration command `sudo nvidia-ctk runtime configure --runtime=docker` because the file /etc/docker/daemon.json doesn't exist... (and I can't find it anywhere else either), which leads me to think it's not going to work.
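As a rough illustration of the check described above, here is a minimal sketch for seeing whether the daemon config file is present before running the configure step. The helper name `daemon_json_status` is made up for this example, and the default path is simply the one mentioned in the post; whether Cloudron's Docker setup reads that file at all is an assumption that this thread does not confirm.

```shell
#!/bin/sh
# Hypothetical helper: report whether a Docker daemon config file exists.
# The default path is the one from the post; a platform may configure the
# daemon differently (for example via dockerd command-line flags).
daemon_json_status() {
    config_path="${1:-/etc/docker/daemon.json}"
    if [ -f "$config_path" ]; then
        echo "present: $config_path"
    else
        echo "missing: $config_path"
    fi
}

daemon_json_status

# With a running Docker daemon, these show the registered runtimes and the
# default runtime, which helps judge what a configure step actually changed:
# docker info --format '{{json .Runtimes}}'
# docker info --format '{{.DefaultRuntime}}'
```

Comparing the `docker info` output before and after the configure step is probably the safest way to see whether anything changed for existing containers.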

Also, there's nothing explaining what effect it will have on the existing docker containers. I'm assuming it will add access to the GPU to all containers.

-

Did it make any difference?

Assuming it's functioning, I would think the docker start command would still have to be modified.

i.e. `docker run --gpus all <container>`, wherever that is.

-
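To make the `docker run` suggestion above concrete, here is a small sketch that prints the GPU smoke-test command from NVIDIA's Container Toolkit documentation. The function name `gpu_smoke_test_cmd` and the default image are illustrative choices, and the sketch only prints the command; it assumes the NVIDIA driver and toolkit are already set up on the host if you go on to run it.

```shell
#!/bin/sh
# Hypothetical helper: build the GPU smoke-test command for a given image.
# It only prints the command; copy it (or pipe it to sh) to actually run it.
gpu_smoke_test_cmd() {
    image="${1:-ubuntu}"
    echo "docker run --rm --runtime=nvidia --gpus all $image nvidia-smi"
}

gpu_smoke_test_cmd
```

If the printed command runs and `nvidia-smi` lists the GPU, the host side is working, and what remains is getting the app's own container started with the same flags.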

Ended up removing jellyfin from cloudron; it's basically useless without hardware transcoding, imo.

I followed these instructions https://www.geeksforgeeks.org/how-to-install-jellyfin-media-server-on-ubuntu-22-04/ to install it directly on Ubuntu (I know it's not a supported config), and streaming with nvidia support makes it work like a different product. I don't have fancy DNS or SSL certs... but it works.

-

J james has marked this topic as solved on
