Issue with Lengthy and Failing Updates

-

@archos How much memory have you given the backup task ? Backups -> Configure -> Advanced. Maybe try increasing that a lot more.

@girish Thank you for the response, I tried adding more memory. There was only 7 GB available for backup. I have another question, shouldn't rsync be uploading only changed files? For example, it uploads photos in Nextcloud and videos in Peertube every day anew."

-

@girish Thank you for the response, I tried adding more memory. There was only 7 GB available for backup. I have another question, shouldn't rsync be uploading only changed files? For example, it uploads photos in Nextcloud and videos in Peertube every day anew."

@archos yes, it should upload only changed files. Which backend are you using? If it's uploading every day again and again, it's most likely because it has never succeeded in previous attempts. Once, the first attempt succeeds, the future attempts won't re-upload.

-

@archos yes, it should upload only changed files. Which backend are you using? If it's uploading every day again and again, it's most likely because it has never succeeded in previous attempts. Once, the first attempt succeeds, the future attempts won't re-upload.

@girish Ok, thank you for the information. I use backup on IDrive e2. The backups are performed daily, but I only keep two. It's true that lately the backups have occasionally failed. I will try creating a new bucket and maybe now that I've added more memory, it will be better.

-

@girish Ok, thank you for the information. I use backup on IDrive e2. The backups are performed daily, but I only keep two. It's true that lately the backups have occasionally failed. I will try creating a new bucket and maybe now that I've added more memory, it will be better.

@archos I added more memory for backups and created a new bucket. The first manually initiated backup went smoothly, but the second automatic one ended with 'Backup failed' again. I am attaching the log below. What's also strange is that the second backup again took 8 hours. I have set up the backups using Rsync, but it still re-uploads all videos, photos, books, etc., which is a tremendous amount of data—I have a total of 912 GB used. So, I'm not sure where the problem could be.

I also think that rsync uploads all the data from the server every day. This would match the size of the first bucket, which is 3.64 TB, even though I only keep two daily backups. I would be grateful for any advice.box:tasks update 9868: {"percent":55.05405405405404,"message":"Copied 321629 files with error: null"} May 04 07:20:32 box:tasks setCompleted - 9868: {"result":null,"error":{"stack":"BoxError: Backup not found\n at Object.setState (/home/yellowtent/box/src/backups.js:238:42)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async rotateAppBackup (/home/yellowtent/box/src/backuptask.js:303:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:383:12)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:503:29)","name":"BoxError","reason":"Not found","details":{},"message":"Backup not found"}} May 04 07:20:32 box:tasks update 9868: {"percent":100,"result":null,"error":{"stack":"BoxError: Backup not found\n at Object.setState (/home/yellowtent/box/src/backups.js:238:42)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async rotateAppBackup (/home/yellowtent/box/src/backuptask.js:303:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:383:12)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:503:29)","name":"BoxError","reason":"Not found","details":{},"message":"Backup not found"}} May 04 07:20:32 box:taskworker Task took 22829.343 seconds [no timestamp] Backup not found -

@archos strange... it says that it copied the files but after that it fails. If you reach out to us at support@cloudron.io , I can debug further.

-

So two backups were completed successfully, but today the backup failed again. Mainly through rsync, it backs up the entire Cloudron each time, instead of incremental backups.

That seems strange to me.May 07 07:30:11 box:tasks setCompleted - 9891: {"result":null,"error":{"stack":"BoxError: Backup not found\n at Object.setState (/home/yellowtent/box/src/backups.js:238:42)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async rotateAppBackup (/home/yellowtent/box/src/backuptask.js:303:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:383:12)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:503:29)","name":"BoxError","reason":"Not found","details":{},"message":"Backup not found"}} May 07 07:30:11 box:tasks update 9891: {"percent":100,"result":null,"error":{"stack":"BoxError: Backup not found\n at Object.setState (/home/yellowtent/box/src/backups.js:238:42)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async rotateAppBackup (/home/yellowtent/box/src/backuptask.js:303:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:383:12)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:503:29)","name":"BoxError","reason":"Not found","details":{},"message":"Backup not found"}} May 07 07:30:11 box:taskworker Task took 23409.098 seconds [no timestamp] Backup not found -

To update this thread. There seems to be a race where the backup cleaner kicks in while the full backup is still busy backing up apps. Those take a very long time so the cleaner already starts purging app backups from the database which then in the end cannot be referenced and thus the whole backup will error.

This will be good have fixed, currently testing a patch...

-

To update this thread. There seems to be a race where the backup cleaner kicks in while the full backup is still busy backing up apps. Those take a very long time so the cleaner already starts purging app backups from the database which then in the end cannot be referenced and thus the whole backup will error.

This will be good have fixed, currently testing a patch...

-

To update this thread. There seems to be a race where the backup cleaner kicks in while the full backup is still busy backing up apps. Those take a very long time so the cleaner already starts purging app backups from the database which then in the end cannot be referenced and thus the whole backup will error.

This will be good have fixed, currently testing a patch...

@nebulon Hello,

Unfortunately, the backup got stuck again today, this time during the Privatebin update. The update has not been completed since yesterday, and neither has the backup.:database Connection 7252 error: Packets out of order. Got: 0 Expected: 2 PROTOCOL_PACKETS_OUT_OF_ORDER May 14 10:12:21 box:database Connection 7258 error: Packets out of order. Got: 0 Expected: 2 PROTOCOL_PACKETS_OUT_OF_ORDER May 14 10:12:21 box:database Connection 7256 error: Packets out of order. Got: 0 Expected: 2 PROTOCOL_PACKETS_OUT_OF_ORDER May 14 10:12:21 box:database Connection 7253 error: Packets out of order. Got: 0 Expected: 2 PROTOCOL_PACKETS_OUT_OF_ORDER May 14 10:12:21 box:database Connection 7255 error: Packets out of order. Got: 0 Expected: 2 PROTOCOL_PACKETS_OUT_OF_ORDER -

I was looking at it just now, so apparently backups have succeeded afterwards, however one backup run in this case takes around 5h. Since the backup does work on live-data for 5h a lot of things may interfere (like in this run database connection issues)... all this is of course not ideal, but the current strategy is to start all over again if it fails midway to avoid random state with the goal to eventually succeed.

This is of course no great answer for the moment. Not just specific to this, but 100s of Gb on top of various degrees of stable connections + remote storage protocols and endpoints which behave inconsistently in our experience (especially the lower cost they get) make this all not easy

However there is much room to improve on our side with time.

However there is much room to improve on our side with time.Maybe we have to revisit this to look into other similar use-case setups how fine-grained and periodically other platforms deal with this, especially when cost per Gb or I/O is a factor.

-

I was looking at it just now, so apparently backups have succeeded afterwards, however one backup run in this case takes around 5h. Since the backup does work on live-data for 5h a lot of things may interfere (like in this run database connection issues)... all this is of course not ideal, but the current strategy is to start all over again if it fails midway to avoid random state with the goal to eventually succeed.

This is of course no great answer for the moment. Not just specific to this, but 100s of Gb on top of various degrees of stable connections + remote storage protocols and endpoints which behave inconsistently in our experience (especially the lower cost they get) make this all not easy

However there is much room to improve on our side with time.

However there is much room to improve on our side with time.Maybe we have to revisit this to look into other similar use-case setups how fine-grained and periodically other platforms deal with this, especially when cost per Gb or I/O is a factor.

@nebulon said in Issue with Lengthy and Failing Updates:

This is of course no great answer for the moment. Not just specific to this, but 100s of Gb on top of various degrees of stable connections + remote storage protocols and endpoints which behave inconsistently in our experience (especially the lower cost they get) make this all not easy However there is much room to improve on our side with time.

Recommended solutions help. Backup works with this and this and this service. It's unreliable with that and that and that service.

Next big help would be for your backups to pace themselves for the long haul. You could pre-estimate size and refuse to run the backup in intervals which do not allow enough time to finish the backup (hourly, perhaps daily is not enough for some crazy Cloudroner with hundreds of gigabytes of data: we were just mailed by someone who uses our software with 260 TB of storage, argh, all at consumer level, not enterprise level).

Next you could cap backup speed not to max out the bandwidth for a working server.

Yes, there's lots Cloudron could do to make backups easier and more successful.

Ours fail all the time despite following guidelines. We had to manually remove 16GB of failed updates from our server (failed updates should autodelete), and then manually clean out NextCloud deleted files (which Cloudron could make automatic by default, even if NextCloud developers don't care about server space as they plan for dedicated servers and object space, our intrepid leaders at Cloudron could). And Cloudron didn't tell us we had to update from Ubuntu 18 LTS for backups and updates to work properly so we were caught in a closed loop. It's not easy setting up a service as complex as Cloudron but there is some low-hanging fruit here.

-

I was looking at it just now, so apparently backups have succeeded afterwards, however one backup run in this case takes around 5h. Since the backup does work on live-data for 5h a lot of things may interfere (like in this run database connection issues)... all this is of course not ideal, but the current strategy is to start all over again if it fails midway to avoid random state with the goal to eventually succeed.

This is of course no great answer for the moment. Not just specific to this, but 100s of Gb on top of various degrees of stable connections + remote storage protocols and endpoints which behave inconsistently in our experience (especially the lower cost they get) make this all not easy

However there is much room to improve on our side with time.

However there is much room to improve on our side with time.Maybe we have to revisit this to look into other similar use-case setups how fine-grained and periodically other platforms deal with this, especially when cost per Gb or I/O is a factor.

@nebulon Hello, thank you for the information. I find it odd why Rsync always backs up all the files. Yes, the first backup is large, but afterwards, only the changed files should be backed up, not all the videos from Peertube and many GB from Nextcloud every day. I've tried several backup services, kept two-day and four-week backups, but Rsync always backs up the entire server each time. That's why I now keep only two backups. I use Rsync on another server as well, and there is no problem there.

That's why the backups are slow when the server backs up almost 1 TB of data daily. -

@nebulon Hello, thank you for the information. I find it odd why Rsync always backs up all the files. Yes, the first backup is large, but afterwards, only the changed files should be backed up, not all the videos from Peertube and many GB from Nextcloud every day. I've tried several backup services, kept two-day and four-week backups, but Rsync always backs up the entire server each time. That's why I now keep only two backups. I use Rsync on another server as well, and there is no problem there.

That's why the backups are slow when the server backs up almost 1 TB of data daily.@archos said in Issue with Lengthy and Failing Updates:

@nebulon Hello, thank you for the information. I find it odd why Rsync always backs up all the files. Yes, the first backup is large, but afterwards, only the changed files should be backed up, not all the videos from Peertube and many GB from Nextcloud every day.

Are you sure those files that seem identical but are copied regardless are not modified in any way? Other timestamps for example?

-

@archos said in Issue with Lengthy and Failing Updates:

@nebulon Hello, thank you for the information. I find it odd why Rsync always backs up all the files. Yes, the first backup is large, but afterwards, only the changed files should be backed up, not all the videos from Peertube and many GB from Nextcloud every day.

Are you sure those files that seem identical but are copied regardless are not modified in any way? Other timestamps for example?

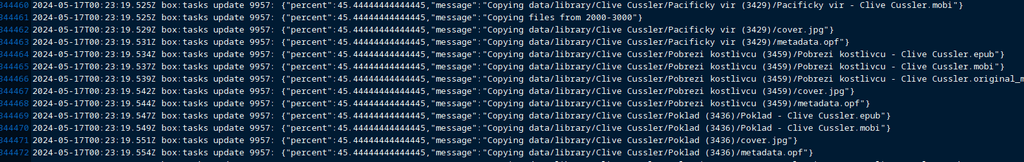

@necrevistonnezr The server occupies 960.45 GB and I currently maintain only two backups, with a total size of 2 TB. The attached image shows the log from today's backup of the Calibre application. I uploaded books to this library about two years ago. Since then, I haven't uploaded any new books to Calibre and no one else has made any changes, yet every day, the entire 10 GB of books is being backed up. It doesn't make sense for the two backups to occupy 2 TB when the server itself only uses nearly 1 TB.

-

I would recommend to investigate whether the same files in the app and in the backup are indeed identical.

rsync would not copy identical files all over again.

I use rsync to backup to an external harddrive - my Cloudron instance is around ~ 250 GB and all my backups (7 daily, 4 weekly, 12 monthly) going back to June 2023 occupy ~ 350 GB.

The same backups that I push via restic to Onedrive going back to June 2022 (!) occupy ~ 310 GB (restic is very good in compression and de-duplication). -

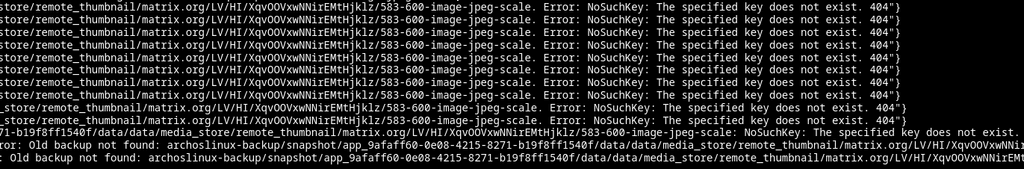

Unfortunately, the backups fail to complete again. I have tried to manually initiate the backup process twice, but each time the backup fails with an error, specifically with the Matrix application. I have no idea what to do. I also tried using a Hetzner box, but the backups take even longer there.

-

The issue with the backup was likely caused by the Matrix app. I turned off the backup in Matrix and initiated a new backup. Within two hours, the backup was successfully completed. I'll see how the backup turns out tonight.

Something is wrong with the Matrix Synapse. -

Hello everyone, it's been quiet for a few days since I turned off the automatic backups at Matrix, but today the backup failed again. It's frustrating, and from what I see on the forum, I'm not the only one having issues with backups.

Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying files from 0-3"} May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying config.json"} May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying data/coolwsd.xml"} May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying fsmetadata.json"} May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (1) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:00:26 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (2) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:00:46 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (3) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:01:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (4) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:01:26 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (5) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:01:46 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (6) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:02:07 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (7) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:02:27 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (8) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:02:47 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (9) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:03:07 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (10) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:03:27 box:storage/s3 copy: s3 copy error when copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json: InternalError: We encountered an internal error, please try again.: cause(EOF) May 25 01:03:27 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (11) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"} May 25 01:03:27 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copied 3 files with error: BoxError: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)"} May 25 01:03:27 box:backuptask copy: copied to 2024-05-24-230002-287/app_collabora.arch-linux.cz_v1.17.3 errored. error: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF) May 25 01:03:27 box:taskworker Task took 205.487 seconds May 25 01:03:27 box:tasks setCompleted - 10016: {"result":null,"error":{"stack":"BoxError: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)\n at Response.done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:367:18)\n at Request.callListeners (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:686:14)\n at Request.transition (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:688:12)","name":"BoxError","reason":"External Error","details":{},"message":"Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)"}} May 25 01:03:27 box:tasks update 10016: {"percent":100,"result":null,"error":{"stack":"BoxError: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)\n at Response.done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:367:18)\n at Request.callListeners (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:686:14)\n at Request.transition (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:688:12)","name":"BoxError","reason":"External Error","details":{},"message":"Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)"}} [no timestamp] Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)