Backup cleanup task starts but do not finish the job

-

Use case 1: In one instance I see multiple started, but not finished backup cleaner jobs with the same log message.

box:taskworker Starting task 3335. Logs are at /home/yellowtent/platformdata/logs/tasks/3335.log box:backupcleaner run: retention is {"keepWithinSecs":604800} box:shell mounts: mountpoint -q -- /mnt/cloudronbackup box:database Connection 422 error: Packets out of order. Got: 0 Expected: 7 PROTOCOL_PACKETS_OUT_OF_ORDERUse case 2: In another instance other error, but also started but not finished.

retention is{"keepDaily":3,"keepWeekly":4,"keepMonthly":6}... 2024-11-29T03:30:29.878Z box:tasks update 21410: {"message":"Removing app backup (b178e0a6-ffe8-4ede-976c-c382f998a50d): app_b178e0a6-ffe8-4ede-976c-c382f998a50d_v3.55.0_97d403e1"} 2024-11-29T03:30:30.451Z box:tasks update 21410: {"message":"2024-11-14-030035-138/app_n8n.example.org_v3.55.0: Removing directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138/app_n8n.example.org_v3.55.0"} 2024-11-29T03:30:30.523Z box:tasks update 21410: {"message":"Removing directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138/app_n8n.example.org_v3.55.0"} 2024-11-29T03:30:30.524Z box:shell filesystem: rm -rf /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138/app_n8n.example.org_v3.55.0 2024-11-29T03:38:00.508Z box:backupcleaner removeBackup: unable to prune backup directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138: ENOTEMPTY: directory not empty, rmdir '/mnt/cloudronbackup/my_example_org/2024-11-14-030035-138' 2024-11-29T03:38:00.521Z box:backupcleaner removeBackup: removed 2024-11-14-030035-138/app_n8n.example.org_v3.55.0 ...Use case 2: The Jobs are finished now as a error. Some magic or the there is a hidden timeout.

-

Looks like two totally different issues. For case 1 no clue why the database queries would be out of order. Is this easily reproducible?

For case 2, looking at the logs, I wonder why it has mixed messages about the app domain? Is this because you manually replaced those or is this actually mixing domains in the original logs? Why a

rm -rfwouldn't work on a folder which contains data is strange. Can you check permissions with that folder? -

J joseph marked this topic as a question on

-

Looks like two totally different issues. For case 1 no clue why the database queries would be out of order. Is this easily reproducible?

For case 2, looking at the logs, I wonder why it has mixed messages about the app domain? Is this because you manually replaced those or is this actually mixing domains in the original logs? Why a

rm -rfwouldn't work on a folder which contains data is strange. Can you check permissions with that folder?Yes, internal 2 different issues, but both had ~ 5 running backup cleanup jobs.

I do not see case one at the moment, but case 2 still.

I bet only one job should be started and when job 1 does something, which job 2 tries also that this can happen.

-

Ok just trying to understand this better. The issue is that those cleanup jobs run in parallel? They are ok to run in parallel as such, so they shouldn't fail due to that.

The

unable to prune backup directoryis just logging that fact, but it would not fail here.Do you see other errors or failing tasks as such?

-

Ok just trying to understand this better. The issue is that those cleanup jobs run in parallel? They are ok to run in parallel as such, so they shouldn't fail due to that.

The

unable to prune backup directoryis just logging that fact, but it would not fail here.Do you see other errors or failing tasks as such?

At some ENOTEMPTY errors I see a new error.

box:backupcleaner removeBackup: unable to prune backup directory /mnt/cloudronbackup/myhost/2024-11-26-030148-145: ENOTEMPTY: directory not empty, rmdir '/mnt/cloudronbackup/myhost/2024-11-26-030148-145' Dec 09 05:28:23 box:backupcleaner removeBackup: error removing app_cef6575d-2b80-4e31-bf0f-01b61e1ca149_v1.4.3_344f2264 from database. BoxError: Backup not found at Object.del (/home/yellowtent/box/src/backups.js:281:42) at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { reason: 'Not found', details: {} } Dec 09 05:28:23 -

-

When I trust systemctl status box-task-12345.service, then all 4 tasks try to remove the same (

rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0).

I opened the process (rm -rf) with strace. which still runs syscall unlinkat/newfstatat. (pid 130049)newfstatat(4, "tpgnVSb3898pzsvVCOpQQ-IwDccdLmSNWN1n-DYUdlo", {st_mode=S_IFREG|0644, st_size=2180, ...}, AT_SYMLINK_NOFOLLOW) = 0 unlinkat(4, "tpgnVSb3898pzsvVCOpQQ-IwDccdLmSNWN1n-DYUdlo", 0) = 0All other tasks tries the same:

newfstatat(4, "FRWM67Hwddxd+j4LlMw0eNkJtl1TB-NY4hupbahkGyTyWLn0Kduf8YTNra3FrvU3", 0x55fcd3be73e8, AT_SYMLINK_NOFOLLOW) = -1 ENOENT (No such file or directory) unlinkat(4, "FRWM67Hwddxd+j4LlMw0eNkJtl1TB-NY4hupbahkGyTyWLn0Kduf8YTNra3FrvU3", 0) = -1 ENOENT (No such file or directory)Summary:

box-task-21539.service 130049 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0 # still running box-task-21538.service 130047 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0 box-task-21543.service 130046 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0 box-task-21545.service 130048 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0I will wait and see what will be happing when task 1 is finished.

-

Ok so before we get into this, are the tasks finishing and if so, also sucessfully? Despite the log lines you had posted earlier, the files are also gone afterwards?

Trying to find out if there is anything to debug in the first place or if the log lines were just confusing.

-

Ok so before we get into this, are the tasks finishing and if so, also sucessfully? Despite the log lines you had posted earlier, the files are also gone afterwards?

Trying to find out if there is anything to debug in the first place or if the log lines were just confusing.

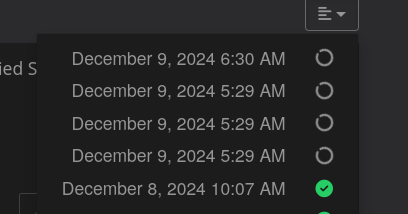

The tasks are still running, box-task-21538.service started Sun 2024-12-08 23:30:02 UTC; so I need to wait to say which task was sucessfully, which not.

The directory

/mnt/cloudronbackup/my_example_org/2024-11-14-030035-138is gone from my first post. -

The four jobs from about was all successfully.

So what I take from it. A job just start and do not care if the other job already runs, perhaps they try to cleanup the same.

And perhaps it is faster, because job A delete file A and job B can delete file B, when it is faster than job A.

I did not read the entire log to verify that this was the case.Just a idea:

A cleanup-job should look/claim what it does so that a following job do not try it also and waste time to find out that the file list in his memory was already deleted.

-

Yeah I guess the main question is, why some were even running parallel. Timewise they should be spaced out, so maybe those were just manually triggered or took unexpectedly long?

-

So 2 hours seem plenty of time to finish removing a bunch of files and folders, strange how they took so long then.

At least I don't see anything to fix as such, locking them can have other sideeffects and a task to do some file deletion isn't going to consume much resources anyways. Maybe the target filesystem in this case is very slow?

-

So 2 hours seem plenty of time to finish removing a bunch of files and folders, strange how they took so long then.

At least I don't see anything to fix as such, locking them can have other sideeffects and a task to do some file deletion isn't going to consume much resources anyways. Maybe the target filesystem in this case is very slow?

-

N nebulon has marked this topic as solved on

N nebulon has marked this topic as solved on

The host fs (cloudron) is not a ssd (netcup).

The host fs (cloudron) is not a ssd (netcup).