Backup before upgrade failing.

-

nebulon marked this topic as a question on


-

Which S3 provider are you using? Maybe the Copy operation is not allowed with the access keys you generated?

-

Can you double-check the S3 credentials you have provided? Is this somehow related to the upgrade you mention, or are all backups failing?

It's Akamai, but it was working fine until we installed a new Mastodon app. It just seems to be this one app that is failing; all the other apps are backing up fine to the same bucket with the same credentials.

I don't think it is the credentials, as all other apps are backing up fine, including other Mastodon installs.

-


-

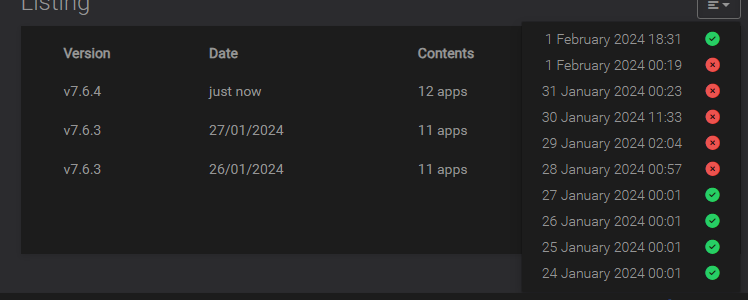

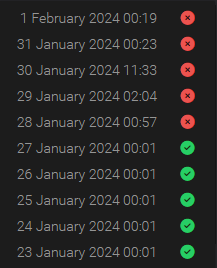

As you can see, it worked fine until the 28th, when we installed a new Mastodon app; since then, we get this error:

Jan 31 00:23:27box:tasks setCompleted - 1695: {"result":null,"error":{"stack":"BoxError: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null\n at done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Response.<anonymous> (/home/yellowtent/box/src/storage/s3.js:414:71)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:367:18)\n at Request.callListeners (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:686:14)\n at Request.transition (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:38:9)","name":"BoxError","reason":"External Error","details":{},"message":"Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null"}} Jan 31 00:23:27box:tasks update 1695: {"percent":100,"result":null,"error":{"stack":"BoxError: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null\n at done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Response.<anonymous> (/home/yellowtent/box/src/storage/s3.js:414:71)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:367:18)\n at Request.callListeners (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:686:14)\n 
at Request.transition (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:38:9)","name":"BoxError","reason":"External Error","details":{},"message":"Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null"}} Jan 31 00:23:27box:taskworker Task took 1405.247 seconds

And to repeat: all other apps, including other Mastodon installs, back up and upload to the S3 bucket without issue until the backup reaches this one Mastodon app; then it stalls for hours and fails.

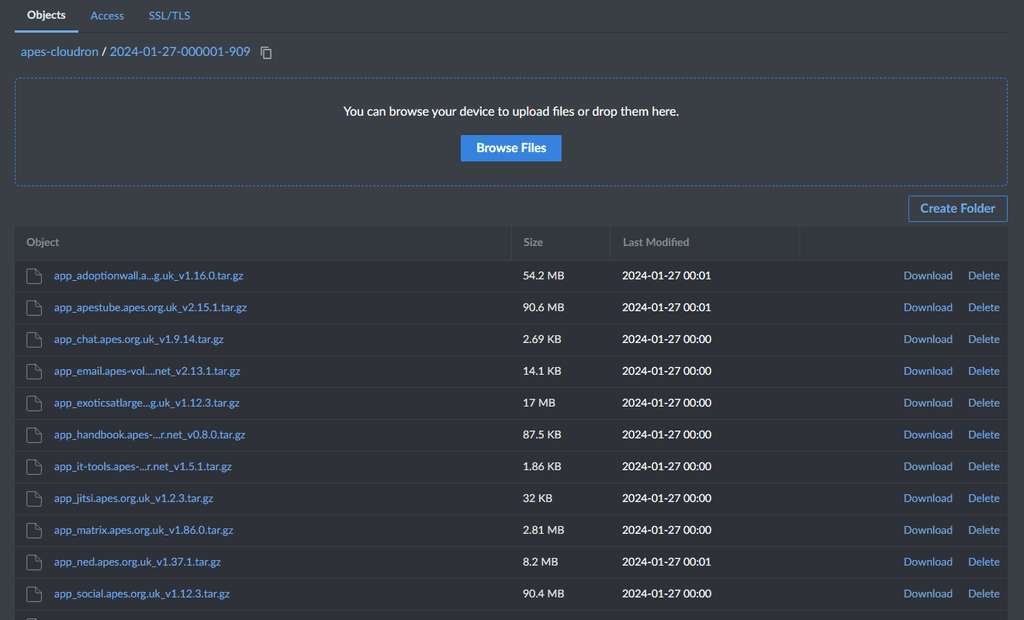

Backup from 27/01/2024

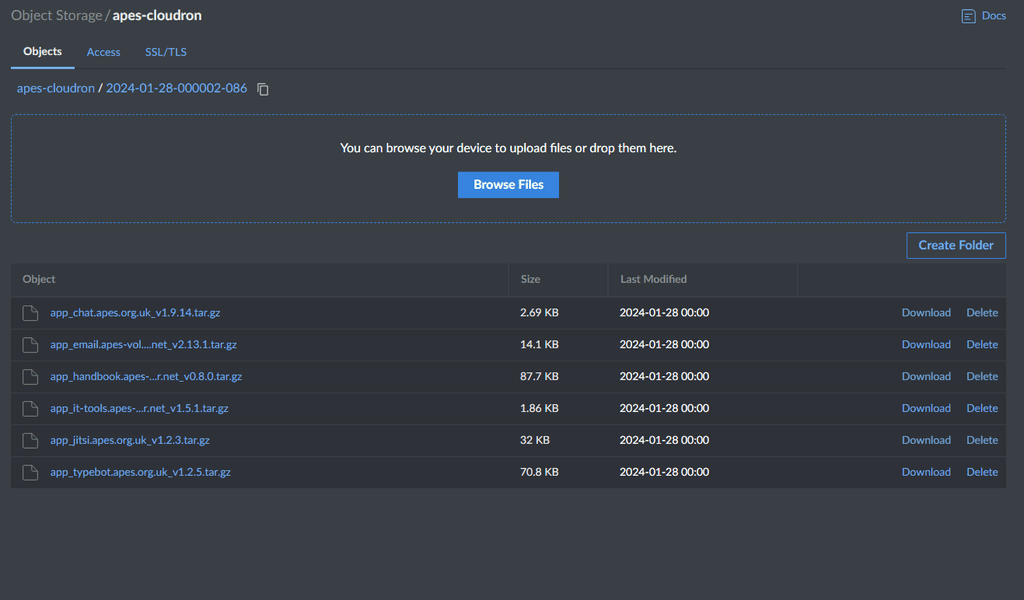

Backup from 28/01/2024

As you can see, after the 27th even apps that were backed up before the 28th are missing, because the failing backup of the new Mastodon install is stopping the rest.

-

This is not necessarily a Cloudron problem. I've had the same issues with my Mastodon while trying to use S3/Minio. Unfortunately, the solution is in Cloudron: turning off automatic backups. The same goes for my PeerTube instance. But both use too much data not to use Minio, so I haven't tried seeing whether they back up OK when they use only the Cloudron disk. In that sense I count it an unintended blessing, because if the backups had to include all of the data these apps hold, it would overwhelm the backup destination! I suppose the ideal would be for the apps to use S3/Minio to back up their own data, with just the app code backed up along with the rest of the Cloudron backup, but for me at least it's easier to put all my Mastodon eggs into one Minio basket, including the media for the instance (not just the media from the feed and cache).

-


Sorry, I disagree: the issue is Cloudron. If it were a Mastodon or PeerTube issue, surely all installs of Mastodon or PeerTube would be affected.

So far I have 2 Mastodon instances on this server and 6 running on another server; none but one install is having this issue. They all use Linode (Akamai) S3 storage.

I also have 3 PeerTube instances installed, and none of them have this issue.

The 1 Mastodon install that has now developed this fault was backing up fine for 2 days after installation, then suddenly stopped, reporting a permission and connection problem.

In the errors, I have seen 2 points of reference:

- `AccessDenied AccessDenied: null`
- `{"stack":"BoxError: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null\n at done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Response.`

This says to me that something in that install, at the location above, is stopping the transfer of this file, or something similar.

It has no references to timeouts or authentication. We have 22 apps all saving backups to the same S3 storage provider, and none but one app (on one of our two servers running the same apps) is having an issue, which says to me it is possibly an issue with the install itself.

The app's event log since creation shows this:

18:29 Automatic backups were disabled

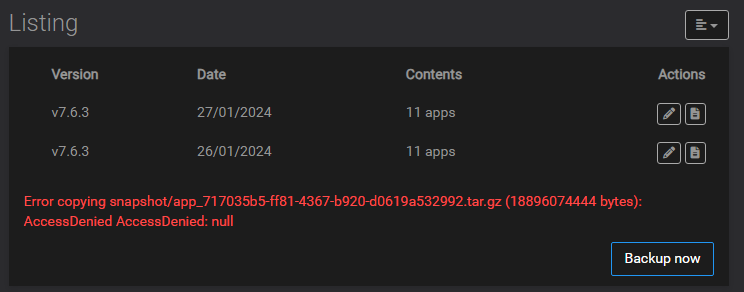

Yesterday Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (18896074444 bytes): AccessDenied AccessDenied: null

31/01/2024 App was re-configured

31/01/2024 Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24224413103 bytes): AccessDenied AccessDenied: null

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (22101377103 bytes): AccessDenied AccessDenied: null

30/01/2024 App was re-configured

30/01/2024 App was updated to v1.12.4

30/01/2024 Update started from v1.12.3 to v1.12.4

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (21104142961 bytes): AccessDenied AccessDenied: null

30/01/2024 Memory limit was set to 8589934592

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (20214423535 bytes): AccessDenied AccessDenied: null

30/01/2024 App is back online

30/01/2024 Memory limit was set to 4294967296

30/01/2024 App ran out of memory

29/01/2024 App ran out of memory

29/01/2024 Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (27315752703 bytes): AccessDenied AccessDenied: null

28/01/2024 App ran out of memory

28/01/2024 Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (15586703963 bytes): AccessDenied AccessDenied: null

20/01/2024 Automatic updates were disabled

22/12/2023 App was re-configured

22/12/2023 App was re-configured

19/12/2023 Label was set to "APES Social"

19/12/2023 CPU shares was set to 75%

19/12/2023 Memory limit was set to 2147483648

19/12/2023 Mastodon (package v1.12.3) was installed
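The archive sizes in these events can be pulled out and compared. A minimal Python sketch, assuming only the `(N bytes)` pattern visible in the messages above; `archive_sizes_gib` is a hypothetical helper, not part of Cloudron:

```python
import re
from typing import Iterable, List

# Matches the "(N bytes)" fragment in the "Error copying ..." event messages.
SIZE_RE = re.compile(r"\((\d+) bytes\)")


def archive_sizes_gib(lines: Iterable[str]) -> List[float]:
    """Return each archive size found in the event lines, in GiB rounded to 2 places."""
    sizes = []
    for line in lines:
        match = SIZE_RE.search(line)
        if match:  # lines such as "App ran out of memory" carry no size
            sizes.append(round(int(match.group(1)) / 1024 ** 3, 2))
    return sizes


events = [
    "28/01/2024 Cloudron backup errored with error: Error copying "
    "snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz "
    "(15586703963 bytes): AccessDenied AccessDenied: null",
    "28/01/2024 App ran out of memory",
    "29/01/2024 Cloudron backup errored with error: Error copying "
    "snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz "
    "(27315752703 bytes): AccessDenied AccessDenied: null",
]
print(archive_sizes_gib(events))  # [14.52, 25.44]
```

Every failing archive in this log is well above 1 GiB, which is the part size the later logs in this thread show for multipart transfers.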

-

I think, then, that you have corrupt media somewhere in that Mastodon instance. I've come across this, too: an app used to back up, then doesn't, and so often, despite little clear guidance in the logs, I could trace it back to some corrupt jpg, png, mov or something similar that was making the backup hang. Those few "out of memory" log entries suggest this, too. Something in that Mastodon instance is making the whole backup process hang. So I am not sure it is Cloudron (you said you have others already running, so not Cloudron).

FWIW, I have had this corrupt media issue on Cloudron, but also on other servers using different backup approaches. It's almost always some weird bit of media, or not enough memory.

-

Sorry I disagree the issue is Cloudron, if it was a mastodon or peertube issue surely all installs of mastodon or peertube would be affected.

So far I have 2 mastodons on this server on another server I have 6 running, non but one install is having this issue. They all use Linode (Akamai) s3 storage.

I also have 3 peer tubes installed and non of them have this issue.

The fact that the 1 mastodon install that has now developed this fault was backing up fine for 2 days after the installation then suddenly stop saying there is a permission and connection problem.

In the errors, I have seen 2 points of reference.

- AccessDenied AccessDenied: null

*{"stack":"BoxError: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null\n at done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Response.

Says to me there is something going on in that install in the above location that is stopping the transfer of this file or similar.

It has no references to timeouts, or authentication and the fact that we have 22 apps all saving backups to the same s3 storage provider and none but one app from one of our two servers running the same apps is having an issue, days to me it was possibly a issue with the install it's self.

The log from creation gives this.

18:29 Automatic backups were disabled

Yesterday Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (18896074444 bytes): AccessDenied AccessDenied: null

31/01/2024 App was re-configured

31/01/2024 Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24578670468 bytes): AccessDenied AccessDenied: null

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (24224413103 bytes): AccessDenied AccessDenied: null

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (22101377103 bytes): AccessDenied AccessDenied: null

30/01/2024 App was re-configured

30/01/2024 App was updated to v1.12.4

30/01/2024 Update started from v1.12.3 to v1.12.4

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (21104142961 bytes): AccessDenied AccessDenied: null

30/01/2024 Memory limit was set to 8589934592

30/01/2024 Cloudron update errored. Error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (20214423535 bytes): AccessDenied AccessDenied: null

30/01/2024 App is back online

30/01/2024 Memory limit was set to 4294967296

30/01/2024 App ran out of memory

29/01/2024 App ran out of memory

29/01/2024 Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (27315752703 bytes): AccessDenied AccessDenied: null

28/01/2024 App ran out of memory

28/01/2024 Cloudron backup errored with error: Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (15586703963 bytes): AccessDenied AccessDenied: null

20/01/2024 Automatic updates were disabled

22/12/2023 App was re-configured

22/12/2023 App was re-configured

19/12/2023 Label was set to "APES Social"

19/12/2023 CPU shares was set to 75%

19/12/2023 Memory limit was set to 2147483648

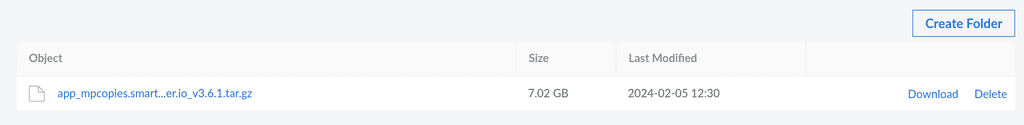

19/12/2023 Mastodon (package v1.12.3) was installed@mhgcic I think akamai is misleading us with the AccessDenied message. Can you tell me the size of the

717035b5-ff81-4367-b920-d0619a532992app ? My suspicion is that multi-part file copy is failing. Is it above 1GB? You can get the exact size withdu -hcs /home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992Can you also tell me which linode region you are using for storage? I can quickly try this myself first before debugging on your setup .
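A rough Python equivalent of that size check, which also estimates how many parts a multipart copy would need. It assumes the 1 GiB (1073741824-byte) part size that appears in the Cloudron logs in this thread; `tree_size` and `part_count` are hypothetical helpers. It sums apparent file sizes, so the total can differ a little from `du`, which reports disk blocks used.

```python
import os
import stat

# Assumption: 1 GiB parts, matching "partSize 1073741824" in the Cloudron logs.
PART_SIZE = 1024 ** 3


def tree_size(root: str) -> int:
    """Sum apparent sizes of regular files under root; symlinks are not followed."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                st = os.lstat(os.path.join(dirpath, name))
            except FileNotFoundError:
                continue  # file vanished while the app was running
            if stat.S_ISREG(st.st_mode):
                total += st.st_size
    return total


def part_count(size: int, part_size: int = PART_SIZE) -> int:
    """Parts needed to copy `size` bytes in `part_size` chunks (at least one)."""
    return max(1, (size + part_size - 1) // part_size)


if __name__ == "__main__":
    app_dir = "/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992"
    size = tree_size(app_dir)
    print(f"{size} bytes, {size / PART_SIZE:.2f} GiB, {part_count(size)} part(s)")
```

For the 11612115214-byte archive reported in the later error messages, `part_count` gives 11 parts.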


-


@girish I have messaged you the details. I have also sent 2 links: one to a manual backup log and one to an auto backup log from the app having the issue.

But this is where it seems to go wrong.

Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Copying with concurrency of 10"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Copying files from 0-1"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Copying (multipart) snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Copying part 1 - /apes-cloudron/snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz bytes=0-1073741823"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Copying part 2 - /apes-cloudron/snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz bytes=1073741824-2147483647"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Copying part 3 - /apes-cloudron/snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz bytes=2147483648-3221225471"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Retrying (1) multipart copy of snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz. Error: AccessDenied: null 403"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Aborting multipart copy of snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Retrying (1) multipart copy of snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz. Error: AccessDenied: null 403"} Feb 04 00:53:35box:tasks update 1757: {"percent":42.1764705882353,"message":"Retrying (1) multipart copy of snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz. Error: AccessDenied: null 403"} Feb 04 00:53:35box:storage/s3 copy: s3 copy error when copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz: AccessDenied: null

-
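The log shows the copy being split into 1 GiB byte ranges (`bytes=0-1073741823`, `bytes=1073741824-2147483647` and so on), with the retries then failing with `AccessDenied: null 403`. A minimal Python sketch of that range arithmetic, for checking which part numbers and ranges a given archive size produces. It does not talk to S3; in the S3 API, strings of this form go into the `CopySourceRange` of an UploadPartCopy request, where both ends are inclusive. `copy_ranges` is a hypothetical helper, not Cloudron's code, and the 1 GiB part size is an assumption read off the log.

```python
from typing import Iterator, Tuple

# Assumption: 1 GiB parts, matching the byte ranges in the log above.
PART_SIZE = 1024 ** 3


def copy_ranges(size: int, part_size: int = PART_SIZE) -> Iterator[Tuple[int, str]]:
    """Yield (part_number, range) pairs covering `size` bytes; ranges are inclusive."""
    for part_number, start in enumerate(range(0, size, part_size), start=1):
        end = min(start + part_size, size) - 1
        yield part_number, f"bytes={start}-{end}"


# An archive of 11612115214 bytes, the size in the later error messages.
for part_number, byte_range in list(copy_ranges(11612115214))[:3]:
    print(part_number, byte_range)
# 1 bytes=0-1073741823
# 2 bytes=1073741824-2147483647
# 3 bytes=2147483648-3221225471
```

The first three ranges match the log exactly; the last of the 11 parts would be `bytes=10737418240-11612115213`.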


@girish There is also an error when moving the app storage location.

Feb 04 07:38:45box:services setupAddons: setting up addon ldap with options {} Feb 04 07:38:45box:services setupAddons: setting up addon scheduler with options {"cleanup":{"schedule":"11 01 * * *","command":"/app/pkg/cleanup.sh"}} Feb 04 07:38:45box:tasks update 1764: {"percent":60,"message":"Moving data dir"} Feb 04 07:38:45box:apptask moveDataDir: migrating data from /mnt/APES-Cloudron-Apps/apes-social to /home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992/data Feb 04 07:38:45box:shell moveDataDir spawn: /usr/bin/sudo -S /home/yellowtent/box/src/scripts/mvvolume.sh /mnt/APES-Cloudron-Apps/apes-social /home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992/data Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (11612115214 bytes): AccessDenied AccessDenied: null

I also spotted the error at the beginning of a manual backup:

Feb 04 07:48:01box:services Backing up redis Feb 04 07:48:0211:M 04 Feb 2024 07:48:02.339 * DB saved on disk Feb 04 07:48:02box:services pipeRequestToFile: connected with status code 200 Feb 04 07:48:02box:backuptask snapshotApp: social.apes.org.uk took 3.595 seconds Feb 04 07:48:02box:backuptask runBackupUpload: adjusting heap size to 7936M Feb 04 07:48:02box:tasks update 1766: {"percent":30,"message":"Uploading app snapshot social.apes.org.uk"} Feb 04 07:48:02box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 spawn: /usr/bin/sudo -S -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_717035b5-ff81-4367-b920-d0619a532992 tgz {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} Feb 04 07:48:03box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-04T07:48:03.333Z box:backupupload Backing up {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} to snapshot/app_717035b5-ff81-4367-b920-d0619a532992 Feb 04 07:48:03box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-04T07:48:03.378Z box:backuptask upload: path snapshot/app_717035b5-ff81-4367-b920-d0619a532992 format tgz dataLayout {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} Feb 04 07:48:03box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-04T07:48:03.701Z box:backuptask upload: mount point status is {"state":"active"} Feb 04 07:48:03box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-04T07:48:03.702Z box:backuptask checkPreconditions: getting disk usage of /home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992 Feb 04 07:48:062024-02-04T07:48:06.225Z pid=21 tid=2py5 class=AccountRefreshWorker jid=781afa1c0547fa8e89c2393e INFO: start Feb 04 07:48:08I, [2024-02-04T07:48:08.403158 #21] INFO -- : [paperclip] Trying to link 
/tmp/8811dd3a1fe9e37f30449af1bb7af22920240204-21-avis8o.jpg to /tmp/91fd285ab7aa4bed1a85cc7b719a3c3b20240204-21-zuvgxs.jpg Feb 04 07:48:08I, [2024-02-04T07:48:08.403738 #21] INFO -- : Command :: file -b --mime '/tmp/91fd285ab7aa4bed1a85cc7b719a3c3b20240204-21-zuvgxs.jpg' Feb 04 07:48:08I, [2024-02-04T07:48:08.427840 #21] INFO -- : [paperclip] Trying to link /tmp/8811dd3a1fe9e37f30449af1bb7af22920240204-21-avis8o.jpg to /tmp/4d2f96832408c1bfb04c1bc49e8f2fa620240204-21-2zmbf6.jpg Feb 04 07:48:08I, [2024-02-04T07:48:08.475345 #21] INFO -- : [paperclip] Trying to link /tmp/4d7addef9fa136018b13f110e3e7844920240204-21-1tqmqn.jpg to /tmp/20e8de22e1ff7ae9852e8c67504a6ece20240204-21-qkr69r.jpg Feb 04 07:48:08I, [2024-02-04T07:48:08.475686 #21] INFO -- : Command :: file -b --mime '/tmp/20e8de22e1ff7ae9852e8c67504a6ece20240204-21-qkr69r.jpg' Feb 04 07:48:08I, [2024-02-04T07:48:08.480197 #21] INFO -- : [paperclip] Trying to link /tmp/4d7addef9fa136018b13f110e3e7844920240204-21-1tqmqn.jpg to /tmp/606cb0cd88fee865cad88da6e4b7011320240204-21-sdwwx5.jpg Feb 04 07:48:102024-02-04T07:48:10.301Z pid=21 tid=2rvd class=ActivityPub::SynchronizeFeaturedCollectionWorker jid=981d062c8b2b10c44cb13388 INFO: start Feb 04 07:48:102024-02-04T07:48:10.306Z pid=21 tid=2py5 class=AccountRefreshWorker jid=781afa1c0547fa8e89c2393e elapsed=4.081 INFO: done Feb 04 07:48:102024-02-04T07:48:10.306Z pid=21 tid=2py5 class=ActivityPub::SynchronizeFeaturedTagsCollectionWorker jid=dc25e94a5590aba7bf09e75b INFO: start Feb 04 07:48:102024-02-04T07:48:10.921Z pid=21 tid=2py5 class=ActivityPub::SynchronizeFeaturedTagsCollectionWorker jid=dc25e94a5590aba7bf09e75b elapsed=0.615 INFO: done Feb 04 07:48:102024-02-04T07:48:10.938Z pid=21 tid=2rvd class=ActivityPub::SynchronizeFeaturedCollectionWorker jid=981d062c8b2b10c44cb13388 elapsed=0.637 INFO: done Feb 04 07:48:10I, [2024-02-04T07:48:10.275191 #21] INFO -- : [paperclip] Trying to link /tmp/4d2f96832408c1bfb04c1bc49e8f2fa620240204-21-2zmbf6.jpg to 
/tmp/91fd285ab7aa4bed1a85cc7b719a3c3b20240204-21-e83oo7.jpg Feb 04 07:48:10I, [2024-02-04T07:48:10.275478 #21] INFO -- : Command :: file -b --mime '/tmp/91fd285ab7aa4bed1a85cc7b719a3c3b20240204-21-e83oo7.jpg' Feb 04 07:48:10I, [2024-02-04T07:48:10.278122 #21] INFO -- : [paperclip] Trying to link /tmp/606cb0cd88fee865cad88da6e4b7011320240204-21-sdwwx5.jpg to /tmp/20e8de22e1ff7ae9852e8c67504a6ece20240204-21-lppj2s.jpg Feb 04 07:48:10I, [2024-02-04T07:48:10.278387 #21] INFO -- : Command :: file -b --mime '/tmp/20e8de22e1ff7ae9852e8c67504a6ece20240204-21-lppj2s.jpg' Feb 04 07:48:13box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-04T07:48:13.787Z box:backuptask checkPreconditions: total required=12333489203 available=undefined Feb 04 07:48:13box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-04T07:48:13.787Z box:backupformat/tgz upload: Uploading {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} to snapshot/app_717035b5-ff81-4367-b920-d0619a532992 Error copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz (11612115214 bytes): AccessDenied AccessDenied: null

-

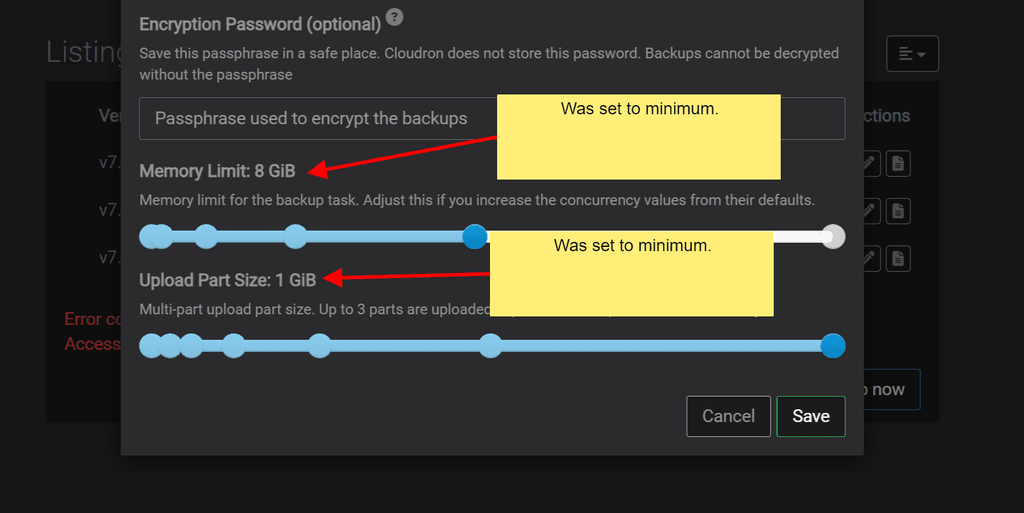

I have found a workaround, and maybe confirmation of what's causing the issue.

I changed these settings.

The backups still failed.

I then changed the backup format from tarball to rsync, and it is backing up fine.

This seems to confirm it has to do with the backup file being split into parts, or maybe with permissions on the server stopping the upload to S3?

Backups may take longer, but I will keep the rsync format for now.

-

@mhgcic thanks for the update. I will take a look into testing with the Frankfurt region for multipart copies.

-

Hmm, it works for me with 7GB files in Frankfurt.

If you hit this again, contact us on support@cloudron.io, so we can debug this on the server.

-

girish has marked this topic as solved on


-


Thanks. I really feel like it is something going on in the Cloudron server, not so much S3; I have other apps backing up just like this one app.

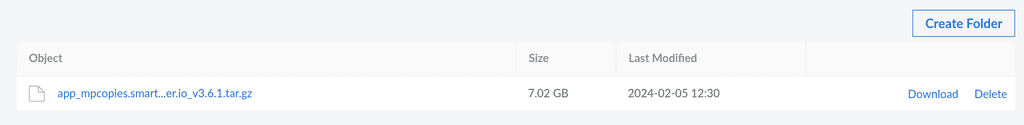

I have been investigating further.

I removed the relays, waited 2 days, and switched backups back to the tarball format.

The app size has gone from just over 10GB to approx 2GB. Backups are now working as a tarball.

Feb 06 08:13:39box:services pipeRequestToFile: connected with status code 200 Feb 06 08:13:39box:backuptask snapshotApp: social.apes.org.uk took 3.067 seconds Feb 06 08:13:39box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading app snapshot social.apes.org.uk"} Feb 06 08:13:39box:backuptask runBackupUpload: adjusting heap size to 7936M Feb 06 08:13:39box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 spawn: /usr/bin/sudo -S -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_717035b5-ff81-4367-b920-d0619a532992 tgz {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.458Z box:backupupload Backing up {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} to snapshot/app_717035b5-ff81-4367-b920-d0619a532992 Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.494Z box:backuptask upload: path snapshot/app_717035b5-ff81-4367-b920-d0619a532992 format tgz dataLayout {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.675Z box:backuptask upload: mount point status is {"state":"active"} Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.675Z box:backuptask checkPreconditions: getting disk usage of /home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992 Feb 06 08:13:45box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:45.672Z box:backuptask checkPreconditions: total required=1933915851 available=undefined Feb 06 08:13:45box:backupformat/tgz upload: Uploading 
{"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} to snapshot/app_717035b5-ff81-4367-b920-d0619a532992 Feb 06 08:13:55box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 40M@4MBps (social.apes.org.uk)"} Feb 06 08:14:05box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 151M@11MBps (social.apes.org.uk)"} Feb 06 08:14:15box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 261M@11MBps (social.apes.org.uk)"} Feb 06 08:14:25box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 386M@13MBps (social.apes.org.uk)"} Feb 06 08:14:35box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 541M@16MBps (social.apes.org.uk)"} Feb 06 08:14:45box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 719M@18MBps (social.apes.org.uk)"} Feb 06 08:14:55box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 900M@18MBps (social.apes.org.uk)"} Feb 06 08:15:06box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1029M@13MBps (social.apes.org.uk)"} Feb 06 08:15:16box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1206M@18MBps (social.apes.org.uk)"} Feb 06 08:15:26box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1389M@18MBps (social.apes.org.uk)"} Feb 06 08:15:36box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1568M@18MBps (social.apes.org.uk)"} Feb 06 08:16:09box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:16:09.039Z box:storage/s3 Uploaded snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz with partSize 1073741824: 
{"Location":"eu-central-1.linodeobjects.com/apes-cloudron/snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz","Bucket":"apes-cloudron","Key":"snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz","ETag":""} Feb 06 08:16:09box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:16:09.040Z box:backupupload upload completed. error: null Feb 06 08:16:09box:backuptask runBackupUpload: result - {"result":""} Feb 06 08:16:09box:backuptask uploadAppSnapshot: social.apes.org.uk uploaded to snapshot/app_717035b5-ff81-4367-b920-d0619a532992. 149.349 seconds Feb 06 08:16:09box:backuptask rotateAppBackup: rotating social.apes.org.uk to path 2024-02-06-081327-325/app_social.apes.org.uk_v1.12.5 Feb 06 08:16:09box:tasks update 1793: {"percent":42.1764705882353,"message":"Copying with concurrency of 10"} Feb 06 08:16:09box:tasks update 1793: {"percent":42.1764705882353,"message":"Copying files from 0-1"} Feb 06 08:16:09box:tasks update 1793: {"percent":42.1764705882353,"message":"Copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz"} Feb 06 08:16:12box:tasks update 1793: {"percent":42.1764705882353,"message":"Copied 1 files with error: null"} Feb 06 08:16:12box:backuptask copy: copied successfully to 2024-02-06-081327-325/app_social.apes.org.uk_v1.12.5. Took 3.156 seconds Feb 06 08:16:12box:backuptask fullBackup: app social.apes.org.uk backup finished. Took 155.627 seconds

So I think:

- A file created by one of the relays was causing the issue; now that all relays have been removed, backups work.

- The split backup files do not have the correct permissions at creation time to be uploaded to S3.

- A timeout?

- One other possibility: I had some relays disabled because they were producing Chinese-language posts. Could these disabled relays be causing an issue with uploading the backup?

I am going to re-add relays until the issue comes up again.

-

Thanks, I really feel like it is something going on on the cloudron server not so much s3, I have other apps backing up just as this one app.

I have been investigating further.

I removed relays, waited 2 day, and put backups back to the Tarball method.

The app size has gone from just over 10GB to approx 2GB. Backups are now working as a tarball.

Feb 06 08:13:39box:services pipeRequestToFile: connected with status code 200
Feb 06 08:13:39box:backuptask snapshotApp: social.apes.org.uk took 3.067 seconds
Feb 06 08:13:39box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading app snapshot social.apes.org.uk"}
Feb 06 08:13:39box:backuptask runBackupUpload: adjusting heap size to 7936M
Feb 06 08:13:39box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 spawn: /usr/bin/sudo -S -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_717035b5-ff81-4367-b920-d0619a532992 tgz {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]}
Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.458Z box:backupupload Backing up {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} to snapshot/app_717035b5-ff81-4367-b920-d0619a532992
Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.494Z box:backuptask upload: path snapshot/app_717035b5-ff81-4367-b920-d0619a532992 format tgz dataLayout {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]}
Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.675Z box:backuptask upload: mount point status is {"state":"active"}
Feb 06 08:13:40box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:40.675Z box:backuptask checkPreconditions: getting disk usage of /home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992
Feb 06 08:13:45box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:13:45.672Z box:backuptask checkPreconditions: total required=1933915851 available=undefined
Feb 06 08:13:45box:backupformat/tgz upload: Uploading {"localRoot":"/home/yellowtent/appsdata/717035b5-ff81-4367-b920-d0619a532992","layout":[]} to snapshot/app_717035b5-ff81-4367-b920-d0619a532992
Feb 06 08:13:55box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 40M@4MBps (social.apes.org.uk)"}
Feb 06 08:14:05box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 151M@11MBps (social.apes.org.uk)"}
Feb 06 08:14:15box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 261M@11MBps (social.apes.org.uk)"}
Feb 06 08:14:25box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 386M@13MBps (social.apes.org.uk)"}
Feb 06 08:14:35box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 541M@16MBps (social.apes.org.uk)"}
Feb 06 08:14:45box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 719M@18MBps (social.apes.org.uk)"}
Feb 06 08:14:55box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 900M@18MBps (social.apes.org.uk)"}
Feb 06 08:15:06box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1029M@13MBps (social.apes.org.uk)"}
Feb 06 08:15:16box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1206M@18MBps (social.apes.org.uk)"}
Feb 06 08:15:26box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1389M@18MBps (social.apes.org.uk)"}
Feb 06 08:15:36box:tasks update 1793: {"percent":42.1764705882353,"message":"Uploading backup 1568M@18MBps (social.apes.org.uk)"}
Feb 06 08:16:09box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:16:09.039Z box:storage/s3 Uploaded snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz with partSize 1073741824: {"Location":"eu-central-1.linodeobjects.com/apes-cloudron/snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz","Bucket":"apes-cloudron","Key":"snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz","ETag":""}
Feb 06 08:16:09box:shell backup-snapshot/app_717035b5-ff81-4367-b920-d0619a532992 (stderr): 2024-02-06T08:16:09.040Z box:backupupload upload completed. error: null
Feb 06 08:16:09box:backuptask runBackupUpload: result - {"result":""}
Feb 06 08:16:09box:backuptask uploadAppSnapshot: social.apes.org.uk uploaded to snapshot/app_717035b5-ff81-4367-b920-d0619a532992. 149.349 seconds
Feb 06 08:16:09box:backuptask rotateAppBackup: rotating social.apes.org.uk to path 2024-02-06-081327-325/app_social.apes.org.uk_v1.12.5
Feb 06 08:16:09box:tasks update 1793: {"percent":42.1764705882353,"message":"Copying with concurrency of 10"}
Feb 06 08:16:09box:tasks update 1793: {"percent":42.1764705882353,"message":"Copying files from 0-1"}
Feb 06 08:16:09box:tasks update 1793: {"percent":42.1764705882353,"message":"Copying snapshot/app_717035b5-ff81-4367-b920-d0619a532992.tar.gz"}
Feb 06 08:16:12box:tasks update 1793: {"percent":42.1764705882353,"message":"Copied 1 files with error: null"}
Feb 06 08:16:12box:backuptask copy: copied successfully to 2024-02-06-081327-325/app_social.apes.org.uk_v1.12.5. Took 3.156 seconds
Feb 06 08:16:12box:backuptask fullBackup: app social.apes.org.uk backup finished. Took 155.627 seconds

So I think it could be one of the following:

- A file created by one of the relays is causing the issue, which is why backups work now that all relays have been removed.

- When the split backup files are created, they do not have the permissions needed to upload them to S3.

- Timeout?

- Another possibility: I had disabled some relays because they were producing Chinese-language posts. Could those disabled relays be causing the backup upload to fail?

I am going to re-add the relays until the issue comes up again.
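One way to sanity-check the "split files" and size angles is plain multipart arithmetic. The sketch below assumes AWS S3's documented multipart limits (at most 10,000 parts; each part between 5 MiB and 5 GiB, with the last part allowed to be smaller); whether Akamai/Linode Object Storage enforces exactly the same values is an assumption. It also assumes the failing 24578670468-byte snapshot was uploaded with the same partSize 1073741824 seen in the successful log above. The helper name `multipart_part_count` is hypothetical.

```python
import math

# AWS S3 multipart limits (assumed to also apply to Akamai/Linode Object Storage)
MAX_PARTS = 10_000
MIN_PART_SIZE = 5 * 1024 ** 2  # 5 MiB (the last part may be smaller)
MAX_PART_SIZE = 5 * 1024 ** 3  # 5 GiB


def multipart_part_count(object_size: int, part_size: int) -> int:
    """Return how many parts a multipart upload of object_size bytes needs.

    Hypothetical helper for illustration; raises ValueError if the part
    size or the resulting part count falls outside the limits above.
    """
    if not MIN_PART_SIZE <= part_size <= MAX_PART_SIZE:
        raise ValueError(f"part size {part_size} outside S3 limits")
    parts = max(1, math.ceil(object_size / part_size))
    if parts > MAX_PARTS:
        raise ValueError(f"{parts} parts exceeds the {MAX_PARTS}-part limit")
    return parts


PART_SIZE = 1073741824  # partSize from the successful upload log (1 GiB)
for label, size in [("failing snapshot", 24578670468), ("working snapshot", 1933915851)]:
    gib = size / 1024 ** 3
    print(f"{label}: {gib:.1f} GiB -> {multipart_part_count(size, PART_SIZE)} parts")
```

Both snapshots need only a couple of dozen parts at most, far below the part limit, so the part count alone would not explain the failure; this fits the later observation that the upload succeeded and the copy step failed.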

@mhgcic I suspect this has to do with the final file size of the backup. At least per the initial failure logs, the backup was created and uploaded to S3 without problems; it was failing at the "remote copy" step. That step is known to be flaky across S3 providers. I am now testing with more than 10GB. Yesterday, I stopped at 7GB!
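For context on why size could matter specifically in the remote copy: AWS documents that a single CopyObject request handles objects only up to 5 GB (treated here as 5 GiB), and larger objects must be copied as a multipart upload whose parts are filled with UploadPartCopy, each taking an inclusive `x-amz-copy-source-range` such as `bytes=0-1073741823`. The sketch below only plans those ranges; it is not Cloudron's `s3.js` code, and whether Cloudron or Akamai/Linode follow exactly this path is an assumption. The helper names are hypothetical.

```python
# AWS S3 single-request CopyObject ceiling, treated as 5 GiB.
# Assumption: Akamai/Linode Object Storage uses the same limit.
SINGLE_COPY_LIMIT = 5 * 1024 ** 3


def needs_multipart_copy(object_size: int) -> bool:
    """Objects above the single-request limit must be copied part by part."""
    return object_size > SINGLE_COPY_LIMIT


def copy_source_ranges(object_size: int, part_size: int) -> list[str]:
    """Split an object into inclusive byte ranges for multipart UploadPartCopy.

    Each string uses the format of the x-amz-copy-source-range header,
    e.g. "bytes=0-1073741823". Hypothetical helper for illustration.
    """
    if object_size <= 0 or part_size <= 0:
        raise ValueError("sizes must be positive")
    ranges = []
    for start in range(0, object_size, part_size):
        end = min(start + part_size, object_size) - 1  # ranges are inclusive
        ranges.append(f"bytes={start}-{end}")
    return ranges


size = 24578670468  # failing snapshot size from the original error log
print(needs_multipart_copy(size))
ranges = copy_source_ranges(size, 1073741824)
print(len(ranges), ranges[0], ranges[-1])
```

Under these assumptions the 2GB snapshot fits in one CopyObject call, while both the 24GB snapshot and the 7GB and 11GB test files go through the multi-request path, which is where provider-specific differences would be expected to surface.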

-

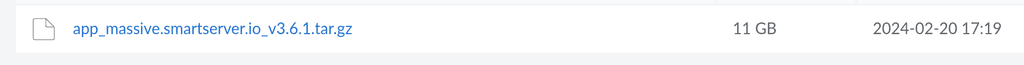

To follow up, 11GB worked fine too.