-

Context:

Corporate environment behind a proxy.

Ubuntu VM running Ubuntu 22.

Prep:

Proxy for APT:

--> /etc/apt/apt.conf.d/aptproxy

```
Acquire::http::Proxy "http://iproxy:8080";
Acquire::https::Proxy "http://iproxy:8080";
```

Docker will need to pull images, which won't work through the proxy as-is, so create /etc/systemd/system/docker.service.d/http-proxy.conf:

```
[Service]
Environment="HTTP_PROXY=http://iproxy:8080"
Environment="HTTPS_PROXY=http://iproxy:8080"
Environment="NO_PROXY=localhost,127.0.0.1"
```

Next, Wget and Curl:
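The Docker drop-in step can be scripted so it is easy to redo on a fresh VM. A minimal sketch, assuming the same `http://iproxy:8080` proxy; `write_docker_proxy_dropin` is a hypothetical helper name, not part of Cloudron. dockerd only picks up a new drop-in after `systemctl daemon-reload` and a Docker restart.

```shell
#!/usr/bin/env bash
# Sketch: write a systemd drop-in so dockerd reaches the registry through the proxy.
# write_docker_proxy_dropin is a hypothetical helper, not part of Cloudron.
set -euo pipefail

write_docker_proxy_dropin() {
    local dropin_dir="$1"   # normally /etc/systemd/system/docker.service.d
    local proxy_url="$2"    # assumed corporate proxy, e.g. http://iproxy:8080
    mkdir -p "${dropin_dir}"
    cat > "${dropin_dir}/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=${proxy_url}"
Environment="HTTPS_PROXY=${proxy_url}"
Environment="NO_PROXY=localhost,127.0.0.1"
EOF
}

# Usage (as root):
#   write_docker_proxy_dropin /etc/systemd/system/docker.service.d http://iproxy:8080
#   systemctl daemon-reload && systemctl restart docker
#   systemctl show --property=Environment docker   # should list the proxy variables
```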

.wgetrc and .curlrc in the home directories of both the cloudron user of the VM and root (/root/).
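On recent Ubuntu releases `sudo` sets HOME to /root, so curl, wget and npm run under sudo read root's rc files, not the invoking user's. A minimal sketch that copies the proxy rc files from one home directory to another; `copy_proxy_rcfiles` is a hypothetical helper, and the file list (.wgetrc, .curlrc, .npmrc) simply matches the files used in this post.

```shell
#!/usr/bin/env bash
# Sketch: copy proxy rc files so commands run via sudo (HOME=/root) see the same proxy.
# copy_proxy_rcfiles is a hypothetical helper; missing files are skipped.
set -euo pipefail

copy_proxy_rcfiles() {
    local src_home="$1" dst_home="$2" f
    for f in .wgetrc .curlrc .npmrc; do
        if [[ -f "${src_home}/${f}" ]]; then
            # mode 600: .npmrc in particular may later hold credentials
            install -m 600 "${src_home}/${f}" "${dst_home}/${f}"
        fi
    done
}

# Usage (as root):
#   copy_proxy_rcfiles /home/cloudron /root
```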

Even so, I couldn't get the "check version" part of the script to work: it couldn't fetch the release information. So I commented out those parts, downloaded the zip file of the latest release from GitLab, and unzipped it into /home/cloudron/, from where I was going to run cloudron-setup as root.
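To check whether the "Checking version" step fails only because of the proxy, the same request can be made by hand with the proxy passed explicitly. A minimal sketch, assuming the endpoint and JSON fields used by the (commented-out) version-check code in cloudron-setup: `/api/v1/releases?boxVersion=…` on the default `installServerOrigin` (https://api.cloudron.io), `version`, and `info.sourceTarballUrl`. The helper names are hypothetical. `curl -q` as the first argument makes curl ignore ~/.curlrc, so only `--proxy` applies; if your proxy intercepts TLS you may also need `--insecure`, as in the .curlrc used later in this post.

```shell
#!/usr/bin/env bash
# Sketch: fetch Cloudron release info through an explicit proxy and read fields from it.
# Endpoint and field names come from cloudron-setup; helper names are hypothetical.
set -euo pipefail

fetch_release_json() {
    local proxy_url="$1" version="${2:-}"
    # -q must be the first option: it disables ~/.curlrc
    curl -q --fail --silent --show-error --connect-timeout 20 \
        --proxy "${proxy_url}" \
        "https://api.cloudron.io/api/v1/releases?boxVersion=${version}"
}

# Print one (dot-separated) field of the JSON read from stdin, e.g. info.sourceTarballUrl
release_field() {
    python3 -c '
import json, sys
obj = json.load(sys.stdin)
for key in sys.argv[1].split("."):
    obj = obj[key]
print(obj)
' "$1"
}

# Usage:
#   json="$(fetch_release_json http://iproxy:8080)"
#   echo "${json}" | release_field version
#   echo "${json}" | release_field info.sourceTarballUrl
```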

I then defined variables for the zip and the unzipped folder, and made sure box_src_tmp_dir points to the right location:

```
#!/bin/bash

set -eu -o pipefail

function exitHandler() {
    rm -f /etc/update-motd.d/91-cloudron-install-in-progress
}

trap exitHandler EXIT

vergte() {
    greater_version=$(echo -e "$1\n$2" | sort -rV | head -n1)
    [[ "$1" == "${greater_version}" ]] && return 0 || return 1
}

# change this to a hash when we make a upgrade release
readonly LOG_FILE="/var/log/cloudron-setup.log"
readonly MINIMUM_DISK_SIZE_GB="18" # this is the size of "/" and required to fit in docker images 18 is a safe bet for different reporting on 20GB min
readonly MINIMUM_MEMORY="949" # this is mostly reported for 1GB main memory (DO 957, EC2 949, Linode 989, Serverdiscounter.com 974)

readonly curl="curl --fail --connect-timeout 20 --retry 10 --retry-delay 2 --max-time 2400"

# copied from cloudron-resize-fs.sh
readonly rootfs_type=$(LC_ALL=C df --output=fstype / | tail -n1)
readonly physical_memory=$(LC_ALL=C free -m | awk '/Mem:/ { print $2 }')
readonly disk_size_bytes=$(LC_ALL=C df --output=size / | tail -n1)
readonly disk_size_gb=$((${disk_size_bytes}/1024/1024))

readonly RED='\033[31m'
readonly GREEN='\033[32m'
readonly DONE='\033[m'

# verify the system has minimum requirements met
if [[ "${rootfs_type}" != "ext4" && "${rootfs_type}" != "xfs" ]]; then
    echo "Error: Cloudron requires '/' to be ext4 or xfs" # see #364
    exit 1
fi

if [[ "${physical_memory}" -lt "${MINIMUM_MEMORY}" ]]; then
    echo "Error: Cloudron requires atleast 1GB physical memory"
    exit 1
fi

if [[ "${disk_size_gb}" -lt "${MINIMUM_DISK_SIZE_GB}" ]]; then
    echo "Error: Cloudron requires atleast 20GB disk space (Disk space on / is ${disk_size_gb}GB)"
    exit 1
fi

if [[ "$(uname -m)" != "x86_64" ]]; then
    echo "Error: Cloudron only supports amd64/x86_64"
    exit 1
fi

if cvirt=$(systemd-detect-virt --container); then
    echo "Error: Cloudron does not support ${cvirt}, only runs on bare metal or with full hardware virtualization"
    exit 1
fi

# do not use is-active in case box service is down and user attempts to re-install
if systemctl cat box.service >/dev/null 2>&1; then
    echo "Error: Cloudron is already installed. To reinstall, start afresh"
    exit 1
fi

provider="generic"
requestedVersion=""
installServerOrigin="https://api.cloudron.io"
apiServerOrigin="https://api.cloudron.io"
webServerOrigin="https://cloudron.io"
consoleServerOrigin="https://console.cloudron.io"
sourceTarballUrl=""
rebootServer="true"
setupToken="" # this is a OTP for securing an installation (https://forum.cloudron.io/topic/6389/add-password-for-initial-configuration)
appstoreSetupToken=""
cloudronId=""
appstoreApiToken=""
redo="true"

args=$(getopt -o "" -l "help,provider:,version:,env:,skip-reboot,generate-setup-token,setup-token:,redo" -n "$0" -- "$@")
eval set -- "${args}"

while true; do
    case "$1" in
    --help) echo "See https://docs.cloudron.io/installation/ on how to install Cloudron"; exit 0;;
    --provider) provider="$2"; shift 2;;
    --version) requestedVersion="$2"; shift 2;;
    --env)
        if [[ "$2" == "dev" ]]; then
            apiServerOrigin="https://api.dev.cloudron.io"
            webServerOrigin="https://dev.cloudron.io"
            consoleServerOrigin="https://console.dev.cloudron.io"
            installServerOrigin="https://api.dev.cloudron.io"
        elif [[ "$2" == "staging" ]]; then
            apiServerOrigin="https://api.staging.cloudron.io"
            webServerOrigin="https://staging.cloudron.io"
            consoleServerOrigin="https://console.staging.cloudron.io"
            installServerOrigin="https://api.staging.cloudron.io"
        elif [[ "$2" == "unstable" ]]; then
            installServerOrigin="https://api.dev.cloudron.io"
        fi
        shift 2;;
    --skip-reboot) rebootServer="false"; shift;;
    --redo) redo="true"; shift;;
    --setup-token) appstoreSetupToken="$2"; shift 2;;
    --generate-setup-token) setupToken="$(openssl rand -hex 10)"; shift;;
    --) break;;
    *) echo "Unknown option $1"; exit 1;;
    esac
done

# Only --help works as non-root
if [[ ${EUID} -ne 0 ]]; then
    echo "This script should be run as root." > /dev/stderr
    exit 1
fi

# Only --help works with mismatched ubuntu
ubuntu_version=$(lsb_release -rs)
if [[ "${ubuntu_version}" != "16.04" && "${ubuntu_version}" != "18.04" && "${ubuntu_version}" != "20.04" && "${ubuntu_version}" != "22.04" ]]; then
    echo "Cloudron requires Ubuntu 18.04, 20.04, 22.04" > /dev/stderr
    exit 1
fi

if which nginx >/dev/null || which docker >/dev/null || which node > /dev/null; then
    if [[ "${redo}" == "false" ]]; then
        echo "Error: Some packages like nginx/docker/nodejs are already installed. Cloudron requires specific versions of these packages and will install them as part of its installation. Please start with a fresh Ubuntu install and run this script again." > /dev/stderr
        exit 1
    fi
fi

# Install MOTD file for stack script style installations. this is removed by the trap exit handler. Heredoc quotes prevents parameter expansion
cat > /etc/update-motd.d/91-cloudron-install-in-progress <<'EOF'
#!/bin/bash

printf "**********************************************************************\n\n"
printf "\t\t\tWELCOME TO CLOUDRON\n"
printf "\t\t\t-------------------\n"
printf '\n\e[1;32m%-6s\e[m\n\n' "Cloudron is installing. Run 'tail -f /var/log/cloudron-setup.log' to view progress."
printf "Cloudron overview - https://docs.cloudron.io/ \n"
printf "Cloudron setup - https://docs.cloudron.io/installation/#setup \n"
printf "\nFor help and more information, visit https://forum.cloudron.io\n\n"
printf "**********************************************************************\n"
EOF

chmod +x /etc/update-motd.d/91-cloudron-install-in-progress

# workaround netcup setting immutable bit. can be removed in 8.0
if lsattr -l /etc/resolv.conf 2>/dev/null | grep -q Immutable; then
    chattr -i /etc/resolv.conf
fi

# Can only write after we have confirmed script has root access
echo "Running cloudron-setup with args : $@" > "${LOG_FILE}"

echo ""
echo "##############################################"
echo " Cloudron Setup (${requestedVersion:-latest})"
echo "##############################################"
echo ""
echo " Follow setup logs in a second terminal with:"
echo " $ tail -f ${LOG_FILE}"
echo ""
echo " Join us at https://forum.cloudron.io for any questions."
echo ""

echo "=> Updating apt and installing script dependencies"
if ! apt-get update &>> "${LOG_FILE}"; then
    echo "Could not update package repositories. See ${LOG_FILE}"
    exit 1
fi

if ! DEBIAN_FRONTEND=noninteractive apt-get -o Dpkg::Options::="--force-confdef" -o Dpkg::Options::="--force-confold" -y install --no-install-recommends curl python3 ubuntu-standard software-properties-common -y &>> "${LOG_FILE}"; then
    echo "Could not install setup dependencies (curl). See ${LOG_FILE}"
    exit 1
fi

echo "=> Validating setup token"
if [[ -n "${appstoreSetupToken}" ]]; then
    if ! httpCode=$(curl -sX POST -H "Content-type: application/json" -o /tmp/response.json -w "%{http_code}" --data "{\"setupToken\": \"${appstoreSetupToken}\"}" "${apiServerOrigin}/api/v1/cloudron_setup_done"); then
        echo "Could not reach ${apiServerOrigin} to complete setup"
        exit 1
    fi
    if [[ "${httpCode}" != "200" ]]; then
        echo -e "Failed to validate setup token.\n$(cat /tmp/response.json)"
        exit 1
    fi

    setupResponse=$(cat /tmp/response.json)
    cloudronId=$(echo "${setupResponse}" | python3 -c 'import json,sys;obj=json.load(sys.stdin);print(obj["cloudronId"])')
    appstoreApiToken=$(echo "${setupResponse}" | python3 -c 'import json,sys;obj=json.load(sys.stdin);print(obj["cloudronToken"])')
fi

echo "=> Checking version"
#if ! releaseJson=$($curl -s "${installServerOrigin}/api/v1/releases?boxVersion=${requestedVersion}"); then
#    echo "Failed to get release information"
#    exit 1
#fi

requestedVersion="7.7.1"
version="7.7.1"

# if [[ "$requestedVersion" == "" ]]; then
#     version=$(echo "${releaseJson}" | python3 -c 'import json,sys;obj=json.load(sys.stdin);print(obj["version"])')
# else
#     version="${requestedVersion}"
# fi

# if vergte "${version}" "7.5.99"; then
#     if ! grep -q avx /proc/cpuinfo; then
#         echo "Cloudron version ${version} requires AVX support in the CPU. No avx found in /proc/cpuinfo"
#         exit 1
#     fi
# fi

# if ! sourceTarballUrl=$(echo "${requestedVersion}" | python3 -c 'import json,sys;obj=json.load(sys.stdin);print(obj["info"]["sourceTarballUrl"])'); then
#     echo "No source code for version '${requestedVersion:-latest}'"
#     exit 1
# fi

# echo "=> Downloading Cloudron version ${version} ..."
# box_src_tmp_dir=$(mktemp -dt box-src-XXXXXX)

# if ! $curl -sLk "${sourceTarballUrl}" | tar -zxf - -C "${box_src_tmp_dir}"; then
#     echo "Could not download source tarball. See ${LOG_FILE} for details"
#     exit 1
# fi

# echo -n "=> Installing base dependencies (this takes some time) ..."
# init_ubuntu_script=$(test -f "${box_src_tmp_dir}/scripts/init-ubuntu.sh" && echo "${box_src_tmp_dir}/scripts/init-ubuntu.sh" || echo "${box_src_tmp_dir}/baseimage/initializeBaseUbuntuImage.sh")
# if ! /bin/bash "${init_ubuntu_script}" &>> "${LOG_FILE}"; then
#     echo "Init script failed. See ${LOG_FILE} for details"
#     exit 1
# fi
# echo ""

# Define the URL of the Cloudron release file
releaseZip="box-v7.7.1.zip"

# Create a temporary directory to extract the release file
box_src_tmp_dir=box-v7.7.1

# Extract the release file
# echo "=> Extracting Cloudron release v7.7.1 ..."
# if ! unzip -q "${releaseZip}" -d "${box_src_tmp_dir}"; then
#     echo "Could not extract Cloudron release file. See ${LOG_FILE} for details"
#     exit 1
# fi

# Check if init script exists and run it
echo -n "=> Installing base dependencies (this takes some time) ..."
init_ubuntu_script=$(test -f "${box_src_tmp_dir}/scripts/init-ubuntu.sh" && echo "${box_src_tmp_dir}/scripts/init-ubuntu.sh" || echo "${box_src_tmp_dir}/baseimage/initializeBaseUbuntuImage.sh")
if ! /bin/bash "${init_ubuntu_script}" &>> "${LOG_FILE}"; then
    echo "Init script failed. See ${LOG_FILE} for details"
    exit 1
fi
echo ""

# The provider flag is still used for marketplace images
mkdir -p /etc/cloudron
echo "${provider}" > /etc/cloudron/PROVIDER
[[ ! -z "${setupToken}" ]] && echo "${setupToken}" > /etc/cloudron/SETUP_TOKEN

echo -n "=> Installing Cloudron version ${version} (this takes some time) ..."
if ! /bin/bash "${box_src_tmp_dir}/scripts/installer.sh" &>> "${LOG_FILE}"; then
    echo "Failed to install cloudron. See ${LOG_FILE} for details"
    exit 1
fi
echo ""

mysql -uroot -ppassword -e "REPLACE INTO box.settings (name, value) VALUES ('api_server_origin', '${apiServerOrigin}');" 2>/dev/null
mysql -uroot -ppassword -e "REPLACE INTO box.settings (name, value) VALUES ('web_server_origin', '${webServerOrigin}');" 2>/dev/null
mysql -uroot -ppassword -e "REPLACE INTO box.settings (name, value) VALUES ('console_server_origin', '${consoleServerOrigin}');" 2>/dev/null

if [[ -n "${appstoreSetupToken}" ]]; then
    mysql -uroot -ppassword -e "REPLACE INTO box.settings (name, value) VALUES ('cloudron_id', '${cloudronId}');" 2>/dev/null
    mysql -uroot -ppassword -e "REPLACE INTO box.settings (name, value) VALUES ('appstore_api_token', '${appstoreApiToken}');" 2>/dev/null
fi

echo -n "=> Waiting for cloudron to be ready (this takes some time) ..."
while true; do
    echo -n "."
    if status=$($curl -k -s -f "http://localhost:3000/api/v1/cloudron/status" 2>/dev/null); then
        break # we are up and running
    fi
    sleep 10
done

ip4=$(curl -s -k --fail --connect-timeout 10 --max-time 10 https://ipv4.api.cloudron.io/api/v1/helper/public_ip | sed -n -e 's/.*"ip": "\(.*\)"/\1/p' || true)
ip6=$(curl -s -k --fail --connect-timeout 10 --max-time 10 https://ipv6.api.cloudron.io/api/v1/helper/public_ip | sed -n -e 's/.*"ip": "\(.*\)"/\1/p' || true)

url4=""
url6=""
fallbackUrl=""
if [[ -z "${setupToken}" ]]; then
    [[ -n "${ip4}" ]] && url4="https://${ip4}"
    [[ -n "${ip6}" ]] && url6="https://[${ip6}]"
    [[ -z "${ip4}" && -z "${ip6}" ]] && fallbackUrl="https://<IP>"
else
    [[ -n "${ip4}" ]] && url4="https://${ip4}/?setupToken=${setupToken}"
    [[ -n "${ip6}" ]] && url6="https://[${ip6}]/?setupToken=${setupToken}"
    [[ -z "${ip4}" && -z "${ip6}" ]] && fallbackUrl="https://<IP>?setupToken=${setupToken}"
fi
echo -e "\n\n${GREEN}After reboot, visit one of the following URLs and accept the self-signed certificate to finish setup.${DONE}\n"
[[ -n "${url4}" ]] && echo -e " * ${GREEN}${url4}${DONE}"
[[ -n "${url6}" ]] && echo -e " * ${GREEN}${url6}${DONE}"
[[ -n "${fallbackUrl}" ]] && echo -e " * ${GREEN}${fallbackUrl}${DONE}"

if [[ "${rebootServer}" == "true" ]]; then
    systemctl stop box mysql # sometimes mysql ends up having corrupt privilege tables

    # https://www.gnu.org/savannah-checkouts/gnu/bash/manual/bash.html#ANSI_002dC-Quoting
    read -p $'\n'"The server has to be rebooted to apply all the settings. Reboot now ? [Y/n] " yn
    yn=${yn:-y}
    case $yn in
        [Yy]* ) exitHandler; systemctl reboot;;
        * ) exit;;
    esac
fi
```

At that point I could move on to the next part:

I'm not sure about this step, but I had to comment out:

```
# on ubuntu 18.04 and 20.04, this is the default. this requires resolvconf for DNS to work further after the disable
systemctl stop systemd-resolved || true
systemctl disable systemd-resolved || true
```

It was part of the loss of internet connectivity, but since I also disabled/stopped unbound and cloudron-firewall at the same time, I'm not sure now which one did the trick.

Beyond that, the script runs pretty much as-is, but you need to make sure your .wgetrc and .curlrc files configured with your proxy are in /root/, since we run the script with sudo.

I also had to turn off SSL certificate validation for it to get through my proxy.

.wgetrc

```
http_proxy = http://iproxy:8080
https_proxy = http://iproxy:8080
use_proxy = on
check-certificate = off
debug = on
```

.curlrc

```
proxy="http://iproxy:8080"
insecure
```

.npmrc

```
proxy=http://iproxy:8080/
https-proxy=http://iproxy:8080/
loglevel=verbose
registry=https://registry.npmjs.org/
```

The only things I had to adapt for the init script to work were:

```
#systemctl disable systemd-resolved
```

and

```
#systemctl restart unbound
```

With these enabled, it would just cut my network and I would entirely lose connectivity to the outside, which I still need for APT and for the NPM steps later on.
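Since everything here hinges on resolving the proxy's hostname, it helps to check name resolution before and after each DNS change (stopping systemd-resolved, starting unbound). A minimal sketch using `getent hosts`, which goes through the same system resolver configuration that apt and curl use; `check_resolves` is a hypothetical helper, and the names in the usage line are examples.

```shell
#!/usr/bin/env bash
# Sketch: check that the names we depend on still resolve through the system resolver.
# check_resolves is a hypothetical helper; pass whatever names matter in your network.
set -uo pipefail

check_resolves() {
    local name addr failed=0
    for name in "$@"; do
        # NR == 1 keeps only the first address without closing the pipe early
        if addr="$(getent hosts "${name}" | awk 'NR == 1 { print $1 }')" && [[ -n "${addr}" ]]; then
            echo "OK   ${name} -> ${addr}"
        else
            echo "FAIL ${name} does not resolve"
            failed=1
        fi
    done
    return "${failed}"
}

# Usage, before and after touching systemd-resolved/unbound:
#   check_resolves iproxy archive.ubuntu.com registry.npmjs.org
```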

Once you're past the installer and have Docker and npm done, you can move on to /setup/start.sh.

Here I had to comment out everything related to unbound; otherwise I would lose internet connectivity again:

```
#!/bin/bash

set -eu -o pipefail

# This script is run after the box code is switched. This means that this script
# should pretty much always succeed. No network logic/download code here.

function log() {
    echo -e "$(date +'%Y-%m-%dT%H:%M:%S')" "==> start: $1"
}

log "Cloudron Start"

readonly USER="yellowtent"
readonly HOME_DIR="/home/${USER}"
readonly BOX_SRC_DIR="${HOME_DIR}/box"
readonly PLATFORM_DATA_DIR="${HOME_DIR}/platformdata"
readonly APPS_DATA_DIR="${HOME_DIR}/appsdata"
readonly BOX_DATA_DIR="${HOME_DIR}/boxdata/box"
readonly MAIL_DATA_DIR="${HOME_DIR}/boxdata/mail"

readonly script_dir="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"
readonly ubuntu_version=$(lsb_release -rs)

cp -f "${script_dir}/../scripts/cloudron-support" /usr/bin/cloudron-support
cp -f "${script_dir}/../scripts/cloudron-translation-update" /usr/bin/cloudron-translation-update
cp -f "${script_dir}/../scripts/cloudron-logs" /usr/bin/cloudron-logs

# this needs to match the cloudron/base:2.0.0 gid
if ! getent group media; then
    addgroup --gid 500 --system media
fi

log "Configuring docker"
cp "${script_dir}/start/docker-cloudron-app.apparmor" /etc/apparmor.d/docker-cloudron-app
systemctl enable apparmor
systemctl restart apparmor

usermod ${USER} -a -G docker

if ! grep -q ip6tables /etc/systemd/system/docker.service.d/cloudron.conf; then
    log "Adding ip6tables flag to docker" # https://github.com/moby/moby/pull/41622
    echo -e "[Service]\nExecStart=\nExecStart=/usr/bin/dockerd -H fd:// --log-driver=journald --exec-opt native.cgroupdriver=cgroupfs --storage-driver=overlay2 --experimental --ip6tables --userland-proxy=false" > /etc/systemd/system/docker.service.d/cloudron.conf
    systemctl daemon-reload
    systemctl restart docker
fi

if ! grep -q userland-proxy /etc/systemd/system/docker.service.d/cloudron.conf; then
    log "Adding userland-proxy=false to docker" # https://github.com/moby/moby/pull/41622
    echo -e "[Service]\nExecStart=\nExecStart=/usr/bin/dockerd -H fd:// --log-driver=journald --exec-opt native.cgroupdriver=cgroupfs --storage-driver=overlay2 --experimental --ip6tables --userland-proxy=false" > /etc/systemd/system/docker.service.d/cloudron.conf
    systemctl daemon-reload
    systemctl restart docker
fi

mkdir -p "${BOX_DATA_DIR}"
mkdir -p "${APPS_DATA_DIR}"
mkdir -p "${MAIL_DATA_DIR}"

# keep these in sync with paths.js
log "Ensuring directories"

mkdir -p "${PLATFORM_DATA_DIR}/graphite"
mkdir -p "${PLATFORM_DATA_DIR}/mysql"
mkdir -p "${PLATFORM_DATA_DIR}/postgresql"
mkdir -p "${PLATFORM_DATA_DIR}/mongodb"
mkdir -p "${PLATFORM_DATA_DIR}/redis"
mkdir -p "${PLATFORM_DATA_DIR}/tls"
mkdir -p "${PLATFORM_DATA_DIR}/addons/mail/banner" \
         "${PLATFORM_DATA_DIR}/addons/mail/dkim"
mkdir -p "${PLATFORM_DATA_DIR}/collectd"
mkdir -p "${PLATFORM_DATA_DIR}/logrotate.d"
mkdir -p "${PLATFORM_DATA_DIR}/acme"
mkdir -p "${PLATFORM_DATA_DIR}/backup"
mkdir -p "${PLATFORM_DATA_DIR}/logs/backup" \
         "${PLATFORM_DATA_DIR}/logs/updater" \
         "${PLATFORM_DATA_DIR}/logs/tasks" \
         "${PLATFORM_DATA_DIR}/logs/collectd"
mkdir -p "${PLATFORM_DATA_DIR}/update"
mkdir -p "${PLATFORM_DATA_DIR}/sftp/ssh" # sftp keys
mkdir -p "${PLATFORM_DATA_DIR}/firewall"
mkdir -p "${PLATFORM_DATA_DIR}/sshfs"
mkdir -p "${PLATFORM_DATA_DIR}/cifs"
mkdir -p "${PLATFORM_DATA_DIR}/oidc"

# ensure backups folder exists and is writeable
mkdir -p /var/backups
chmod 777 /var/backups

log "Configuring journald"
sed -e "s/^#SystemMaxUse=.*$/SystemMaxUse=100M/" \
    -e "s/^#ForwardToSyslog=.*$/ForwardToSyslog=no/" \
    -i /etc/systemd/journald.conf

# When rotating logs, systemd kills journald too soon sometimes
# See https://github.com/systemd/systemd/issues/1353 (this is upstream default)
sed -e "s/^WatchdogSec=.*$/WatchdogSec=3min/" \
    -i /lib/systemd/system/systemd-journald.service

usermod -a -G systemd-journal ${USER} # Give user access to system logs
if [[ ! -d /var/log/journal ]]; then # in some images, this directory is not created making system log to /run/systemd instead
    mkdir -p /var/log/journal
    chown root:systemd-journal /var/log/journal
    chmod g+s /var/log/journal # sticky bit for group propagation
fi
systemctl daemon-reload
systemctl restart systemd-journald

# Give user access to nginx logs (uses adm group)
usermod -a -G adm ${USER}

log "Setting up unbound"
cp -f "${script_dir}/start/unbound.conf" /etc/unbound/unbound.conf.d/cloudron-network.conf
# update the root anchor after a out-of-disk-space situation (see #269)
unbound-anchor -a /var/lib/unbound/root.key

log "Adding systemd services"
cp -r "${script_dir}/start/systemd/." /etc/systemd/system/
systemctl daemon-reload
systemctl enable --now cloudron-syslog
systemctl enable unbound
systemctl enable box
systemctl enable cloudron-firewall
systemctl enable --now cloudron-disable-thp

# update firewall rules. this must be done after docker created it's rules
#systemctl restart cloudron-firewall

# For logrotate
systemctl enable --now cron

# ensure unbound runs
#systemctl restart unbound

# ensure cloudron-syslog runs
systemctl restart cloudron-syslog

log "Configuring sudoers"
rm -f /etc/sudoers.d/${USER} /etc/sudoers.d/cloudron
cp "${script_dir}/start/sudoers" /etc/sudoers.d/cloudron

log "Configuring collectd"
rm -rf /etc/collectd /var/log/collectd.log "${PLATFORM_DATA_DIR}/collectd/collectd.conf.d"
ln -sfF "${PLATFORM_DATA_DIR}/collectd" /etc/collectd
cp "${script_dir}/start/collectd/collectd.conf" "${PLATFORM_DATA_DIR}/collectd/collectd.conf"
systemctl restart collectd

log "Configuring sysctl"
# If privacy extensions are not disabled on server, this breaks IPv6 detection
# https://bugs.launchpad.net/ubuntu/+source/procps/+bug/1068756
if [[ ! -f /etc/sysctl.d/99-cloudimg-ipv6.conf ]]; then
    echo "==> Disable temporary address (IPv6)"
    echo -e "# See https://bugs.launchpad.net/ubuntu/+source/procps/+bug/1068756\nnet.ipv6.conf.all.use_tempaddr = 0\nnet.ipv6.conf.default.use_tempaddr = 0\n\n" > /etc/sysctl.d/99-cloudimg-ipv6.conf
    sysctl -p
fi

log "Configuring logrotate"
if ! grep -q "^include ${PLATFORM_DATA_DIR}/logrotate.d" /etc/logrotate.conf; then
    echo -e "\ninclude ${PLATFORM_DATA_DIR}/logrotate.d\n" >> /etc/logrotate.conf
fi
cp "${script_dir}/start/logrotate/"* "${PLATFORM_DATA_DIR}/logrotate.d/"

# logrotate files have to be owned by root, this is here to fixup existing installations where we were resetting the owner to yellowtent
chown root:root "${PLATFORM_DATA_DIR}/logrotate.d/"

log "Adding motd message for admins"
cp "${script_dir}/start/cloudron-motd" /etc/update-motd.d/92-cloudron

log "Configuring nginx"
# link nginx config to system config
unlink /etc/nginx 2>/dev/null || rm -rf /etc/nginx
ln -s "${PLATFORM_DATA_DIR}/nginx" /etc/nginx
mkdir -p "${PLATFORM_DATA_DIR}/nginx/applications/dashboard"
mkdir -p "${PLATFORM_DATA_DIR}/nginx/cert"
cp "${script_dir}/start/nginx/nginx.conf" "${PLATFORM_DATA_DIR}/nginx/nginx.conf"
cp "${script_dir}/start/nginx/mime.types" "${PLATFORM_DATA_DIR}/nginx/mime.types"
touch "${PLATFORM_DATA_DIR}/nginx/trusted.ips"
if ! grep -q "^Restart=" /etc/systemd/system/multi-user.target.wants/nginx.service; then
    # default nginx service file does not restart on crash
    echo -e "\n[Service]\nRestart=always\n" >> /etc/systemd/system/multi-user.target.wants/nginx.service
fi

# worker_rlimit_nofile in nginx config can be max this number
mkdir -p /etc/systemd/system/nginx.service.d
if ! grep -q "^LimitNOFILE=" /etc/systemd/system/nginx.service.d/cloudron.conf 2>/dev/null; then
    echo -e "[Service]\nLimitNOFILE=16384\n" > /etc/systemd/system/nginx.service.d/cloudron.conf
fi

systemctl daemon-reload
systemctl start nginx

# restart mysql to make sure it has latest config
if [[ ! -f /etc/mysql/mysql.cnf ]] || ! diff -q "${script_dir}/start/mysql.cnf" /etc/mysql/mysql.cnf >/dev/null; then
    # wait for all running mysql jobs
    cp "${script_dir}/start/mysql.cnf" /etc/mysql/mysql.cnf
    while true; do
        if ! systemctl list-jobs | grep mysql; then break; fi
        log "Waiting for mysql jobs..."
        sleep 1
    done
    log "Stopping mysql"
    systemctl stop mysql
    while mysqladmin ping 2>/dev/null; do
        log "Waiting for mysql to stop..."
        sleep 1
    done
fi

# the start/stop of mysql is separate to make sure it got reloaded with latest config and it's up and running before we start the new box code
# when using 'system restart mysql', it seems to restart much later and the box code loses connection during platform startup (dangerous!)
log "Starting mysql"
systemctl start mysql
while ! mysqladmin ping 2>/dev/null; do
    log "Waiting for mysql to start..."
    sleep 1
done

readonly mysql_root_password="password"
mysqladmin -u root -ppassword password password # reset default root password
readonly mysqlVersion=$(mysql -NB -u root -p${mysql_root_password} -e 'SELECT VERSION()' 2>/dev/null)
if [[ "${mysqlVersion}" == "8.0."* ]]; then
    # mysql 8 added a new caching_sha2_password scheme which mysqljs does not support
    mysql -u root -p${mysql_root_password} -e "ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY '${mysql_root_password}';"
fi
mysql -u root -p${mysql_root_password} -e 'CREATE DATABASE IF NOT EXISTS box'

# set HOME explicity, because it's not set when the installer calls it. this is done because
# paths.js uses this env var and some of the migrate code requires box code
log "Migrating data"
cd "${BOX_SRC_DIR}"
if ! HOME=${HOME_DIR} BOX_ENV=cloudron DATABASE_URL=mysql://root:${mysql_root_password}@127.0.0.1/box "${BOX_SRC_DIR}/node_modules/.bin/db-migrate" up; then
    log "DB migration failed"
    exit 1
fi

log "Changing ownership"
# note, change ownership after db migrate. this allow db migrate to move files around as root and then we can fix it up here
# be careful of what is chown'ed here. subdirs like mysql,redis etc are owned by the containers and will stop working if perms change
chown -R "${USER}" /etc/cloudron
chown "${USER}:${USER}" -R "${PLATFORM_DATA_DIR}/nginx" "${PLATFORM_DATA_DIR}/collectd" "${PLATFORM_DATA_DIR}/addons" "${PLATFORM_DATA_DIR}/acme" "${PLATFORM_DATA_DIR}/backup" "${PLATFORM_DATA_DIR}/logs" "${PLATFORM_DATA_DIR}/update" "${PLATFORM_DATA_DIR}/sftp" "${PLATFORM_DATA_DIR}/firewall" "${PLATFORM_DATA_DIR}/sshfs" "${PLATFORM_DATA_DIR}/cifs" "${PLATFORM_DATA_DIR}/tls" "${PLATFORM_DATA_DIR}/oidc"
chown "${USER}:${USER}" "${PLATFORM_DATA_DIR}/INFRA_VERSION" 2>/dev/null || true
chown "${USER}:${USER}" "${PLATFORM_DATA_DIR}"
chown "${USER}:${USER}" "${APPS_DATA_DIR}"

chown "${USER}:${USER}" -R "${BOX_DATA_DIR}"
# do not chown the boxdata/mail directory entirely; dovecot gets upset
chown "${USER}:${USER}" "${MAIL_DATA_DIR}"

log "Starting Cloudron"
systemctl start box

sleep 2 # give systemd sometime to start the processes

log "Almost done"
```

I had to run sudo npm install inside /home/yellowtent/box to get db-migrate and all the node modules installed and available. For some reason, npm rebuild, even when it succeeded, was not enough to make db-migrate available in node_modules/.bin/.
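The db-migrate symptom fits how `npm rebuild` works: it re-runs build and install scripts for packages already present in node_modules, and does not download missing ones. The installer log further down shows installer.sh only running `npm rebuild`, so it apparently expects node_modules to ship with the release; a source zip downloaded from GitLab presumably does not include them (an assumption, not verified here). A minimal sketch that runs `npm install` only when db-migrate is missing; `ensure_db_migrate` is a hypothetical helper, and the default path is BOX_SRC_DIR from start.sh.

```shell
#!/usr/bin/env bash
# Sketch: make sure box's node dependencies (including db-migrate) are actually installed.
# ensure_db_migrate is a hypothetical helper; the default path is BOX_SRC_DIR from start.sh.
set -euo pipefail

ensure_db_migrate() {
    local box_dir="${1:-/home/yellowtent/box}"
    local bin="${box_dir}/node_modules/.bin/db-migrate"
    if [[ -x "${bin}" ]]; then
        echo "db-migrate present: ${bin}"
        return 0
    fi
    echo "db-migrate missing, running npm install in ${box_dir}"
    # npm rebuild only rebuilds what is already in node_modules; install fetches missing packages
    (cd "${box_dir}" && npm install)
    [[ -x "${bin}" ]]   # non-zero exit if the install still did not provide it
}

# Usage (as root, with the proxy configured in /root/.npmrc):
#   ensure_db_migrate /home/yellowtent/box
```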

And that's where I am now: all the steps of the install scripts complete successfully.

```
2024-03-28T17:14:05 ==> start: Configuring sudoers
2024-03-28T17:14:05 ==> start: Configuring collectd
2024-03-28T17:14:05 ==> start: Configuring sysctl
2024-03-28T17:14:05 ==> start: Configuring logrotate
2024-03-28T17:14:05 ==> start: Adding motd message for admins
2024-03-28T17:14:05 ==> start: Configuring nginx
2024-03-28T17:14:05 ==> start: Starting mysql
mysqladmin: [Warning] Using a password on the command line interface can be insecure.
Warning: Since password will be sent to server in plain text, use ssl connection to ensure password safety.
mysql: [Warning] Using a password on the command line interface can be insecure.
mysql: [Warning] Using a password on the command line interface can be insecure.
2024-03-28T17:14:05 ==> start: Migrating data
[INFO] No migrations to run
[INFO] Done
2024-03-28T17:14:06 ==> start: Changing ownership
2024-03-28T17:14:06 ==> start: Starting Cloudron
2024-03-28T17:14:08 ==> start: Almost done
```

I then enabled and started cloudron-firewall and unbound.

The box service is started and running:

```
cloudron@cloudron:/home/yellowtent/box$ sudo systemctl status box
● box.service - Cloudron Admin
     Loaded: loaded (/etc/systemd/system/box.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2024-03-28 17:37:34 UTC; 5s ago
   Main PID: 57071 (node)
      Tasks: 11 (limit: 77024)
     Memory: 89.3M (max: 400.0M available: 310.6M)
        CPU: 1.652s
     CGroup: /system.slice/box.service
             └─57071 node /home/yellowtent/box/box.js

Mar 28 17:37:34 cloudron systemd[1]: Started Cloudron Admin.
Mar 28 17:37:35 cloudron sudo[57084]: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=808)
Mar 28 17:37:35 cloudron sudo[57084]: pam_unix(sudo:session): session closed for user root
Mar 28 17:37:35 cloudron sudo[57090]: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=808)
Mar 28 17:37:35 cloudron sudo[57090]: pam_unix(sudo:session): session closed for user root
```

But docker ps does not return anything. I'm like 99% done.

What am I missing???

```
● docker.service - Docker Application Container Engine
     Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)
    Drop-In: /etc/systemd/system/docker.service.d
             └─cloudron.conf, http-proxy.conf
     Active: active (running) since Thu 2024-03-28 17:45:31 UTC; 3min 56s ago
TriggeredBy: ● docker.socket
       Docs: https://docs.docker.com
   Main PID: 1304 (dockerd)
      Tasks: 15
     Memory: 101.5M
        CPU: 1.013s
     CGroup: /system.slice/docker.service
             └─1304 /usr/bin/dockerd -H fd:// --log-driver=journald --exec-opt native.cgroupdriver=cgroupfs --storage-driver=overlay2 --experimental --ip6tables --userland-proxy=false

Mar 28 17:45:30 cloudron dockerd[1304]: time="2024-03-28T17:45:30.748556243Z" level=info msg="Starting up"
Mar 28 17:45:30 cloudron dockerd[1304]: time="2024-03-28T17:45:30.748643487Z" level=warning msg="Running experimental build"
Mar 28 17:45:30 cloudron dockerd[1304]: time="2024-03-28T17:45:30.839810877Z" level=info msg="[graphdriver] trying configured driver: overlay2"
Mar 28 17:45:31 cloudron dockerd[1304]: time="2024-03-28T17:45:31.077073358Z" level=info msg="Loading containers: start."
Mar 28 17:45:31 cloudron dockerd[1304]: time="2024-03-28T17:45:31.770434481Z" level=info msg="Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon option --bip can be >
Mar 28 17:45:31 cloudron dockerd[1304]: time="2024-03-28T17:45:31.878706144Z" level=info msg="Loading containers: done."
Mar 28 17:45:31 cloudron dockerd[1304]: time="2024-03-28T17:45:31.932918012Z" level=info msg="Docker daemon" commit=9dbdbd4 graphdriver=overlay2 version=23.0.6
Mar 28 17:45:31 cloudron dockerd[1304]: time="2024-03-28T17:45:31.933320591Z" level=info msg="Daemon has completed initialization"
Mar 28 17:45:31 cloudron systemd[1]: Started Docker Application Container Engine.
Mar 28 17:45:31 cloudron dockerd[1304]: time="2024-03-28T17:45:31.985672471Z" level=info msg="API listen on /run/docker.sock"
```

What is clear is that the moment I let systemd-resolved be stopped and unbound be started, I lose internet connectivity: I can't use APT, and above all I lose DNS resolution for my network proxy.

So I guess I'm going to call it a night, and tomorrow dive into the unbound and network settings to see how I can reach a compromise here.
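One possible compromise, assuming the corporate network blocks direct DNS traffic to the internet: DNS queries do not go through an HTTP proxy, so instead of letting unbound resolve recursively from the root servers, have it forward everything to the corporate DNS servers that systemd-resolved was using (on Ubuntu these can usually be listed with `resolvectl dns` before stopping systemd-resolved). The sketch writes a `forward-zone` clause into /etc/unbound/unbound.conf.d/, the directory where start.sh installs cloudron-network.conf. The helper name, the file name `corporate-forward.conf` and the IP addresses are placeholders; run `unbound-checkconf` to catch conflicts with the existing Cloudron config.

```shell
#!/usr/bin/env bash
# Sketch: make unbound forward all queries to the corporate DNS servers instead of
# resolving recursively from the root servers. Helper name, file name and IPs are placeholders.
set -euo pipefail

write_unbound_forward_conf() {
    local conf_dir="$1"; shift          # normally /etc/unbound/unbound.conf.d
    if [[ $# -eq 0 ]]; then
        echo "usage: write_unbound_forward_conf DIR DNS_IP..." >&2
        return 1
    fi
    mkdir -p "${conf_dir}"
    {
        echo "forward-zone:"
        echo "    name: \".\""
        local ip
        for ip in "$@"; do
            echo "    forward-addr: ${ip}"
        done
    } > "${conf_dir}/corporate-forward.conf"
}

# Usage (as root), with your real DNS server addresses:
#   write_unbound_forward_conf /etc/unbound/unbound.conf.d 10.0.0.53 10.0.0.54
#   unbound-checkconf && systemctl restart unbound
#   getent hosts iproxy   # the proxy name should resolve again
```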

-

Just to keep track while it's still fresh:

1) For some reason, even though pulling all the Docker images succeeded, none of the containers are running:

```
sudo docker ps
CONTAINER ID   IMAGE     COMMAND   CREATED   STATUS    PORTS     NAMES
```

- There must have been issues with npm install, not just for box/ but also for the Cloudron dashboard itself.

The nginx log returns this:

```
2024/03/28 18:08:35 [error] 1790#1790: *4 "/home/yellowtent/box/dashboard/dist/index.html" is not found
```

There is no dist folder inside box/dashboard.
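To see which pieces are missing, a minimal sketch that checks for the two build outputs this install has tripped over (the dashboard/dist/index.html nginx asks for and the db-migrate binary start.sh calls), followed by commands that list all containers including stopped ones, since plain `docker ps` only shows running containers. `check_box_artifacts` is a hypothetical helper, and the box.log path is where Cloudron typically writes its platform log; treat both as assumptions. A missing dashboard/dist is plausibly a build output that a GitLab source zip does not contain; the `Updating from # release version. do not edit manually` line in the installer log further down also looks like an unfilled VERSION placeholder rather than a release build (again an inference, not verified).

```shell
#!/usr/bin/env bash
# Sketch: report which build outputs the box install expects but does not have.
# check_box_artifacts is a hypothetical helper; paths come from the nginx error and start.sh.
set -uo pipefail

check_box_artifacts() {
    local box_dir="${1:-/home/yellowtent/box}" missing=0 path
    for path in \
        "${box_dir}/dashboard/dist/index.html" \
        "${box_dir}/node_modules/.bin/db-migrate"; do
        if [[ -e "${path}" ]]; then
            echo "present: ${path}"
        else
            echo "MISSING: ${path}"
            missing=1
        fi
    done
    return "${missing}"
}

# Usage (as root):
#   check_box_artifacts /home/yellowtent/box
#   docker ps -a --format '{{.Names}}\t{{.Image}}\t{{.Status}}'   # includes exited containers
#   tail -n 100 /home/yellowtent/platformdata/logs/box.log
```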

-

For the sake of showing that my docker pull worked and that the scripts chain properly to start.sh:

cloudron@cloudron:/home/yellowtent/box$ sudo ./scripts/installer.sh
2024-03-28T18:47:09 ==> installer: Updating from # release version. do not edit manually to # release version. do not edit manually
/usr/bin/docker
/usr/bin/node
npm verb cli /usr/local/node-18.16.0/bin/node /usr/bin/npm
npm info using npm@9.5.1
npm info using node@v18.16.0
npm verb title npm rebuild
npm verb argv "rebuild" "--unsafe-perm"
npm verb logfile logs-max:10 dir:/root/.npm/_logs/2024-03-28T18_47_10_305Z-
npm verb logfile /root/.npm/_logs/2024-03-28T18_47_10_305Z-debug-0.log
npm info run cpu-features@0.0.9 install node_modules/cpu-features node buildcheck.js > buildcheck.gypi && node-gyp rebuild
npm info run ssh2@1.15.0 install node_modules/ssh2 node install.js
npm info run ssh2@1.15.0 install { code: 0, signal: null }
npm info run cpu-features@0.0.9 install { code: 0, signal: null }
npm info run tldjs@2.3.1 postinstall node_modules/tldjs node ./bin/postinstall.js
npm info run tldjs@2.3.1 postinstall { code: 0, signal: null }
rebuilt dependencies successfully
npm verb exit 0
npm info ok
2024-03-28T18:47:14 ==> installer: downloading new addon images
2024-03-28T18:47:14 ==> installer: Pulling docker images: registry.docker.com/cloudron/base:4.2.0@sha256:46da2fffb36353ef714f97ae8e962bd2c212ca091108d768ba473078319a47f4 registry.docker.com/cloudron/graphite:3.4.3@sha256:75df420ece34b31a7ce8d45b932246b7f524c123e1854f5e8f115a9e94e33f20 registry.docker.com/cloudron/mail:3.12.1@sha256:f539bea6c7360d3c0aa604323847172359593f109b304bb2d2c5152ca56be05c registry.docker.com/cloudron/mongodb:6.0.0@sha256:1108319805acfb66115aa96a8fdbf2cded28d46da0e04d171a87ec734b453d1e registry.docker.com/cloudron/mysql:3.4.2@sha256:379749708186a89f4ae09d6b23b58bc6d99a2005bac32e812b4b1dafa47071e4 registry.docker.com/cloudron/postgresql:5.2.1@sha256:5ef3aea8873da25ea5e682e458b11c99fc8df25ae90c7695a6f40bda8d120057 registry.docker.com/cloudron/redis:3.5.2@sha256:5c3d9a912d3ad723b195cfcbe9f44956a2aa88f9e29f7da3ef725162f8e2829a registry.docker.com/cloudron/sftp:3.8.6@sha256:6b4e3f192c23eadb21d2035ba05f8432d7961330edb93921f36a4eaa60c4a4aa registry.docker.com/cloudron/turn:1.7.2@sha256:9ed8da613c1edc5cb8700657cf6e49f0f285b446222a8f459f80919945352f6d
registry.docker.com/cloudron/base@sha256:46da2fffb36353ef714f97ae8e962bd2c212ca091108d768ba473078319a47f4: Pulling from cloudron/base
Digest: sha256:46da2fffb36353ef714f97ae8e962bd2c212ca091108d768ba473078319a47f4
Status: Image is up to date for registry.docker.com/cloudron/base@sha256:46da2fffb36353ef714f97ae8e962bd2c212ca091108d768ba473078319a47f4
registry.docker.com/cloudron/base:4.2.0@sha256:46da2fffb36353ef714f97ae8e962bd2c212ca091108d768ba473078319a47f4
4.2.0: Pulling from cloudron/base
Digest: sha256:46da2fffb36353ef714f97ae8e962bd2c212ca091108d768ba473078319a47f4
Status: Image is up to date for registry.docker.com/cloudron/base:4.2.0
registry.docker.com/cloudron/base:4.2.0
registry.docker.com/cloudron/graphite@sha256:75df420ece34b31a7ce8d45b932246b7f524c123e1854f5e8f115a9e94e33f20: Pulling from cloudron/graphite
Digest: sha256:75df420ece34b31a7ce8d45b932246b7f524c123e1854f5e8f115a9e94e33f20
Status: Image is up to date for registry.docker.com/cloudron/graphite@sha256:75df420ece34b31a7ce8d45b932246b7f524c123e1854f5e8f115a9e94e33f20
registry.docker.com/cloudron/graphite:3.4.3@sha256:75df420ece34b31a7ce8d45b932246b7f524c123e1854f5e8f115a9e94e33f20
3.4.3: Pulling from cloudron/graphite
Digest: sha256:75df420ece34b31a7ce8d45b932246b7f524c123e1854f5e8f115a9e94e33f20
Status: Image is up to date for registry.docker.com/cloudron/graphite:3.4.3
registry.docker.com/cloudron/graphite:3.4.3
registry.docker.com/cloudron/mail@sha256:f539bea6c7360d3c0aa604323847172359593f109b304bb2d2c5152ca56be05c: Pulling from cloudron/mail
Digest: sha256:f539bea6c7360d3c0aa604323847172359593f109b304bb2d2c5152ca56be05c
Status: Image is up to date for registry.docker.com/cloudron/mail@sha256:f539bea6c7360d3c0aa604323847172359593f109b304bb2d2c5152ca56be05c
registry.docker.com/cloudron/mail:3.12.1@sha256:f539bea6c7360d3c0aa604323847172359593f109b304bb2d2c5152ca56be05c
3.12.1: Pulling from cloudron/mail
Digest: sha256:f539bea6c7360d3c0aa604323847172359593f109b304bb2d2c5152ca56be05c
Status: Image is up to date for registry.docker.com/cloudron/mail:3.12.1
registry.docker.com/cloudron/mail:3.12.1
registry.docker.com/cloudron/mongodb@sha256:1108319805acfb66115aa96a8fdbf2cded28d46da0e04d171a87ec734b453d1e: Pulling from cloudron/mongodb
Digest: sha256:1108319805acfb66115aa96a8fdbf2cded28d46da0e04d171a87ec734b453d1e
Status: Image is up to date for registry.docker.com/cloudron/mongodb@sha256:1108319805acfb66115aa96a8fdbf2cded28d46da0e04d171a87ec734b453d1e
registry.docker.com/cloudron/mongodb:6.0.0@sha256:1108319805acfb66115aa96a8fdbf2cded28d46da0e04d171a87ec734b453d1e
6.0.0: Pulling from cloudron/mongodb
Digest: sha256:1108319805acfb66115aa96a8fdbf2cded28d46da0e04d171a87ec734b453d1e
Status: Image is up to date for registry.docker.com/cloudron/mongodb:6.0.0
registry.docker.com/cloudron/mongodb:6.0.0
registry.docker.com/cloudron/mysql@sha256:379749708186a89f4ae09d6b23b58bc6d99a2005bac32e812b4b1dafa47071e4: Pulling from cloudron/mysql
Digest: sha256:379749708186a89f4ae09d6b23b58bc6d99a2005bac32e812b4b1dafa47071e4
Status: Image is up to date for registry.docker.com/cloudron/mysql@sha256:379749708186a89f4ae09d6b23b58bc6d99a2005bac32e812b4b1dafa47071e4
registry.docker.com/cloudron/mysql:3.4.2@sha256:379749708186a89f4ae09d6b23b58bc6d99a2005bac32e812b4b1dafa47071e4
3.4.2: Pulling from cloudron/mysql
Digest: sha256:379749708186a89f4ae09d6b23b58bc6d99a2005bac32e812b4b1dafa47071e4
Status: Image is up to date for registry.docker.com/cloudron/mysql:3.4.2
registry.docker.com/cloudron/mysql:3.4.2
registry.docker.com/cloudron/postgresql@sha256:5ef3aea8873da25ea5e682e458b11c99fc8df25ae90c7695a6f40bda8d120057: Pulling from cloudron/postgresql
Digest: sha256:5ef3aea8873da25ea5e682e458b11c99fc8df25ae90c7695a6f40bda8d120057
Status: Image is up to date for registry.docker.com/cloudron/postgresql@sha256:5ef3aea8873da25ea5e682e458b11c99fc8df25ae90c7695a6f40bda8d120057
registry.docker.com/cloudron/postgresql:5.2.1@sha256:5ef3aea8873da25ea5e682e458b11c99fc8df25ae90c7695a6f40bda8d120057
5.2.1: Pulling from cloudron/postgresql
Digest: sha256:5ef3aea8873da25ea5e682e458b11c99fc8df25ae90c7695a6f40bda8d120057
Status: Image is up to date for registry.docker.com/cloudron/postgresql:5.2.1
registry.docker.com/cloudron/postgresql:5.2.1
registry.docker.com/cloudron/redis@sha256:5c3d9a912d3ad723b195cfcbe9f44956a2aa88f9e29f7da3ef725162f8e2829a: Pulling from cloudron/redis
Digest: sha256:5c3d9a912d3ad723b195cfcbe9f44956a2aa88f9e29f7da3ef725162f8e2829a
Status: Image is up to date for registry.docker.com/cloudron/redis@sha256:5c3d9a912d3ad723b195cfcbe9f44956a2aa88f9e29f7da3ef725162f8e2829a
registry.docker.com/cloudron/redis:3.5.2@sha256:5c3d9a912d3ad723b195cfcbe9f44956a2aa88f9e29f7da3ef725162f8e2829a
3.5.2: Pulling from cloudron/redis
Digest: sha256:5c3d9a912d3ad723b195cfcbe9f44956a2aa88f9e29f7da3ef725162f8e2829a
Status: Image is up to date for registry.docker.com/cloudron/redis:3.5.2
registry.docker.com/cloudron/redis:3.5.2
registry.docker.com/cloudron/sftp@sha256:6b4e3f192c23eadb21d2035ba05f8432d7961330edb93921f36a4eaa60c4a4aa: Pulling from cloudron/sftp
Digest: sha256:6b4e3f192c23eadb21d2035ba05f8432d7961330edb93921f36a4eaa60c4a4aa
Status: Image is up to date for registry.docker.com/cloudron/sftp@sha256:6b4e3f192c23eadb21d2035ba05f8432d7961330edb93921f36a4eaa60c4a4aa
registry.docker.com/cloudron/sftp:3.8.6@sha256:6b4e3f192c23eadb21d2035ba05f8432d7961330edb93921f36a4eaa60c4a4aa
3.8.6: Pulling from cloudron/sftp
Digest: sha256:6b4e3f192c23eadb21d2035ba05f8432d7961330edb93921f36a4eaa60c4a4aa
Status: Image is up to date for registry.docker.com/cloudron/sftp:3.8.6
registry.docker.com/cloudron/sftp:3.8.6
registry.docker.com/cloudron/turn@sha256:9ed8da613c1edc5cb8700657cf6e49f0f285b446222a8f459f80919945352f6d: Pulling from cloudron/turn
Digest: sha256:9ed8da613c1edc5cb8700657cf6e49f0f285b446222a8f459f80919945352f6d
Status: Image is up to date for registry.docker.com/cloudron/turn@sha256:9ed8da613c1edc5cb8700657cf6e49f0f285b446222a8f459f80919945352f6d
registry.docker.com/cloudron/turn:1.7.2@sha256:9ed8da613c1edc5cb8700657cf6e49f0f285b446222a8f459f80919945352f6d
1.7.2: Pulling from cloudron/turn
Digest: sha256:9ed8da613c1edc5cb8700657cf6e49f0f285b446222a8f459f80919945352f6d
Status: Image is up to date for registry.docker.com/cloudron/turn:1.7.2
registry.docker.com/cloudron/turn:1.7.2
2024-03-28T18:47:33 ==> installer: stop box service for update
Stopping cloudron
2024-03-28T18:47:36 ==> installer: calling box setup script

This run is with the following part commented out, since I already have /home/yellowtent/ ready with the latest box version:

# ensure we are not inside the source directory, which we will remove now
#cd /root
#log "switching the box code"
#rm -rf "${box_src_dir}"
#mv "${box_src_tmp_dir}" "${box_src_dir}"
#chown -R "${user}:${user}" "${box_src_dir}"

For the start.sh script to finish successfully, I had to keep unbound stopped and then run npm install once inside /box/ so that all the node_modules were installed and the migration could run properly.

-

box.service

cloudron@cloudron:/home$ sudo systemctl status box.service
● box.service - Cloudron Admin
Loaded: loaded (/etc/systemd/system/box.service; enabled; vendor preset: enabled)
Active: active (running) since Thu 2024-03-28 18:48:33 UTC; 4min 56s ago
Main PID: 34909 (node)
Tasks: 11 (limit: 77024)
Memory: 56.7M (max: 400.0M available: 343.2M)
CPU: 2.002s
CGroup: /system.slice/box.service
└─34909 node /home/yellowtent/box/box.js
Mar 28 18:48:33 cloudron systemd[1]: Started Cloudron Admin.
Mar 28 18:48:34 cloudron sudo[34941]: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=808)
Mar 28 18:48:34 cloudron sudo[34941]: pam_unix(sudo:session): session closed for user root
Mar 28 18:48:34 cloudron sudo[34947]: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=808)
Mar 28 18:48:34 cloudron sudo[34947]: pam_unix(sudo:session): session closed for user root

-

girish moved this topic from Support on

-

@rmdes Did you check the box logs already as to why it's failing to start the containers?

I guess the Docker containers must somehow also route all their HTTP calls through the proxy.
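One way to test that guess: the proxy variables in the docker.service drop-in configure the Docker daemon itself (which is why image pulls work), not the processes inside containers, so it is worth looking at the environment a running container actually has. A minimal sketch; the function names are hypothetical, it only looks for the conventional `*_proxy` variables, and it assumes you know the container name (for example from `docker ps`).

```shell
#!/bin/bash
# Hypothetical filter: read KEY=VALUE lines on stdin and print only the
# conventional proxy variables (http_proxy, https_proxy, no_proxy, any case).
proxy_vars() {
    grep -iE '^(http|https|no)_proxy=' || true   # no match is not an error here
}

# Print the proxy-related environment a container was created with.
# Requires access to the Docker daemon.
container_proxy_env() {
    local name="$1"
    docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "${name}" | proxy_vars
}
```

An empty result from `container_proxy_env <name>` means the container was started without any proxy variables.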

-

The box log is pretty silent actually; not much is happening.

When I restart the box service and check the box log at /home/yellowtent/platformdata/logs/box.log, I see this:

2024-03-29T10:22:45.608Z box:box Received SIGTERM. Shutting down.
2024-03-29T10:22:45.609Z box:platform uninitializing platform
2024-03-29T10:22:45.613Z box:tasks stopAllTasks: stopping all tasks
2024-03-29T10:22:45.613Z box:shell stopTask /usr/bin/sudo -S /home/yellowtent/box/src/scripts/stoptask.sh all
2024-03-29T10:22:49.622Z box:server ==========================================
2024-03-29T10:22:49.623Z box:server Cloudron # release version. do not edit manually
2024-03-29T10:22:49.623Z box:server ==========================================
2024-03-29T10:22:49.623Z box:platform initialize: start platform
2024-03-29T10:22:49.656Z box:tasks stopAllTasks: stopping all tasks
2024-03-29T10:22:49.657Z box:shell stopTask /usr/bin/sudo -S /home/yellowtent/box/src/scripts/stoptask.sh all
2024-03-29T10:22:49.749Z box:platform start: not activated. generating IP based redirection config
2024-03-29T10:22:49.755Z box:reverseproxy writeDefaultConfig: writing configs for endpoint "setup"
2024-03-29T10:22:49.756Z box:shell reload /usr/bin/sudo -S /home/yellowtent/box/src/scripts/restartservice.sh nginx

The nginx, box, and docker services are all running and appear to be fine, but nothing is happening.

Also, the nginx error log keeps reporting that the dist/ folder inside /box/dashboard/ is missing, as if the dashboard had never been built in the first place.

Regarding the Docker proxy: I'm able to pull any image from docker.io through the proxy using /etc/systemd/system/docker.service.d/http-proxy.conf:

[Service]
Environment="HTTP_PROXY=http://iproxy:8080"
Environment="HTTPS_PROXY=http://iproxy:8080"
Environment="NO_PROXY=localhost,127.0.0.1"

I now have a custom.conf for the unbound systemd service working, meaning I can have cloudron-firewall and unbound enabled and running and still maintain internet access to the outside network:

server:
  # permissive DNSSEC: answers that fail validation are returned instead of SERVFAIL
  val-permissive-mode: yes
  # Specify your internal domains
  private-domain: "local.domain"
  domain-insecure: "local.domain"
  # Hardcode the Cloudron dashboard address
  local-data: "my.cloudron.local.domain. IN A 10.200.116.244"
  #local-data: "cloudron.local.domain. IN A 10.200.116.244"

# Forward all queries to the internal DNS servers
forward-zone:
  name: "."
  forward-addr: 10.200.X.X
  forward-addr: 10.200.X.X
  forward-addr: 10.200.X.X
  forward-addr: 10.200.X.X

-

@rmdes it seems nginx is not restarting. Does

systemctl restart nginx

work?

-

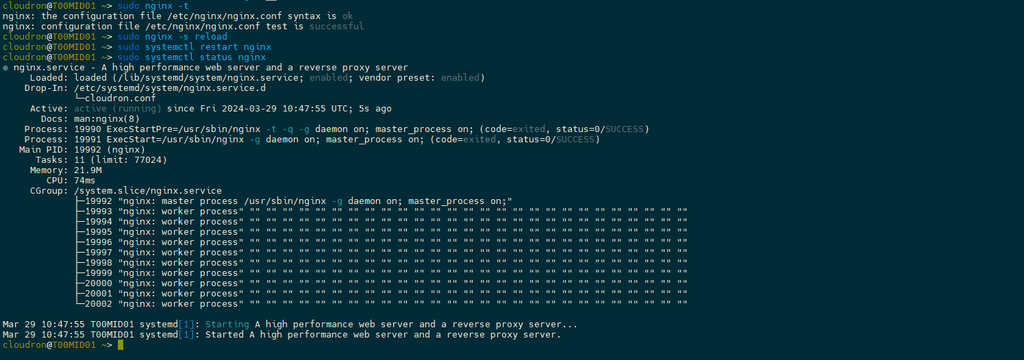

Appears to be the case:

journalctl -u nginx -f
Mar 29 10:43:29 T00MID01 systemd[1]: Stopping A high performance web server and a reverse proxy server...
Mar 29 10:43:29 T00MID01 systemd[1]: nginx.service: Deactivated successfully.
Mar 29 10:43:29 T00MID01 systemd[1]: Stopped A high performance web server and a reverse proxy server.
Mar 29 10:43:29 T00MID01 systemd[1]: Starting A high performance web server and a reverse proxy server...
Mar 29 10:43:29 T00MID01 systemd[1]: Started A high performance web server and a reverse proxy server.

cloudron@T00MID01 ~ [SIGINT]> sudo systemctl status nginx
● nginx.service - A high performance web server and a reverse proxy server
Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
Drop-In: /etc/systemd/system/nginx.service.d
└─cloudron.conf
Active: active (running) since Fri 2024-03-29 10:43:29 UTC; 23s ago
Docs: man:nginx(8)
Process: 18291 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
Process: 18292 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
Main PID: 18293 (nginx)
Tasks: 11 (limit: 77024)
Memory: 21.9M
CPU: 74ms
CGroup: /system.slice/nginx.service
├─18293 "nginx: master process /usr/sbin/nginx -g daemon on; master_process on;"
├─18294 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18295 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18296 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18297 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18298 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18299 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18300 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18301 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
├─18302 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
└─18303 "nginx: worker process" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" "" ""
Mar 29 10:43:29 T00MID01 systemd[1]: Starting A high performance web server and a reverse proxy server...
Mar 29 10:43:29 T00MID01 systemd[1]: Started A high performance web server and a reverse proxy server.

-

@rmdes did the restart command itself work? I think that's where the box code is getting stuck.

-

@rmdes said in [Intranet] Install cloudron in a corporate network environment:

2024-03-29T10:22:49.623Z box:server Cloudron # release version. do not edit manually

This line is also worrying. Looks like something is wrong with the VERSION file.

So,

systemctl restart box

just keeps getting stuck at that line? Something is making the nginx restart command hang. Not sure what, though.

-

Perhaps this is related to the fact that (see first post) I had to comment out the "check version" part of cloudron-setup and manually set box_src_dir and the version (7.7.1):

requestedVersion="7.7.1"

version="7.7.1"

Perhaps something should have been done to that VERSION file when it's retrieved via the API?
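The box log line "Cloudron # release version. do not edit manually" suggests the VERSION file still holds its placeholder text rather than a version number. A minimal sketch, assuming (not confirmed in this thread) that the box code reads its version from a VERSION file at the root of the box source directory; the function name is hypothetical. It only writes the file when the first line is not already an x.y.z version.

```shell
#!/bin/bash
# Hypothetical helper: make sure <box_dir>/VERSION holds a plain x.y.z version.
# Assumption: the box code reads its version from this file.
ensure_version_file() {
    local box_dir="$1" version="$2"
    local file="${box_dir}/VERSION"

    # Refuse anything that is not a plain x.y.z version string
    if [[ ! "${version}" =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]]; then
        echo "refusing to write invalid version '${version}'" >&2
        return 2
    fi

    # Leave the file alone if its first line already looks like a version
    if [[ -f "${file}" ]] && head -n1 "${file}" | grep -qE '^[0-9]+\.[0-9]+\.[0-9]+$'; then
        echo "VERSION already set to $(head -n1 "${file}")"
        return 0
    fi

    echo "${version}" > "${file}"
    echo "VERSION set to ${version}"
}
```

For example `ensure_version_file /home/yellowtent/box 7.7.1` (as root), followed by a restart of the box service to see whether the log line changes.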

-

@girish said in [Intranet] Install cloudron in a corporate network environment:

So, systemctl restart box just keeps getting stuck in that line?

Yes, correct. Nothing happens after that, and in all the log files I can get my hands on, I don't see any root cause.
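To check whether the nginx restart step is the one that hangs, the command shown in the box log (`/home/yellowtent/box/src/scripts/restartservice.sh nginx`) can be run by hand under a time limit. A sketch using coreutils `timeout`, which exits with status 124 when it kills a command for running too long; the wrapper name and the time limit you pass are arbitrary choices.

```shell
#!/bin/bash
# Hypothetical wrapper: run a command with a time limit and say whether it hung.
# coreutils `timeout` exits with 124 when the command exceeded the limit.
run_or_report_hang() {
    local secs="$1"; shift
    local rc=0
    timeout "${secs}" "$@" || rc=$?

    if [[ ${rc} -eq 124 ]]; then
        echo "hung: '$*' did not finish within ${secs}s"
    elif [[ ${rc} -eq 0 ]]; then
        echo "ok: '$*' finished"
    else
        echo "failed: '$*' exited with status ${rc}"
    fi
    return "${rc}"
}
```

For example: `run_or_report_hang 60 sudo /home/yellowtent/box/src/scripts/restartservice.sh nginx`.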

-

If anyone has any idea of what I could do to get this done, I'm all ears.

With ups and downs, I got every part of the scripts to run properly and install what they should.

But still, even though box.js is running and box.service is running, and the same goes for Docker etc., I'm not seeing Cloudron start as it should.

Once I get it up and running, I want to write a blog post about this and document the entire install procedure (with the added bonus that I now know how to configure my unbound service to work from the get-go).

The goal is minimal modification of the original cloudron-setup and an easy way to replicate this install in other proxy environments/intranets.
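A replication script could start by writing the proxy settings from the first post in one go. This is a minimal sketch, not a tested procedure: PROXY_URL, ROOT and the function names are hypothetical, ROOT (default `/`) exists only so the functions can be tried against a scratch directory, and the unbound helper just prints a forward-zone because where that file goes depends on how your unbound service includes extra config.

```shell
#!/bin/bash
# Hypothetical bootstrap helpers for repeating the proxy setup from the first post.
PROXY_URL="${PROXY_URL:-http://iproxy:8080}"
ROOT="${ROOT:-/}"

# APT: same two directives as /etc/apt/apt.conf.d/aptproxy
write_apt_proxy() {
    local dir="${ROOT%/}/etc/apt/apt.conf.d"
    mkdir -p "${dir}"
    cat > "${dir}/aptproxy" <<EOF
Acquire::http::Proxy "${PROXY_URL}";
Acquire::https::Proxy "${PROXY_URL}";
EOF
}

# Docker daemon: systemd drop-in so image pulls go through the proxy
write_docker_proxy() {
    local dir="${ROOT%/}/etc/systemd/system/docker.service.d"
    mkdir -p "${dir}"
    cat > "${dir}/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=${PROXY_URL}"
Environment="HTTPS_PROXY=${PROXY_URL}"
Environment="NO_PROXY=localhost,127.0.0.1"
EOF
}

# wget/curl: per-user rc files for each home directory given
write_user_proxy_rc() {
    local home
    for home in "$@"; do
        mkdir -p "${ROOT%/}${home}"
        printf 'use_proxy = on\nhttp_proxy = %s\nhttps_proxy = %s\n' \
            "${PROXY_URL}" "${PROXY_URL}" > "${ROOT%/}${home}/.wgetrc"
        printf 'proxy = "%s"\n' "${PROXY_URL}" > "${ROOT%/}${home}/.curlrc"
    done
}

# unbound: forward all queries to the internal resolvers (printed to stdout)
render_unbound_forwarders() {
    local addr
    echo 'forward-zone:'
    echo '  name: "."'
    for addr in "$@"; do
        echo "  forward-addr: ${addr}"
    done
}
```

Run as root, e.g. `write_apt_proxy; write_docker_proxy; write_user_proxy_rc /root /home/cloudron`. The Docker drop-in only takes effect after `systemctl daemon-reload` and `systemctl restart docker`.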

-

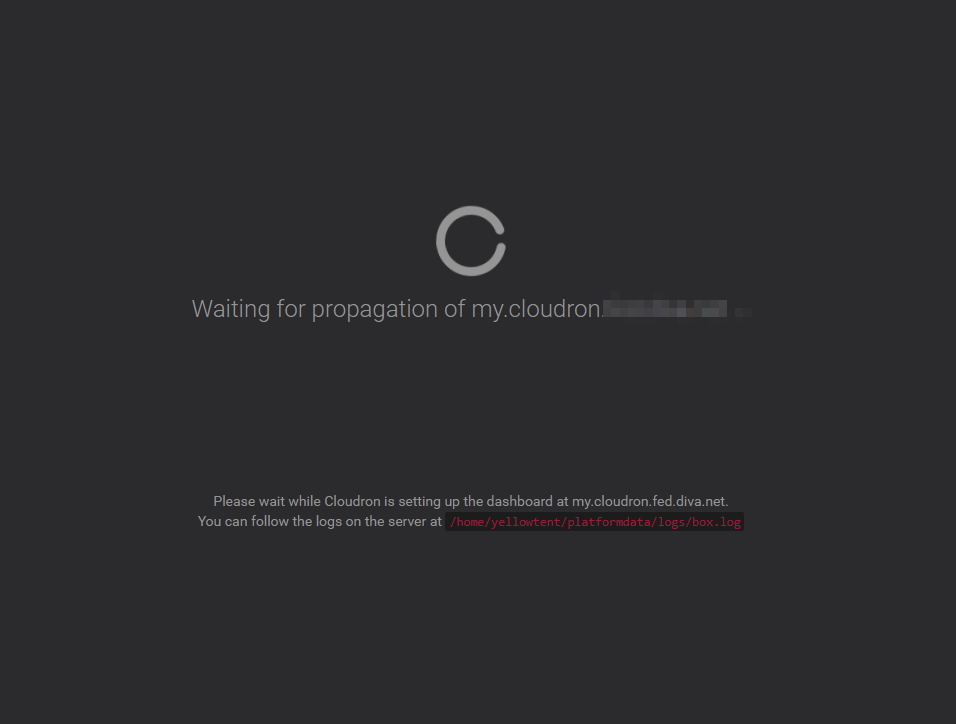

There is still something odd with the public IP detected by Cloudron (it does not exist), and instead of using the IP of my ens160 network card it uses a local IP, but I'm making progress:

cloudron@T00MID01:/home/yellowtent/box/src/scripts$ sudo grc tail -f /home/yellowtent/platformdata/logs/box.log
2024-04-01T09:19:49.677Z box:mail upsertDnsRecords: records of cloudron.***.** added
2024-04-01T09:19:49.679Z box:provision setProgress: setup - Registering location my.cloudron.***.**
2024-04-01T09:19:49.680Z box:mailserver restartMailIfActivated: skipping restart of mail container since Cloudron is not activated yet
2024-04-01T09:19:49.684Z box:dns upsertDNSRecord: location my on domain cloudron.***.** of type A with values ["10.200.XXX.XXX"]
2024-04-01T09:19:49.685Z box:dns/manual upsert: my for zone ***.** of type A with values ["10.200.XXX.XXX"]
2024-04-01T09:19:49.687Z box:provision setProgress: setup - Waiting for propagation of my.cloudron.***.**
2024-04-01T09:19:49.688Z box:dns/waitfordns waitForDns: waiting for my.cloudron.***.** to be 10.200.XXX.XXX in zone ns1.***.**
2024-04-01T09:19:49.689Z box:dns/waitfordns waitForDns: nameservers are ["ns1.***.**","ns2.***.**","ns3.***.**"]
2024-04-01T09:19:49.691Z box:dns/waitfordns resolveIp: Checking if my.cloudron.***.** has A record at 172.16.64.5
2024-04-01T09:19:54.638Z box:box Received SIGHUP. Re-reading configs.
2024-04-01T09:21:04.763Z box:dns/waitfordns resolveIp: No A record. Checking if my.cloudron.***.** has CNAME record at 172.16.64.5
2024-04-01T09:22:19.837Z box:dns/waitfordns isChangeSynced: NS ns1.***.** (172.16.64.5) not resolving my.cloudron.***.** (A): Error: queryCname ETIMEOUT my.cloudron.***.**. Ignoring
2024-04-01T09:22:19.837Z box:dns/waitfordns waitForDns: my.cloudron.***.** at ns ns1.***.**: done
2024-04-01T09:22:19.845Z box:dns/waitfordns resolveIp: Checking if my.cloudron.***.** has A record at 172.16.64.3

I think I just need to point my A record to the VM IP and define a DNS record for cloudron.*. and I should be moving forward another step!
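Before re-running setup, one can check what each nameserver actually returns for the dashboard name, which is what the waitForDns lines above are doing. A sketch assuming `dig` is installed; the function names are hypothetical, and the name, nameserver and IP you pass are placeholders for the masked values in the log.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the expected IPv4 address appears in
# `dig +short` output read from stdin (other lines, e.g. CNAME targets, are ignored).
has_expected_ip() {
    local expected="$1" line
    while IFS= read -r line || [[ -n "${line}" ]]; do
        [[ "${line}" == "${expected}" ]] && return 0
    done
    return 1
}

# Query one nameserver for the A record of a name and compare with the expected IP.
check_a_record() {
    local name="$1" nameserver="$2" expected="$3"
    if dig +short +time=5 +tries=1 A "${name}" "@${nameserver}" | has_expected_ip "${expected}"; then
        echo "${name} -> ${expected} at ${nameserver}: match"
    else
        echo "${name} at ${nameserver}: does not resolve to ${expected}"
        return 1
    fi
}
```

Running `check_a_record` once per nameserver listed in the log shows which of them does not yet serve the record.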

-

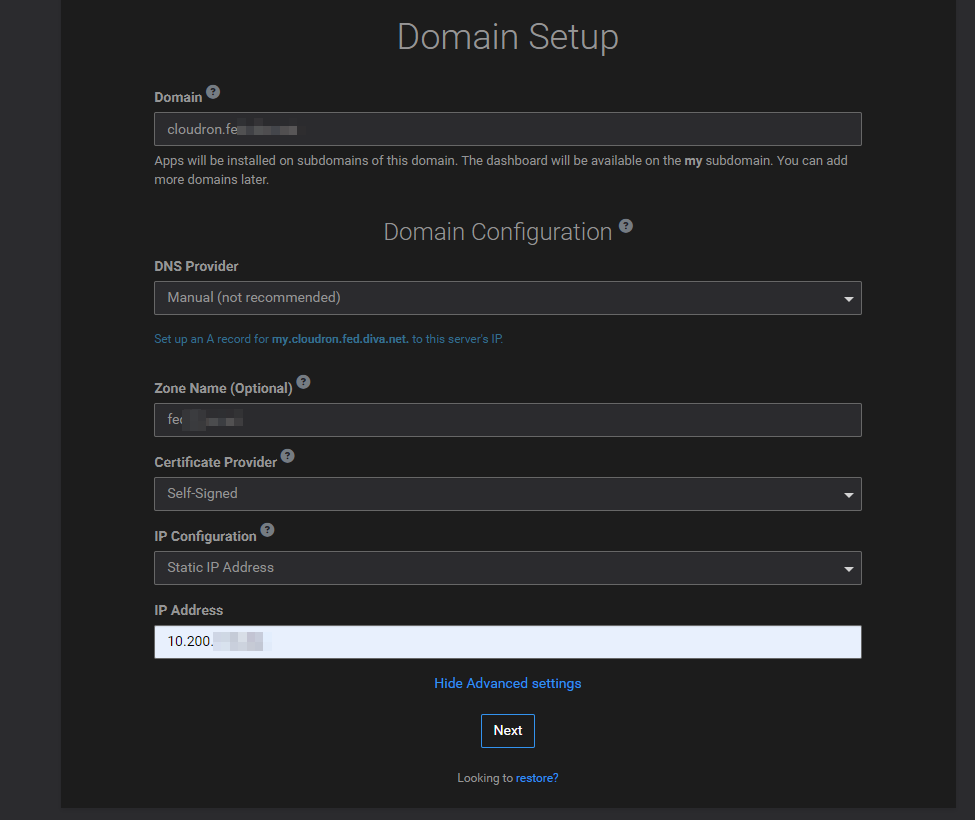

@rmdes the default public IP detection works by running

curl https://ipv4.api.cloudron.io/api/v1/helper/public_ip

If that does not give the right address in your setup, you have to choose Manual IPv4 configuration in the Networking section. This is also available under Advanced options when you set up DNS initially.

-

This curl command does work, but I guess it's detecting our F5 proxy/load balancer, not the actual IP of the VM on the intranet.
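To see the mismatch side by side, one can print what the public-IP helper returns next to the address bound to the VM's interface. A sketch assuming the interface is ens160 (as mentioned earlier) and that iproute2's `ip` is available; the helper names are hypothetical, and the helper's response is printed as-is since its exact format is not assumed here.

```shell
#!/bin/bash
# Hypothetical helper: extract the first IPv4 address (without prefix length)
# from `ip -4 -o addr show dev <iface>` output read on stdin, e.g.
#   2: ens160    inet 10.200.0.10/24 brd 10.200.0.255 scope global ens160 ...
first_ipv4_from_ip_addr() {
    awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Print the public-IP helper response next to the interface address.
compare_public_and_local_ip() {
    local iface="${1:-ens160}" public local_ip
    public=$(curl -fsS --max-time 20 https://ipv4.api.cloudron.io/api/v1/helper/public_ip)
    local_ip=$(ip -4 -o addr show dev "${iface}" | first_ipv4_from_ip_addr)
    echo "public helper response: ${public}"
    echo "${iface}: ${local_ip}"
}
```

If the two differ, that matches the F5 explanation, and the interface address is the one to enter under Manual IPv4 configuration.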

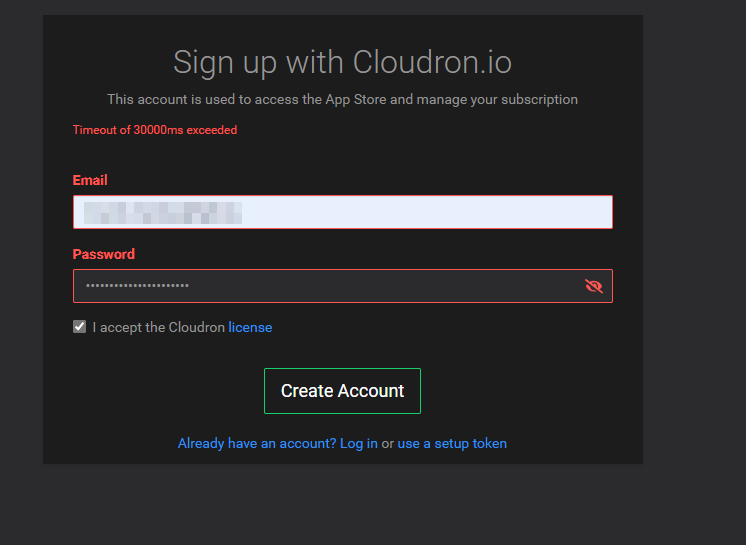

I'm trying to set up the dashboard, but even though I select Manual and specify the IP of the VM, according to the logs it keeps expecting an A record with an internal 172.XXX.X.XXX IP.

I do see these kinds of log entries, though:

2024-04-02T08:45:07.987Z box:dns/waitfordns waitForDns: my.cloudron.***.***.*** at ns .***.***.***: done
2024-04-02T08:45:07.988Z box:dns/waitfordns resolveIp: Checking if my.cloudron.***.***.*** has A record at NS
2024-04-02T08:45:07.990Z box:dns/waitfordns isChangeSynced: my.cloudron..***.***.*** (A) was resolved to 10.200.XXX.XX4 at NS .***.***.*** Expecting 10.200.XXX.XX4. Match true

-

@rmdes Manual means it will still try to check if the DNS resolves to the IP address you have entered. You can choose noop if you want to skip that DNS check.