Issue with Lengthy and Failing Updates

-

@nebulon Hello, thank you for the information. I find it odd that Rsync always backs up all the files. Yes, the first backup is large, but afterwards only the changed files should be backed up, not all the videos from Peertube and many GB from Nextcloud every day. I've tried several backup services and kept two-day and four-week backups, but Rsync always backs up the entire server each time. That's why I now keep only two backups. I use Rsync on another server as well, and there is no problem there.

That's why the backups are slow when the server backs up almost 1 TB of data daily.

-

@archos said in Issue with Lengthy and Failing Updates:

@nebulon Hello, thank you for the information. I find it odd that Rsync always backs up all the files. Yes, the first backup is large, but afterwards only the changed files should be backed up, not all the videos from Peertube and many GB from Nextcloud every day.

Are you sure that the files which seem identical but are copied anyway have not been modified in any way? Different timestamps, for example?

-


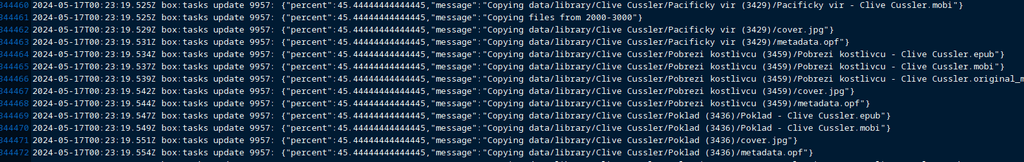

@necrevistonnezr The server occupies 960.45 GB and I currently maintain only two backups, with a total size of 2 TB. The attached image shows the log from today's backup of the Calibre application. I uploaded books to this library about two years ago. Since then, I haven't uploaded any new books to Calibre and no one else has made any changes, yet every day, the entire 10 GB of books is being backed up. It doesn't make sense for the two backups to occupy 2 TB when the server itself only uses nearly 1 TB.

-

I would recommend investigating whether the same files in the app and in the backup are indeed identical.

rsync would not copy identical files all over again.
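One way to run that check is sketched below in Python. It assumes both the app data and a copy of the backup are reachable as local directories (hypothetical paths; a backup stored on S3 would first have to be mounted or downloaded). Standard rsync, by default, skips a file when its size and modification time match; whether Cloudron's rsync backup format applies the same test is an assumption here. The sketch therefore separates files whose content really differs from files that are byte-identical but carry a different modification time.

```python
import hashlib
import os
import tempfile


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def compare_trees(source_root, backup_root):
    """Classify every file under source_root against its copy in backup_root."""
    report = {"missing": [], "content_differs": [], "mtime_differs": [], "identical": []}
    for dirpath, _dirnames, filenames in os.walk(source_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, source_root)
            dst = os.path.join(backup_root, rel)
            if not os.path.isfile(dst):
                report["missing"].append(rel)
            elif file_digest(src) != file_digest(dst):
                report["content_differs"].append(rel)
            elif int(os.stat(src).st_mtime) != int(os.stat(dst).st_mtime):
                # Same bytes, different timestamp: a size+mtime check would
                # still treat this file as changed.
                report["mtime_differs"].append(rel)
            else:
                report["identical"].append(rel)
    return report


def demo():
    """Build two tiny throwaway trees and compare them (illustrative data only)."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "app")
        dst = os.path.join(tmp, "backup")
        os.makedirs(src)
        os.makedirs(dst)
        for root in (src, dst):
            for name in ("same.epub", "touched.epub"):
                path = os.path.join(root, name)
                with open(path, "wb") as f:
                    f.write(b"book")
                os.utime(path, (1_000_000, 1_000_000))
        # Change only the timestamp of one source file, and add a new file.
        os.utime(os.path.join(src, "touched.epub"), (2_000_000, 2_000_000))
        with open(os.path.join(src, "new.epub"), "wb") as f:
            f.write(b"x")
        return compare_trees(src, dst)


if __name__ == "__main__":
    for category, files in demo().items():
        print(category, files)
```

If a library that nobody has touched shows up mostly under `mtime_differs`, something is rewriting timestamps; if it shows up under `identical` and is still uploaded every day, the cause more likely lies in the backup tool or the storage backend.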

I use rsync to back up to an external hard drive. My Cloudron instance is around 250 GB, and all my backups (7 daily, 4 weekly, 12 monthly) going back to June 2023 occupy ~350 GB.

The same backups that I push via restic to Onedrive, going back to June 2022 (!), occupy ~310 GB (restic is very good at compression and de-duplication).

-

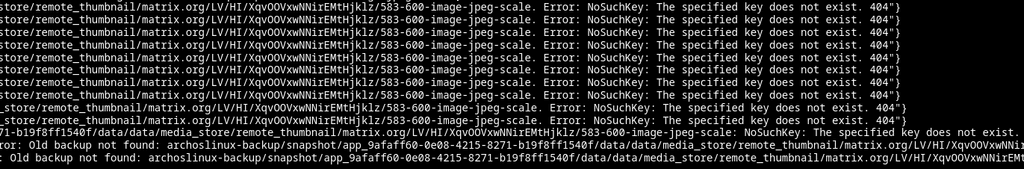

Unfortunately, the backups fail to complete again. I have tried to manually initiate the backup process twice, but each time the backup fails with an error, specifically with the Matrix application. I have no idea what to do. I also tried using a Hetzner box, but the backups take even longer there.

-

The issue with the backup was likely caused by the Matrix app. I turned off the backup in Matrix and initiated a new backup. Within two hours, the backup was successfully completed. I'll see how the backup turns out tonight.

Something is wrong with Matrix Synapse.

-

Hello everyone, it's been quiet for a few days since I turned off the automatic backups at Matrix, but today the backup failed again. It's frustrating, and from what I see on the forum, I'm not the only one having issues with backups.

Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)
May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying files from 0-3"}
May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying config.json"}
May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying data/coolwsd.xml"}
May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying fsmetadata.json"}
May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (1) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:00:26 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (2) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:00:46 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (3) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:01:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (4) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:01:26 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (5) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:01:46 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (6) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:02:07 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (7) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:02:27 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (8) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:02:47 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (9) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:03:07 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (10) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:03:27 box:storage/s3 copy: s3 copy error when copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json: InternalError: We encountered an internal error, please try again.: cause(EOF)
May 25 01:03:27 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (11) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:03:27 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copied 3 files with error: BoxError: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)"}
May 25 01:03:27 box:backuptask copy: copied to 2024-05-24-230002-287/app_collabora.arch-linux.cz_v1.17.3 errored. error: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)
May 25 01:03:27 box:taskworker Task took 205.487 seconds
May 25 01:03:27 box:tasks setCompleted - 10016: {"result":null,"error":{"stack":"BoxError: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)\n at Response.done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:367:18)\n at Request.callListeners (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:686:14)\n at Request.transition (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:688:12)","name":"BoxError","reason":"External Error","details":{},"message":"Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)"}}
May 25 01:03:27 box:tasks update 10016: {"percent":100,"result":null,"error":{"stack":"BoxError: Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)\n at Response.done (/home/yellowtent/box/src/storage/s3.js:338:48)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:367:18)\n at Request.callListeners (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:686:14)\n at Request.transition (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /home/yellowtent/box/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/home/yellowtent/box/node_modules/aws-sdk/lib/request.js:688:12)","name":"BoxError","reason":"External Error","details":{},"message":"Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)"}}
[no timestamp] Error copying archoslinux-backup/snapshot/app_070963ae-8ef3-4bcb-bab3-7ca671f4a72b/fsmetadata.json (148 bytes): InternalError InternalError: We encountered an internal error, please try again.: cause(EOF)

-

I've tried manual backups several times, but each time I end up with the same error. I would really appreciate any help or advice. Thank you very much for the information.

May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Copying with concurrency of 110"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Copying files from 0-4"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Copying config.json"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Copying data/env"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Copying fsmetadata.json"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Copying postgresqldump"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (1) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:12:30 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (2) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:12:50 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (3) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:13:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (4) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:13:31 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (5) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:13:51 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (6) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:14:11 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (7) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:14:31 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (8) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:14:51 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (9) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}

-

@archos for bugs like the Synapse one, I am fixing our code to have better logs. Of late, there have been a number of backup-related issues. It's not clear (yet) whether this is a bug in Node, in some Node module, or at the upstream provider. The error happens randomly and the logs are not helpful. I will update this post when I have a patch.
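Until better logging lands, the repetitive retry entries in logs like the ones earlier in this thread can at least be condensed into one line per failing file. The following Python sketch is only an illustration: the regular expression is modelled on the `box:tasks update` lines quoted above, not on any documented Cloudron log format, and `summarize_retries` is a hypothetical helper name.

```python
import re

# Pattern modelled on the "Retrying (N) copy of <file>" entries quoted in this
# thread; the exact log layout is an assumption, not a documented format.
RETRY_RE = re.compile(
    r'(?P<ts>\w{3} \d{1,2} \d{2}:\d{2}:\d{2}) box:tasks update (?P<task>\d+): '
    r'\{"percent":[\d.]+,"message":"Retrying \((?P<attempt>\d+)\) copy of '
    r'(?P<file>.+?)\.\s+Error:\s+(?P<error>[^"]*)"\}'
)

# Three retry entries and one ordinary entry, copied from the logs above.
SAMPLE_LOG = '''May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Copying fsmetadata.json"}
May 25 01:00:06 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (1) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 01:00:26 box:tasks update 10016: {"percent":3.7777777777777777,"message":"Retrying (2) copy of fsmetadata.json. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
May 25 08:12:10 box:tasks update 10025: {"percent":6.555555555555555,"message":"Retrying (1) copy of postgresqldump. Error: InternalError: We encountered an internal error, please try again.: cause(EOF) 500"}
'''


def summarize_retries(log_text):
    """Group retry entries by (task id, file name)."""
    summary = {}
    for m in RETRY_RE.finditer(log_text):
        key = (m["task"], m["file"])
        entry = summary.setdefault(
            key, {"attempts": 0, "first": m["ts"], "last": m["ts"], "errors": set()}
        )
        entry["attempts"] = max(entry["attempts"], int(m["attempt"]))
        entry["last"] = m["ts"]  # entries appear in chronological order
        entry["errors"].add(m["error"])
    return summary


if __name__ == "__main__":
    for (task, name), info in summarize_retries(SAMPLE_LOG).items():
        print(f"task {task}: {name}: {info['attempts']} retries "
              f"({info['first']} to {info['last']}); errors: {sorted(info['errors'])}")
```

A summary like this makes it easier to see whether the failures cluster on particular files, tasks, or times of day, which may help narrow down whether the storage provider is returning the 500 responses.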

-

J james has marked this topic as solved on
