"docker-volumes" is filling my whole server storage

-

Perfect, thank you Girish! Doing it right now.

-

I only reclaimed 10.73GB...

What does this new "Docker-Volumes" entry, which I didn't have before, correspond to, and is it absolutely necessary for my instance to work?

I'm asking because I've just upgraded my infrastructure (by adding a new remote storage volume) so that it can last for several years. But if my server's internal disk fills up this quickly, that upgrade will have been in vain, and I risk losing access to my instance (both for me and for my users).

-

@Dont-Worry Can you check the output of `docker system df`? This is where the size of docker volumes comes from. Note that the disk usage information is not real-time. You have to click the refresh button (not the browser refresh) to get the latest disk usage.

-
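Because the dashboard figure is not real-time, it can help to measure the volumes directly on the host. The sketch below is a minimal example, assuming Docker's default data root (`/var/lib/docker`) and GNU `du`/`xargs`; `largest_volumes` is a hypothetical helper name, not a Docker or Cloudron command. It has to run as root to read the volume directories.

```bash
#!/usr/bin/env bash
# largest_volumes [ROOT] [N]
# Hypothetical helper: print the N largest volume directories under ROOT
# as "<size in KiB><TAB><path>", largest first.
# ROOT defaults to Docker's default volume location (an assumption).
largest_volumes() {
  local root="${1:-/var/lib/docker/volumes}"
  local n="${2:-10}"
  # One `du -sk` per top-level volume directory (files such as metadata.db
  # are skipped by -type d), then sort numerically, largest first.
  find "$root" -mindepth 1 -maxdepth 1 -type d -print0 \
    | xargs -0 -r du -sk \
    | sort -rn \
    | head -n "$n"
}
```

The directory names under the volume root are the volume names that `docker system df -v` lists, so the biggest entry can then be traced back to the container that mounts it.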

girish marked this topic as a question.


-

I click this refresh button very often; I noticed that it isn't updated live.

Here is the output of `docker system df`:

```
user:~$ sudo docker system df
TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
Images          52        51        61.19GB   4.705GB (7%)
Containers      67        48        0B        0B
Local Volumes   123       123       179.2GB   0B (0%)
Build Cache     0         0         0B        0B
```

-

@Dont-Worry I think this means that some app has a very large /run or /tmp directory. Can you try `docker system df -v` and see which volume ID it is? Then, get the container which has that volume attached:

```
docker ps -a --format '{{ .ID }}' | xargs -I {} docker inspect -f '{{ .Name }}{{ printf "\n" }}{{ range .Mounts }}{{ printf "\n\t" }}{{ .Type }} {{ if eq .Type "bind" }}{{ .Source }}{{ end }}{{ .Name }} => {{ .Destination }}{{ end }}{{ printf "\n" }}' {}
```

If you run `docker inspect <containerid>`, you will see which app has a large runtime directory. Maybe we have a bug in some app package.

-
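Once `docker system df -v` has given you the volume name, Docker can also filter containers by mounted volume directly, which is shorter than walking every container's mounts. This is a small sketch built on the `volume` filter of `docker ps`; `containers_using_volume` is a hypothetical wrapper name.

```bash
#!/usr/bin/env bash
# containers_using_volume VOLUME_NAME
# Hypothetical wrapper: list "<id> <name>" of every container (running or
# stopped) that mounts the given volume.
containers_using_volume() {
  local volume="$1"
  if [[ -z "$volume" ]]; then
    echo "usage: containers_using_volume VOLUME_NAME" >&2
    return 2
  fi
  # --filter volume=... matches containers that mount this volume;
  # -a includes stopped containers as well as running ones.
  docker ps -a --filter "volume=$volume" --format '{{.ID}} {{.Names}}'
}
```

Pass the full volume name as printed by `docker system df -v`, and prefix the call with `sudo` (or run it in a root shell) if your user cannot talk to the Docker daemon.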

Here is what is inside the container that uses so much space. It seems to be part of the Emby content I added to my server, but it shouldn't be there because I moved Emby to a new volume. I don't understand why there are video and audio files on the server disk.

```
user:~$ sudo docker exec -it docker_ID /bin/bash
root@sftp:/app/code# cd /tmp
root@sftp:/tmp# ls -lah
total 130G
-rw-r--r-- 1 root root 631M May 20 12:43 -9Xo-89Hb6E0UzpXKPvMtvT_.mp4
-rw-r--r-- 1 root root 2.5G May 20 16:13 -B0LYra-w572_N_Fl-z1Fg8Y.mp4
-rw-r--r-- 1 root root 971M May 20 13:54 -H0OPKJKqQmK53HFZTtxHkCe.mp4
-rw-r--r-- 1 root root 727M May 20 17:56 -XLVWBgNrI4b4bLPiShFvnAo.mp4
-rw-r--r-- 1 root root 1.7G May 20 17:52 -kzTrAAk7QbN6242Zsh0y2WG.mp4
drwxrwxrwt 3 root root 20K May 31 00:00 .
drwxr-xr-x 1 root root 4.0K May 15 13:39 ..
-rw-r--r-- 1 root root 38M May 29 11:20 0AO3hMZZHlD0vqoaeWODHD3u.wav
-rw-r--r-- 1 root root 1.9G May 20 17:22 0K4x3d-JnMca3po-P3XxyQVa.mp4
-rw-r--r-- 1 root root 773M May 30 20:49 0PD5NHwvcHCyqBW10OHjXNp6.mp4
-rw-r--r-- 1 root root 32M May 29 10:57 0gVIwfG9iUrr4LgZ7B4nj0lh.wav
-rw-r--r-- 1 root root 285M May 20 12:51 0ivU3H-H5UVFr_pGHnUZC5i8.mp4
-rw-r--r-- 1 root root 1.3G May 20 14:29 1OJcVLRZu9IaBzS8sjBb6Pyt.mp4
-rw-r--r-- 1 root root 24M May 29 10:57 2MmAbQr3bHzIr3YBwQ0vxx6H.wav
-rw-r--r-- 1 root root 1.6G May 20 17:16 2W9hoUqn4P_o_knItBS67roC.mp4
-rw-r--r-- 1 root root 2.0G May 20 17:41 2jCmAN6k66TMsDveEFymrUc2.mp4
-rw-r--r-- 1 root root 722M May 25 12:31 3Dn7W1qdek7dHzl2DCE_r7Oy.mp4
-rw-r--r-- 1 root root 206M May 26 17:49 3l09HELCbvab-3JuPzn9beQV.mp4
-rw-r--r-- 1 root root 691M May 20 12:02 3uAaDEsKnUIYiwPqVrvBNpLW.mp4
-rw-r--r-- 1 root root 1.7G May 20 16:24 43ozkqpS5eSPdDu5lH0g-TrA.mp4
-rw-r--r-- 1 root root 893M May 23 19:38 4WMP1WSNJSoTb_JC8eELHgBw.mp4
-rw-r--r-- 1 root root 2.7G May 20 18:17 4zyr1wP6x7kol_I7KyTxCRuC.mp4
-rw-r--r-- 1 root root 1.2G May 20 14:01 5J8Yzydl0JaqXVwx5eOcsnHG.mp4
-rw-r--r-- 1 root root 1.5G May 20 15:04 6a-Rch6JMMVKNxScWLbRhNej.mp4
-rw-r--r-- 1 root root 1.5G May 20 16:28 6eStd3htgBBORarTU7KRcfib.mp4
-rw-r--r-- 1 root root 43M May 26 18:13 7FCXztMm6avCLwg4928xN1Us.mp4
-rw-r--r-- 1 root root 1.9G May 20 17:47 7FMk3KiO-XdbH0ackDu8WNAB.mp4
-rw-r--r-- 1 root root 1.4G May 26 12:00 7LiJ8GPR6CANDoBc5Hi0U6Bh.mp4
-rw-r--r-- 1 root root 388M May 26 18:19 7WJajkpjHZ-_fRC5X5JdQZ7Z.mp4
-rw-r--r-- 1 root root 41M May 26 11:54 7uAq-IwmbkaBN4lknPWtQrdY.mp4
-rw-r--r-- 1 root root 293M May 26 17:48 96g09TTadLH9u4TZ-ANs1rMm.mp4
-rw-r--r-- 1 root root 492M May 20 12:04 97lsl2kmk0TYKs1G6V3JNIDV.mp4
-rw-r--r-- 1 root root 1.9G May 20 18:02 9JdvMD6wTyhiDzaRW7qNQKTH.mp4
-rw-r--r-- 1 root root 3.2G May 20 14:39 A-TH8ayPCIqwHErZDc1hJ-mZ.mp4
-rw-r--r-- 1 root root 731M May 26 18:03 AOfkTofzY554Eyyvv_ktLnR_.mp4
-rw-r--r-- 1 root root 496M May 20 11:53 Alcm67xX01zsFu7XPeJw1EAi.mp4
-rw-r--r-- 1 root root 1.9G May 20 13:22 BhriY68M_QDXRU03iYb8aMj-.mp4
-rw-r--r-- 1 root root 377M May 26 18:12 C4btxzo8oMlRnv0wlG_9pPdJ.mp4
-rw-r--r-- 1 root root 1.9G May 20 13:46 Dfn0tISTx1SdbPBZVwqR-A50.mp4
-rw-r--r-- 1 root root 418M May 20 12:22 E5ezhGVju75qs4996hYV53sr.mp4
-rw-r--r-- 1 root root 902M May 30 20:56 ED7IP87SAxRpMHPeJw0gS5Ig.mp4
-rw-r--r-- 1 root root 1.7G May 20 17:35 EOasUK6qeSuwt9AzVpm4khpa.mp4
-rw-r--r-- 1 root root 157M May 26 17:51 Eq6DZ4-DJnKeQ-hzMZmpzWRt.mp4
-rw-r--r-- 1 root root 1.8G May 26 11:38 FkrrBHV3qWKamDzSB_NuUnLy.mp4
-rw-r--r-- 1 root root 1.3G May 20 13:32 FtZSEF9xDFvHyxpqYyH4w6OI.mp4
-rw-r--r-- 1 root root 586M May 20 13:57 G9lYmMQdxkkVAt_oryG_1zf7.mp4
-rw-r--r-- 1 root root 2.4G May 20 18:10 GpJajRF3_P1eEJhmelNRewfR.mp4
-rw-r--r-- 1 root root 40M May 29 10:58 GrvUgP_8Ruu9AcyFBCoVuxdx.wav
-rw-r--r-- 1 root root 340M May 30 20:42 GuiNGpI65P_ORQihBle9dfIW.mp4
-rw-r--r-- 1 root root 50M May 26 18:17 Hy4v_M56sY6PgepX13g-7a2R.mp4
-rw-r--r-- 1 root root 290M May 20 12:39 ICWFP3yjybxvd0Omp97NPYwx.mp4
-rw-r--r-- 1 root root 634M May 20 12:29 IKbOuQAq73HTM2cZTAPWoYpc.mp4
-rw-r--r-- 1 root root 1.7G May 20 15:46 J6lY8DeQsZzVipaJhQ4ZHR7o.mp4
-rw-r--r-- 1 root root 584M May 20 12:51 JHk11UrPle1EZePvQEkyJsi4.mp4
-rw-r--r-- 1 root root 59M May 26 18:17 JPdRyJ8brxmT9nK_8t6g_5aJ.mp4
-rw-r--r-- 1 root root 1.2G May 20 13:27 JX4EQwTHDGCdsWVcZaWOOjkK.mp4
-rw-r--r-- 1 root root 177M May 26 18:00 LeZbMxAb3I2j2YutFiyz036z.mp4
-rw-r--r-- 1 root root 2.6G May 20 15:11 LsjYxUycWLII6pqR9TB1P8d7.mp4
-rw-r--r-- 1 root root 33M May 29 10:57 MJSBcPE3dvigrTN2af32ju5R.wav
-rw-r--r-- 1 root root 2.3G May 20 14:54 MQDExgN7hUkv-wy36lJvq8Ew.mp4
-rw-r--r-- 1 root root 319M May 26 11:32 NZXi5L4tyXJHA36KVjkoBjAo.mp4
-rw-r--r-- 1 root root 484M May 20 12:07 NkAG6803oCLgU-LiCEi46EJa.mp4
-rw-r--r-- 1 root root 2.0G May 20 14:46 NsHmhEQ9vbwfoV5l-_PG3V7g.mp4
-rw-r--r-- 1 root root 325M May 20 19:27 O5SlPE4FLOuqGTZjKVeEV-iv.mp4
-rw-r--r-- 1 root root 866M May 20 11:48 OMsHdSlMbw1YxUHvMDVVvuPq.mp4
-rw-r--r-- 1 root root 1.9G May 20 17:28 OszH7FbqGto5CKmW55sUCUMg.mp4
-rw-r--r-- 1 root root 30M May 29 10:58 OvcAUGvT9F9ivZ5ZJ51dWQpF.wav
-rw-r--r-- 1 root root 1.3G May 20 13:34 OyEBSKwKvKZYuT58lSivfyBm.mp4
-rw-r--r-- 1 root root 51M May 29 10:57 P8hZzG0lqCenq6VQ2pxSCT6P.wav
-rw-r--r-- 1 root root 199M May 26 11:36 Pchx7wxD2bJK5IpC4TXDcgUd.mp4
-rw-r--r-- 1 root root 77M May 25 13:23 Plzm5S6c2woqz2Jz5-ABqiep.mp4
-rw-r--r-- 1 root root 297M May 23 19:39 RRMDAsc7SwhvE0Z0w_Eyuahb.mp4
-rw-r--r-- 1 root root 1.3G May 20 11:57 RaenQ-PiEd_nrKBRQMXVK25O.mp4
-rw-r--r-- 1 root root 1.9G May 20 13:46 SXM8teq8DVLmiKAL1eE_PTxr.mp4
-rw-r--r-- 1 root root 680M May 20 12:39 Sh2wIFlT8cg6Cjh31rUTlz_7.mp4
-rw-r--r-- 1 root root 379M May 20 20:10 UC9z-j60Hci2l0QZBFbbZfiS.mp4
-rw-r--r-- 1 root root 228M May 23 19:40 UhHElZ5e3BwNQEajnB6GpcC6.mp4
-rw-r--r-- 1 root root 1.1G May 20 14:05 V3Wf6k5LskSoG7FviMaCmSY1.mp4
-rw-r--r-- 1 root root 135M May 23 14:48 VBTTVuPeQuBhZgH-LtCqiSVy.mp4
-rw-r--r-- 1 root root 806M May 25 13:27 VEMzljNEMcAzksAo_nb6RuH9.mp4
-rw-r--r-- 1 root root 1.4G May 20 15:56 VkNRBxVYlvLB9dx3-Q16Emb3.mp4
-rw-r--r-- 1 root root 456M May 20 12:31 VwdvdtzDbjA_jQ3qhkNXC2S6.mp4
-rw-r--r-- 1 root root 429M May 20 13:06 X8Ikbg6hNG71BUF8oC7zjVep.mp4
-rw-r--r-- 1 root root 176M May 26 12:01 XBnF4Y6cCwJij_XBr2HA8vJY.mp4
-rw-r--r-- 1 root root 2.2G May 20 16:35 Y4fqlGk8W6Su5FXtTba6HWBH.mp4
-rw-r--r-- 1 root root 773M May 20 11:46 YBHxC2Vo3AHszIYD3x-K9gns.mp4
-rw-r--r-- 1 root root 16M May 29 11:22 YMpOo_vqfnzep8xUx8zrWQpp.wav
-rw-r--r-- 1 root root 1.2G May 20 13:54 YSJU8K5Caudu1oMhX0-a5HIS.mp4
-rw-r--r-- 1 root root 3.6G May 20 19:36 YW64K1HL_7oyQEwJCfbz5nKH.mp4
-rw-r--r-- 1 root root 270M May 26 11:56 YiSQ7xKap3kpTiJPwFaUipI2.mp4
-rw-r--r-- 1 root root 190M May 20 20:31 YqP5gd4str1rdX4Fxa1_X7uz.mp4
-rw-r--r-- 1 root root 2.2G May 20 14:23 ZCtXoF9dzT5KjJxw0QOnhh8Z.mp4
-rw-r--r-- 1 root root 41M May 26 18:17 ZZoFmB_p0Hqtx2sNiEitKKpH.mp4
-rw-r--r-- 1 root root 140M May 26 11:54 _0H5pzEwB0FzZBWTNyB3XSH-.mp4
-rw-r--r-- 1 root root 3.8G May 20 11:27 _0lpdtFL4Kk_46NOWZapOPVv.mp4
-rw-r--r-- 1 root root 741M May 26 11:32 _fDYIkByrZ3noWw3Ps6y_27x.mp4
-rw-r--r-- 1 root root 1.3G May 30 20:32 _tPQ6lck1Ac_pGfv9ShU-uCf.mp4
-rw-r--r-- 1 root root 342M May 23 14:50 aqchuAFh5xyS0ulo3TxCnOq7.mp4
-rw-r--r-- 1 root root 1.1G May 20 17:10 b5t3MXVuWtmmsMF7P47S_xIu.mp4
-rw-r--r-- 1 root root 525M May 20 12:25 bLEfDuneT1X4i2ADPKlSPrCi.mp4
-rw-r--r-- 1 root root 296M May 20 20:27 c36ixv72ro6VQB0zGx3jFrqz.mp4
-rw-r--r-- 1 root root 974M May 20 17:07 ctJQUfzn8Runpytz0Vln60OM.mp4
-rw-r--r-- 1 root root 42M May 21 09:14 di0_xKFUQ4Vb2HMQiDd2GUwx.wav
-rw-r--r-- 1 root root 47M May 29 11:21 eWKDqF5YQ2cNsnCn3aAmIaAR.wav
-rw-r--r-- 1 root root 293M May 29 11:12 fRK0amAQCgKBeMz1Re81qR4K.mp4
-rw-r--r-- 1 root root 2.1G May 20 16:48 fftwRj7aJaC-iPrPyVxzd7O9.mp4
-rw-r--r-- 1 root root 647M May 20 13:24 fg_DDLRbhZ8BGoqMJtFzOktQ.mp4
-rw-r--r-- 1 root root 1.1G May 20 13:02 fuF6EY2NmRfs1BfKvrEOpl37.mp4
-rw-r--r-- 1 root root 522M May 20 12:20 gZfgsbBBIPNGAStQozof76C_.mp4
-rw-r--r-- 1 root root 904M May 25 13:29 grisaZAd45PjVJG7JurOG08d.mp4
-rw-r--r-- 1 root root 1.2G May 26 11:57 hUg338GVp_bCHafdCwvWVqRJ.mp4
-rw-r--r-- 1 root root 1.1G May 20 12:00 heTGjUl0oz0rHHoWEgYNucFr.mp4
-rw-r--r-- 1 root root 1.5G May 20 19:40 hzb0iQmQCETuEZVaKYyBT_j-.mp4
-rw-r--r-- 1 root root 895M May 23 14:54 iPU0QF5BtinSRrxdFSX3z6Pr.mp4
-rw-r--r-- 1 root root 2.1G May 20 15:00 j1iGJbesY5WlU6cdHForhR_M.mp4
-rw-r--r-- 1 root root 435M May 20 12:35 j5gyxOEYXvhggAUoim-9JAdi.mp4
-rw-r--r-- 1 root root 40M May 26 18:17 jofAl9muVGf-AlW9S1cr35Z5.mp4
-rw-r--r-- 1 root root 37M May 29 10:58 kkv3FaqcFa0iRWjXGMwqrt9Y.wav
-rw-r--r-- 1 root root 271M May 26 11:36 lehhNnBVgXenA8LchONjlCLx.mp4
-rw-r--r-- 1 root root 1.7G May 20 15:23 m4FIMZK4PK5IdespZzjD-LAW.mp4
-rw-r--r-- 1 root root 377M May 26 18:17 m56N2T-LDexVZllePBjyX5vV.mp4
-rw-r--r-- 1 root root 55M May 26 18:17 mrVn-Ja3gvKz0ip1S_dz_xKb.mp4
-rw-r--r-- 1 root root 37M May 29 11:21 nHlXzZnAVcnzL1KFJierDODm.wav
-rw-r--r-- 1 root root 1.7G May 20 16:01 nam0Bnj1-W6ZnclNJvJvv89S.mp4
-rw-r--r-- 1 root root 420M May 26 17:51 nvvtIhNU2on2pNc7L9ihAxCm.mp4
-rw-r--r-- 1 root root 1.1G May 20 13:00 nwSQ4g20ocIZMXPQyxV9gcQu.mp4
-rw-r--r-- 1 root root 138M May 20 12:08 o7Q17cnI_Y_ao_QNo2rsmMEH.mp4
-rw-r--r-- 1 root root 35M May 26 18:17 oZHKQUXngFaJ3-8XjYnozIWc.mp4
-rw-r--r-- 1 root root 465M May 26 17:47 p3v34DQmajvxZUSPTkaiGzH_.mp4
-rw-r--r-- 1 root root 354M May 20 20:37 p6k2PM3n74N-2PVbuvZ2P_-C.mp4
-rw-r--r-- 1 root root 1.3G May 28 16:21 q7WUNBf4ukiiOZpjYTbDay2n.mp4
-rw-r--r-- 1 root root 694M May 20 12:06 qNsyEsL5hGcbnpejlgpn5s1X.mp4
-rw-r--r-- 1 root root 220M May 26 11:37 qV83Ui82P8NmhjactYM75PQr.mp4
-rw-r--r-- 1 root root 740M May 29 11:18 rBeECqPzXEDzD0tsJHw4zNyF.mp4
-rw-r--r-- 1 root root 1.1G May 20 16:53 rCL8jgw9oXbZMiuaxkTN62fL.mp4
-rw-r--r-- 1 root root 186M May 23 19:41 rPR7BcWS2g8pnsQq_PF0V4Yy.mp4
-rw-r--r-- 1 root root 32M May 26 18:17 rPlywafz2Tpxl3ybXEHS1_SE.mp4
-rw-r--r-- 1 root root 653M May 20 12:48 re9C-TOqNTzj_9jxR8-IL9F3.mp4
-rw-r--r-- 1 root root 506M May 20 13:07 ssbaOJslRD7WOYGfLZ6V1vZu.mp4
-rw-r--r-- 1 root root 1.2G May 20 14:12 tUV0D9eueawU4C4ArhXbiQdV.mp4
-rw-r--r-- 1 root root 1.6G May 20 15:16 t_lbOWfA8095RkXKcPC5PfJR.mp4
-rw-r--r-- 1 root root 1.7G May 20 16:40 u8y_JFNOPQuvLxFTVfTntrph.mp4
-rw-r--r-- 1 root root 54M May 26 11:38 uXXtn_bQvndlSvaVZAi9hcfX.mp4
-rw-r--r-- 1 root root 1.1G May 20 11:52 ubu-VaLYtTvsTj-EjqjS8GQs.mp4
drwxr-xr-x 3 root root 4.0K May 15 13:39 v8-compile-cache-0
-rw-r--r-- 1 root root 32M May 29 11:20 vDlBz8h09tXhxBb1qUUTSsEC.wav
-rw-r--r-- 1 root root 42M May 26 18:17 vWyTNWAYXx4km_TnO8bShKo3.mp4
-rw-r--r-- 1 root root 30M May 29 11:22 vbK_1rTfZxicn1YNpA3jeun_.wav
-rw-r--r-- 1 root root 523M May 20 13:36 w74k40ynd5NwFqXJvZGb_kqO.mp4
-rw-r--r-- 1 root root 973M May 20 14:16 w8ZYAS6VfF4rY9m4JNzYTriY.mp4
-rw-r--r-- 1 root root 445M May 20 12:49 xQ7ng7Jv6mMxY5SBkGNxenKn.mp4
-rw-r--r-- 1 root root 1010M May 20 14:09 xhQNc2x9fxW9RDCpykIJnI_f.mp4
-rw-r--r-- 1 root root 790M May 30 20:54 xs7j2DV86LFhALFgh6DoTOwc.mp4
-rw-r--r-- 1 root root 43M May 29 11:22 xw4cK9Laz7MNSVi_ACNthAlU.wav
-rw-r--r-- 1 root root 1.1G May 26 11:38 yTYtwv61fl1uCHp2KmLlggHj.mp4
-rw-r--r-- 1 root root 31M May 29 11:21 yqB8s0kMiPS4DFLlmDA1TKqH.wav
-rw-r--r-- 1 root root 249M May 20 12:53 zM7pGFL9qBntFQcufioiAADt.mp4
-rw-r--r-- 1 root root 594M May 25 12:36 ze_tnsaG-YLJGe1Clpp4ybsS.mp4
root@sftp:/tmp#
```

-
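When a temp directory holds hundreds of randomly named files like these, a per-extension total makes it easier to see what is actually taking the space. A minimal sketch, assuming GNU `find` (for `-printf`) on the machine where it runs; `size_by_extension` is a hypothetical helper name. Running it on the host against the volume's `_data` directory avoids depending on which tools are installed inside the container.

```bash
#!/usr/bin/env bash
# size_by_extension [DIR]
# Hypothetical helper: total bytes per file extension for the regular files
# directly inside DIR (not recursive), largest first.
size_by_extension() {
  local dir="${1:-/tmp}"
  # GNU find: %s = size in bytes, %f = base name of the file.
  find "$dir" -maxdepth 1 -type f -printf '%s %f\n' \
    | awk '{
        size = $1
        name = substr($0, index($0, " ") + 1)   # keep names with spaces intact
        n = split(name, parts, ".")
        ext = (n > 1) ? parts[n] : "(none)"
        total[ext] += size
      }
      END { for (e in total) printf "%.0f %s\n", total[e], e }' \
    | sort -rn
}
```

For example, as root: `size_by_extension /var/lib/docker/volumes/<volume>/_data`, with `<volume>` replaced by the volume name from `docker system df -v`.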

I'm currently experiencing an issue with my Cloudron server where the `/tmp` directories in several Docker containers are consuming a significant amount of disk space. Following the initial guidance, I performed the following steps:

Checked the mounted volumes for each container:

Using the following command, I identified the volumes mounted in each container and found that several containers have significant data in their `/tmp` directories:

```
sudo docker ps -a --format '{{ .ID }}' | xargs -I {} sudo docker inspect -f '{{ .ID }} {{ .Name }}{{ printf "\n" }}{{ range .Mounts }}{{ printf "\n\t" }}{{ .Type }} {{ if eq .Type "bind" }}{{ .Source }}{{ end }}{{ .Name }} => {{ .Destination }}{{ end }}{{ printf "\n" }}' {}
```

Detailed inspection of `/tmp` directories:

I listed the contents of the `/tmp` directories in each container using:

```
containers=$(sudo docker ps -a -q)
for container in $containers; do
  echo "Checking /tmp in container $container:"
  sudo docker exec -it "$container" sh -c "du -sh /tmp/*" || echo "/tmp is empty or not accessible"
  echo "-----------------------"
done
```

This revealed that several containers have large files or directories within `/tmp`, some reaching up to several gigabytes.

Plan to clean `/tmp` directories:

Based on the findings, I plan to clean up the `/tmp` directories using the following command:

```
containers=$(sudo docker ps -a -q)
for container in $containers; do
  echo "Cleaning /tmp in container $container:"
  sudo docker exec -it "$container" sh -c "rm -rf /tmp/*" || echo "/tmp is empty or not accessible"
  echo "-----------------------"
done
```

However, before proceeding, I wanted to consult with the Cloudron team to ensure that this approach won't inadvertently affect any critical application functionality.

Additionally, here are the details regarding the container consuming the most space:

Inside one of the containers, it seems that there are parts of Emby content (videos and audio files) that should not be there because Emby was moved to a new volume. However, the server disk still has these files:

```
user:~$ sudo docker exec -it docker_ID /bin/bash
root@sftp:/app/code# cd /tmp
root@sftp:/tmp# ls -lah
total 130G
-rw-r--r-- 1 root root 631M May 20 12:43 -9Xo-89Hb6E0UzpXKPvMtvT_.mp4
-rw-r--r-- 1 root root 2.5G May 20 16:13 -B0LYra-w572_N_Fl-z1Fg8Y.mp4
-rw-r--r-- 1 root root 971M May 20 13:54 -H0OPKJKqQmK53HFZTtxHkCe.mp4
-rw-r--r-- 1 root root 727M May 20 17:56 -XLVWBgNrI4b4bLPiShFvnAo.mp4
-rw-r--r-- 1 root root 1.7G May 20 17:52 -kzTrAAk7QbN6242Zsh0y2WG.mp4
-rw-r--r-- 1 root root 38M May 29 11:20 0AO3hMZZHlD0vqoaeWODHD3u.wav
-rw-r--r-- 1 root root 1.9G May 20 17:22 0K4x3d-JnMca3po-P3XxyQVa.mp4
-rw-r--r-- 1 root root 773M May 30 20:49 0PD5NHwvcHCyqBW10OHjXNp6.mp4
-rw-r--r-- 1 root root 32M May 29 10:57 0gVIwfG9iUrr4LgZ7B4nj0lh.wav
-rw-r--r-- 1 root root 285M May 20 12:51 0ivU3H-H5UVFr_pGHnUZC5i8.mp4
...
-rw-r--r-- 1 root root 1.2G May 20 13:54 YSJU8K5Caudu1oMhX0-a5HIS.mp4
-rw-r--r-- 1 root root 3.6G May 20 19:36 YW64K1HL_7oyQEwJCfbz5nKH.mp4
-rw-r--r-- 1 root root 270M May 26 11:56 YiSQ7xKap3kpTiJPwFaUipI2.mp4
-rw-r--r-- 1 root root 190M May 20 20:31 YqP5gd4str1rdX4Fxa1_X7uz.mp4
-rw-r--r-- 1 root root 2.2G May 20 14:23 ZCtXoF9dzT5KjJxw0QOnhh8Z.mp4
...
```

Questions:

- Is there a recommended way to handle large `/tmp` directories in Docker containers on a Cloudron server?
- Are there any specific precautions I should take before cleaning these directories?
- Is there a way to prevent these large files from accumulating in `/tmp` in the future?

Any guidance or recommendations would be greatly appreciated.
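Before running a blanket `rm -rf /tmp/*` across every container, a more cautious pattern is to preview what would be removed and to limit deletion to files that have not been modified for a while, so files an app is still writing are left alone. A minimal sketch, assuming GNU `find`; `prune_old_tmp` is a hypothetical helper, and the 10-day default is only an example threshold.

```bash
#!/usr/bin/env bash
# prune_old_tmp DIR [DAYS] [--apply]
# Hypothetical helper: list (dry run, the default) or delete regular files
# under DIR that were last modified more than DAYS days ago.
prune_old_tmp() {
  local dir="$1" days="${2:-10}" mode="${3:-}"
  if [[ ! -d "$dir" ]]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  if [[ "$mode" == "--apply" ]]; then
    # -xdev: stay on DIR's filesystem; -delete removes each printed match.
    find "$dir" -xdev -type f -mtime +"$days" -print -delete
  else
    # Dry run: only print what would be deleted.
    find "$dir" -xdev -type f -mtime +"$days" -print
  fi
}
```

Run it without `--apply` first and review the list; on the host, `DIR` would be the `_data` directory of the container's tmp volume.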

-

-

@Dont-Worry Not sure why all those files are there. They are not created by Cloudron itself; we don't use an app's tmp directory. Maybe some Emby feature is buggy? I have been using Emby as well for many years now, and my Emby's tmp is practically empty.

Cloudron has some logic to clean up the tmp directories of apps. It deletes files older than 10 days using `find /tmp -type f -mtime +10 -exec rm -rf {} +`.

-
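Because `-mtime +10` only matches files whose age, counted in whole days, is greater than 10 (so at least 11 days), anything written more recently survives the rule, and a directory that keeps receiving large files can still hold roughly ten days' worth of them. A minimal sketch for checking how much space the same age rule would free right now, assuming GNU `find`; `reclaimable_by_age` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# reclaimable_by_age DIR [DAYS]
# Hypothetical helper: total bytes of regular files under DIR that match
# find's -mtime +DAYS test, i.e. what an age-based cleanup would remove.
reclaimable_by_age() {
  local dir="$1" days="${2:-10}"
  # %s prints each matching file's size in bytes; awk sums them (0 if none).
  find "$dir" -type f -mtime +"$days" -printf '%s\n' \
    | awk '{ sum += $1 } END { printf "%.0f\n", sum }'
}
```

A result far smaller than the directory's total size means most of the data is newer than the threshold.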


@Dont-Worry said in "docker-volumes" is filling my whole server storage:

Cloudron server where the /tmp directories in several Docker containers are consuming a significant amount of disk space

I thought the issue is Emby primarily. Do you see this for other apps too?

-

-


@Dont-Worry said in "docker-volumes" is filling my whole server storage:

Any guidance or recommendations would be greatly appreciated.

I would just clean up Emby's tmp by hand for now (just rm them). After that, periodically monitor the tmp directory to see what is accumulating there.
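For the periodic monitoring suggested here, a small check that logs a directory's size and fails when it crosses a limit can be run by hand or from cron. A minimal sketch; `check_dir_size` is a hypothetical helper, and the limit you pass is your own choice.

```bash
#!/usr/bin/env bash
# check_dir_size DIR LIMIT_MB
# Hypothetical helper: print a timestamped size line for DIR and return 1
# (with a warning on stderr) if DIR uses more than LIMIT_MB MiB.
check_dir_size() {
  local dir="$1" limit_mb="$2" used_mb
  # du -sm reports total disk usage in MiB (rounded up); cut keeps the number.
  used_mb=$(du -sm "$dir" | cut -f1)
  echo "$(date '+%F %T') $dir ${used_mb}MiB"
  if (( used_mb > limit_mb )); then
    echo "WARNING: $dir exceeds ${limit_mb}MiB" >&2
    return 1
  fi
}
```

For example, `check_dir_size /var/lib/docker/volumes/<volume>/_data 5000 >> /var/log/tmp-usage.log` (log path hypothetical); the non-zero exit status can feed whatever alerting you already use.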

-

-

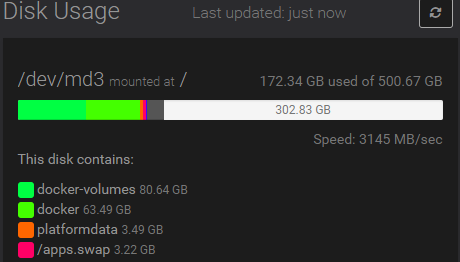

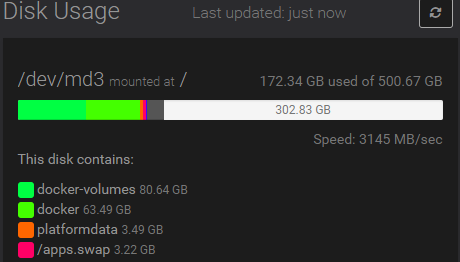

Thank you for your guidance. Following your recommendations, we have successfully managed to free up a significant amount of storage. Here's an overview of what we did:

Identified the issue: We found that a large number of video and audio files had accumulated in the /tmp directory of one of the Docker containers (we still don't understand how they got there). These files were not expected to be there, as we had already moved Emby to a new volume.

Cleaned up manually: As you suggested, we manually cleaned up the /tmp directory of the affected container. This involved deleting all the unnecessary files that were consuming a lot of space.

Before the cleanup, the Docker volumes were consuming approximately 158GB of storage. After the cleanup, we managed to reduce it down to just 15GB.

Here's a brief look at the cleanup process:

```
user:~$ sudo docker exec -it <container_id> /bin/bash
root@sftp:/app/code# cd /tmp
root@sftp:/tmp# ls -lah
...
# Deleted all the large unwanted files
root@sftp:/tmp# rm -rf *.mp4 *.wav
```

We will continue to monitor the /tmp directories in our Docker containers to ensure they do not fill up again. For now, this manual cleanup has largely resolved our storage issue.
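One caveat with `rm -rf *.mp4 *.wav` in this directory: several file names in the earlier listing begin with `-` (for example `-9Xo-89Hb6E0UzpXKPvMtvT_.mp4`), and `rm` can parse such names as options and stop with an "invalid option" error. Writing `rm -f -- *.mp4 *.wav` or `rm -f ./*.mp4 ./*.wav` avoids that. `find` passes full paths, so it is not affected; below is a minimal sketch with a dry-run mode, assuming GNU `find` (for `-delete`) and bash; `delete_by_ext` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# delete_by_ext DIR --dry-run|--apply EXT [EXT...]
# Hypothetical helper: list (or delete) regular files directly inside DIR
# whose names end in one of the given extensions.
delete_by_ext() {
  if (( $# < 3 )); then
    echo "usage: delete_by_ext DIR --dry-run|--apply EXT [EXT...]" >&2
    return 2
  fi
  local dir="$1" mode="$2" ext
  shift 2
  local expr=()
  for ext in "$@"; do
    expr+=( -o -name "*.$ext" )
  done
  expr=( "${expr[@]:1}" )   # drop the leading -o: -name a -o -name b ...
  case "$mode" in
    --dry-run) find "$dir" -maxdepth 1 -type f \( "${expr[@]}" \) -print ;;
    --apply)   find "$dir" -maxdepth 1 -type f \( "${expr[@]}" \) -print -delete ;;
    *) echo "unknown mode: $mode" >&2; return 2 ;;
  esac
}
```

For example, `delete_by_ext /tmp --dry-run mp4 wav` first, then the same call with `--apply` once the list looks right.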

-

@Dont-Worry said in "docker-volumes" is filling my whole server storage:

Cloudron server where the /tmp directories in several Docker containers are consuming a significant amount of disk space

I thought the issue is Emby primarily. Do you see this for other apps too?

@girish There are still 15GB of data in docker-volumes; I hope it won't grow the same way. For now, this solution works as a short-term fix.

-

@Dont-Worry When you have time, dig into those 15GB too. Maybe some /run or /tmp is getting filled up with something. We have to do this app by app to understand why it's getting filled up because, as mentioned, Cloudron doesn't access those directories; they are part of the apps.
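Going app by app can be partly automated on the host: `docker inspect` reports each mount's type, host source path and container destination, so the volumes mounted at `/tmp` or `/run` can be sized directly. A minimal sketch, assuming it runs as root on the Docker host with bash and GNU `du`, and that the relevant mounts are Docker volumes (type `volume`); `tmp_run_volume_sizes` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# tmp_run_volume_sizes
# Hypothetical helper: for every container, size the Docker volumes mounted
# at /tmp or /run. Output: "<KiB><TAB><container name><TAB><destination>",
# largest first.
tmp_run_volume_sizes() {
  local id name
  for id in $(docker ps -aq); do
    name=$(docker inspect -f '{{.Name}}' "$id")
    # One line per mount: "<type> <host source> <container destination>".
    docker inspect -f '{{range .Mounts}}{{.Type}} {{.Source}} {{.Destination}}{{"\n"}}{{end}}' "$id" |
      while read -r type src dest; do
        # Keep only volumes mounted at /tmp or /run; bind mounts are skipped.
        if [[ "$type" == volume && ( "$dest" == /tmp || "$dest" == /run ) ]]; then
          printf '%s\t%s\t%s\n' "$(du -sk "$src" | cut -f1)" "$name" "$dest"
        fi
      done
  done | sort -rn
}
```

Sizes are in KiB; the container name in each line can then be looked up with `docker inspect` to see which app it belongs to.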

-

Thanks for the guidance, @girish. We've followed your recommendations and conducted a thorough cleanup of the /tmp and /run directories within our Docker containers. Here's a summary of our findings and actions taken:

Containers cleaned:

- Emby
- N8N
- Cal
- Uptime Kuma
- OpenWebUI
- Penpot
- A few other containers that I could not identify precisely

Actions taken:

- Cleared substantial temporary and cache files from the /run and /tmp directories across these containers.
- Specifically, we found large amounts of data in the /run directories of Calcom and Penpot, and in the /tmp directories of Penpot and N8N.

Results:

- We successfully freed up approximately 8GB of space.
- There are still about 8.5GB left in the docker-volumes.

Despite the cleanup, it appears that some applications, particularly the ones mentioned, might still have some lingering files contributing to the remaining usage. We will continue to monitor the disk usage and investigate further if necessary.

-

girish has marked this topic as solved.


-

Hi,

I wanted to provide an update on the ongoing issue with my server storage being filled by docker-volumes. Despite performing manual cleanups and managing Docker volumes, the problem persists. The data continues to reappear, causing storage usage to climb rapidly.

A couple of days ago, after extensive cleaning, we managed to free up space and bring it down to around 20GB. However, the storage usage has been increasing at an alarming rate of approximately 20GB per day. This rapid growth is not only concerning but also quite unstable, making it difficult to maintain a functional server.

Having to manually clean up the storage repeatedly is both time-consuming and frustrating. This situation is unsustainable in the long term, and I am reaching out to see if anyone has further insights into why this is happening. What exactly is causing the docker-volumes to fill up so quickly? Is there a specific application or a known issue that might be contributing to this problem?

Any advice or solutions to address this issue more permanently would be greatly appreciated.

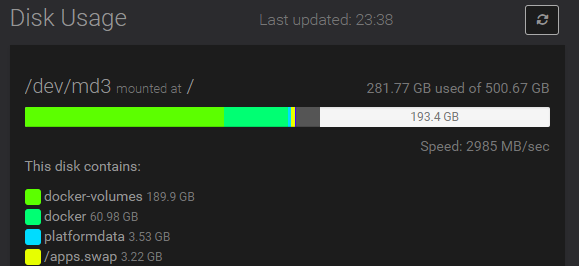

Nearly 18GB was added between 7 AM this morning (63GB in Docker-Volumes) and now (80.64GB).
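To find out which volume is responsible for growth like this, one approach is to record per-volume sizes at two points in time and compare them. A minimal sketch, assuming Docker's default volume location and GNU `du`/`xargs`/`awk`-compatible tools; `snapshot_volumes` and `volume_growth` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# snapshot_volumes [ROOT] > FILE
# Hypothetical helper: record "<KiB><TAB><path>" for every volume directory.
snapshot_volumes() {
  local root="${1:-/var/lib/docker/volumes}"
  find "$root" -mindepth 1 -maxdepth 1 -type d -print0 \
    | xargs -0 -r du -sk \
    | sort -k2
}

# volume_growth OLD_FILE NEW_FILE
# Hypothetical helper: print "<KiB grown><TAB><path>" for volumes that grew
# (volumes missing from OLD_FILE count as growing from 0), largest first.
volume_growth() {
  awk -F'\t' '
    FILENAME == ARGV[1] { old[$2] = $1; next }
    { delta = $1 - old[$2]; if (delta > 0) printf "%.0f\t%s\n", delta, $2 }
  ' "$1" "$2" | sort -rn
}
```

For example, `snapshot_volumes > /root/vols-morning.txt` in the morning, `snapshot_volumes > /root/vols-evening.txt` later (file names hypothetical), then `volume_growth /root/vols-morning.txt /root/vols-evening.txt | head`.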

-


@Dont-Worry said in "docker-volumes" is filling my whole server storage:

What exactly is causing the docker-volumes to fill up so quickly? Is there a specific application or a known issue that might be contributing to this problem?

Have you identified which apps are filling up the volumes this time around? Is it Emby again?

-

I don't have time to dig into this today; I'll do it tomorrow if I can. There's a good chance it is Emby, but other apps have the same problem too. It is not at the same scale, because we move more data to Emby, but still. I'll keep you informed ASAP.

-

Hi @girish and Cloudron team,

I wanted to update you on the situation with `docker-volumes` filling up the server storage. After manually cleaning up the Docker volumes, we managed to free up a significant amount of space, but the issue persists as the storage quickly fills up again. Upon investigation, I found that the large files (e.g., mp4, mkv, mp3, wav, srt, webm) I had added to Emby and other applications keep reappearing in the `docker-volumes` directory.

Here are the details of what I observed:

Volume Details:

- The largest Docker volume taking up space was `/var/lib/docker/volumes/6504b9b60123a2ea63aeae015e51ce21d350e8139f743dd0b0c62f1ab5c8e350/_data`, which had around 83GB of data.
- I deleted unnecessary files using the following command:

```
sudo find /var/lib/docker/volumes/6504b9b60123a2ea63aeae015e51ce21d350e8139f743dd0b0c62f1ab5c8e350/_data -type f \( -name "*.mp4" -o -name "*.mkv" -o -name "*.mp3" -o -name "*.wav" -o -name "*.srt" -o -name "*.webm" \) -exec rm -f {} \;
```

Possible Cause:

- I have a suspicion that this issue might be related to how I added a new storage volume to my server. Here's what I did:
  - Mounted the new storage volume on the server.
  - Added the new storage volume in Cloudron via the admin panel.
- It seems that all the files I add to Emby and possibly other applications using this new volume are also being copied to the base server's storage.

Observation:

- The files accumulating in docker-volumes are consistently those that were added recently, suggesting a duplication issue. While the new volume works correctly and the files are accessible there, they are also being unnecessarily duplicated on the server's base storage.
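One way to test the duplication hypothesis is to look for files that appear with the same name and size both in the Docker volume and on the new storage volume. This is a sketch that assumes bash (for process substitution) and GNU find. common_files is a hypothetical helper, and /path/to/new/volume below is a placeholder for wherever the new disk is mounted.

```shell
# Hypothetical helper: print "<file name><TAB><size in bytes>" pairs found under BOTH directories.
# Same name and size is a strong hint, not proof, that a file was copied; compare checksums to confirm.
common_files() {
  comm -12 \
    <(find "$1" -type f -printf '%f\t%s\n' | sort -u) \
    <(find "$2" -type f -printf '%f\t%s\n' | sort -u)
}
```

Usage: `common_files /var/lib/docker/volumes/6504b9b60123a2ea63aeae015e51ce21d350e8139f743dd0b0c62f1ab5c8e350/_data /path/to/new/volume`. An empty result would argue against a straight copy from one location to the other.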

Questions for the Cloudron Team:

- Could this be a bug or an issue with how Cloudron handles new storage volumes?

- Is it possible that Cloudron continues to store files on the base server storage even after moving applications to a new volume?

- How can we ensure that files are only stored on the new volume and not duplicated on the server's base storage?

Thank you for your assistance in resolving this issue. Your help and support have always been outstanding, and I hope we can find a solution to this soon. I'm ready to provide any additional information or perform further actions if needed.


-

@Dont-Worry said in "docker-volumes" is filling my whole server storage:

The largest Docker volume taking up space was /var/lib/docker/volumes/6504b9b60123a2ea63aeae015e51ce21d350e8139f743dd0b0c62f1ab5c8e350/_data, which had around 83GB of data.

a) Was this Emby's tmp directory?

b) How are you adding files to Emby? Are you just uploading with the File Manager, or is this via Emby's upload feature (i.e. Emby Premiere)?
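To answer (a), the volume ID can be mapped back to the container that uses it and to the path where it is mounted inside that container (e.g. /tmp or /run). This is a sketch built on the `volume` filter of `docker ps` and on `docker inspect`. volume_owner is a hypothetical helper, and it expects the full volume ID, since the template compares it exactly.

```shell
# Print "<container name> <mount destination>" for every container (running or stopped)
# that uses the given Docker volume. Pass the full volume ID.
volume_owner() {
  local vol="$1" id
  for id in $(docker ps -a -q --filter "volume=$vol"); do
    docker inspect -f "{{ .Name }}{{ range .Mounts }}{{ if eq .Name \"$vol\" }} {{ .Destination }}{{ end }}{{ end }}" "$id"
  done
}
```

Usage: `volume_owner 6504b9b60123a2ea63aeae015e51ce21d350e8139f743dd0b0c62f1ab5c8e350`. A destination of /tmp on the Emby container would answer question (a) directly.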

-

@Dont-Worry the term volume is used in many contexts and thus can be quite confusing/misleading. The explanation below might be more technical than you want it, but here goes:

- In Docker context, volume refers to storage partitions managed by Docker itself. Docker volumes can be named or anonymous. Cloudron does not use Docker's named volumes; it uses anonymous volumes for /tmp and /run of apps.
- In Cloudron context, volumes are external filesystems that are mounted into app containers. We use Docker's "bind mounts" for this.
- In server context, volumes refer to block storage: external disks that you can purchase separately from your VPS provider and attach to the server. For example, a DO (DigitalOcean) Volume.
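The difference between the first two kinds shows up in `docker inspect`. Docker-managed volumes appear with type `volume` and a volume ID, while Cloudron Volumes appear as `bind` mounts with a host path. In this sketch, show_mounts is a hypothetical helper, and the container argument is whatever `docker ps` reports for the app.

```shell
# Print each mount of a container as "<type> <volume ID or host path> -> <path in container>".
# Optional 2nd argument keeps only one type: "volume" (Docker volumes) or "bind" (bind mounts).
show_mounts() {
  docker inspect -f '{{ range .Mounts }}{{ .Type }} {{ if eq .Type "volume" }}{{ .Name }}{{ else }}{{ .Source }}{{ end }} -> {{ .Destination }}{{ printf "\n" }}{{ end }}' "$1" |
    awk -v t="${2:-}" 'NF && (t == "" || $1 == t)'   # drop blank lines, then filter by type
}
```

For an app with a Cloudron Volume attached, `show_mounts <container> volume` would be expected to list only the /tmp and /run anonymous volumes, and `show_mounts <container> bind` the host paths.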

With this in mind:

- Neither Cloudron nor Docker nor the VPS provider adds things to Docker's anonymous volumes (/tmp and /run). Only the app has access to its own /tmp and /run. These volumes are exclusive to the app and other apps cannot access them (i.e. unlike a regular server, where /tmp and /run are shared, on Cloudron they are completely separate for each app). This is why I asked whether the volume that keeps filling up is Emby's tmp directory. If so, either the Emby package or the Emby app is at fault.
- Neither Cloudron nor Docker nor the VPS provider adds things to Cloudron's Volumes. Only the app adds or removes files there. So again, either the Emby package or the Emby app is at fault.
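Since only the app writes to its own /tmp and /run, a direct way to check what it left there is to look from inside its container. This is a sketch; app_large_tmp_files is a hypothetical helper, and it assumes the app image ships GNU find (needed for `-printf`).

```shell
# List files bigger than a threshold (default 100M, using find's -size syntax) in an app's
# /tmp and /run, as "<bytes><TAB><path>", largest first. find runs inside the container.
app_large_tmp_files() {
  docker exec "$1" find /tmp /run -type f -size "+${2:-100M}" -printf '%s\t%p\n' 2>/dev/null |
    sort -rn
}
```

If recently added media files show up here for the Emby container, that would point at the app or its package, as described above, rather than at the new storage volume.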

Hope that helps clarify some confusion. It's pretty much 100% the fault of the app or the app package, but we need to identify the app(s) to fix the root cause.

Also, as a final note: app refers to Emby itself. App package refers to the Docker image that the Cloudron team has built from the Emby binaries and configuration files. The fault could lie in either of these two places.

-


G girish has marked this topic as unsolved on
