40GB Disk full with a single app - n8n

-

I noticed my Cloudron instance has its disk full (40GB).

Due to the lack of space, I can't make a Cloudron backup before doing things. I did a Hetzner Snapshot instead.

I can't log in to my n8n instance either (login/password): I get "Problem logging in : connect ECONNREFUSED fd00:c107:d509::4:5432", so I can't trigger a backup from there either (I have a workflow that does just that).

Hetzner doesn't allow me to simply increase the disk size; I need to add a Volume instead. The last time I played with that, I broke everything, and considering I can't do a backup... I'm playing it safe.

I believe the core issue in this case has to do with the n8n logs (they're stored in Postgres, and their size is 12 GB). I ran more operations today than usual, and I'm not sure how much disk space I had available before; maybe that filled what little was left.

https://community.n8n.io/t/hard-disk-full-where-to-delete-logs/2298

Any bright ideas on how to resolve this?

    root@unly-n8n:~# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    tmpfs           382M  1.8M  380M   1% /run
    /dev/sda1        38G   36G     0 100% /
    tmpfs           1.9G     0  1.9G   0% /dev/shm
    tmpfs           5.0M     0  5.0M   0% /run/lock
    /dev/sda15      253M  142K  252M   1% /boot/efi
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/f797c4c1c005c9129aea31407dc455d2100eb834513aaa76a5fa0a08979d74bb/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/d02eaa62c0423c990c16cfa61eba358b87b94e706e17e3b31a2c195e34e381c5/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/e7cf042bf6223b242488c492525656dcc67308d0c629a9020af2930114c51a6c/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/7381482e71e5fc423bc55350e0c0ed99300c80e9dac6f1dc1cced4422c848106/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/157344b3773f2f4cb9ec6e2415958197d644c9560cff6ba316ad2a5718056b99/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/32a88aaf52c508ee561d390ea85ebd63484712a754804a836b6df396ad6e508e/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/12319a31316ac3fb15c568dc5940f5a404005b67b2f0b7f418424c00fec9e67c/merged
    overlay          38G   36G     0 100% /var/lib/docker/overlay2/653289c4c1a2b5cdcc8a50d448dff14e0facdc525bc8bcd4082934a4ecf6e648/merged
    tmpfs           382M  4.0K  382M   1% /run/user/0
    root@unly-n8n:~# find / -type f -exec du -h {} + | sort -rh | head -n 10
    du: cannot access '/proc/70461/task/70461/fdinfo/5': No such file or directory
    du: cannot access '/proc/70461/task/70461/fdinfo/6': No such file or directory
    du: cannot access '/proc/70461/task/70461/fdinfo/7': No such file or directory
    du: cannot access '/proc/70461/fdinfo/5': No such file or directory
    12G   /home/yellowtent/appsdata/693caa42-bcf9-44c2-8f44-78f8676fe44c/postgresqldump
    3.8G  /apps.swap
    1.1G  /home/yellowtent/platformdata/postgresql/14/main/base/35086/35128.4
    1.1G  /home/yellowtent/platformdata/postgresql/14/main/base/35086/35128.3
    1.1G  /home/yellowtent/platformdata/postgresql/14/main/base/35086/35128.2
    1.1G  /home/yellowtent/platformdata/postgresql/14/main/base/35086/35128.1
    1.1G  /home/yellowtent/platformdata/postgresql/14/main/base/35086/35128
    255M  /var/cache/apt/archives/linux-firmware_20220329.git681281e4-0ubuntu3.30_all.deb
    243M  /home/yellowtent/platformdata/postgresql/14/main/base/35086/35128.5
    217M  /var/lib/docker/overlay2/e7cf042bf6223b242488c492525656dcc67308d0c629a9020af2930114c51a6c/merged/usr/lib/postgresql/14/lib/vectors.so

-
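The `find /` scan above also walks `/proc`, which is where the `du: cannot access` noise comes from. A minimal sketch of a quieter variant follows, assuming GNU `find` (for `-printf`); `largest_files` is a hypothetical helper name, and `-xdev` keeps the scan on a single filesystem so `/proc` and other mounts are skipped.

```shell
#!/bin/sh
# largest_files DIR [N]: print the N largest regular files under DIR
# (size in bytes, a tab, then the path), staying on DIR's filesystem.
# Hypothetical helper; assumes GNU find for -printf.
largest_files() {
    dir=$1
    count=${2:-10}
    # -xdev: do not descend into other mounted filesystems (/proc, overlays).
    find "$dir" -xdev -type f -printf '%s\t%p\n' 2>/dev/null \
        | sort -rn \
        | head -n "$count"
}
```

For example, `largest_files /home/yellowtent 10` would list the ten largest files under the Cloudron data directory seen in the output above.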

Considering the issue seemed to come from the n8n logs, I tried to prune them, but it failed. I don't know if it's an n8n bug or a bug related to "n8n managed by Cloudron".

I noticed some of the failures were related to installing custom node modules, so I disabled those and restarted, but it didn't start the pruning either.

https://gist.github.com/Vadorequest/9e16a84422341b96b64cd874e3d7868c
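For context, n8n prunes stored execution data based on environment variables rather than a one-off command. The sketch below shows the relevant settings with illustrative values that are not taken from this thread. Where they go is an assumption: on the Cloudron package this is typically a custom env file such as `/app/data/env.sh`, which should be checked against the Cloudron n8n documentation.

```shell
# Illustrative values only; tune them to your own retention needs.
# Enable automatic pruning of old execution data.
export EXECUTIONS_DATA_PRUNE=true
# Keep executions for at most 72 hours (the value is in hours).
export EXECUTIONS_DATA_MAX_AGE=72
# Also cap the number of executions kept in the database.
export EXECUTIONS_DATA_PRUNE_MAX_COUNT=5000
# Do not store data for successful runs; keep failed ones for debugging.
export EXECUTIONS_DATA_SAVE_ON_SUCCESS=none
export EXECUTIONS_DATA_SAVE_ON_ERROR=all
```

Note that deleting rows does not by itself hand disk space back to the operating system: PostgreSQL reuses the freed space internally, and actually shrinking the files needs something like `VACUUM FULL`, which in turn needs free disk space to rewrite the table.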

-

I tried to stop/start the n8n app, but it won't restart anymore, with:

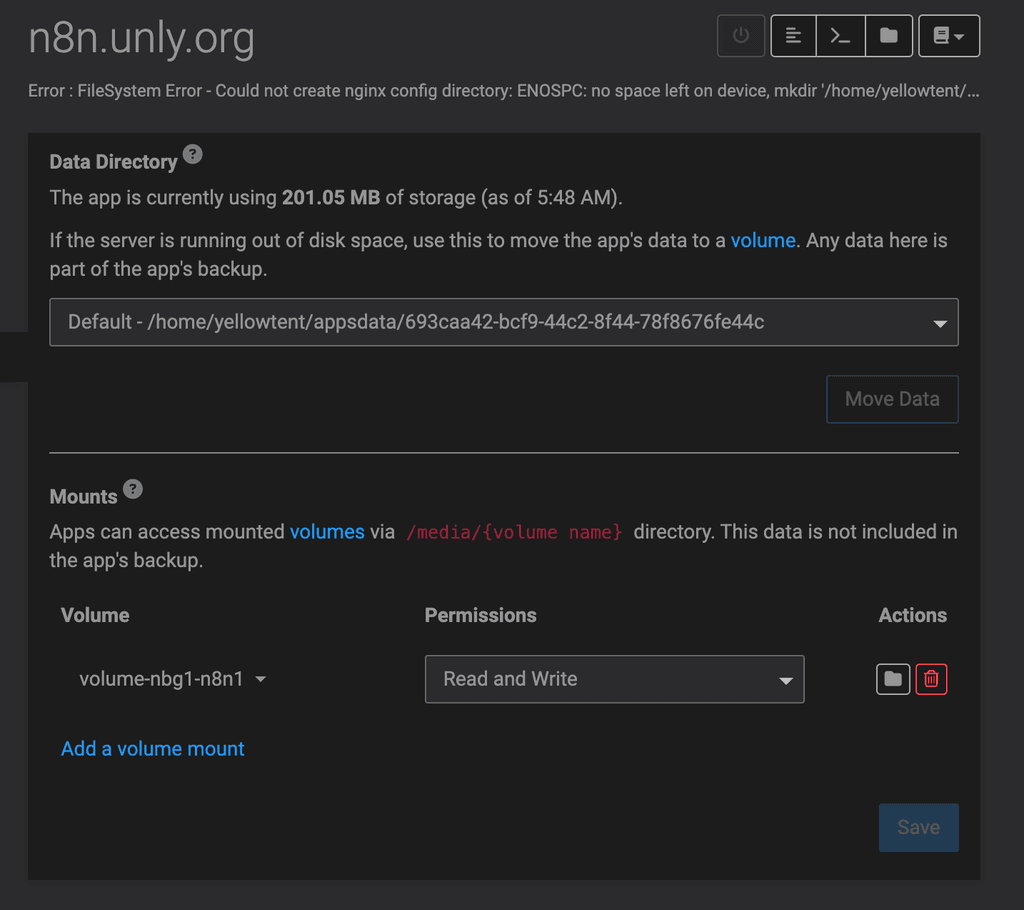

Error : FileSystem Error - Could not create nginx config directory: ENOSPC: no space left on device, mkdir '/home/yellowtent/platformdata/nginx/applications/693caa42-bcf9-44c2-8f44-78f8676fe44c'

-
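The ENOSPC error above can be raised when a filesystem runs out of either blocks or inodes, so it can help to check both. The following is a rough sketch, assuming a `df` that accepts `-P` together with `-i` (GNU coreutils does); `usage_pct` and `check_space` are hypothetical helper names.

```shell
#!/bin/sh
# usage_pct PATH [FLAG]: print the use percentage (digits only) of the
# filesystem holding PATH. FLAG is -k for blocks (default) or -i for inodes.
usage_pct() {
    df -P "${2:--k}" "$1" 2>/dev/null \
        | awk 'NR == 2 { gsub(/[^0-9]/, "", $5); print $5 }'
}

# check_space PATH [LIMIT]: report block and inode usage for PATH and
# warn when either reaches LIMIT percent (default 90).
check_space() {
    limit=${2:-90}
    for flag in -k -i; do
        pct=$(usage_pct "$1" "$flag")
        # An empty value (no inode data, unsupported flag) is treated as 0.
        if [ "${pct:-0}" -ge "$limit" ]; then
            echo "WARN $1 ($flag): ${pct:-?}% used"
        else
            echo "ok $1 ($flag): ${pct:-?}% used"
        fi
    done
}
```

Running something like `check_space / 90` from cron would have flagged the disk before it reached 100%.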

Due to the app being in "error state", I can't add a volume either.

The number of options at my disposal is starting to grow thin.

-

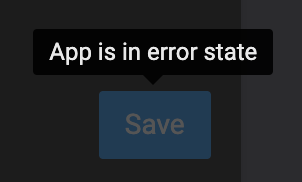

I thought Hetzner wasn't allowing me to rescale the instance to one with a bigger disk, but I was mistaken.

There was an option that was hiding the plans with different disk sizes.

-

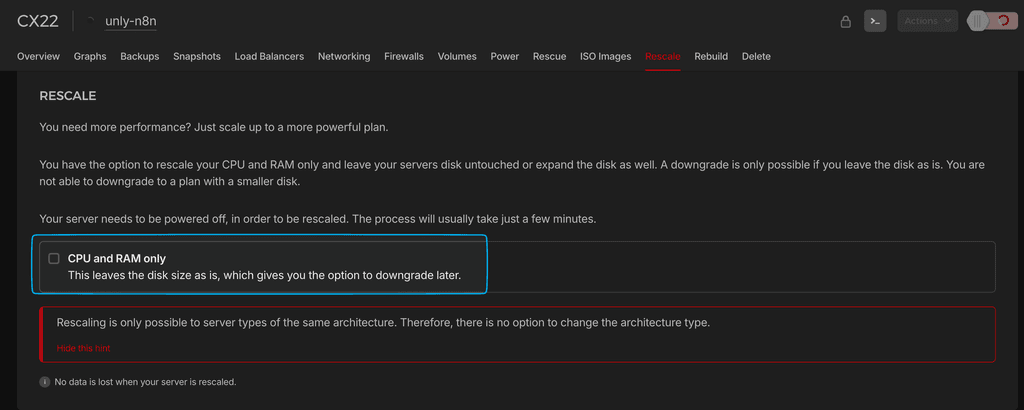

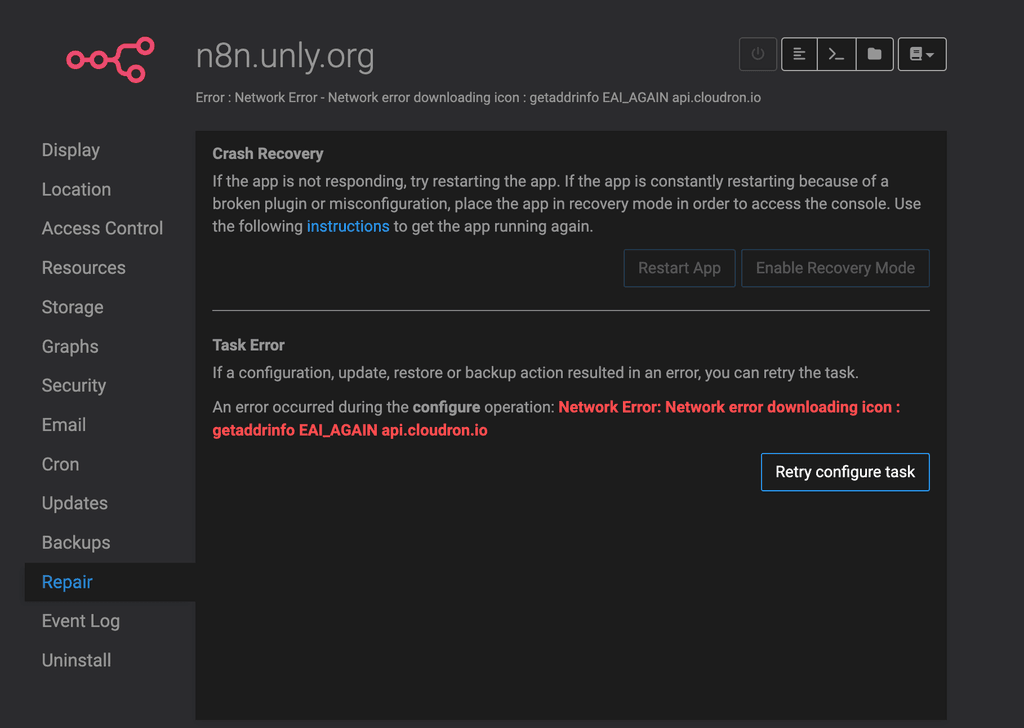

Despite the disk space issue being gone, n8n wouldn't restart because it was still in an "error state"...

So, I tried to click on "Retry configure task", but it failed with some dumb error about an icon not being fetchable...

https://gist.github.com/Vadorequest/a57aa42f42c0e241a3076c17ba295b24

-

I tried to ping cloudron but the server doesn't seem to be willing.

    root@unly-n8n:~# ping6 ipv6.api.cloudron.io
    ping6: ipv6.api.cloudron.io: Temporary failure in name resolution
    root@unly-n8n:~# ping ipv6.api.cloudron.io
    ping: ipv6.api.cloudron.io: Temporary failure in name resolution
    root@unly-n8n:~# ping api.cloudron.io
    ping: api.cloudron.io: Temporary failure in name resolution
    root@unly-n8n:~# ping google.com
    ping: google.com: Temporary failure in name resolution

I guess another restart might solve it...
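The pings above all fail at name resolution, before any packet is sent, so the resolver can be tested directly. This is a small sketch assuming `getent` is available (it ships with glibc); `check_dns` is a hypothetical helper name.

```shell
#!/bin/sh
# check_dns HOST...: report whether each name resolves through the
# system resolver (NSS), without sending any ICMP traffic.
# Hypothetical helper; assumes getent is installed.
check_dns() {
    for host in "$@"; do
        if getent hosts "$host" >/dev/null 2>&1; then
            echo "ok $host"
        else
            echo "FAIL $host"
        fi
    done
}
```

Calling `check_dns api.cloudron.io google.com` prints one `ok` or `FAIL` line per name, which makes it easy to re-run after each attempted fix.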

-

Restarting didn't solve it. I don't know where this issue came from, but I had to restart the DNS service.

    root@unly-n8n:~# ping google.com
    ping: google.com: Temporary failure in name resolution
    root@unly-n8n:~# cat /etc/resolv.conf
    # Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)
    # DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN
    # 127.0.0.53 is the systemd-resolved stub resolver.
    # run "systemd-resolve --status" to see details about the actual nameservers.
    root@unly-n8n:~# systemd-resolve --status
    systemd-resolve: command not found
    root@unly-n8n:~# sudo systemctl restart systemd-resolved.service
    root@unly-n8n:~# ping google.com
    PING google.com(fra24s06-in-x0e.1e100.net (2a00:1450:4001:829::200e)) 56 data bytes
    64 bytes from fra24s06-in-x0e.1e100.net (2a00:1450:4001:829::200e): icmp_seq=1 ttl=116 time=5.00 ms
    64 bytes from fra24s06-in-x0e.1e100.net (2a00:1450:4001:829::200e): icmp_seq=2 ttl=116 time=3.83 ms
    64 bytes from fra24s06-in-x0e.1e100.net (2a00:1450:4001:829::200e): icmp_seq=3 ttl=116 time=3.69 ms
    ^C
    --- google.com ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2004ms
    rtt min/avg/max/mdev = 3.694/4.174/4.997/0.584 ms
    root@unly-n8n:~# ping6 ipv6.api.cloudron.io
    PING ipv6.api.cloudron.io(prod.cloudron.io (2604:a880:800:10::b66:f001)) 56 data bytes
    64 bytes from prod.cloudron.io (2604:a880:800:10::b66:f001): icmp_seq=1 ttl=49 time=84.4 ms
    64 bytes from prod.cloudron.io (2604:a880:800:10::b66:f001): icmp_seq=2 ttl=49 time=82.8 ms

Running sudo systemctl restart systemd-resolved.service fixed this ping issue.

-

After the ping was fixed, the "Retry configure task" worked and the n8n instance was up again.

But it wouldn't show my n8n workflows.

Restarting the Cloudron app fixed it.

What a journey.

-

Journey not over, the issue with DNS seems to be still there.

I now get "Could not resolve hostname github.com: Temporary failure in name resolution", but the ping from the server itself seems to work fine, so it might be the Docker instance that is buggy... But I'm in completely unknown waters there.

    Jun 28 23:58:14 2024-06-28T21:58:14.702Z | error | 400 ssh: Could not resolve hostname github.com: Temporary failure in name resolution
    <30>1 2024-06-28T21:58:14Z unly-n8n 693caa42-bcf9-44c2-8f44-78f8676fe44c 1050 693caa42-bcf9-44c2-8f44-78f8676fe44c - fatal: Could not read from remote repository.

-

The internal DNS service "Unbound" was off, I turned it on again.

https://docs.cloudron.io/troubleshooting/#unbound

    root@unly-n8n:~#
    root@unly-n8n:~# systemctl status unbound
    × unbound.service - Unbound DNS Resolver
         Loaded: loaded (/etc/systemd/system/unbound.service; enabled; vendor preset: enabled)
         Active: failed (Result: exit-code) since Fri 2024-06-28 21:43:24 UTC; 26min ago
        Process: 1223 ExecStart=/usr/sbin/unbound -d (code=exited, status=1/FAILURE)
       Main PID: 1223 (code=exited, status=1/FAILURE)
            CPU: 17ms
    Jun 28 21:43:24 unly-n8n systemd[1]: unbound.service: Scheduled restart job, restart counter is at 5.
    Jun 28 21:43:24 unly-n8n systemd[1]: Stopped Unbound DNS Resolver.
    Jun 28 21:43:24 unly-n8n systemd[1]: unbound.service: Start request repeated too quickly.
    Jun 28 21:43:24 unly-n8n systemd[1]: unbound.service: Failed with result 'exit-code'.
    Jun 28 21:43:24 unly-n8n systemd[1]: Failed to start Unbound DNS Resolver.
    root@unly-n8n:~# unbound-anchor -a /var/lib/unbound/root.key
    root@unly-n8n:~# systemctl restart unbound
    root@unly-n8n:~# systemctl status unbound
    ● unbound.service - Unbound DNS Resolver
         Loaded: loaded (/etc/systemd/system/unbound.service; enabled; vendor preset: enabled)
         Active: active (running) since Fri 2024-06-28 22:10:03 UTC; 3s ago
       Main PID: 6380 (unbound)
          Tasks: 1 (limit: 9160)
         Memory: 8.4M
            CPU: 100ms
         CGroup: /system.slice/unbound.service
                 └─6380 /usr/sbin/unbound -d
    Jun 28 22:10:03 unly-n8n systemd[1]: Starting Unbound DNS Resolver...
    Jun 28 22:10:03 unly-n8n unbound[6380]: [6380:0] notice: init module 0: subnet
    Jun 28 22:10:03 unly-n8n unbound[6380]: [6380:0] notice: init module 1: validator
    Jun 28 22:10:03 unly-n8n unbound[6380]: [6380:0] notice: init module 2: iterator
    Jun 28 22:10:03 unly-n8n unbound[6380]: [6380:0] info: start of service (unbound 1.13.1).
    Jun 28 22:10:03 unly-n8n systemd[1]: Started Unbound DNS Resolver.

And now my workflows are working (almost) properly.
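The status check and restart in the session above can be sketched as a small helper. `ensure_active` and the `SYSTEMCTL` override are hypothetical names introduced here for illustration, and the sketch only covers the restart, not the `unbound-anchor -a /var/lib/unbound/root.key` step.

```shell
#!/bin/sh
# ensure_active UNIT: restart UNIT if systemd does not report it as active.
# SYSTEMCTL may be set to a stub for dry runs; it is a hypothetical knob
# of this sketch, not a systemd feature.
ensure_active() {
    unit=$1
    ctl=${SYSTEMCTL:-systemctl}
    if "$ctl" is-active --quiet "$unit"; then
        echo "$unit: active"
    else
        echo "$unit: not active, restarting"
        "$ctl" restart "$unit"
    fi
}
```

On this server it would be called as `ensure_active unbound` and `ensure_active systemd-resolved`, the two services that had to be restarted by hand.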

I'll have to restart n8n again after re-enabling the node modules (I had disabled them because they were blocking the n8n boot: the packages couldn't be installed because of the DNS issue).

-

Good to know! Thanks