Automatic updates fail until I retry update/configure task

-

For a few months, I've had an issue with all my apps failing to auto-update. I usually get a message like this:

    Docker Error: (HTTP code 404) no such container - No such image: cloudron/com.gitlab.cloudronapp:20220504-210413-802d48119

If I go to the repair tab, I can manually trigger each step of update and configure and it succeeds, but the auto-update seems unable to succeed on its own.

Any idea on why this is happening?

-

Spent some time debugging this. The issue is that DNS queries from unbound just fail randomly.

For example:

    root@my:~# host production.cloudflare.docker.com 127.0.0.1
    Using domain server:
    Name: 127.0.0.1
    Address: 127.0.0.1#53
    Aliases:

    production.cloudflare.docker.com has address 104.18.121.25
    production.cloudflare.docker.com has address 104.18.124.25
    production.cloudflare.docker.com has address 104.18.122.25
    production.cloudflare.docker.com has address 104.18.125.25
    production.cloudflare.docker.com has address 104.18.123.25
    Host production.cloudflare.docker.com not found: 3(NXDOMAIN)

The last NXDOMAIN causes a problem. Trying to trace unbound:

    May 26 17:55:54 unbound[687925]: [687925:0] info: 0RDd mod2 rep production.cloudflare.docker.com. MX IN
    May 26 17:55:54 unbound[687925]: [687925:0] debug: cache memory msg=154570 rrset=186541 infra=37659 val=88932 subnet=74504
    May 26 17:55:54 unbound[687925]: [687925:0] debug: answer cb
    May 26 17:55:54 unbound[687925]: [687925:0] debug: Incoming reply id = 173e
    May 26 17:55:54 unbound[687925]: [687925:0] debug: Incoming reply addr = ip4 192.54.112.30 port 53 (len 16)
    May 26 17:55:54 unbound[687925]: [687925:0] debug: lookup size is 1 entries
    May 26 17:55:54 unbound[687925]: [687925:0] debug: received udp reply.
    May 26 17:55:54 unbound[687925]: [687925:0] debug: udp message[128:0] 173E818300010000000100010A636C6F7564666C61726506646F636B657203636F6D0000010001C01700060001000003840042066E732D32303709617773646E732D3235C01E11617773646E732D686F73746D617374657206616D617A6F6EC01E0000000100001C200000038400127500000151800000290200000080000000
    May 26 17:55:54 unbound[687925]: [687925:0] debug: outnet handle udp reply
    May 26 17:55:54 unbound[687925]: [687925:0] debug: measured roundtrip at 18 msec
    May 26 17:55:54 unbound[687925]: [687925:0] debug: svcd callbacks start
    May 26 17:55:54 unbound[687925]: [687925:0] debug: worker svcd callback for qstate 0x55a4a9166c60
    May 26 17:55:54 unbound[687925]: [687925:0] debug: mesh_run: start
    May 26 17:55:54 unbound[687925]: [687925:0] debug: iterator[module 2] operate: extstate:module_wait_reply event:module_event_reply
    May 26 17:55:54 unbound[687925]: [687925:0] info: iterator operate: query production.cloudflare.docker.com. MX IN
    May 26 17:55:54 unbound[687925]: [687925:0] debug: process_response: new external response event
    May 26 17:55:54 unbound[687925]: [687925:0] info: scrub for com. NS IN
    May 26 17:55:54 unbound[687925]: [687925:0] info: response for production.cloudflare.docker.com. MX IN
    May 26 17:55:54 unbound[687925]: [687925:0] info: reply from <com.> 192.54.112.30#53
    May 26 17:55:54 unbound[687925]: [687925:0] info: incoming scrubbed packet:
    ;; ->>HEADER<<- opcode: QUERY, rcode: NXDOMAIN, id: 0
    ;; flags: qr rd ra ; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 0
    ;; QUESTION SECTION:
    cloudflare.docker.com. IN A
    ;; ANSWER SECTION:
    ;; AUTHORITY SECTION:
    docker.com. 30 IN SOA ns-207.awsdns-25.com. awsdns-hostmaster.amazon.com. 1 7200 900 1209600 86400
    ;; ADDITIONAL SECTION:
    ;; MSG SIZE rcvd: 117
    May 26 17:55:54 unbound[687925]: [687925:0] debug: iter_handle processing q with state QUERY RESPONSE STATE
    May 26 17:55:54 unbound[687925]: [687925:0] info: query response was NXDOMAIN ANSWER
    May 26 17:55:54 unbound[687925]: [687925:0] debug: iter_handle processing q with state FINISHED RESPONSE STATE
    May 26 17:55:54 unbound[687925]: [687925:0] info: finishing processing for production.cloudflare.docker.com. MX IN

That response from the com. TLD server (192.54.112.30 - h.gtld-servers.net) is NXDOMAIN. In fact, queries to the root/TLD servers randomly fail this way every now and then. It seems something in the network is hijacking DNS responses. Indeed, this server is in a private network, so maybe the ISP or a firewall in the middle is trying to prevent DNS DDoS or something similar and is intercepting the requests.
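To make the hex dump in the `udp message` log line easier to read, here is a minimal Python sketch that decodes the 12-byte DNS header and the question name from the first bytes of that packet. The function names `parse_dns_header` and `parse_qname` are made up for this illustration, and only the header and question are decoded (no name compression, no record parsing).

```python
import struct

# Header and question section copied from the "udp message" line in the log above.
RAW = bytes.fromhex(
    "173E81830001000000010001"                            # 12-byte DNS header
    "0A636C6F7564666C61726506646F636B657203636F6D00"      # QNAME
    "00010001"                                            # QTYPE=A, QCLASS=IN
)

RCODES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL", 3: "NXDOMAIN", 5: "REFUSED"}


def parse_dns_header(packet: bytes) -> dict:
    """Decode the fixed DNS header: id, flags, and the four section counts."""
    ident, flags, qd, an, ns, ar = struct.unpack("!6H", packet[:12])
    rcode = flags & 0x000F
    return {
        "id": ident,
        "qr": bool(flags & 0x8000),  # this is a response
        "aa": bool(flags & 0x0400),  # authoritative answer
        "rd": bool(flags & 0x0100),  # recursion desired
        "ra": bool(flags & 0x0080),  # recursion available
        "rcode": RCODES.get(rcode, str(rcode)),
        "counts": (qd, an, ns, ar),  # question, answer, authority, additional
    }


def parse_qname(packet: bytes, offset: int = 12) -> str:
    """Read an uncompressed, length-prefixed name starting at offset."""
    labels = []
    while packet[offset] != 0:
        length = packet[offset]
        labels.append(packet[offset + 1:offset + 1 + length].decode("ascii"))
        offset += 1 + length
    return ".".join(labels) + "."


header = parse_dns_header(RAW)
qname = parse_qname(RAW)
print(hex(header["id"]), header["rcode"], header["counts"], qname)
```

The decoded values agree with the scrubbed packet in the log: id 0x173e, rcode NXDOMAIN, and flags `qr rd ra` for a question about cloudflare.docker.com. As far as I know, an authoritative TLD server would not normally set the `ra` (recursion available) flag or answer with a docker.com SOA record itself, which would be consistent with something in between answering on its behalf, though this alone does not prove it.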

As a workaround, I configured unbound to forward all requests to Cloudflare, and that works now. I created /etc/unbound/unbound.conf.d/private-dns.conf with the contents below and then ran systemctl restart unbound:

    # forward all queries to cloudflare
    forward-zone:
        name: "."
        forward-addr: 1.1.1.1

-

@eyecreate I have seen that temporary error when Docker Hub goes down/is getting updated. I can't explain why you keep hitting this repeatedly though. Could it be that your auto update schedule somehow magically coincides with DockerHub's own update schedule (that would be something!).

Can you adjust your auto update time by 1 or 2 hours to check if that helps? This is under Settings -> Updates -> Change Schedule.

-

@girish I have changed the update schedule and will get back to you if nothing changes.

-

I just noticed I think I've had this issue with my n8n apps, but not for anything else as far as I can tell. Strange.

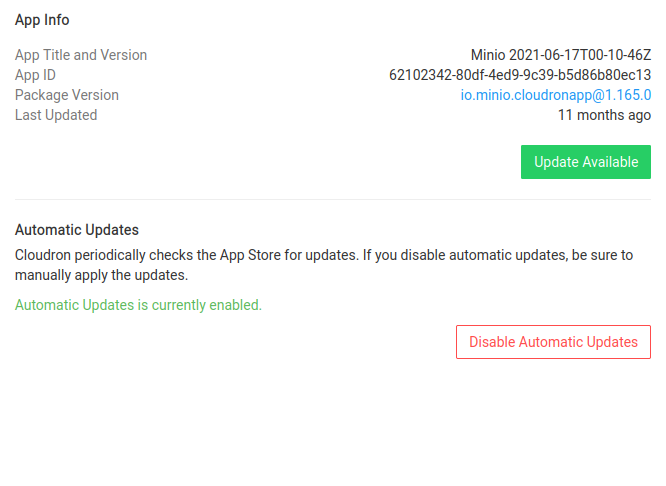

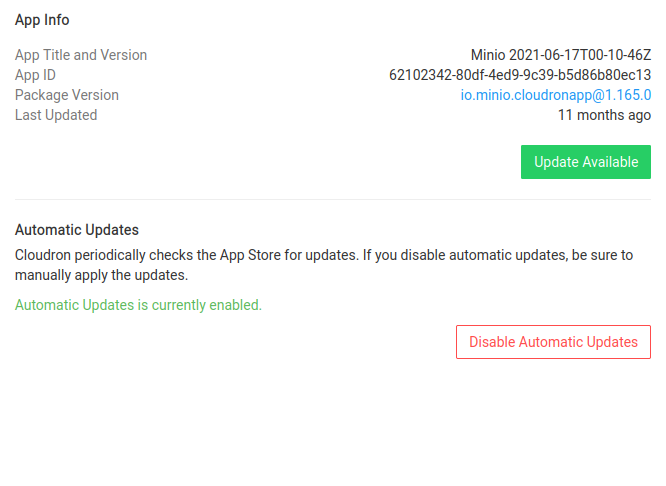

Actually, looks like maybe Minio has been having this issue too: I've got auto-updates available, but it says it hasn't been updated in 11 months!

(ah, but maybe that's because it's a major update so it wasn't auto-updating on purpose. Perhaps that explains n8n too?)

-

@jdaviescoates yes, minio is intentionally not auto-updating. This is because it needs a new API domain. n8n also saw a major update because it gained user management (proxyAuth got removed).

-

After changing the update schedule, updates are still failing without manual repair/retry.

@eyecreate can you contact us at support@cloudron.io? We have to investigate further on the server.

-

girish marked this topic as a question.

-

girish has marked this topic as solved.

-

A stranger on the internet faced the exact issue - https://www.reddit.com/r/pihole/comments/o0rp2k/unbound_suddenly_failed_to_resolve_domain/

-

@girish Glad you found a source and workaround. I wonder if the ISP or I have a custom DNS for the network causing that issue.
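One rough way to narrow down where the bad answers come from might be to repeat the same lookup many times against different DNS servers (for example the local unbound on 127.0.0.1, the router, and a public resolver) and count how often NXDOMAIN shows up. The sketch below is only an illustration: `tally`, `classify` and `run_host` are made-up names, it assumes the `host` utility is installed, and it simply looks for the string "NXDOMAIN" in the command output. Keep in mind that resolvers cache answers, so repeated lookups may not all reach the upstream servers.

```python
import subprocess
from collections import Counter


def classify(host_output: str) -> str:
    """Label one lookup by whether the `host` output mentions NXDOMAIN."""
    return "nxdomain" if "NXDOMAIN" in host_output else "other"


def run_host(name: str, server: str) -> str:
    """Run `host NAME SERVER` and return its combined output as text."""
    result = subprocess.run(
        ["host", name, server],
        capture_output=True,
        text=True,
        timeout=30,
    )
    return result.stdout + result.stderr


def tally(name: str, server: str = "127.0.0.1", attempts: int = 20,
          lookup=run_host) -> Counter:
    """Repeat the lookup and count how many attempts contained NXDOMAIN."""
    return Counter(classify(lookup(name, server)) for _ in range(attempts))
```

Calling `tally("production.cloudflare.docker.com")` and then `tally("production.cloudflare.docker.com", server="1.1.1.1")` would give two counters to compare. The `lookup` parameter exists only so the counting logic can be tried without touching the network.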

@eyecreate Is your custom DNS part of your router?
