Experience with Cloudron/Matomo updates and medium/larger sites?

-

Has anyone got experience with Matomo updates on medium/larger sites - e.g. >100,000 monthly visits over longer periods?

Matomo themselves recommend a carefully planned manual procedure:

https://matomo.org/faq/on-premise/how-to-upgrade-matomo-to-the-latest-release/

That of course works against one of Cloudron's biggest advantages, i.e. automatic updates.

Does anyone have any thoughts about handling larger installations in a more automated way?

Or has anyone tried leaving automatic updates enabled with larger sites and could tell us how it went?

-

@robw No. However, the article there assumes a multi-server install with constraints on certain resources. Cloudron is single-server only, unless you separate your resources manually, e.g. with two Cloudron servers: one for websites and one for Matomo.

100k visits is easily handled by a single instance, such as on Cloudron with sufficient resources. That also depends on how much you're doing in Matomo: basic tracking or lots of activity tracking.

Since updates on Cloudron happen the same way regardless of other factors, there is no difference.

You may simply wish to tune the update timing, or do them manually until you feel comfortable with the automation.

-

@robi said in Experience with Cloudron/Matomo updates and medium/larger sites?:

100k visits is easily handled by a single instance, such as on Cloudron with sufficient resources

I too think 100k/month is not that much. That's around 3k a day or 1 a second.

-

@girish said in Experience with Cloudron/Matomo updates and medium/larger sites?:

I too think 100k/month is not that much. That's around 3k a day or 1 a second.

Not sure about your maths there.

100k / 30 days = 3,333 / 24 hours / 60 minutes = ~2.3 per minute. (Did I get that right?)

100k visits/month is not that much, no... I think that's a small to medium sized site.

So collecting the data is generally only a small overhead on the server. Although to be accurate, concurrent requests can still become an issue even with one small site at that level, e.g. extrapolating from data above:

- Most local business sites process traffic around business hours, so perhaps multiply 2.3 per minute by 3 for an indicative standard minute during the work day.

- Advertising, email, or search peaks often cause sudden spikes, so (very roughly) multiply that by 20 to handle peak moments (though that number could be a lot higher), so we've got ~140 per minute.

- This gets us to around 2.5 visits per second for the peak...

- Visits are only part of the log data (page views, events, etc.)... So that could be 10-12 tracking API actions per second just for one small site.

Add a few sites and I think you might need to think carefully about those peaks, and that's just for data collection.
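As a rough sketch of the arithmetic above (not a capacity model), the multipliers can be written out in Python. The business-hours factor of 3 and the spike factor of 20 are the ballpark guesses from the list; the 4.5 actions per visit is an assumption derived from the "450k logs per 100k visits" estimate further down in this post.

```python
# Back-of-the-envelope peak estimate for one site.
# All factors are rough guesses from the post, not measured values.
DAYS_PER_MONTH = 30
BUSINESS_HOURS_FACTOR = 3   # traffic concentrated in working hours
SPIKE_FACTOR = 20           # sudden campaign/email/search peaks
ACTIONS_PER_VISIT = 4.5     # assumption: ~450k log rows per 100k visits


def peak_rates(visits_per_month: int) -> dict:
    """Return average and peak request rates for a monthly visit count."""
    average_per_minute = visits_per_month / DAYS_PER_MONTH / 24 / 60
    busy_per_minute = average_per_minute * BUSINESS_HOURS_FACTOR
    peak_per_minute = busy_per_minute * SPIKE_FACTOR
    peak_per_second = peak_per_minute / 60
    return {
        "average_per_minute": average_per_minute,
        "busy_per_minute": busy_per_minute,
        "peak_per_minute": peak_per_minute,
        "peak_visits_per_second": peak_per_second,
        "peak_actions_per_second": peak_per_second * ACTIONS_PER_VISIT,
    }


if __name__ == "__main__":
    for name, value in peak_rates(100_000).items():
        print(f"{name}: {value:.1f}")
```

With these inputs the peak lands a little over 2 visits per second and roughly 10 tracking actions per second, which is in line with the rounded figures in the list.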

I was thinking more about Matomo's data processing than the data collection (e.g. it generally processes data every hour or so to keep the reporting UI fast, which takes up to a few minutes each time), and particularly about software updates. I think Matomo has to re-index all data after some if not many of its updates, or at least re-process its data when it adds new features, etc.

Assuming a small number of fairly standard, active small sites:

100k visits / month

= up to 300k page views

... plus other actions (forms, events, other)

= up to 450k logs

... times only a small number of small sites (e.g. 5)

= ~2.2m logs / month

The data in the tracking tables of Matomo's database seems to be about 30% of the total data it stores; most of the rest is report data and what it calls "metrics".

So that can quickly turn into gigabytes of data over only a few months.
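The estimate above can be sketched as a small calculation. The ratios (3 page views per visit, other actions adding half as much again, 5 sites, tracking tables at about 30% of total storage) come from the figures in this post. The bytes-per-row value is purely a hypothetical placeholder and should be replaced with a number measured on a real database.

```python
def monthly_log_rows(visits: int,
                     page_views_per_visit: float = 3.0,
                     other_action_ratio: float = 0.5,
                     sites: int = 5) -> float:
    """Estimate tracking log rows per month across several similar sites."""
    page_views = visits * page_views_per_visit
    rows_per_site = page_views * (1 + other_action_ratio)
    return rows_per_site * sites


def estimated_total_bytes(rows: float,
                          bytes_per_row: float,
                          tracking_share: float = 0.30) -> float:
    """Scale tracking-table size up to total size (tracking ~30% of total)."""
    return rows * bytes_per_row / tracking_share


if __name__ == "__main__":
    rows = monthly_log_rows(100_000)
    print(f"log rows per month: {rows:,.0f}")  # 2,250,000
    # HYPOTHETICAL row size, for illustration only; measure your own.
    total = estimated_total_bytes(rows, bytes_per_row=500)
    print(f"estimated growth: {total / 1e9:.2f} GB per month")
```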

On a non-Cloudron Matomo instance that we host with fairly low traffic profiles like the ones outlined above, Matomo's default browser based data processing during a software update is not an option for us. We need to run updates manually from the CLI for any chance of success. And a failed update (which can happen even with the manual process) can grind the whole thing to a halt, potentially requiring lots of manual work and hours of re-indexing to clear out the report data and start over.
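For reference, a minimal sketch of how a scripted CLI update might be wrapped, assuming Matomo's `console core:update` command with its `--yes` option and a `php` binary on the PATH. The install path is a hypothetical example, and the wrapper defaults to a dry run so nothing is executed by accident.

```python
import subprocess
from pathlib import Path


def build_update_command(matomo_dir: str, php: str = "php") -> list:
    """Build the argv for Matomo's CLI updater (non-interactive)."""
    console = Path(matomo_dir) / "console"
    return [php, str(console), "core:update", "--yes"]


def run_update(matomo_dir: str, dry_run: bool = True) -> list:
    """Run the update, or only return the command when dry_run is True."""
    cmd = build_update_command(matomo_dir)
    if not dry_run:
        # check=True raises CalledProcessError on a non-zero exit code,
        # so a failed update is not silently ignored.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # "/var/www/matomo" is a hypothetical path, not taken from this thread.
    print(" ".join(run_update("/var/www/matomo", dry_run=True)))
```

Taking a database backup before calling it with `dry_run=False` would be a sensible precaution, given how costly a failed update can be.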

Hence my questions... I was wondering if anyone had experience over time and hence tips about using a Cloudron-hosted Matomo at those levels, especially with automatic updates turned on (which we don't have). Perhaps it's more efficient than our current system.

By the way, I think Matomo's "multi server" suggestions are useful even for single server environments. We definitely saw a huge difference in data processing bottlenecks once we separated the tracking UI onto a different website instance from the admin UI, even on the same server. I'm not exactly sure why. Perhaps it has something to do with the read/write configuration of our MySQL instance (e.g. reads not waiting for writes to finish when running on a different site), or the web server's ability to handle concurrent requests, or CPU threading or RAM usage in the PHP worker processes, or a combination of all of these...

I think it could be very beneficial to run two Matomo instances on one Cloudron server, or even one instance per site for the tracking API and one for the admin UI. Is it easy for both instances to speak to one database (I haven't checked what's required to access a database in a different container)?

-

@robw said in Experience with Cloudron/Matomo updates and medium/larger sites?:

Not sure about your maths there. 100k / 30 days = 3,333 / 24 hours / 60 minutes = ~2.3 per minute. (Did I get that right?)

you are right! math fail...

-

@robw said in Experience with Cloudron/Matomo updates and medium/larger sites?:

We need to run updates manually from the CLI for any chance of success

On Cloudron, we don't use the browser-based data processing (because it doesn't work for low-traffic websites anyway). We have a cron task which does this automatically at regular intervals. The relevant bits of code are https://git.cloudron.io/cloudron/matomo-app/-/blob/master/CloudronManifest.json#L17 and https://git.cloudron.io/cloudron/matomo-app/-/blob/master/cron.sh.

FWIW, we use matomo on cloudron.io and on this forum and haven't had any issues. This forum is extremely busy and has almost 3M page hits every month.

-

@girish said in Experience with Cloudron/Matomo updates and medium/larger sites?:

FWIW, we use matomo on cloudron.io and on this forum and haven't had any issues. This forum is extremely busy and has almost 3M page hits every month.

Can you say a bit more on how you use it on the forum?

-

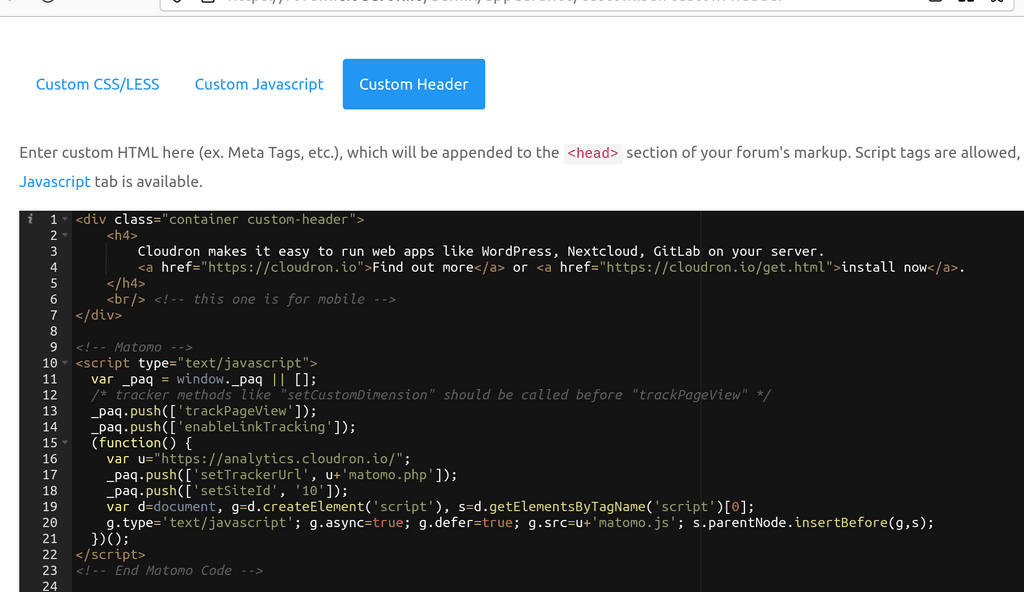

We add it in ACP -> Appearance -> Custom Header.
