AI on Cloudron

-

Non-free: Google Gemini 1.5 Pro is now available

https://threadreaderapp.com/thread/1778026405793828986.html

-

-

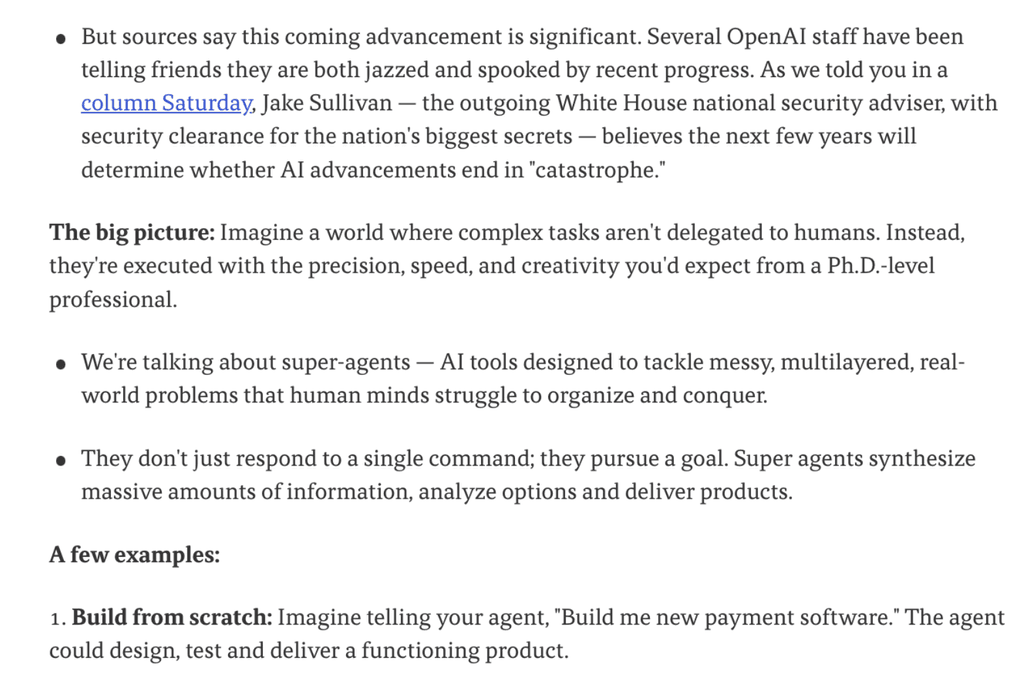

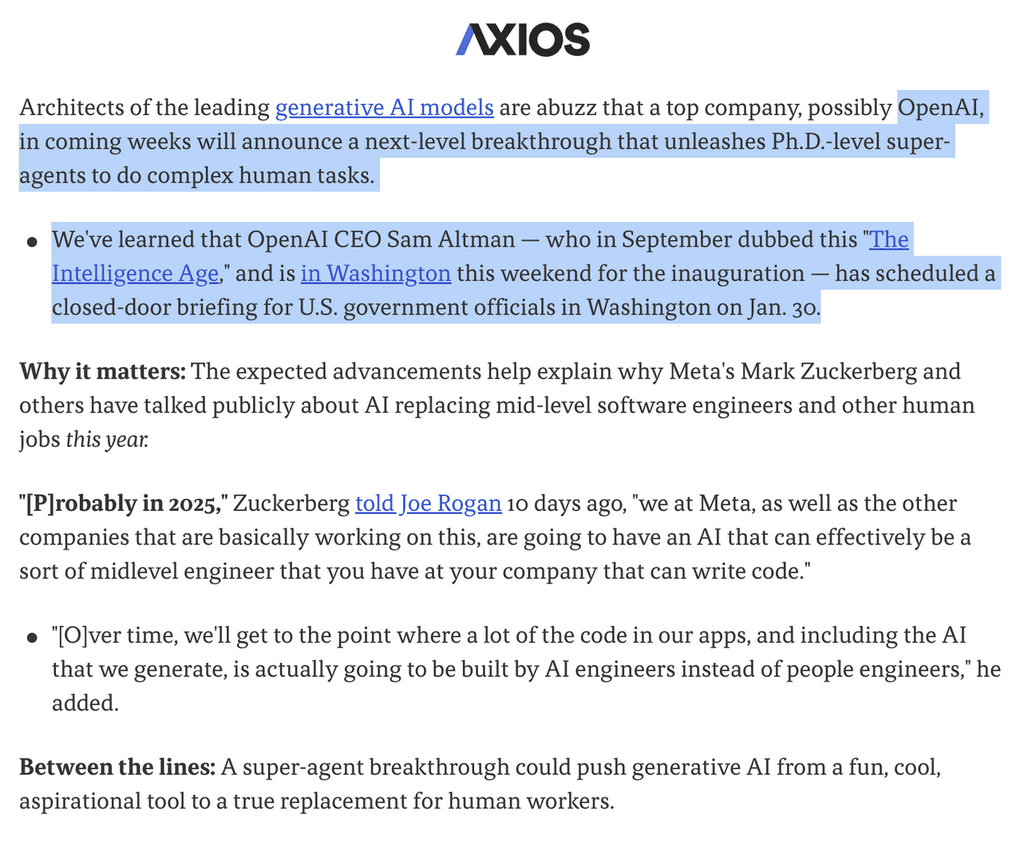

Anthropic's CEO has said that AI might be able to survive in the wild by next year. It was speculated elsewhere that it could fund itself through OnlyFans.

https://futurism.com/the-byte/anthropic-ceo-ai-replicate-survive

-

M$FT VASA-1 - Talking Heads

-

Cool interview on progress towards autonomous AI:

https://inv.tux.pizza/watch?v=6RUR6an5hOY&quality=dash

-

Via Arya AI:

Enclosure in the context of technology, particularly open-source, refers to the phenomenon where a technology that was initially open and accessible becomes controlled or monopolized by a specific entity or group. This can happen through various means, such as strategic acquisitions, licensing restrictions, or the development of proprietary features that are not available in the open-source version. Some examples of enclosure include:

Oracle's acquisition of Sun Microsystems, which led to the discontinuation of the open-source OpenSolaris operating system.

Google's acquisition of Android and the development of proprietary features and services (like Google Play Services) that are not available in the open-source version (AOSP).

Microsoft's acquisition of GitHub, which raised concerns about the future of open-source projects hosted on the platform.

These examples illustrate how enclosure can happen through strategic acquisitions and the development of proprietary features, leading to the control or monopolization of open-source technology.

-

This tool is good, and there is already an app request for it on the forum.

https://anythingllm.com

https://forum.cloudron.io/topic/11722/anythingllm-ai-business-intelligence-tool/5

-

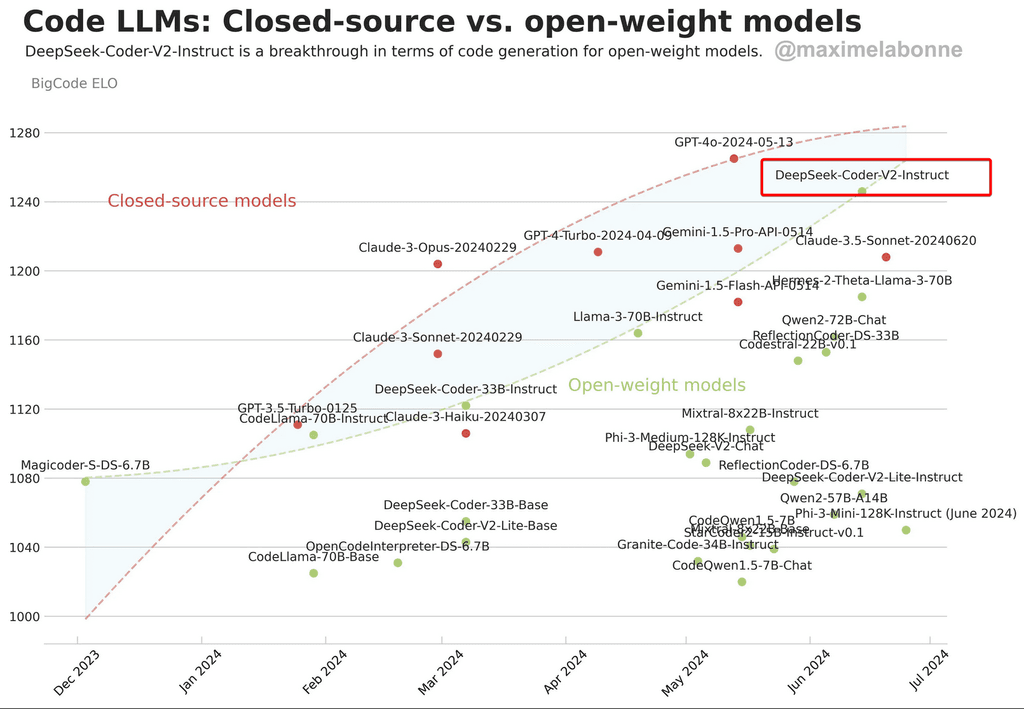

Model strong at coding/bug-fixing:

https://huggingface.co/deepseek-ai/DeepSeek-Coder-V2-Instruct

-

Open-Sora (not from OpenAI), the free-software AI video generator, is now available for self-hosting.

https://github.com/hpcaitech/Open-Sora

From Reddit:

"It can generate up to 16 seconds at 1280x720 resolution, but it requires 67 GB of VRAM and takes 10 minutes to generate on an 80 GB H100 graphics card, which costs $30k. However, there are hourly services, and I see one that is $3 per hour, which works out to about 50 cents per video at the highest resolution. So you could technically output a feature-length movie (60 minutes) for $100.

*Disclaimer: it says the minimum requirement is 24 GB of VRAM, so it's not going to be easy to run this to its full potential yet.

They also have a Gradio demo."
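The cost estimate in that quote is simple arithmetic, so it can be checked in a few lines of shell. This sketch uses only the figures from the quote (16-second clips, 10 minutes per clip on one H100, a $3/hour rental rate); it ignores failed runs, queueing, setup time, and editing the clips together.

```shell
#!/bin/sh
# Back-of-the-envelope cost of a 60-minute film with Open-Sora, using only the
# figures from the Reddit quote (the $3/hour rental rate is the poster's example).
CLIP_SECONDS=16          # longest clip per generation
MINUTES_PER_CLIP=10      # generation time per clip on one 80 GB H100
RATE_CENTS_PER_HOUR=300  # $3 per GPU-hour, kept in cents to stay in integer math
MOVIE_MINUTES=60         # target runtime

# One clip costs the hourly rate times the fraction of an hour it takes.
CENTS_PER_CLIP=$(( RATE_CENTS_PER_HOUR * MINUTES_PER_CLIP / 60 ))

# Number of clips needed to fill the runtime, rounded up.
MOVIE_SECONDS=$(( MOVIE_MINUTES * 60 ))
CLIPS=$(( (MOVIE_SECONDS + CLIP_SECONDS - 1) / CLIP_SECONDS ))

TOTAL_CENTS=$(( CLIPS * CENTS_PER_CLIP ))
GPU_TENTHS_OF_HOUR=$(( CLIPS * MINUTES_PER_CLIP / 6 ))   # (minutes / 60) * 10

printf 'Cost per clip: $%d.%02d\n' $(( CENTS_PER_CLIP / 100 )) $(( CENTS_PER_CLIP % 100 ))
printf 'Clips needed:  %d\n' "$CLIPS"
printf 'GPU time:      %d.%d hours\n' $(( GPU_TENTHS_OF_HOUR / 10 )) $(( GPU_TENTHS_OF_HOUR % 10 ))
printf 'Total cost:    $%d.%02d\n' $(( TOTAL_CENTS / 100 )) $(( TOTAL_CENTS % 100 ))
```

With these inputs it reports $0.50 per clip and 225 clips, which comes to 37.5 GPU-hours and about $112.50 for a 60-minute film, so the $100 figure is in the right ballpark.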

-

From Reddit:

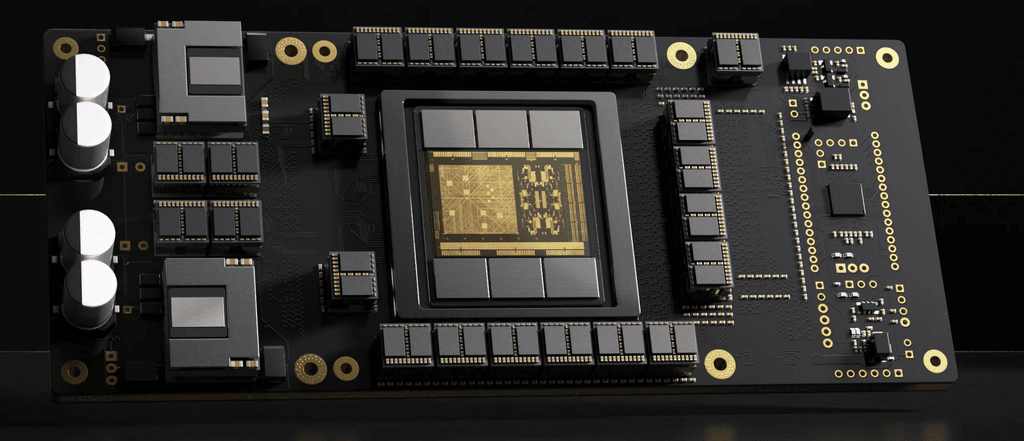

Etched is working on a specialized AI chip called Sohu. Unlike general-purpose GPUs, Sohu is designed to run only transformer models, the architecture behind LLMs like ChatGPT. The company claims Sohu offers dramatically better performance than traditional GPUs while using less energy. This approach could improve AI infrastructure as the industry grapples with increasing power consumption and costs.

Key details:

• Etched raised $120 million in Series A funding to work on Sohu.

• Sohu will be manufactured using TSMC's 4nm process.

• The chip can deliver 500,000 tokens per second for Llama 70B.

• One Sohu server allegedly replaces 160 H100 GPUs.

• Etched claims Sohu will be 10x faster and cheaper than Nvidia's next-gen Blackwell GPUs.

Source: https://www.etched.com/announcing-etched

-

-

Groq is the AI infrastructure company that delivers fast AI inference.

The LPU Inference Engine by Groq is a hardware and software platform that delivers exceptional compute speed, quality, and energy efficiency. Groq, headquartered in Silicon Valley, provides cloud and on-prem solutions at scale for AI applications. The LPU and related systems are designed, fabricated, and assembled in North America.

Since I started using this with Llama 3 70B, I no longer need GPT-3.5. GPT-4 is too expensive, IMHO.

-

@Kubernetes Thanks. How do you actually sign up for Groq? Their Stytch servers don't seem to be working, and they seem to require a GitHub account for registration.

-

@LoudLemur I did sign up with my GitHub account...

-

Llama 3.1 405B released. Try it here: https://www.meta.ai

-

-

-

Step Game, a prisoner's-dilemma-style game for large language models. The emergent text section is quite interesting:

https://github.com/lechmazur/step_game

-

A Fast And Easy Way To Run DeepSeek SECURELY And Locally On YOUR Computer.

The amazing open-source developer Surya Dantuluri (@sdan) has got DeepSeek R1 (distilled onto Qwen) running entirely in a web browser! It runs locally and does not “call home” (as if that was possible).

This IS NOT the full large version of DeepSeek but it does allow you to test it out.

To run the DeepSeek R1-web browser project from GitHub (https://github.com/sdan/r1-web) on your computer or phone, follow these detailed steps. This guide assumes no prior technical knowledge and will walk you through the process step by step.

Understanding the Project

The r1-web project is a web application that runs machine learning models entirely on the client side, using modern browser technologies like WebGPU. Running this project involves setting up a local development environment, installing the necessary software, and serving the application locally.

Note from @robi: judging by the regular install steps below, this looks like it would work well in our LAMP app.

Prerequisites

Before you begin, ensure you have the following:

• A Computer: A Windows, macOS, or Linux system.

• Internet Connection: To download necessary software and project files.

• Basic Computer Skills: Ability to install software and navigate your operating system.

Step 1: Install Node.js

Node.js is a JavaScript runtime that allows you to run JavaScript code outside of a web browser.

Download Node.js:

• Visit the official Node.js website: https://nodejs.org/

• Click on the “LTS” (Long Term Support) version suitable for your operating system (Windows, macOS, or Linux).

Install Node.js:

• Open the downloaded installer file.

• Follow the on-screen instructions to complete the installation.

• During installation, ensure the option to install npm (Node Package Manager) is selected.

Step 2: Verify Installation

After installation, confirm that Node.js and npm are installed correctly.

Open Command Prompt or Terminal:

• On Windows: Press the Windows key, type cmd, and press Enter.

• On macOS/Linux: Open the Terminal application.

Check Node.js Version:

• Type node -v and press Enter.

• You should see a version number (e.g., v18.18.0 or higher).

Check npm Version:

• Type npm -v and press Enter.

• A version number should be displayed, indicating npm is installed.
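The checks in Step 2 can also be scripted. A minimal sketch for macOS/Linux (or a POSIX shell such as Git Bash or WSL on Windows); the minimum major version of 18 is an assumption taken from the example version above, not a documented requirement of r1-web, so check the repository's README.

```shell
#!/bin/sh
# Check that node and npm are on PATH and that Node.js meets a minimum major version.
# Assumption: 18 comes from the guide's example (v18.18.0), not from r1-web itself.
MIN_NODE_MAJOR=18

# Print the major version from a string such as "v18.18.0" -> "18".
node_major() {
  echo "$1" | sed -e 's/^v//' -e 's/\..*$//'
}

# Succeed (exit status 0) if the version string meets MIN_NODE_MAJOR.
node_version_ok() {
  major=$(node_major "$1")
  case "$major" in
    ''|*[!0-9]*) return 1 ;;   # not a number: treat as unsupported
  esac
  [ "$major" -ge "$MIN_NODE_MAJOR" ]
}

if command -v node >/dev/null 2>&1 && command -v npm >/dev/null 2>&1; then
  NODE_VERSION=$(node -v)
  echo "node $NODE_VERSION, npm $(npm -v)"
  if node_version_ok "$NODE_VERSION"; then
    echo "Node.js looks recent enough."
  else
    echo "Node.js is older than v$MIN_NODE_MAJOR; install the current LTS release." >&2
  fi
else
  echo "node and/or npm not found on PATH; re-run the installer from Step 1." >&2
fi
```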

Step 3: Download the r1-web Project

Next, you’ll download the project files from GitHub.

Visit the GitHub Repository:

• Go to https://github.com/sdan/r1-web

Download the Project:

• Click on the green “Code” button.

• Select “Download ZIP” from the dropdown menu.

• Save the ZIP file to a convenient location on your computer.

Extract the ZIP File:

• Navigate to the downloaded ZIP file.

• Right-click and select “Extract All” (Windows) or double-click to extract (macOS).

• This will create a folder named r1-web-master or similar.
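If git is installed, cloning is an alternative to downloading the ZIP, and later updates become a `git pull`. A sketch, assuming git is available (it is not one of the prerequisites listed above); the clone lands in a folder named r1-web rather than r1-web-master, so adjust the `cd` in Step 4. The script name fetch-r1-web.sh is hypothetical.

```shell
#!/bin/sh
# Alternative to "Download ZIP": clone the repository with git (assumes git is installed).
R1_WEB_REPO="https://github.com/sdan/r1-web.git"

# Clone into the given folder (default: ./r1-web), refusing to touch an existing one.
fetch_r1_web() {
  dest="${1:-r1-web}"
  if [ -e "$dest" ]; then
    echo "$dest already exists; remove it or pick another folder." >&2
    return 1
  fi
  git clone "$R1_WEB_REPO" "$dest"
}

# Only clone when a destination is given, e.g.: sh fetch-r1-web.sh ~/Desktop/r1-web
if [ "$#" -gt 0 ]; then
  fetch_r1_web "$1"
fi
```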

Step 4: Install Project Dependencies

Now, you’ll install the packages required to run the project.

Open Command Prompt or Terminal:

• Navigate to the extracted project folder.

• For example, if the folder is on your Desktop:

• Type cd Desktop/r1-web-master and press Enter.

Install Dependencies:

• Type npm install and press Enter.

• This command downloads and installs all necessary packages.

• Wait for the process to complete; it may take a few minutes.

Step 5: Run the Application

With everything set up, you can now run the application.

Start the Development Server:

• In the same Command Prompt or Terminal window, type npm run dev and press Enter.

• The application will compile and start a local development server.

Access the Application:

• Open your web browser (e.g., Chrome, Firefox).

• Navigate to http://localhost:3000 (or to whatever address the development server prints in the terminal, if it differs).

• You should see the r1-web application running.
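Steps 4 and 5 boil down to three commands: `cd`, `npm install`, and `npm run dev`. A minimal sketch for macOS/Linux that runs them in order and stops early if the folder does not look like a Node.js project. The default path mirrors the Desktop example above and is only an assumption, and the script name run-r1-web.sh is hypothetical.

```shell
#!/bin/sh
# Steps 4 and 5 in one go: install dependencies, then start the dev server.
# The default folder mirrors the Desktop example above (an assumption); pass the real path.
run_r1_web() {
  project_dir="${1:-$HOME/Desktop/r1-web-master}"
  # npm reads the dependency list and scripts from package.json; stop if it is missing.
  if [ ! -f "$project_dir/package.json" ]; then
    echo "No package.json in $project_dir; is this the extracted r1-web folder?" >&2
    return 1
  fi
  cd "$project_dir" || return 1
  npm install || return 1   # Step 4: download dependencies into node_modules/
  npm run dev               # Step 5: start the development server (Ctrl+C stops it)
}

# Only run when a folder is given, e.g.: sh run-r1-web.sh ~/Desktop/r1-web-master
if [ "$#" -gt 0 ]; then
  run_r1_web "$1"
fi
```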

Running on a Mobile Device

Running the r1-web project directly on a mobile device is more complex and typically not recommended for beginners. However, you can access the application from your mobile device by ensuring both your computer and mobile device are connected to the same Wi-Fi network.

Find Your Computer’s IP Address:

• On Windows:

• Open Command Prompt and type ipconfig.

• Look for the “IPv4 Address” under your active network connection.

• On macOS:

• Open Terminal and type ifconfig | grep inet.

• Find the IP address associated with your active network.

Access from Mobile Device:

• On your mobile device’s browser, enter http://<your-computer-ip>:3000.

• Replace <your-computer-ip> with the IP address you found earlier.

• For example, http://192.168.1.5:3000.

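• You should see the application load on your mobile device. Note that browsers expose WebGPU only in secure contexts (HTTPS or localhost), so over plain http:// to a LAN address the page may load but the model may not run.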
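The IP lookup and URL above can be combined into one small script for macOS or Linux. A sketch under these assumptions: port 3000 as in the guide, `en0` as the Wi-Fi interface on macOS (common but not guaranteed), and `hostname -I` (GNU hostname, found on most Linux distributions) on Linux.

```shell
#!/bin/sh
# Print the URL a phone on the same Wi-Fi network would open.
# Assumptions: port 3000 as in the guide; en0 is the macOS Wi-Fi interface;
# "hostname -I" is the GNU hostname option that lists the machine's addresses.
PORT="${PORT:-3000}"

# Build the URL from an IP address and a port.
lan_url() {
  echo "http://$1:$2"
}

# Try the macOS lookup first, then Linux; print nothing if neither works.
detect_lan_ip() {
  ip=$(ipconfig getifaddr en0 2>/dev/null)
  if [ -z "$ip" ]; then
    ip=$(hostname -I 2>/dev/null | awk '{print $1}')
  fi
  echo "$ip"
}

LAN_IP=$(detect_lan_ip)
if [ -n "$LAN_IP" ]; then
  echo "Open this on your phone: $(lan_url "$LAN_IP" "$PORT")"
else
  echo "Could not detect the LAN IP; use ipconfig (Windows) or ifconfig (macOS) as described above." >&2
fi
```

If the phone cannot connect at all, the dev server may be listening only on localhost; many development servers need an explicit option to accept connections from other devices, so check the project's dev-server settings.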

-

Definitely more useful to run on a private VPS or on a Cloudron instance than locally, presumably behind Basic Auth or Cloudron’s login add-on.
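For the LAMP app route, Basic Auth can be done with an Apache `.htaccess` file. A minimal sketch, assuming r1-web has been built into static files served from the LAMP app's web root (not verified for this project), that Apache honors `.htaccess` overrides there, and that `/app/data/public` and `/app/data/.htpasswd` are the web root and password-file paths (hypothetical here; check the app's documentation). The function name and the user `alice` are made up for illustration.

```shell
#!/bin/sh
# Protect a static site with HTTP Basic Auth via an Apache .htaccess file.
# Assumes Apache serves the folder and allows overrides (AllowOverride AuthConfig or All).
# Overwrites any existing .htaccess in that folder.
write_basic_auth_htaccess() {
  web_root="$1"        # folder containing the built site, e.g. /app/data/public (hypothetical)
  htpasswd_file="$2"   # password file, ideally outside the web root
  cat > "$web_root/.htaccess" <<EOF
AuthType Basic
AuthName "r1-web"
AuthUserFile $htpasswd_file
Require valid-user
EOF
}

# Example, run by hand (htpasswd ships with Apache's utilities and prompts for a password):
#   htpasswd -c /app/data/.htpasswd alice
#   write_basic_auth_htaccess /app/data/public /app/data/.htpasswd
```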