App runs out of memory

-

With the default settings, Linkding ran out of memory, even though I didn't do anything special. I have now manually raised its memory limit to 512 MB.

-

Yesterday I had a strange situation with RAM. I got a notification that my Mastodon app (2 GB limit) ran out of memory, so I raised the limit to 4 GB. When I looked at the graph, usage never went above the 1 GB line. I wondered whether one of the reports might be wrong. How can it run out of memory when, according to the graphs, it never used more than 50%?

-

@RazielKanos said in App runs out of memory:

Yesterday I had a strange situation with RAM. I got a notification that my Mastodon app (2 GB limit) ran out of memory, so I raised the limit to 4 GB. When I looked at the graph, usage never went above the 1 GB line. I wondered whether one of the reports might be wrong. How can it run out of memory when, according to the graphs, it never used more than 50%?

I think the problem is that the graphs aren't very accurate. They don't poll constantly (that would be too resource-intensive), and I guess they can't poll at all while the app is down because it ran out of memory.
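To illustrate how a graph can stay well under the limit while the app still gets killed, here is a minimal Python sketch with made-up numbers. The per-second trace, the 5-second burst, the 2048 MB limit and the 20-second sampling interval are all assumptions for illustration: a collector that only reads memory periodically can step right over a short spike.

```python
def sampled_peak(trace_mb, interval_s):
    """Highest value a collector would see if it read memory once every interval_s seconds."""
    return max(trace_mb[::interval_s])


# Hypothetical per-second memory usage (MB) of one app over two minutes:
# a steady 900 MB with a 5-second burst to 2100 MB starting at t = 43 s.
trace = [900] * 120
for t in range(43, 48):
    trace[t] = 2100

LIMIT_MB = 2048  # hypothetical 2 GB app memory limit

print("true peak:", max(trace))
print("peak seen by a 20 s collector:", sampled_peak(trace, 20))
print("limit exceeded:", max(trace) > LIMIT_MB)
```

With these numbers the collector samples at 0, 20, 40, 60, 80 and 100 seconds, so it reports 900 MB even though the real peak of 2100 MB crossed the limit.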

-

Actually, the stats are collected every 20 seconds. The real issue is that when the system is low on RAM, the Linux OOM killer picks a process to kill, seemingly at random.

"The primary goal is to kill the least number of processes that minimizes the damage done and at the same time maximizing the amount of memory freed."

There is more information here: https://neo4j.com/developer/kb/linux-out-of-memory-killer/. I think communicating this to end users is really hard. One should also keep in mind that each VPS provider ships its own kernel with its own configuration.
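The choice only looks random: the kernel computes a "badness" score for every process, exposes it as `/proc/<pid>/oom_score`, and kills the highest-scoring one (restricted to the affected memory cgroup when a cgroup limit is hit). A minimal Python sketch, assuming a Linux host with `/proc` mounted, that lists the current top candidates; the function name `oom_candidates` is hypothetical:

```python
from pathlib import Path


def oom_candidates(proc_root="/proc", top=5):
    """Return (oom_score, pid, name) tuples for the processes with the highest OOM badness score."""
    root = Path(proc_root)
    if not root.is_dir():
        return []  # not a Linux host, or /proc is not mounted
    rows = []
    for entry in root.iterdir():
        if not entry.name.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            score = int((entry / "oom_score").read_text().strip())
            name = (entry / "comm").read_text().strip()
        except (OSError, ValueError):
            continue  # the process exited meanwhile or its files are not readable
        rows.append((score, int(entry.name), name))
    rows.sort(reverse=True)  # highest score first: the OOM killer's preferred victim
    return rows[:top]


if __name__ == "__main__":
    for score, pid, name in oom_candidates():
        print(f"{score:5d} {pid:8d} {name}")
```

The ranking is a snapshot; scores change as processes grow and shrink, which is part of why the outcome feels unpredictable.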

-

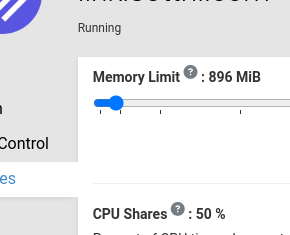

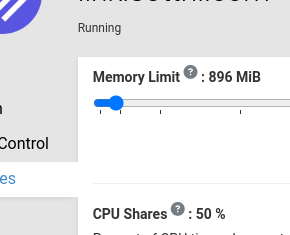

@Kubernetes said in App runs out of memory:

However, I have now manually raised its memory limit to 512 MB.

I have also raised the app's default memory limit to 512 MB for the next package release.

-

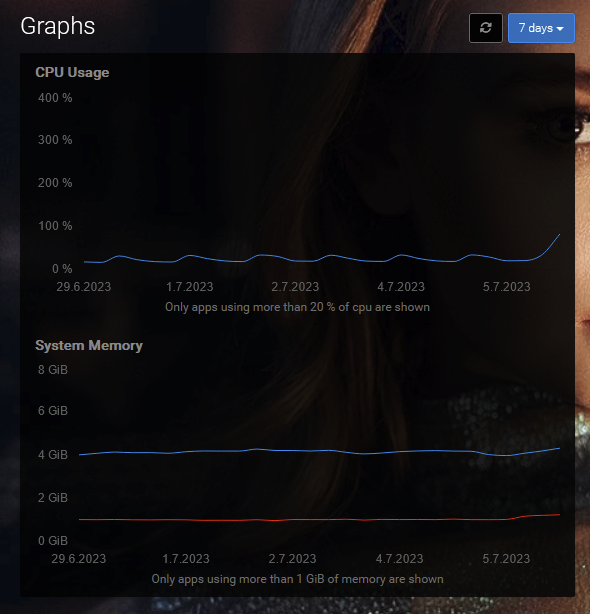

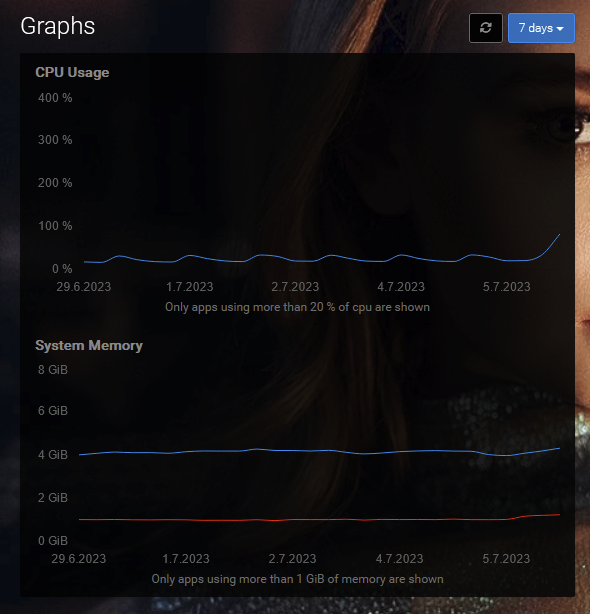

Dunno, I thought I'd just mention it in case something odd is going on. As you can see in the graph for the last 7 days, the whole system is far from its maximum of 8 GB.
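Host-wide usage is not what triggers these kills: on Cloudron each app runs in its own Docker container, and the container's memory limit is enforced through a memory cgroup, so an app can hit its own limit while the host still has gigabytes free. A minimal Python sketch, assuming a host using cgroup v2, that summarises one cgroup's limit, usage and OOM-kill count from the kernel's `memory.max`, `memory.current` and `memory.events` files. The function name is hypothetical, and where an app container's cgroup directory lives under `/sys/fs/cgroup` depends on the Docker and systemd setup, so no path is assumed here:

```python
from pathlib import Path


def cgroup_memory_summary(cgroup_dir):
    """Read limit, usage and OOM-kill count from a cgroup v2 directory.

    cgroup_dir: the app container's cgroup directory (location depends on the host setup).
    """
    d = Path(cgroup_dir)
    raw_limit = (d / "memory.max").read_text().strip()
    limit = None if raw_limit == "max" else int(raw_limit)  # "max" means unlimited
    current = int((d / "memory.current").read_text().strip())
    events = {}
    for line in (d / "memory.events").read_text().splitlines():
        if line.strip():
            key, value = line.split()  # e.g. "oom_kill 1"
            events[key] = int(value)
    return {
        "limit_mib": None if limit is None else limit / 2**20,
        "current_mib": current / 2**20,
        "oom_kills": events.get("oom_kill", 0),  # processes killed by this cgroup's OOM handling
    }
```

A non-zero `oom_kills` value means the kernel killed a process for exceeding this cgroup's limit, whatever the host's overall free memory looked like at the time.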

-

I couldn't figure it out with dmesg, but grepping syslog worked. I have no clue what all those numbers mean, though.
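For anyone equally puzzled by these numbers: in a kernel OOM-killer line, "Memory cgroup out of memory" means a memory cgroup (here, a container) hit its own limit; `total-vm` is the process's virtual address space, not real memory use; `anon-rss`, `file-rss` and `shmem-rss` are the anonymous, file-backed and shared-memory pages actually resident in RAM, in kB; `pgtables` is the memory used by its page tables; and `oom_score_adj` is the adjustment applied to its OOM score. A minimal Python sketch that pulls those fields out of such a line and converts kB to MiB; the regex is written for the line format in the syslog output below and is an assumption that may need adjusting for other kernel versions:

```python
import re

# Matches the "Killed process" part of a kernel OOM-killer log line.
KILL_RE = re.compile(
    r"Killed process (?P<pid>\d+) \((?P<name>[^)]*)\) "
    r"total-vm:(?P<total_vm>\d+)kB, anon-rss:(?P<anon_rss>\d+)kB, "
    r"file-rss:(?P<file_rss>\d+)kB, shmem-rss:(?P<shmem_rss>\d+)kB"
)


def parse_oom_kill(line):
    """Return the killed process and its memory figures in MiB, or None if the line doesn't match."""
    m = KILL_RE.search(line)
    if m is None:
        return None
    info = {
        "pid": int(m["pid"]),
        "name": m["name"],
        # True when a memory cgroup (e.g. a container) hit its own limit
        "cgroup_limit_hit": "Memory cgroup out of memory" in line,
    }
    for field in ("total_vm", "anon_rss", "file_rss", "shmem_rss"):
        info[field + "_mib"] = int(m[field]) / 1024  # kB -> MiB
    return info


line = ("Jul 5 11:57:32 v2202211115363206125 kernel: [517559.408274] Memory cgroup out of memory: "
        "Killed process 36850 (bundle) total-vm:665380kB, anon-rss:372784kB, file-rss:6456kB, "
        "shmem-rss:0kB, UID:1000 pgtables:1276kB oom_score_adj:0")
print(parse_oom_kill(line))
```

For this line the sketch reports about 364 MiB of anonymous memory resident for the killed `bundle` process, out of roughly 650 MiB of virtual address space.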

root@Cloudron:~# grep "Killed process" /var/log/syslog

Jul 5 11:57:32 v2202211115363206125 kernel: [517559.408274] Memory cgroup out of memory: Killed process 36850 (bundle) total-vm:665380kB, anon-rss:372784kB, file-rss:6456kB, shmem-rss:0kB, UID:1000 pgtables:1276kB oom_score_adj:0

-

Personally, I'm the only user of my whole Cloudron instance (for now), and Linkding has barely 200 links... OK, I must admit that in my Brave browser I use:

- Linkding Extension

- Linkding Injector

Anyway, in general for Cloudron, it would be nice to have some kind of agent (AI, sic) that tries to predict these volatile parameters and preemptively recommends settings for them:

- MEMORY LIMIT

- CPU SHARES
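A real predictive agent is a bigger project, but the simplest form of such a memory recommendation can be sketched in Python: take the highest usage observed for an app, add headroom, and round up to a convenient step. The function name, the 1.5x headroom factor and the 128 MiB step are hypothetical choices, and because the input is sampled usage it shares the blind spot for short spikes discussed earlier in the thread:

```python
import math


def recommend_memory_limit_mib(samples_mib, headroom=1.5, step_mib=128):
    """Suggest a memory limit: observed peak times a headroom factor, rounded up to step_mib."""
    if not samples_mib:
        raise ValueError("need at least one usage sample")
    target = max(samples_mib) * headroom
    return math.ceil(target / step_mib) * step_mib


# Hypothetical usage samples (MiB) for a small single-user app.
print(recommend_memory_limit_mib([180, 240, 310, 275]))
```

With these made-up samples the peak is 310 MiB, 1.5x headroom gives 465 MiB, and rounding up to the next 128 MiB step suggests 512 MiB.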

-

@JOduMonT I love the Linkding Injector (which displays Linkding results on any Google Search results page) as well. Very helpful. Do other bookmark managers provide this too?