Oh, got it—thanks.

ntnsndr

Posts

-

Has anyone got the Element X App working with Cloudron Matrix?

-

Has anyone got the Element X App working with Cloudron Matrix?

Thanks for the clarity. That's disappointing, since unified login is crucial for my server.

Again, what an absurd situation: the flagship app for Matrix doesn't work with SSO. How the heck do they explain that to their government/military customers?

Thanks, team Cloudron!

-

Has anyone got the Element X App working with Cloudron Matrix?

@scooke What method did you use? Were you able to use the QR code? Or did you manually join the server by address without getting the "selected homeserver doesn't support password or OIDC login" error?

-

Has anyone got the Element X App working with Cloudron Matrix?

I'm encountering this as well. It is a disappointing statement on the Matrix ecosystem that the flagship Matrix app can't always log into the standard Matrix server. Is there any way to build in a workaround for Cloudron?
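For anyone diagnosing this: the error text suggests Element X looks for either password login or OIDC on the homeserver. One way to see what a server advertises is the standard Matrix client-server endpoint `GET /_matrix/client/v3/login`, which returns a list of `flows`. Below is a minimal Python sketch, assuming your homeserver URL is reachable from where you run it. It only inspects these login flows and does not check OIDC discovery, so a missing `m.login.password` is a hint, not a full diagnosis.

```python
import json
import urllib.request

# Flow types defined in the Matrix client-server spec.
PASSWORD_FLOW = "m.login.password"
SSO_FLOW = "m.login.sso"


def login_flow_types(body: str) -> set:
    """Return the flow types listed in a GET /_matrix/client/v3/login response body."""
    data = json.loads(body)
    return {flow.get("type", "") for flow in data.get("flows", [])}


def fetch_login_flows(homeserver: str) -> set:
    """Fetch and parse the login flows a homeserver advertises.

    `homeserver` is the base URL, e.g. "https://matrix.example.org" (placeholder).
    """
    url = homeserver.rstrip("/") + "/_matrix/client/v3/login"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return login_flow_types(resp.read().decode("utf-8"))
```

For example, if `fetch_login_flows("https://matrix.example.org")` returned only `m.login.sso` and `m.login.token`, password login would not be on offer, and the client would have to rely on SSO or OIDC instead.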

-

Matrix WhatsApp Bridge

I'm looking to install the Zulip bridge to connect our Matrix server with a Zulip chat that our collaborators use. Supporting bridges seems important, since bridging is central to Matrix's value proposition. (Of course, it is absurd that bridging requires installing third-party software rather than some kind of simple, integrated plugin architecture.)

Is there a way to set this up in /app/data so that it persists through updates?

Here are the options I'm looking at:

https://github.com/mautrix/zulip

https://github.com/GearKite/MatrixZulipBridge

-

Propagation issue on a new domain/app deployment

Okay, found the solution. Somehow there was a URL redirect in Namecheap from EXAMPLE.org to www.EXAMPLE.org. When I removed it, the installation succeeded. Thank you, and sorry for the trouble.

-

Propagation issue on a new domain/app deployment

Yes, both commands return the same result:

EXAMPLE.org has address 192.64.119.184
EXAMPLE.org has address 193.46.198.90

The bottom IP address is the one listed under IPv4 network on the Cloudron backend. I am not sure where the first one is coming from. It appears to be a local network IP.

-

Propagation issue on a new domain/app deployment

This is strange. I haven't encountered this issue before.

I do have a static IP. I'm comparing the records to the ones that are working on Namecheap. The A Record IP address is the same, and so is the AAAA Record. So I'm not sure what I could change.

Currently the error I'm getting when I navigate to the website is that there is a self-signed security certificate.

I just tried re-establishing the domain on Cloudron and refreshing the certificates, but we are still stuck at the "Waiting for propagation" stage when installing. In my browser, the website is on the page that says "You are seeing this page because the DNS record of magicmountaintalks.org is set to this server's IP but Cloudron has no app configured for this domain."

Update: After a few minutes of trying the install, it reverts to the "The certificate is not trusted because it is self-signed" error. If I refresh the certificates, I can access the installed app, but the Cloudron backend treats the install as failed, so I can't do any administrative tasks. Since the site is accessible, this seems to me more likely a Cloudron issue than a Namecheap one.

Is there anything else you can recommend I try?

Thank you.

-

Propagation issue on a new domain/app deployment

I am having an issue setting up a new app on my Cloudron system. I registered the domain on Namecheap two days ago and immediately set it up in Cloudron using the API method, which has always worked fine. It has been over 24 hours, but when I attempt to install a WordPress site (Managed), some weird things happen. The installation process gets stuck at

Installing - Waiting for propagation of EXAMPLE.org (anonymized).

And the logs for the app look like this:

Oct 27 09:43:44 box:dns/waitfordns waitForDns: nameservers are ["dns2.registrar-servers.com","dns1.registrar-servers.com"]
Oct 27 09:43:44 box:dns/waitfordns resolveIp: Checking A for EXAMPLE.org at 156.154.133.200
Oct 27 09:43:44 box:dns/waitfordns isChangeSynced: EXAMPLE.org (A) was resolved to 192.64.119.184,193.46.198.90 at NS dns2.registrar-servers.com (156.154.133.200). Expecting 193.46.198.90. Match false
Oct 27 09:43:44 box:dns/waitfordns resolveIp: Checking A for EXAMPLE.org at 2610:a1:1025::200
Oct 27 09:43:44 box:dns/waitfordns isChangeSynced: EXAMPLE.org (A) was resolved to 192.64.119.184,193.46.198.90 at NS dns2.registrar-servers.com (2610:a1:1025::200). Expecting 193.46.198.90. Match false
Oct 27 09:43:44 box:dns/waitfordns waitForDns: EXAMPLE.org at ns dns2.registrar-servers.com: not done
Oct 27 09:43:44 box:dns/waitfordns Attempt 187 failed. Will retry: ETRYAGAIN

Here's where it gets a little weirder. If I renew the domain certificates across Cloudron while it is stuck there, the website starts working: if I go to EXAMPLE.org, I see a WordPress website. But I can't get the installation process unstuck. And if I try reinstalling, the site disappears until I renew again, which once again does not get the installation unstuck.

I attempted to install Surfer at that domain to see if it was a WordPress issue, but the same problem occurred.

Are there other diagnostics you'd recommend? Thanks in advance for your assistance.
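The `isChangeSynced` lines in the log above point at the cause: the authoritative nameserver returned two A records (192.64.119.184 and 193.46.198.90) while Cloudron expected only 193.46.198.90, and the later fix (removing the Namecheap URL redirect) presumably removed the extra record. A minimal Python sketch of that comparison, assuming the check requires the resolved set to equal the expected address exactly; this is inferred from the log lines, not taken from Cloudron's code, and both function names are hypothetical.

```python
import socket


def resolve_a(hostname: str) -> set:
    """Resolve IPv4 addresses through the local system resolver.

    Cloudron queries the domain's authoritative nameservers directly;
    this helper only approximates that via whatever resolver the OS uses.
    """
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return {info[4][0] for info in infos}


def is_change_synced(resolved: set, expected: str) -> bool:
    """Hypothetical check inferred from the log: synced only when the
    domain resolves to the expected IP and nothing else."""
    return resolved == {expected}
```

Under that assumption, any extra A record makes every retry fail, which would explain why the attempt counter kept climbing even though the correct IP was among the answers.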

-

Nextcloud update pushed too early?

Just adding that I'm having the same issue with the disappearing Group Folders (now called Team Folders). It is a bit of a disaster, because our group folder holds the most important data in the organization.

I noticed that the apps "Teams" and "Team Folders" were both disabled after the update. I was able to reactivate Teams, but enabling Team Folders produces this error in the UI:

An error occurred during the request. Unable to proceed. json_decode(): Argument #1 ($json) must be of type string, null given

I'm grateful for how this community helps each other work through these issues together.

I'm working on backup/restore.

-

Collabora via Nextcloud app or Cloudron app?

Okay, thanks! I just switched to the Cloudron app, and it is working nicely so far.

-

Collabora via Nextcloud app or Cloudron app?

I'm curious about others' experiences: do you use Collabora via Nextcloud's internal app, or via the external Cloudron app?

I have been using the former. It works at first, but after some use it starts showing this error:

Document loading failed Failed to load Nextcloud Office - please try again later

Is there a reason we should be using the external Cloudron app?

I like the idea of keeping Nextcloud and Collabora in one place through the Nextcloud app, but if there's a good reason to use the Cloudron app instead, I could go back to it.

-

Cloudron users can't create accounts

Yes, that worked for me! That seems strange, but it works.

-

Cloudron users can't create accounts

@james good question :) Yes, I did, multiple times.

-

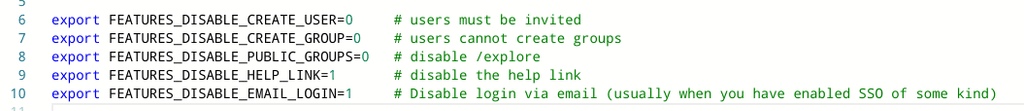

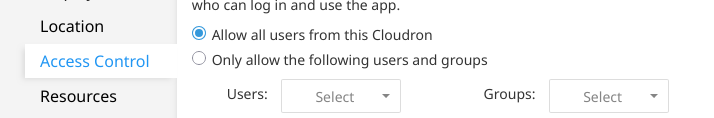

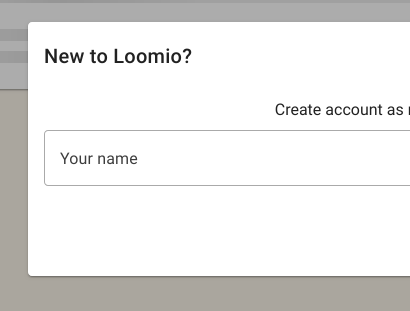

Cloudron users can't create accounts

I'm having trouble enabling my users to access Loomio. For now, at least, the goal is for all Cloudron users to be able to log in. Here is my env.sh:

And the Cloudron Access Control:

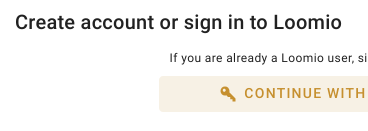

I was able to create an initial account as the Cloudron admin. But when a less-privileged account tries, here's what happens. They click the key button here:

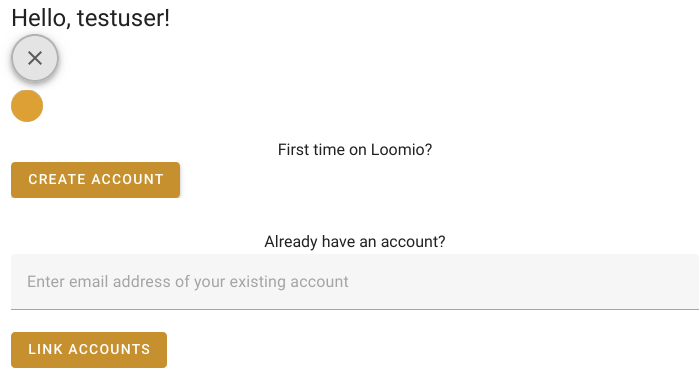

Then they log into Cloudron if they haven't already. Then they get this:

I click Create Account, and the next screen is this:

I then enter a name, hit enter, and get this:

The logs look like this:

Sep 09 13:59:23 [09/Sep/2025:19:59:23 +0000] 172.18.0.1 "GET /socket.io/?channel_token=0a44509556dc6849817ae75c99dc27bb&EIO=4&transport=websocket&sid=DUSj2M0mfdpeHoplAAAG HTTP/1.1" "Mozilla/5.0 (X11; Linux x86_64; rv:142.0) Gecko/20100101 Firefox/142.0" 101 343 "-" 338.888 338.888
Sep 09 13:59:27 I, [2025-09-09T19:59:27.053758 #89] INFO -- : source=rack-timeout id=a9a67a5f-b092-4cc6-871a-507461756d07 wait=3ms timeout=15000ms state=ready
Sep 09 13:59:27 I, [2025-09-09T19:59:27.054568 #89] INFO -- : [a9a67a5f-b092-4cc6-871a-507461756d07] Started POST "/api/v1/registrations" for 2601:280:4681:7f50:d03e:b131:c42b:9dbd at 2025-09-09 19:59:27 +0000
Sep 09 13:59:27 I, [2025-09-09T19:59:27.061332 #89] INFO -- : [a9a67a5f-b092-4cc6-871a-507461756d07] Processing by Api::V1::RegistrationsController#create as JSON
Sep 09 13:59:27 I, [2025-09-09T19:59:27.061437 #89] INFO -- : [a9a67a5f-b092-4cc6-871a-507461756d07] Parameters: {"user" => {"name" => "test", "email" => "[FILTERED]"}, "registration" => {"user" => {"name" => "test", "email" => "[FILTERED]"}}}
Sep 09 13:59:27 I, [2025-09-09T19:59:27.097082 #89] INFO -- : [a9a67a5f-b092-4cc6-871a-507461756d07] Filter chain halted as :permission_check rendered or redirected
Sep 09 13:59:27 I, [2025-09-09T19:59:27.098055 #89] INFO -- : [a9a67a5f-b092-4cc6-871a-507461756d07] Completed 422 Unprocessable Entity in 36ms (Views: 0.2ms | ActiveRecord: 0.0ms (0 queries, 0 cached) | GC: 23.6ms)
Sep 09 13:59:27 I, [2025-09-09T19:59:27.099929 #89] INFO -- : [a9a67a5f-b092-4cc6-871a-507461756d07] source=rack-timeout id=a9a67a5f-b092-4cc6-871a-507461756d07 wait=3ms timeout=15000ms service=46ms state=completed
Sep 09 13:59:27 [09/Sep/2025:19:59:27 +0000] 172.18.0.1 "POST /api/v1/registrations HTTP/1.1" "Mozilla/5.0 (X11; Linux x86_64; rv:142.0) Gecko/20100101 Firefox/142.0" 422 1074 "https://loomio.medlab.host/dashboard" 0.049 0.049
Sep 09 13:59:30 [09/Sep/2025:19:59:30 +0000] 172.18.0.1 "GET / HTTP/1.1" "Mozilla (CloudronHealth)" 301 203 "-" 0.004 0.004
Sep 09 13:59:32 I, [2025-09-09T19:59:32.775609 #81] INFO -- : source=rack-timeout id=de58afb4-baea-43de-bed7-3b260013092f wait=2ms timeout=15000ms state=ready
Sep 09 13:59:32 I, [2025-09-09T19:59:32.776522 #81] INFO -- : [de58afb4-baea-43de-bed7-3b260013092f] Started GET "/api/v1/boot/version?version=3.0.5&release=1757100919&now=1757447973262" for 128.138.65.206 at 2025-09-09 19:59:32 +0000
Sep 09 13:59:32 I, [2025-09-09T19:59:32.778088 #81] INFO -- : [de58afb4-baea-43de-bed7-3b260013092f] Processing by Api::V1::BootController#version as JSON
Sep 09 13:59:32 I, [2025-09-09T19:59:32.778625 #81] INFO -- : [de58afb4-baea-43de-bed7-3b260013092f] Parameters: {"version" => "3.0.5", "release" => "1757100919", "now" => "1757447973262", "boot" => {}}
Sep 09 13:59:32 I, [2025-09-09T19:59:32.782954 #81] INFO -- : [de58afb4-baea-43de-bed7-3b260013092f] Completed 200 OK in 4ms (Views: 0.1ms | ActiveRecord: 0.0ms (0 queries, 0 cached) | GC: 0.8ms)
Sep 09 13:59:32 I, [2025-09-09T19:59:32.783555 #81] INFO -- : [de58afb4-baea-43de-bed7-3b260013092f] source=rack-timeout id=de58afb4-baea-43de-bed7-3b260013092f wait=2ms timeout=15000ms service=8ms state=completed
Sep 09 13:59:32 [09/Sep/2025:19:59:32 +0000] 172.18.0.1 "GET /api/v1/boot/version?version=3.0.5&release=1757100919&now=1757447973262 HTTP/1.1" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36" 200 1237 "https://loomio.medlab.host/dashboard" 0.010 0.010
Sep 09 13:59:40 [09/Sep/2025:19:59:40 +0000] 172.18.0.1 "GET / HTTP/1.1" "Mozilla (CloudronHealth)" 301 203 "-" 0.004 0.004

Any thoughts on why this Cloudron user can't log in to Loomio?

Thanks in advance, and thank you for supporting this excellent app.
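In long combined logs like the one above, the failing registration is easier to spot if lines are grouped by the Rails request id (the UUID in square brackets). A minimal Python sketch, assuming the log has been saved with one entry per line; `failed_request_ids` and `lines_for_request` are hypothetical helpers, not part of Loomio or Cloudron.

```python
import re

# Rails tags each line with the request UUID in square brackets, e.g.
# "[a9a67a5f-b092-4cc6-871a-507461756d07] Started POST ..."
REQUEST_ID = re.compile(
    r"\[([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})\]"
)
COMPLETED = re.compile(r"Completed (\d{3})")


def failed_request_ids(log_lines):
    """Return request ids whose 'Completed' line reports a 4xx or 5xx status."""
    failed = []
    for line in log_lines:
        rid = REQUEST_ID.search(line)
        status = COMPLETED.search(line)
        if rid and status and status.group(1)[0] in "45":
            failed.append(rid.group(1))
    return failed


def lines_for_request(log_lines, request_id):
    """Return every line tagged with the given request id, in order."""
    tag = f"[{request_id}]"
    return [line for line in log_lines if tag in line]
```

Applied to the log above, this would isolate request `a9a67a5f-b092-4cc6-871a-507461756d07`, whose lines show `Filter chain halted as :permission_check` immediately before `Completed 422 Unprocessable Entity`.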

-

Email forwarding with Amazon SES

@nichu42 Thanks for engaging. Honestly, it could go either way. That does appear to be the default meaning of "forwarding" in the Rainloop filter, but it would be fine if all the messages came from the same address, if need be.

-

SSO login failing

Here's the latest I'm seeing in the logs:

Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET / HTTP/1.1" 200 3875 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /_media/wiki/logo.png HTTP/1.1" 200 45912 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/exe/css.php?t=dokuwiki&tseed=ef7ebc214c4220eb1d63337ef774614f HTTP/1.1" 200 22131 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/exe/jquery.php?tseed=8faf3dc90234d51a499f4f428a0eae43 HTTP/1.1" 200 96922 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/exe/js.php?t=dokuwiki&tseed=ef7ebc214c4220eb1d63337ef774614f HTTP/1.1" 200 27334 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/exe/taskrunner.php?id=start&1755798917 HTTP/1.1" 200 42 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/images/license/button/cc-by-nc-sa.png HTTP/1.1" 200 396 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/apple-touch-icon.png HTTP/1.1" 200 6336 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/button-css.png HTTP/1.1" 200 297 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/button-donate.gif HTTP/1.1" 200 187 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/button-dw.png HTTP/1.1" 200 398 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/button-html5.png HTTP/1.1" 200 305 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/button-php.gif HTTP/1.1" 200 207 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/favicon.ico HTTP/1.1" 200 7406 "https://handbook.medlab.host/" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"
Aug 21 11:55:17 128.138.65.244 - - [21/Aug/2025:17:55:17 +0000] "GET /lib/tpl/dokuwiki/images/page-gradient.png HTTP/1.1" 200 209 "https://handbook.medlab.host/lib/exe/css.php?t=dokuwiki&tseed=ef7ebc214c4220eb1d63337ef774614f" "Mozilla/5.0 (X11; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0"

Thanks!

-

Email forwarding with Amazon SES

Bumping! A bit desperate for help here! Thanks :)

-

SSO login failing

I'm afraid that's not it; I just transferred the pages/ directory. Nothing else.

-

Email forwarding with Amazon SES

I recently switched an email address from sending via Cloudron to sending via Amazon SES. I was hoping this would improve deliverability and get rid of persistent errors around the PTR configuration. But now forwarding messages to a Gmail address through Rainloop doesn't work. I'm getting this error:

This is a permanent error; I've given up. Sorry it didn't work out.
Intended Recipients: <ADDRESS2@EXAMPLE.COM>
Failure Reason: Error: 554 Message rejected: Email address is not verified. The following identities failed the check in region US-EAST-2: ADDRESS1@EXAMPLE.COM
Original-Envelope-Id: <e4dce8d2-4e99-4de8-8c4d-10ac774a4fb7@EXAMPLE.COM>
Reporting-MTA: dns;my.medlab.host
Arrival-Date: Tue, 19 Aug 2025 14:47:08 +0000
Final-Recipient: rfc822;ADDRESS2@EXAMPLE.COM
Action: failed
Remote-MTA: 3.22.8.243
Diagnostic-Code: smtp;554 Message rejected: Email address is not verified. The following identities failed the check in region US-EAST-2: ADDRESS1@EXAMPLE.COM

Obviously I cannot verify the addresses that people are writing me from, since I don't control those addresses or domains.

Is there a way to properly forward messages from arbitrary email addresses if using SES for delivery?

Thanks for your assistance.
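The bounce says SES rejected the message because the `From` identity (the original sender, ADDRESS1) is not verified in the account. A common workaround, which the follow-up reply above says would be acceptable, is to send the forwarded copy from a verified address and move the original sender into `Reply-To`. A minimal Python sketch using only the standard `email` package, assuming you control the step that builds the forwarded message; `rewrite_for_forwarding` and all addresses are hypothetical placeholders, and this is not how Rainloop or Cloudron implement forwarding.

```python
from email.message import EmailMessage
from email.utils import formataddr, parseaddr


def rewrite_for_forwarding(original: EmailMessage, verified_sender: str,
                           forward_to: str) -> EmailMessage:
    """Build a forwarded copy whose From is an SES-verified identity.

    The original sender is preserved in Reply-To so replies still reach them.
    Only the plain-text body is copied; attachments are ignored in this sketch.
    """
    name, addr = parseaddr(original.get("From", ""))
    fwd = EmailMessage()
    # Display name keeps the original sender visible; the address is the verified one.
    fwd["From"] = formataddr((f"{name or addr} (via forward)", verified_sender))
    fwd["To"] = forward_to
    if addr:
        fwd["Reply-To"] = formataddr((name, addr))
    fwd["Subject"] = original.get("Subject", "")
    body = original.get_body(preferencelist=("plain",))
    fwd.set_content(body.get_content() if body is not None else "")
    return fwd
```

The design trade-off is that every forwarded message appears to come from the same verified address, so recipients rely on the display name and `Reply-To` to see who actually wrote it.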