LibreChat

-

What's the difference between OpenWebUI and LibreChat? Just wondering, I don't mind adding similar apps. But it seems they both can operate on an API key and are just frontends.

There are many apps in the pipeline already, so we'll have to wait a bit to get to this.

-

What's the difference between OpenWebUI and LibreChat?

LibreChat is supposedly more difficult to deploy than OpenWebUI. If so, Cloudron could add more value by making LibreChat easier to deploy.

Here is a quick summary via Grok:

"Here's a comparison between LibreChat and Open WebUI based on the available information:

Open Source:

LibreChat: Yes, LibreChat is open-source, available on GitHub. It uses an MIT license, allowing for free use, modification, and distribution.

Open WebUI: Also open-source, with its code available on GitHub. It uses the Apache 2.0 license, which is similarly permissive.

Development Activity:

LibreChat: It shows active development, with regular updates and contributions from the community. It's noted for integrating with numerous AI providers quickly after their launch.

Open WebUI: Has a healthy development pace with frequent commits and a focus on enhancing user experience and adding new features. The project's community seems engaged, with various pipelines and integrations being developed.

User Base:

LibreChat: While specific user numbers aren't detailed, it's popular among those looking for a customizable, enterprise-level AI chat interface, with a focus on privacy and control over data.

Open WebUI: It's gaining traction for its ease of setup, user-friendly interface, and extensive features, particularly among those interested in self-hosting AI with control over their data.

Docker Support:

LibreChat: Offers robust Docker support for easy deployment, making it accessible for those familiar with containerization.

Open WebUI: Provides a Docker-based installation for both CPU and GPU environments, simplifying setup and deployment.

Supported Features/Models:

LibreChat: Features include AI model switching, conversation branching, multimodality, and integration with various AI services like OpenAI, Azure, Anthropic, and Google. It supports RAG through integration with RAG API.

Open WebUI: Known for its versatility, supporting models from Ollama and OpenAI-compatible APIs, with features like custom RAG, message monitoring, user rate limiting, and real-time translation. It also allows for the creation of custom models and real-time web browsing capabilities.

Retrieval Augmented Generation (RAG):

LibreChat: Integrates with RAG API for uploading files and asking questions, enhancing its capability for document-based Q&A. However, it lacks advanced file management features.

Open WebUI: Offers an integrated RAG process that works well for small-scale document interaction, although it's noted to be less scalable for larger sets.

License:

LibreChat: MIT License.

Open WebUI: Apache 2.0 License.

Pros/Cons:

LibreChat Pros:

Enterprise-level authentication and flexibility.

Supports a wide variety of AI models and services.

Strong focus on privacy with data retention control.

LibreChat Cons:

Configuration might be more complex for some users.

Lacks some of the administrative controls found in Open WebUI.

Open WebUI Pros:

Streamlined user management with a user-friendly interface.

Strong support for custom integrations and pipelines.

Excellent for those looking for an easy-to-set-up AI interface.

Open WebUI Cons:

Less granular user management compared to LibreChat.

RAG implementation might not scale well for large document sets.

Both platforms serve different needs, with LibreChat leaning towards enterprise solutions and Open WebUI providing a more consumer-friendly setup with robust community support. Your choice might depend on your specific requirements, particularly regarding ease of use, control over AI models, and data privacy."

-

@girish Open WebUI looks more focused on also hosting the open models, so that needs a GPU.

LibreChat is more for using APIs to externally hosted model providers.

I'd say LibreChat is the more mature project, and appropriate for Cloudron to have first, as it will work with all existing hardware.

It's also one of those apps that pays for itself, in that the alternative of using Poe.com for a team of users is more costly than a Cloudron subscription and hosting.

-

@marcusquinn we already have Open WebUI, that's why I asked. Thanks for explaining the difference.

@girish Oh, didn't notice that. Should come with a warning that you're about to download a massive image!

Yeah, LibreChat is much smaller and works without any local models.

I can see use-cases for both, but for most people I think they'll want LibreChat as a way to save on monthly costs for subscriptions to various AI models, as this enables just paying for API token usage, with no monthly fees. Once you see how it works, I think this would be the one you'd personally want to use as a daily driver for these things, too.

-

@girish What kinda position in the queue are we talking for LibreChat?

Right now, it's the single most useful, and missing, app for my collaboration toolkit.

The local version is great, but it's the collaboration I'm missing, where a self-hosted version of this would make it so much easier to share and maintain task-specific prompts and agents among a team.

No GPUs needed. It's just by far the best interface to all the many AI APIs, to bring some order back to the multi-modal madness of using each of their interfaces separately and without easy sharing.

-

It's not in the pipeline, at least. Isn't this exactly the same as OpenWebUI? We already use OpenWebUI with our OpenAI keys.

@girish You can download and run a local version of LibreChat to see the difference. However, a local version cannot be shared.

Here's the problem with all AI: it's "generic" and unreliable.

So you need extremely "specific" pre-prompts to get consistent quality outputs.

Some people call this a Standard Operating Procedure (SOP). Essential in teamwork, and onboarding new people to existing workflows and processes.

OK, so you can Q&A your AI locally. Now you want to work with another person so that you all get consistent outputs from similar inputs. What do you do?

Message back and forth all the pre-prompts and configurations that each of you are using, iterating, and adapting? No one has the bandwidth for that.

This is why we have anything online, to de-duplicate effort and give consistency of knowledge.

If you have a hosted version of LibreChat, where you create task-specific AI apps, your team can share the use of it, consistently.

To me, Cloudron is for collaboration.

Open Web UI is, at this time, mostly an individual tool.

If you try Poe.com, you should get the concept of AI "apps" that are purpose-specific, and refined for consistent quality output.

LibreChat offers this, and is therefore more valuable and useful to a team.

Open Web UI, at this time, is just not offering anywhere near the same. It's interesting, but just not offering much that a local app can't do.

LibreChat is designed specifically for collaboration.

-

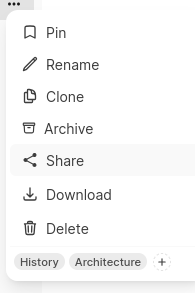

I can give it a try when I find the time, but fwiw you can share in openwebui too. You can share the entire chat using the share button.

@girish Sorry, that misses the point. I understand the confusion, though, if you have not used Poe.com or similar to see what "apps" are in this context.

I don't mean share a conversation. They all do that.

I mean share the entire setup across multiple users.

Let's say you want to create an AI chat app that has all the knowledge of the latest Cloudron documentation already loaded into it, so that all the users on your shared chat platform can use it to get better AI answers to their questions. LibreChat can do that. They call it "Agents" in the interface.

Similar concept to Poe.com apps. You can load them up with tons of recent knowledge that is additional to the AI model it is connecting to.

Update once, and all users of that "App" are now chatting with the latest additional knowledge.

Sharing a chat from the base model with no specific knowledge is just not the same thing.

-

I am also confused about how this is different from openwebui, where you can create a custom model that is enriched with your own knowledge (aka your own documents) and share that model with others to chat with. I am no expert here though and have just used openwebui so far. Works great.

-

Surely there are quite a few differences between those two products. In RAG, for example, each takes a different approach, and I can't say which one is better because it depends on the use case. Furthermore, OpenWebUI and LibreChat take different approaches to providing AI agents that perform specific tasks. Hopefully the Cloudron team will accommodate this app wishlist request and make LibreChat available on Cloudron. I see there's also a wish for a similar product named AnythingLLM, but frankly I see LibreChat as the better option to accommodate.

-

By reading the descriptions here, I detected 2 major differences.

- It seems like LibreChat CAN be used with the APIs of most of the big external LLMs available, which Open WebUI can NOT. It can use only OpenAI's API.

- It seems like LibreChat must use the API of an external RAG system, while Open WebUI has its own RAG system integrated.

- Yeah, there might be a third one. Open WebUI is geared to use most of the open source LLMs available right now. It even has a Hugging Face pipeline, and it has a great community with available plugins, functions and tasks, while LibreChat does not seem, at first glance, to be geared to use open source LLMs.

There would be a use for both and, since Open WebUI is already on Cloudron, it could be cool to add LibreChat as well. On another note, AnythingLLM has also been suggested. Has any comparison been made with that also amazing LLM UI? It would be interesting to find out before spending time adding a new LLM UI on Cloudron, so that resources are kept for other much-in-demand apps that have been waiting for a long time.

-

It seems like LibreChat CAN be used with the APIs of most of the big external LLMs available, which Open WebUI can NOT. It can use only OpenAI's API.

I've only ever played a little with OpenWebUI on Cloudron and never connected it to any API, but I wondered about this.

In short, you can connect OpenWebUI to other APIs too via functions or LiteLLM integration (or via their pipelines - although I gather that's even more involved), it's just not as easy as connecting to the OpenAI API, see e.g. https://github.com/open-webui/open-webui/issues/3288
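To illustrate why a proxy such as LiteLLM can help here, below is a minimal sketch of what an OpenAI-style chat request looks like. It assumes the proxy exposes an OpenAI-compatible `/chat/completions` route; the base URL, key, and model name are hypothetical placeholders, and the request is only built, never sent.

```python
import json
from urllib.request import Request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> Request:
    """Build (but do not send) a POST to an OpenAI-style /chat/completions route."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical values: a proxy on localhost, a placeholder key and model name.
req = build_chat_request("http://localhost:4000/v1", "sk-placeholder", "example-model", "Hello")
print(req.full_url)
```

If that assumption holds, a frontend that already speaks this format only needs a different base URL and key to reach another provider through the proxy, which is roughly the idea behind the LiteLLM route mentioned above.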

-

@jdaviescoates Yeah, there are many things in development. It's actually pretty heavily developed and updated several times a week, so I've no doubt it'll come to a point where it'll connect to big tech's APIs as well, sooner rather than later...

-

@NCKNE Can you now invite other private users to use your private, task-specific, knowledge-primed models with WebUI?

From what I have seen:

If you work alone. I'm sure WebUI is fine.

If you work as a team, LibreChat has a very, very valuable feature that I've not seen existing or planned for WebUI.

I expect the difficulty in seeing the difference is for those who don't work as a team on things, and so will never have the need to share what LibreChat calls "agents".

Those working as a team just don't have a team-tool to collaborate with.

AI chat requires a ton of refinement for specific purposes.

If you want basic Q&A AI chat, use anything.

If you need a specific type and style of output, based on additional knowledge input, and for the output to be consistent across a team, the choices I know of are Poe.com with those fees, or LibreChat hosted on a server.

-

@firmansi Yeah, there might be things that are "better", and there might be things with WebUI that are "good enough".

The reason for LibreChat is specifically for collaboration.

I use Cloudron as a collaboration platform among many users for different things, so I value collaborative tools that save time and avoid repeated conversations or effort.

LibreChat solves that for me, and it would be nicer to have it self-hosted with Cloudron, than to be setting up a separate small server just for this one app, and then all the maintenance involved in that.

There's a need, problem, and solution. We don't need to re-invent anything. Just an ability to use each thing for its unique advantages.
