After Ubuntu 22/24 Upgrade syslog getting spammed and grows way to much clogging up the diskspace

-

Extra analysis.

Did this issue really just come up after the upgrade to 22/24?System Upgrade timers:

2025-02-19 14:33:55 Linux version 6.8.0-53-generic (buildd@lcy02-amd64-046) (x86_64-linux-gnu-gcc-13 (Ubuntu 13.3.0-6ubuntu2~24.04) 13.3.0, GNU ld (GNU Binutils for Ubuntu) 2.42) #55-Ubuntu SMP PREEMPT_DYNAMIC Fri Jan 17 15:37:52 UTC 2025 2025-02-19 12:33:55 Linux version 5.15.0-131-generic (buildd@lcy02-amd64-057) (gcc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0, GNU ld (GNU Binutils for Ubuntu) 2.38) #141-Ubuntu SMP Fri Jan 10 21:18:28 UTC 2025 2025-02-19 00:33:55 Linux version 5.4.0-205-generic (buildd@lcy02-amd64-055) (gcc version 9.4.0 (Ubuntu 9.4.0-1ubuntu1~20.04.2)) #225-Ubuntu SMP Fri Jan 10 22:23:35 UTC 2025

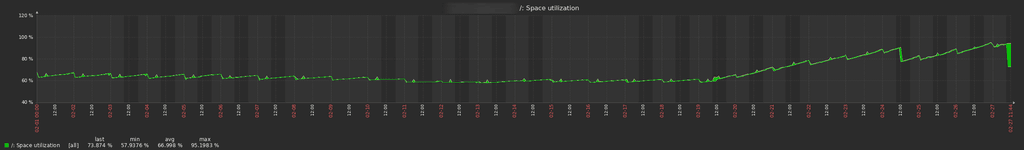

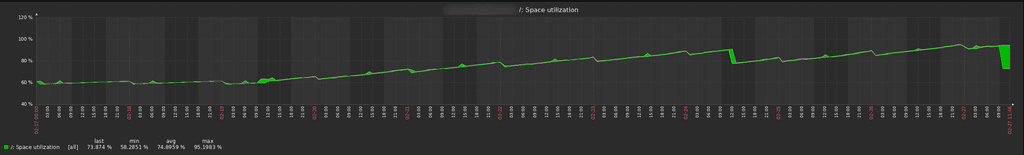

View of the whole month of February 2025.Zoom to 2025-02-17:

Yep, this looks very conclusive to me. This issue is only apparent in Ubuntu 22/24 with the non-fixed

syslog.js -

We're currently seeing this issue on v8.3.1 (Ubuntu 24.04.1 LTS)

@girish thank you for fixing this! When will this fix be rolled out?

@BrutalBirdie thanks for the quick fix! We applied it and it worked perfectly.

-

B BrutalBirdie referenced this topic on

B BrutalBirdie referenced this topic on

-

The patch described in https://forum.cloudron.io/topic/13361/after-ubuntu-22-24-upgrade-syslog-getting-spammed-and-grows-way-to-much-clogging-up-the-diskspace/11# Is not available anymore (Error 500)

-

The patch described in https://forum.cloudron.io/topic/13361/after-ubuntu-22-24-upgrade-syslog-getting-spammed-and-grows-way-to-much-clogging-up-the-diskspace/11# Is not available anymore (Error 500)

@necrevistonnezr That is because https://git.cloudron.io/ is currently throwing err 500.

Has been resolved, is now all good again.

-

Quickfix for users who need it NOW:

# get patch file, apply and remove and restart cloudron-syslog.service cd /home/yellowtent/box wget https://git.cloudron.io/platform/box/-/commit/063b1024616706971d4a1f9c50b5032727640120.diff git apply 063b1024616706971d4a1f9c50b5032727640120.diff rm -v 063b1024616706971d4a1f9c50b5032727640120.diff systemctl restart cloudron-syslog.service@BrutalBirdie this is great, solved the issue for me!

-

J james referenced this topic on

J james referenced this topic on

-

A alex-a-soto referenced this topic on

-

S SansGuidon referenced this topic on

S SansGuidon referenced this topic on

-

FYI I got the same problem a few times in past weeks, I understand this will be solved in Cloudron 9, right? But if yes I'm a bit confused that we need to apply such a patch manually when this could be part of an update. Anyway truncating the syslog + applying the patch got me rid of 60GB of spam in log files.

I'm interested in how others are dealing with this. -

@SansGuidon the issue arises only with the logs of some specific apps it seems. Did you notice which app specifically is growing in log size? Or is it all the app logs? But you are right, this problem is solved only in Cloudron 9.

@girish I don't think I've hit this issue myself, but why not just push out an 8.3.3 with this fix?

-

@SansGuidon the issue arises only with the logs of some specific apps it seems. Did you notice which app specifically is growing in log size? Or is it all the app logs? But you are right, this problem is solved only in Cloudron 9.

@girish said in After Ubuntu 22/24 Upgrade syslog getting spammed and grows way to much clogging up the diskspace:

@SansGuidon the issue arises only with the logs of some specific apps it seems. Did you notice which app specifically is growing in log size? Or is it all the app logs? But you are right, this problem is solved only in Cloudron 9.

Based on early investigation, some apps like Syncthing and Lamp, or even wallos, generate more logs than the rest. But this is just when looking at the data of past hours, and after applying the diff + logrotate tuning. I'll keep you posted if I find more interesting evidence. If someone has a script to quickly generate relevant stats, I'm interested.

-

@girish I don't think I've hit this issue myself, but why not just push out an 8.3.3 with this fix?

@jdaviescoates Yes, could help, as in current state, the syslog implementation generate errors in my logs, which could explain the logs growing in size. So I had to apply the diff to avoid this repeated pattern

2025-08-31T20:42:40.149390+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:40Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - IndexError: list index out of range 2025-08-31T20:42:40.240033+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:40Z ubuntu-cloudron-16gb-nbg1-3 cd4a6fed-6fd7-4616-ba0d-d0c38972774b 1123 cd4a6fed-6fd7-4616-ba0d-d0c38972774b - 172.18.0.1 - - [31/Aug/2025:20:42:40 +0000] "GET / HTTP/1.1" 200 45257 "-" "Mozilla (CloudronHealth)" 2025-08-31T20:42:41.676806+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:41Z ubuntu-cloudron-16gb-nbg1-3 mongodb 1123 mongodb - {"t":{"$date":"2025-08-31T20:42:41.675+00:00"},"s":"D1", "c":"REPL", "id":21223, "ctx":"NoopWriter","msg":"Set last known op time","attr":{"lastKnownOpTime":{"ts":{"$timestamp":{"t":1756672961,"i":1}},"t":42}}} 2025-08-31T20:42:43.067695+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:43Z ubuntu-cloudron-16gb-nbg1-3 mongodb 1123 mongodb - {"t":{"$date":"2025-08-31T20:42:43.066+00:00"},"s":"D1", "c":"NETWORK", "id":4668132, "ctx":"ReplicaSetMonitor-TaskExecutor","msg":"ReplicaSetMonitor ping success","attr":{"host":"mongodb:27017","replicaSet":"rs0","durationMicros":606}} 2025-08-31T20:42:44.061046+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - url = link.split(" : ")[0].split(" ")[1].strip("[]") 2025-08-31T20:42:44.061077+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^^^ 2025-08-31T20:42:44.061100+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - IndexError: list index out of range -

@jdaviescoates Yes, could help, as in current state, the syslog implementation generate errors in my logs, which could explain the logs growing in size. So I had to apply the diff to avoid this repeated pattern

2025-08-31T20:42:40.149390+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:40Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - IndexError: list index out of range 2025-08-31T20:42:40.240033+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:40Z ubuntu-cloudron-16gb-nbg1-3 cd4a6fed-6fd7-4616-ba0d-d0c38972774b 1123 cd4a6fed-6fd7-4616-ba0d-d0c38972774b - 172.18.0.1 - - [31/Aug/2025:20:42:40 +0000] "GET / HTTP/1.1" 200 45257 "-" "Mozilla (CloudronHealth)" 2025-08-31T20:42:41.676806+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:41Z ubuntu-cloudron-16gb-nbg1-3 mongodb 1123 mongodb - {"t":{"$date":"2025-08-31T20:42:41.675+00:00"},"s":"D1", "c":"REPL", "id":21223, "ctx":"NoopWriter","msg":"Set last known op time","attr":{"lastKnownOpTime":{"ts":{"$timestamp":{"t":1756672961,"i":1}},"t":42}}} 2025-08-31T20:42:43.067695+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:43Z ubuntu-cloudron-16gb-nbg1-3 mongodb 1123 mongodb - {"t":{"$date":"2025-08-31T20:42:43.066+00:00"},"s":"D1", "c":"NETWORK", "id":4668132, "ctx":"ReplicaSetMonitor-TaskExecutor","msg":"ReplicaSetMonitor ping success","attr":{"host":"mongodb:27017","replicaSet":"rs0","durationMicros":606}} 2025-08-31T20:42:44.061046+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - url = link.split(" : ")[0].split(" ")[1].strip("[]") 2025-08-31T20:42:44.061077+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~^^^ 2025-08-31T20:42:44.061100+00:00 ubuntu-cloudron-16gb-nbg1-3 syslog.js[970341]: <30>1 2025-08-31T20:42:44Z ubuntu-cloudron-16gb-nbg1-3 b5b418fc-0f16-4cde-81a1-1213880c9a10 1123 b5b418fc-0f16-4cde-81a1-1213880c9a10 - IndexError: list index out of range@SansGuidon I'm using Syncthing. I've not hit this issue in that my disk space isn't running out - but perhaps that just because I've got quite a big disk and that I recently cleaned up a load of Nextcloud stuff to give me lots more space because my disk was running out!

Where do I look to check if this issue is indeed affecting me after all? Thanks

-

From a deeper investigation, Syslog is exploding (GBs/day) because Cloudron’s backup job dumps full SQLite DBs (e.g. Kuma’s heartbeat table) to stdout, which gets swallowed by journald/rsyslog. One backup ran = ~500MB of SQL spam in syslog in my case. Four runs/day = 2GB+/day, at least. but it could be more depending on the setup. I just triggered a backup now and it grew by almost 2GB.

root@ubuntu-cloudron-16gb-nbg1-3:~# grep -nE "CREATE TABLE \[heartbeat\]|INSERT INTO heartbeat|BEGIN TRANSACTION" /var/log/syslog | head -10 1152:2025-08-31T21:00:37.705303+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: BEGIN TRANSACTION; 1153:2025-08-31T21:00:37.705386+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: CREATE TABLE [heartbeat](#015 1162:2025-08-31T21:00:37.705789+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(1,1,1,1,'200 - OK','2025-03-27 23:26:53.602',566,0,0); 1163:2025-08-31T21:00:37.705828+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(2,0,1,1,'200 - OK','2025-03-27 23:27:54.295',167,60,0); 1164:2025-08-31T21:00:37.705864+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(3,0,1,1,'200 - OK','2025-03-27 23:28:54.506',247,60,0); 1165:2025-08-31T21:00:37.705930+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(4,0,1,1,'200 - OK','2025-03-27 23:29:54.801',441,60,0); 1166:2025-08-31T21:00:37.705973+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(5,0,1,1,'200 - OK','2025-03-27 23:30:55.259',200,60,0); 1167:2025-08-31T21:00:37.706010+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(6,0,1,1,'200 - OK','2025-03-27 23:31:55.486',162,60,0); 1168:2025-08-31T21:00:37.706033+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(7,0,1,1,'200 - OK','2025-03-27 23:32:55.691',161,60,0); 1169:2025-08-31T21:00:37.706057+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(8,0,1,1,'200 - OK','2025-03-27 23:33:55.899',129,60,0);I'm interested to know if someone can validate this observation on another Cloudron instance ideally with an existing and long running Kuma instance:

Reproduction path

- Install Uptime Kuma on Cloudron

- Trigger a backup

- Watch /var/log/syslog: you’ll see CREATE TABLE heartbeat + endless INSERT lines

Root Cause

Backup script calls sqlite3 .dump → stdout → journald → rsyslog → syslog file. Logging pipelines aren’t designed for multi-hundred MB database dumps.Impact

- /var/log/syslog bloats to multi-GB

- Disk space wasted, logrotate churn

- Actual logs are drowned in noise

Fix?

- Don’t stream .dump to stdout. Redirect to file, or use .backup. Silence the dump in logs?

-

From a deeper investigation, Syslog is exploding (GBs/day) because Cloudron’s backup job dumps full SQLite DBs (e.g. Kuma’s heartbeat table) to stdout, which gets swallowed by journald/rsyslog. One backup ran = ~500MB of SQL spam in syslog in my case. Four runs/day = 2GB+/day, at least. but it could be more depending on the setup. I just triggered a backup now and it grew by almost 2GB.

root@ubuntu-cloudron-16gb-nbg1-3:~# grep -nE "CREATE TABLE \[heartbeat\]|INSERT INTO heartbeat|BEGIN TRANSACTION" /var/log/syslog | head -10 1152:2025-08-31T21:00:37.705303+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: BEGIN TRANSACTION; 1153:2025-08-31T21:00:37.705386+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: CREATE TABLE [heartbeat](#015 1162:2025-08-31T21:00:37.705789+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(1,1,1,1,'200 - OK','2025-03-27 23:26:53.602',566,0,0); 1163:2025-08-31T21:00:37.705828+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(2,0,1,1,'200 - OK','2025-03-27 23:27:54.295',167,60,0); 1164:2025-08-31T21:00:37.705864+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(3,0,1,1,'200 - OK','2025-03-27 23:28:54.506',247,60,0); 1165:2025-08-31T21:00:37.705930+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(4,0,1,1,'200 - OK','2025-03-27 23:29:54.801',441,60,0); 1166:2025-08-31T21:00:37.705973+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(5,0,1,1,'200 - OK','2025-03-27 23:30:55.259',200,60,0); 1167:2025-08-31T21:00:37.706010+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(6,0,1,1,'200 - OK','2025-03-27 23:31:55.486',162,60,0); 1168:2025-08-31T21:00:37.706033+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(7,0,1,1,'200 - OK','2025-03-27 23:32:55.691',161,60,0); 1169:2025-08-31T21:00:37.706057+00:00 ubuntu-cloudron-16gb-nbg1-3 d6750120460b[1123]: INSERT INTO heartbeat VALUES(8,0,1,1,'200 - OK','2025-03-27 23:33:55.899',129,60,0);I'm interested to know if someone can validate this observation on another Cloudron instance ideally with an existing and long running Kuma instance:

Reproduction path

- Install Uptime Kuma on Cloudron

- Trigger a backup

- Watch /var/log/syslog: you’ll see CREATE TABLE heartbeat + endless INSERT lines

Root Cause

Backup script calls sqlite3 .dump → stdout → journald → rsyslog → syslog file. Logging pipelines aren’t designed for multi-hundred MB database dumps.Impact

- /var/log/syslog bloats to multi-GB

- Disk space wasted, logrotate churn

- Actual logs are drowned in noise

Fix?

- Don’t stream .dump to stdout. Redirect to file, or use .backup. Silence the dump in logs?

@SansGuidon good sleuthing. I don't currently have an instance of Uptime Kuma running so can't assist but hopefully others can.

-

That is some good investigation indeed. I tried to reproduce this, but given that Cloudron isn't using syslog as such at all, I am not sure how to reproduce this and what makes it log to syslog in your case. But maybe I am missing something obvious or have you somehow adjusted the docker configs around logging on that instance?

-

I've no idea, my setup seems to use journald which could be a default and root cause of such issues

root@ubuntu-cloudron-16gb-nbg1-3:~# docker info | grep 'Logging Driver' Logging Driver: journaldam I alone with this setup? I've no memory about configuring this behavior for logging driver.

-

I've no idea, my setup seems to use journald which could be a default and root cause of such issues

root@ubuntu-cloudron-16gb-nbg1-3:~# docker info | grep 'Logging Driver' Logging Driver: journaldam I alone with this setup? I've no memory about configuring this behavior for logging driver.

@SansGuidon said in After Ubuntu 22/24 Upgrade syslog getting spammed and grows way to much clogging up the diskspace:

I alone with this setup?

Nope. I see to have the same:

root@Ubuntu-2204-jammy-amd64-base ~ # docker info | grep 'Logging Driver' Logging Driver: journald