Able to clean up binlog.###### files in /var/lib/mysql directory?

-

@d19dotca Interesting. I haven't seen this before (and 12G seems massive). Can you try PURGE BINARY LOGS (https://dev.mysql.com/doc/refman/8.0/en/purge-binary-logs.html)? You can get MySQL access using the following (type it as-is):

```
mysql -uroot -ppassword
```

-

@girish In one of the products I support at my day job, we have a script that runs as a cron job to maintain the binlog files. I suspect something similar may be needed to clean up MySQL binlogs on Ubuntu 20.04, perhaps set up by Cloudron at install time? Just a thought anyway.

Typically it's okay to just delete those files (and in my experience they're normally only created when replication is enabled between two MySQL servers), but I wasn't certain whether this would break Cloudron, and since it's my production server I didn't want to remove them yet just in case. It sounds like I can, then.
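For the cron-job idea, here is a minimal Python sketch, assuming the `mysql` client is on the PATH and the root/password login from this thread works. Rather than deleting files by hand, it keeps only the newest N binary logs using `SHOW BINARY LOGS` and `PURGE BINARY LOGS TO`, so MySQL keeps binlog.index consistent itself. The file name keep_binlogs.py and its functions are hypothetical, not part of Cloudron.

```python
# keep_binlogs.py (hypothetical): keep only the newest N binary logs.
# Assumes the mysql CLI is installed and accepts -uroot -ppassword.
import subprocess
import sys
from typing import List, Optional

MYSQL = ["mysql", "-uroot", "-ppassword", "-N", "-B"]


def list_binlogs() -> List[str]:
    # SHOW BINARY LOGS prints one row per file, oldest first;
    # the first tab-separated column is the file name.
    out = subprocess.run(
        MYSQL + ["-e", "SHOW BINARY LOGS"],
        check=True, capture_output=True, text=True,
    ).stdout
    return [line.split("\t")[0] for line in out.splitlines() if line.strip()]


def purge_to_statement(names: List[str], keep: int) -> Optional[str]:
    """Return a PURGE statement that keeps the newest `keep` logs, or None."""
    if keep < 1:
        raise ValueError("keep must be at least 1 (the active log cannot be purged)")
    if len(names) <= keep:
        return None
    # PURGE BINARY LOGS TO 'x' removes every log listed before x; x itself stays.
    return f"PURGE BINARY LOGS TO '{names[-keep]}';"


def run(keep: int) -> None:
    stmt = purge_to_statement(list_binlogs(), keep)
    if stmt:
        subprocess.run(MYSQL + ["-e", stmt], check=True)


# Only act when an explicit count is passed, so a bare run does nothing.
if __name__ == "__main__" and len(sys.argv) > 1:
    run(int(sys.argv[1]))
```

It could then be scheduled with something like `0 * * * * python3 /usr/local/bin/keep_binlogs.py 24` (path and schedule are only examples).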

-

Thinking out loud here, but there are a couple of things I'm wondering about (and will try to test what I can):

- Is this issue unique to my instance, or is it happening to other Cloudron instances as well?

- If it is happening to other instances, is the common factor Ubuntu 20.04 or MySQL 8.x?

- If others have this issue, then I suspect we need to enable something during Cloudron setup to handle this out of the box, or ideally just disable binlogs (if possible).

-

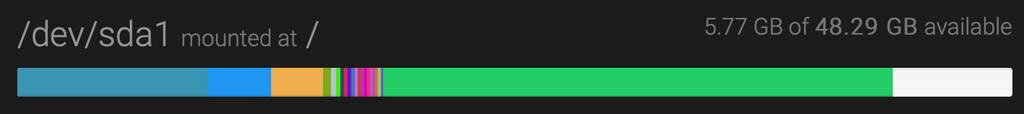

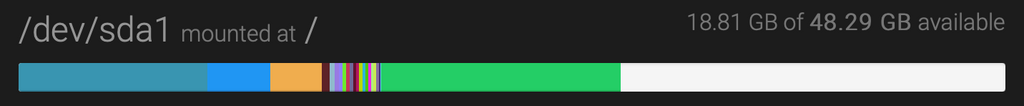

I ran the following commands to temporarily reclaim the disk space (which was 14 GB today, so about 2 GB higher in just 24 hours).

mysql -uroot -ppasswordPURGE BINARY LOGS BEFORE '2021-02-21 23:00:00';(replace the date with today's date of course when running it in the future for anyone stumbling across this post)

Running the above gave me over 13 GB back, with the

/var/lib/mysqldirectory taking up just over 600 MB now instead fo the 14 GB.Of course, I expect this will be an issue going forward still and will need to find a more permanent solution.

Screenshots for reference on disk space usage before & after:
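To avoid editing the date by hand each time, the cutoff can be computed. A small Python sketch that builds the same statement for "everything older than N hours"; it assumes the MySQL session runs in UTC, which the `default_time_zone='+00:00'` line in the my.cnf posted later in this thread suggests. The function name is illustrative.

```python
from datetime import datetime, timedelta, timezone


def purge_before_statement(now: datetime, keep_hours: int) -> str:
    """Build PURGE BINARY LOGS BEFORE '<now - keep_hours>'.

    `now` should be expressed in the MySQL session time zone (UTC assumed).
    """
    cutoff = now - timedelta(hours=keep_hours)
    # MySQL expects 'YYYY-MM-DD hh:mm:ss' for the datetime literal.
    return f"PURGE BINARY LOGS BEFORE '{cutoff:%Y-%m-%d %H:%M:%S}';"


if __name__ == "__main__":
    # Prints a statement to paste into the mysql prompt.
    print(purge_before_statement(datetime.now(timezone.utc), keep_hours=24))
```

Alternatively, the server can compute the cutoff itself with `PURGE BINARY LOGS BEFORE NOW() - INTERVAL 1 DAY;`, which sidesteps the time zone question entirely.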

-

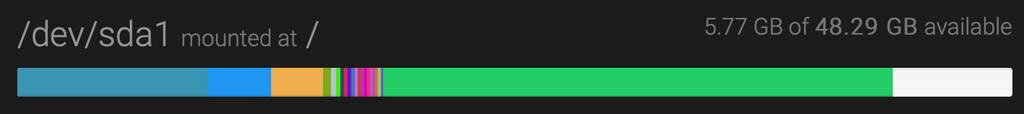

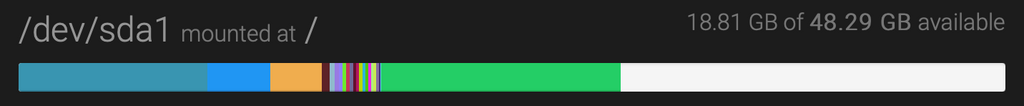

I ran the following commands to temporarily reclaim the disk space (which was 14 GB today, so about 2 GB higher in just 24 hours).

mysql -uroot -ppasswordPURGE BINARY LOGS BEFORE '2021-02-21 23:00:00';(replace the date with today's date of course when running it in the future for anyone stumbling across this post)

Running the above gave me over 13 GB back, with the

/var/lib/mysqldirectory taking up just over 600 MB now instead fo the 14 GB.Of course, I expect this will be an issue going forward still and will need to find a more permanent solution.

Screenshots for reference on disk space usage before & after:

-

@d19dotca do you still see it growing after you cleaned up? We haven't had any other reports like this.

@girish Yes, the issue is still present. Here's the current output:

```
-rw-r----- 1 mysql mysql 101M Feb 21 23:23 binlog.000145
-rw-r----- 1 mysql mysql 38M Feb 22 00:00 binlog.000146
-rw-r----- 1 mysql mysql 101M Feb 22 01:36 binlog.000147
-rw-r----- 1 mysql mysql 101M Feb 22 03:13 binlog.000148
-rw-r----- 1 mysql mysql 101M Feb 22 04:50 binlog.000149
-rw-r----- 1 mysql mysql 101M Feb 22 06:32 binlog.000150
-rw-r----- 1 mysql mysql 101M Feb 22 08:18 binlog.000151
-rw-r----- 1 mysql mysql 101M Feb 22 10:05 binlog.000152
-rw-r----- 1 mysql mysql 101M Feb 22 11:52 binlog.000153
-rw-r----- 1 mysql mysql 101M Feb 22 13:38 binlog.000154
-rw-r----- 1 mysql mysql 101M Feb 22 15:25 binlog.000155
-rw-r----- 1 mysql mysql 101M Feb 22 17:12 binlog.000156
-rw-r----- 1 mysql mysql 101M Feb 22 18:58 binlog.000157
-rw-r----- 1 mysql mysql 101M Feb 22 20:42 binlog.000158
-rw-r----- 1 mysql mysql 101M Feb 22 22:23 binlog.000159
-rw-r----- 1 mysql mysql 98M Feb 23 00:00 binlog.000160
-rw-r----- 1 mysql mysql 101M Feb 23 01:38 binlog.000161
-rw-r----- 1 mysql mysql 101M Feb 23 03:14 binlog.000162
-rw-r----- 1 mysql mysql 101M Feb 23 04:52 binlog.000163
-rw-r----- 1 mysql mysql 101M Feb 23 06:32 binlog.000164
-rw-r----- 1 mysql mysql 101M Feb 23 08:13 binlog.000165
-rw-r----- 1 mysql mysql 101M Feb 23 09:53 binlog.000166
-rw-r----- 1 mysql mysql 101M Feb 23 11:33 binlog.000167
-rw-r----- 1 mysql mysql 101M Feb 23 13:13 binlog.000168
-rw-r----- 1 mysql mysql 101M Feb 23 14:53 binlog.000169
-rw-r----- 1 mysql mysql 101M Feb 23 16:33 binlog.000170
-rw-r----- 1 mysql mysql 101M Feb 23 18:12 binlog.000171
-rw-r----- 1 mysql mysql 101M Feb 23 19:51 binlog.000172
-rw-r----- 1 mysql mysql 101M Feb 23 21:31 binlog.000173
-rw-r----- 1 mysql mysql 8.0M Feb 23 21:38 binlog.000174
```

Is it possible to grab a copy of your MySQL my.cnf file so I can compare? Maybe it's something weird about the default Ubuntu 20.04 image at OVH.
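To put a number on the growth, here is a rough Python sketch that parses `ls -lh` lines like the ones above and totals binlog sizes per modification day, plus a back-of-the-envelope steady-state estimate (daily volume times retention). `ls -lh` rounds sizes and a file's date is when it was last written, so treat the results as approximations. The function names are made up for illustration.

```python
import re
from collections import defaultdict
from typing import Dict

# `ls -lh` size suffixes are powers of 1024.
UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}

# Matches "... 101M Feb 22 01:36 binlog.000147"; binlog.index is skipped.
LINE_RE = re.compile(
    r"(?P<size>\d+(?:\.\d+)?)(?P<unit>[KMG]?)\s+"
    r"(?P<month>[A-Z][a-z]{2})\s+(?P<day>\d{1,2})\s+\d{1,2}:\d{2}\s+"
    r"binlog\.\d+$"
)


def bytes_per_day(listing: str) -> Dict[str, int]:
    """Approximate binlog bytes per modification day from `ls -lh` output."""
    totals: Dict[str, int] = defaultdict(int)
    for line in listing.splitlines():
        m = LINE_RE.search(line.strip())
        if m:
            size = float(m.group("size")) * UNITS[m.group("unit")]
            totals[f"{m.group('month')} {int(m.group('day'))}"] += int(size)
    return dict(totals)


def steady_state_bytes(daily_bytes: float, retention_seconds: int) -> float:
    """Rough disk use once expiry keeps up: daily volume x retention in days."""
    return daily_bytes * retention_seconds / 86400
```

Paste the listing into a string and call `bytes_per_day(listing)`; feeding one of those daily totals into `steady_state_bytes(total, 2592000)` gives a rough idea of where MySQL 8.0's default 30-day retention would settle.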

-

Found this... I may test this out: https://dba.stackexchange.com/questions/226269/disable-binary-logs-in-mysql-8-0

Curious: do you have `skip-log-bin` in your my.cnf file? I don't, and I suspect that's the root cause.

Here are the contents of my my.cnf file:

```
ubuntu@cloudron:~$ cat /etc/mysql/my.cnf
!includedir /etc/mysql/conf.d/
!includedir /etc/mysql/mysql.conf.d/

# http://bugs.mysql.com/bug.php?id=68514
[mysqld]
performance_schema=OFF
max_connections=50
# on ec2, without this we get a sporadic connection drop when doing the initial migration
max_allowed_packet=64M

# https://mathiasbynens.be/notes/mysql-utf8mb4
character-set-server = utf8mb4
collation-server = utf8mb4_unicode_ci

# set timezone to UTC
default_time_zone='+00:00'

[mysqldump]
quick
quote-names
max_allowed_packet = 16M
default-character-set = utf8mb4

[mysql]
default-character-set = utf8mb4

[client]
default-character-set = utf8mb4
```

All of that is the default setup; I haven't manually changed any of it.
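One way to confirm whether binary logging is on, and with what retention, is to ask the running server instead of reading my.cnf, since MySQL 8.0 enables binlogging by default even when my.cnf says nothing about it. A minimal Python sketch, assuming the same `mysql -uroot -ppassword` access as above; `-N -B` makes the client print a bare tab-separated row. The helper names are illustrative.

```python
import subprocess
from typing import Dict

# Hypothetical helper: read the live binlog settings from the server.
QUERY = "SELECT @@log_bin, @@binlog_expire_logs_seconds, @@max_binlog_size"
FIELDS = ("log_bin", "binlog_expire_logs_seconds", "max_binlog_size")


def parse_settings(batch_output: str) -> Dict[str, int]:
    """Parse the single tab-separated row printed by `mysql -N -B -e`."""
    values = batch_output.strip().split("\t")
    return dict(zip(FIELDS, (int(v) for v in values)))


def read_settings() -> Dict[str, int]:
    # Assumes the root/password login used elsewhere in this thread.
    out = subprocess.run(
        ["mysql", "-uroot", "-ppassword", "-N", "-B", "-e", QUERY],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_settings(out)
```

If `read_settings()` reports `log_bin` as 1 and `binlog_expire_logs_seconds` as 2592000, binlogging is on with the 30-day default; with `skip-log-bin` in effect, `log_bin` should read 0.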

-

@d19dotca Ah, this seems to be some MySQL 8 / Ubuntu 20.04 thing. It generates these binlogs on my test Cloudron as well:

```
-rw-r----- 1 mysql mysql 179 Feb 15 20:42 binlog.000001
-rw-r----- 1 mysql mysql 179 Feb 15 20:45 binlog.000002
-rw-r----- 1 mysql mysql 116K Feb 15 20:45 binlog.000003
-rw-r----- 1 mysql mysql 13M Feb 17 00:00 binlog.000004
-rw-r----- 1 mysql mysql 43M Feb 18 00:00 binlog.000005
-rw-r----- 1 mysql mysql 43M Feb 19 00:00 binlog.000006
-rw-r----- 1 mysql mysql 13M Feb 20 00:00 binlog.000007
-rw-r----- 1 mysql mysql 28M Feb 21 00:00 binlog.000008
-rw-r----- 1 mysql mysql 2.8M Feb 22 00:00 binlog.000009
-rw-r----- 1 mysql mysql 13M Feb 23 00:00 binlog.000010
-rw-r----- 1 mysql mysql 14M Feb 23 21:41 binlog.000011
-rw-r----- 1 mysql mysql 176 Feb 23 00:00 binlog.index
```

-

@girish Right. Yeah, I figured it was a weird issue in MySQL or Ubuntu 20.04, since both of those changed when I moved servers earlier last week. I suspect the `skip-log-bin` option should be added to the my.cnf file; my gut tells me that should do the trick. This also seems to be explained in the official documentation, and one answer in that earlier post highlighted it: https://dba.stackexchange.com/a/228959

In fact, comparing the MySQL documentation for 5.7 and 8.0, it seems binary logging is enabled by default in 8.0, whereas it was not in 5.7.

-

@d19dotca We can't skip the binlog because it's used for DB recovery. But in the MySQL addon we set `max_binlog_size = 100M` and also `expire_logs_days = 10`, so I will put this in the main MySQL config as well. See https://dev.mysql.com/doc/refman/8.0/en/binary-log.html for why the binlog matters.

edit: it's now `binlog_expire_logs_seconds` (see https://dev.mysql.com/worklog/task/?id=10924)

-

@girish Sounds good. I'm surprised that binlogs are needed for database recovery in a non-replicated scenario. At my day job, if we need to recover a database, we just take the mysqldump we created and import it with the mysql command; nothing is needed on the binlog side of things. Maybe you do it differently than I'm used to, though.

If I edit the my.cnf file, will a future Cloudron update overwrite it? I'm trying to decide whether to add it now or just wait for the update, so I don't mess up the update at all.
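For context on how binlogs usually help with recovery outside of replication: the general MySQL point-in-time recovery technique is to restore the last mysqldump and then replay the binlog events written after that dump with `mysqlbinlog`. This is a sketch of that generic technique, not a description of what Cloudron does; the start time and file list are placeholders you would have to pick yourself, and the helper name is hypothetical.

```python
import shlex
from typing import List


def replay_command(binlogs: List[str], start_datetime: str) -> str:
    """Build a shell pipeline that replays binlog events after a dump.

    Step 1 (not shown): restore the most recent mysqldump.
    Step 2: pipe everything logged since that dump back into mysql.
    All files go to one mysqlbinlog call so they replay over a single
    connection, as the mysqlbinlog documentation recommends.
    """
    files = " ".join(shlex.quote(f) for f in binlogs)
    return (
        f"mysqlbinlog --start-datetime={shlex.quote(start_datetime)} {files}"
        " | mysql -uroot -ppassword"
    )


if __name__ == "__main__":
    print(replay_command(
        ["/var/lib/mysql/binlog.000173", "/var/lib/mysql/binlog.000174"],
        "2021-02-23 19:51:00",  # placeholder: when the dump was taken
    ))
```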

-

@d19dotca Same. We also always use only mysqldump for recovery and for major MySQL upgrades (like the upcoming one from 5.7 to 8.0). Generally, I don't trust those direct data-directory upgrade procedures.

But the recovery stuff is sometimes useful when the disk gets corrupted and we want to recover quickly.

From what I can make out, this issue has surfaced because `expire_logs_days`, which was set to 10, is now deprecated and replaced with `binlog_expire_logs_seconds`, which has a default of 30 days (2592000 seconds)...

If I edit the my.cnf file, will a future Cloudron update overwrite it? Trying to see if I should add it in now or just wait for the update so I don't mess up the update part at all.

Yes, the my.cnf will be overwritten. You can just add it; it's fine, since the update will merely overwrite it with the same config. Currently, I am testing with 100M for `max_binlog_size` and 432000 (5 days) for `binlog_expire_logs_seconds`.
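The two settings being tested can be written as a `[mysqld]` fragment. A small Python sketch that renders it with the standard-library configparser, converting days to seconds because `binlog_expire_logs_seconds` takes seconds (5 days = 5 x 86400 = 432000). Where the fragment ends up (my.cnf or an included file) is up to the reader; the function name is illustrative.

```python
import configparser
import io


def binlog_config(max_size: str = "100M", expire_days: float = 5) -> str:
    """Render a [mysqld] fragment with the binlog settings from this thread."""
    cfg = configparser.ConfigParser()
    cfg["mysqld"] = {
        "max_binlog_size": max_size,
        # MySQL 8.0 replacement for the deprecated expire_logs_days.
        "binlog_expire_logs_seconds": str(int(expire_days * 86400)),
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(binlog_config(), end="")
```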

-

Okay, this seems to have worked. I took what you did in git but made it 6 hours (21600 seconds) instead, at least as a test. I restarted the mysql service and now many of the binlogs are gone.

Side question: I tried that earlier and restarted MySQL from the Cloudron Services page, and it didn't seem to work. However, when I restarted mysql on the command line, it did. Is there a difference between those two?
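On the restart point: the MySQL reference manual says expired binlogs are removed at server startup and when the binary log is flushed, so a new expiry value only frees space at one of those moments. A hedged sketch that applies the value without a restart, assuming the same root access; `SET GLOBAL` is lost on restart, so the my.cnf entry is still needed. The function names are illustrative.

```python
import subprocess
from typing import List


def runtime_statements(expire_seconds: int) -> List[str]:
    """SQL that sets binlog retention live and triggers an expiry check."""
    if expire_seconds <= 0:
        # 0 would switch automatic expiry off rather than purge everything.
        raise ValueError("expire_seconds must be positive")
    return [
        f"SET GLOBAL binlog_expire_logs_seconds = {int(expire_seconds)};",
        # Rotating the log is one of the points where expired files are removed.
        "FLUSH BINARY LOGS;",
    ]


def apply(expire_seconds: int) -> None:
    sql = " ".join(runtime_statements(expire_seconds))
    subprocess.run(["mysql", "-uroot", "-ppassword", "-e", sql], check=True)
```

`apply(21600)` would correspond to the 6-hour test described above.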

-

@girish said in Able to clean up binlog.###### files in /var/lib/mysql directory?:

432000 (5 days) for binlog_expire_logs_seconds

Out of curiosity, is there a need to keep 10 days of binlogs? I ask because I was seeing issues before the 10 days were up, so I'm worried that setting won't work for me (and presumably for at least a few others with well-used Cloudrons on servers with limited local disk space). I guess I can override it in my instance using a file in the included .d directories, or do those get overwritten too? If it can be a lot less (like 3 days or so), that'd be best I'd think, but maybe more time is needed for most environments and mine is just a fringe scenario given my limited disk space.
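If the right retention depends mostly on disk space, one rough way to pick it is to work backwards from how much space the binlogs may use. A minimal Python sketch; the budget, daily growth rate and one-file headroom rule are assumptions to adjust, and the function name is hypothetical. As for a drop-in file: whether Cloudron rewrites the included .d directories isn't answered in this thread, and since the `!includedir` lines sit at the top of my.cnf, a value set later in my.cnf's own `[mysqld]` section would likely take precedence over the same option in an included file.

```python
def max_retention_seconds(budget_bytes: float, daily_bytes: float,
                          max_binlog_size: float) -> int:
    """Longest binlog_expire_logs_seconds that should stay within budget_bytes.

    Expiry removes whole files only, and the active file keeps growing up to
    max_binlog_size, so one file's worth is held back as headroom.
    """
    usable = budget_bytes - max_binlog_size
    if usable <= 0 or daily_bytes <= 0:
        raise ValueError("budget must exceed one file and growth must be positive")
    return int(usable / daily_bytes * 86400)


if __name__ == "__main__":
    GIB, MIB = 1024 ** 3, 1024 ** 2
    # Hypothetical numbers: 3 GiB for binlogs, ~1.5 GiB written per day.
    print(max_retention_seconds(3 * GIB, 1.5 * GIB, 100 * MIB))
```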

-

@d19dotca As far as I understand the situation, it doesn't make much sense to keep binlogs for DB recovery that are older than the last backup (which contains the mysqldump for restore).
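Following that reasoning, the retention could be derived from the backup schedule instead of picked by feel. A tiny sketch; the safety factor (keeping logs across a missed or failed backup run) is an assumption, and the name is illustrative.

```python
def retention_for_backups(backup_interval_hours: float,
                          safety_factor: float = 2.0) -> int:
    """binlog_expire_logs_seconds covering the time since the last backup.

    safety_factor > 1 keeps logs across one missed or failed backup run;
    2.0 is an arbitrary illustrative default.
    """
    if backup_interval_hours <= 0 or safety_factor < 1:
        raise ValueError("need a positive interval and safety_factor >= 1")
    return int(backup_interval_hours * 3600 * safety_factor)


if __name__ == "__main__":
    print(retention_for_backups(24))  # daily backups, two days of binlogs
```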