Backups Failing Frequently

-

I use Backblaze B2 for backups.

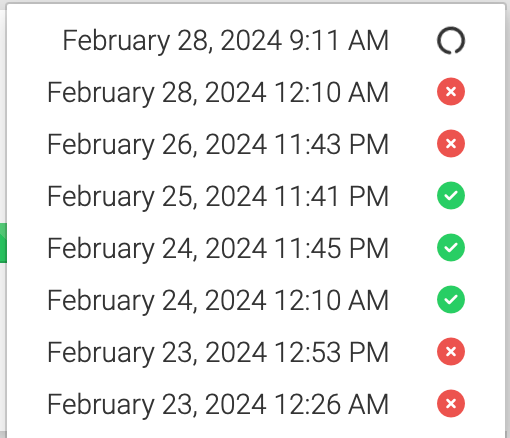

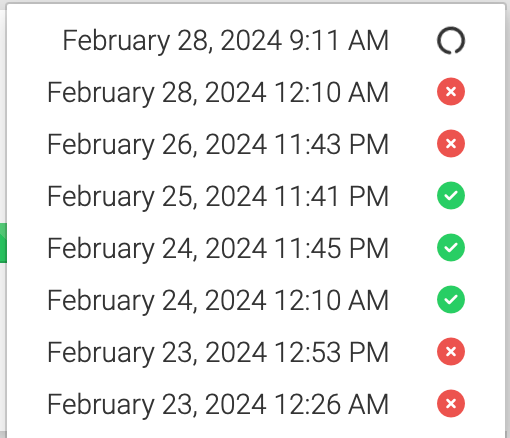

They have been failing more often than not in the last week.

The error is always "InternalError: CPU too busy", yet my usage graphs show the CPU never going above 50%.

Is this a Cloudron problem or a B2 problem?

-

I find that failed backups are usually caused by interrupted or slow network connections, not necessarily by the source or the destination.

-


InternalError: CPU too busy

@Dave-Swift OK, that's an interesting message! I've never seen that; I wasn't even aware one could detect that the CPU is too busy.

If you go back to the failing backups, what do their logs say? The last 100-200 lines might give us an idea of where it is failing.
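A minimal sketch of pulling the last 200 lines of a task log on the server, assuming logs live at `/home/yellowtent/platformdata/logs/tasks/<task id>.log` (the path printed by the `box:taskworker Starting task ...` line quoted later in this thread). `TASK_LOG_DIR`, `tail_lines` and `tail_task_log` are hypothetical names; `tail -n 200 <file>` in a shell does the same job.

```python
from collections import deque
from pathlib import Path

# Assumed location, taken from the "Logs are at /home/yellowtent/platformdata/logs/tasks/<id>.log"
# line in the task logs quoted in this thread. Adjust if your server differs.
TASK_LOG_DIR = Path("/home/yellowtent/platformdata/logs/tasks")


def tail_lines(path, n=200):
    """Return the last n lines of a text file, streaming it so large logs stay cheap."""
    with open(path, "r", encoding="utf-8", errors="replace") as f:
        # A deque with maxlen keeps only the most recent n lines seen while iterating.
        return [line.rstrip("\n") for line in deque(f, maxlen=n)]


def tail_task_log(task_id, n=200, log_dir=TASK_LOG_DIR):
    """Hypothetical helper: last n lines of the log for one backup task id."""
    return tail_lines(Path(log_dir) / f"{task_id}.log", n)
```

For example, `print("\n".join(tail_task_log(713)))` would print the end of the log for task 713.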


girish marked this topic as a question.


-

@girish, here is the most recent one:

Mar 18 00:49:48 box:tasks update 713: {"percent":21,"message":"Uploading backup 25376M@1MBps (example.com)"}

Mar 18 00:49:58 box:tasks update 713: {"percent":21,"message":"Uploading backup 25386M@1MBps (example.com)"}

Mar 18 00:50:05 box:shell backup-snapshot/app_a64992c5-6f6d-401e-9edc-fa8d1f52adfa: /usr/bin/sudo -S -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_a64992c5-6f6d-401e-9edc-fa8d1f52adfa tgz {"localRoot":"/home/yellowtent/appsdata/a64992c5-6f6d-401e-9edc-fa8d1f52adfa","layout":[]} errored BoxError: backup-snapshot/app_a64992c5-6f6d-401e-9edc-fa8d1f52adfa exited with code 1 signal null

Mar 18 00:50:05 box:tasks setCompleted - 713: {"result":null,"error":{"stack":"BoxError: Backuptask crashed\n at runBackupUpload (/home/yellowtent/box/src/backuptask.js:163:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async uploadAppSnapshot (/home/yellowtent/box/src/backuptask.js:360:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:382:5)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:503:29)","name":"BoxError","reason":"Internal Error","details":{},"message":"Backuptask crashed"}}

Mar 18 00:50:05 box:tasks update 713: {"percent":100,"result":null,"error":{"stack":"BoxError: Backuptask crashed\n at runBackupUpload (/home/yellowtent/box/src/backuptask.js:163:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async uploadAppSnapshot (/home/yellowtent/box/src/backuptask.js:360:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:382:5)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:503:29)","name":"BoxError","reason":"Internal Error","details":{},"message":"Backuptask crashed"}}

Mar 18 00:50:05 box:taskworker Task took 6604.595 seconds

[no timestamp] Backuptask crashed

-

Mar 19 09:49:52 box:taskworker Starting task 1060. Logs are at /home/yellowtent/platformdata/logs/tasks/1060.log

Mar 19 09:49:52 box:tasks update 1060: {"percent":1,"message":"Backing up netdata.example.com (1/2)"}

Mar 19 09:49:52 box:tasks update 1060: {"percent":21,"message":"Snapshotting app netdata.example.com"}

Mar 19 09:49:52 box:backuptask snapshotApp: netdata.example.com took 0.026 seconds

Mar 19 09:49:52 box:services backupAddons

Mar 19 09:49:52 box:services backupAddons: backing up ["localstorage","proxyAuth"]

Mar 19 09:49:52 box:tasks update 1060: {"percent":21,"message":"Uploading app snapshot netdata.example.com"}

Mar 19 09:49:52 box:shell backup-snapshot/app_03cfa8c6-9930-4b76-8604-a9de46be6f08 /usr/bin/sudo -S -E --close-from=4 /home/yellowtent/box/src/scripts/backupupload.js snapshot/app_03cfa8c6-9930-4b76-8604-a9de46be6f08 tgz {"localRoot":"/home/yellowtent/appsdata/03cfa8c6-9930-4b76-8604-a9de46be6f08","layout":[]}

Mar 19 09:50:03 box:tasks update 1060: {"percent":21,"message":"Uploading backup 41M@4MBps (netdata.example.com)"}

Mar 19 09:50:13 box:tasks update 1060: {"percent":21,"message":"Uploading backup 55M@1MBps (netdata.example.com)"}

[...]

Mar 19 13:51:39 box:tasks update 1060: {"percent":21,"message":"Uploading backup 55M@0MBps (netdata.example.com)"}

On my side, the backup is stuck on this line (it has been like this for a while now).

I don't know if it's related, but the percentage is the same and the symptom seems identical (no upload speed).

And my backup goes to the local filesystem, so it shouldn't be a network problem.

-

I've also had similar issues with backups over the past few days.

2024-03-20T08:58:00.790Z box:shell backup-snapshot/app_c4a27e93-af6e-44e2-b7cf-9056fa01c5c3 code: null, signal: SIGKILL

2024-03-20T08:58:00.792Z box:taskworker Task took 10678.952 seconds

2024-03-20T08:58:00.793Z box:tasks setCompleted - 16169: {"result":null,"error":{"stack":"BoxError: Backuptask crashed\n at runBackupUpload (/home/yellowtent/box/src/backuptask.js:164:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async uploadAppSnapshot (/home/yellowtent/box/src/backuptask.js:361:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:383:5)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:504:29)","name":"BoxError","reason":"Internal Error","details":{},"message":"Backuptask crashed"}}

2024-03-20T08:58:00.793Z box:tasks update 16169: {"percent":100,"result":null,"error":{"stack":"BoxError: Backuptask crashed\n at runBackupUpload (/home/yellowtent/box/src/backuptask.js:164:15)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async uploadAppSnapshot (/home/yellowtent/box/src/backuptask.js:361:5)\n at async backupAppWithTag (/home/yellowtent/box/src/backuptask.js:383:5)\n at async fullBackup (/home/yellowtent/box/src/backuptask.js:504:29)","name":"BoxError","reason":"Internal Error","details":{},"message":"Backuptask crashed"}}

BoxError: Backuptask crashed

It would be nice if the error, or the code it points to, had more information about what happened. As best I can tell, it got killed somehow. I increased the backup task memory and will see what happens next time.

Edit: it didn't crash yesterday, so maybe it just needed more RAM.
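The two crash logs in this thread end differently: in one the upload process `exited with code 1 signal null`, in the other it ended with `code: null, signal: SIGKILL`. The sketch below tells the two apart; `classify_failure` is a hypothetical helper that only knows the two line shapes quoted here. A process that exits with a status failed on its own, while SIGKILL cannot be caught and is what the Linux OOM killer sends, which fits the observation that more memory helped. Treat that as a hint rather than a diagnosis; the kernel log (`dmesg` or `journalctl -k`) would show an actual OOM kill.

```python
import re

# Line shapes taken from the two box:shell failures quoted in this thread:
#   "... exited with code 1 signal null"
#   "... code: null, signal: SIGKILL"
EXIT_RE = re.compile(r"exited with code (?P<code>\w+) signal (?P<signal>\w+)")
KILL_RE = re.compile(r"code: (?P<code>\w+), signal: (?P<signal>\w+)")


def classify_failure(line):
    """Return ('signal', name), ('exit', status) or None for a box:shell log line."""
    m = EXIT_RE.search(line) or KILL_RE.search(line)
    if m is None:
        return None
    code, signal = m.group("code"), m.group("signal")
    if signal != "null":
        # Terminated by a signal; SIGKILL in particular is what the OOM killer sends.
        return ("signal", signal)
    # Exited on its own; the reason should be in the lines logged just before.
    return ("exit", int(code) if code.isdigit() else None)
```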

-

I got that “Uploading backup ***M@0MBps” too. I use Contabo Object Storage, but it also happened with Backblaze and Hetzner Storage Box. On some days it seems to get stuck for 8 hours until I cancel it. Additional information: I use tgz backups with encryption enabled.
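Several posts describe the same symptom: the "Uploading backup NNM@0MBps" progress line keeps repeating while the uploaded size stops changing. Below is a sketch of a hypothetical checker that scans task log lines and reports the longest stretch without progress. It assumes the `Mon DD HH:MM:SS` timestamps of the logs quoted above (they carry no year, so the year is a parameter) and an arbitrary 30-minute threshold.

```python
import re
from datetime import datetime, timedelta

# Matches progress lines such as:
#   Mar 19 09:50:13 box:tasks update 1060: {"percent":21,"message":"Uploading backup 55M@1MBps (netdata.example.com)"}
PROGRESS_RE = re.compile(
    r'^(?P<ts>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) .*"Uploading backup (?P<mb>\d+)M@'
)


def find_stall(lines, threshold=timedelta(minutes=30), year=2024):
    """Return (last_progress_time, size_mb, stalled_for) for the longest period in which
    the uploaded size did not change, if it reaches threshold; otherwise None."""
    last_size = None
    last_progress = None
    worst = None
    for line in lines:
        m = PROGRESS_RE.search(line)
        if m is None:
            continue
        # Syslog-style timestamps omit the year, so the caller supplies it.
        ts = datetime.strptime(f"{year} {m.group('ts')}", "%Y %b %d %H:%M:%S")
        size = int(m.group("mb"))
        # Any change in size (including a reset when the next app starts) counts as progress.
        if last_size is None or size != last_size:
            last_size, last_progress = size, ts
            continue
        stalled_for = ts - last_progress
        if stalled_for >= threshold and (worst is None or stalled_for > worst[2]):
            worst = (last_progress, size, stalled_for)
    return worst
```

On the netdata log quoted earlier, this would flag the upload as stuck at 55M for about four hours.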

-

@Meuschke I'm facing issues with Contabo storage and stuck Cloudron backups as well. Did you find a solution?

-

Hi @nebulon, I'm running the latest Cloudron by default, but no, I can't tie the problem to a specific app. It seems to affect apps randomly.

Here the backup task was stuck for 10 hours in the copy step for one of the 21 apps. Still 19 to go...

Oct 03 00:27:14 box:backuptask runBackupUpload: result - {"result":""}

Oct 03 00:27:14 box:backuptask uploadAppSnapshot: calibre.**REDACTED** uploaded to snapshot/app_00d005bc-a2ea-43a2-a3b9-30f028f26ce3. 32.537 seconds

Oct 03 00:27:14 box:backuptask rotateAppBackup: rotating calibre.**REDACTED** to path 2024-10-02-222642-273/app_calibre.**REDACTED**_v1.4.10

Oct 03 00:27:15 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying with concurrency of 10"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying files from 0-14"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/jrJgqfYv69tbac6fVBFtxdljnGrWninV5aJyOjrOG8s/6Z4pOMIpGTIyl3Zxi9zzn5Z5Z3J-h9T90BMaJIksycI"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/DeKFwwiKOYjuA47xr1mZBfh69jggmLJ8l-5r5hw1offdPUKYU5osRjaBaW8aEfFuMPMu-+Hb-z1vk7Bs-F2ysCkum+eTNkieV4Ts6l1VD00"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/wHCrqlLCtoEEa003gLqn0x5LcOeF61iwx9M+Y7wsQH3zpKRUA3FTOj+Xq66-MqMKjoymoy4k0psK1i-8sAYyiv1wuS-SoXLA1+kJbe3fsMg"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/fd4UifKQ0OB46+3ScxT00rIq9OHrN8eKpgbCRaG1kYk"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/uBn1qiDlCNedHt4XTcAD287edwzLjz48Ms5LmF2mmTy5RTa34rwUcbmJoopxxQLAzPH6AUtnFd4B03XlqGadc80-7tbatyW6tsjVgblU4ug"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/tA0uGbXkr090X35m9ZJMHC5B2oXd1yUekTfHxEW34Bk"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/Odt4iwHyWPWzX0JohDmSN5i8Ui0ViroHlpbtzqBaO+A"}

Oct 03 00:27:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/Tpo9QFNbimCemAb5UnJnXwyS8rBZT0PG+OrMuUUVoRw"}

Oct 03 00:27:29 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/rzafhF-yh4kD72joSHsuv0JJ-ppVopfynXP+PCgEj9I"}

Oct 03 00:27:29 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/sXvd51xBzqb7NSNHyFclg--cwqJdSWNfPu-1Kwomr+o"}

Oct 03 00:27:29 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying 9zpGQTS3UZche6GQ47tXzP7EaJyoUuGEs+fostTm5XI"}

Oct 03 00:27:29 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copying fQ+nHrDglHMMUKO1bl2UGlC-rBFqid8BdQ7cnt3Jruo"}

Oct 03 01:57:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (1) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/Odt4iwHyWPWzX0JohDmSN5i8Ui0ViroHlpbtzqBaO+A. Error: 504: null 504"}

Oct 03 01:57:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (1) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/fd4UifKQ0OB46+3ScxT00rIq9OHrN8eKpgbCRaG1kYk. Error: 504: null 504"}

Oct 03 01:57:18 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (1) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: 504: null 504"}

Oct 03 03:27:38 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (2) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/fd4UifKQ0OB46+3ScxT00rIq9OHrN8eKpgbCRaG1kYk. Error: 504: null 504"}

Oct 03 03:27:38 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (2) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/Odt4iwHyWPWzX0JohDmSN5i8Ui0ViroHlpbtzqBaO+A. Error: 504: null 504"}

Oct 03 03:27:38 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (2) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: 504: null 504"}

Oct 03 04:57:57 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (3) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/Odt4iwHyWPWzX0JohDmSN5i8Ui0ViroHlpbtzqBaO+A. Error: 504: null 504"}

Oct 03 04:57:57 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (3) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/fd4UifKQ0OB46+3ScxT00rIq9OHrN8eKpgbCRaG1kYk. Error: 504: null 504"}

Oct 03 04:57:58 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (3) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: 504: null 504"}

Oct 03 06:28:17 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (4) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/r4rCRGLG-KOXkY5Q8GZm57Nrr-JyQJeyPwfZCRcDxrI/Vnx7IX3pMZ0Ejn1VNGj7R+nx8XAV6aj5HvMaQhOYUPzrpGUwy+kYh74M7Re5Kc91hsriTA6wN4vOX4TtiE6DBg/fd4UifKQ0OB46+3ScxT00rIq9OHrN8eKpgbCRaG1kYk. Error: 504: null 504"}

Oct 03 06:28:17 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (4) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/zLE6me3TZuVJe4coiSls7F+tBBEAPFOuPxMI6l6xKGY/Odt4iwHyWPWzX0JohDmSN5i8Ui0ViroHlpbtzqBaO+A. Error: 504: null 504"}

Oct 03 06:28:17 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (4) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: 504: null 504"}

Oct 03 07:58:37 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (5) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: 504: null 504"}

Oct 03 09:28:57 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (6) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: 504: null 504"}

Oct 03 10:36:03 box:tasks update 2700: {"percent":5.166666666666667,"message":"Retrying (7) copy of +-SqAUe7wm42qtKljEa0rA5Org2aerAP0dRZ+xrfGA8/hBVH2XnNw+prJYmkyuv9hmGRbtOX-CXOWAWKdeyIVPw/otRz4aJIm6OFqv7zzyRnxh-aZfFLzkqN4KYri8ZQTlA/P3nNt8Vpr4u6OpFvII+VL6i827eBADNfyfOC9JndV91FiIE6Los49XGvdQYsNB890ZX9k33XZn2-YcMNGhxVklWvuYYeOLm3dOhnCHCkeuaN+woWOUUPIokIjhj2JESlspSUa7+1Ejhwgw5-FsV2dg/ZKv-vNRppOn5xsg3bkOA1KA7+8-CtXL9aVky0zWZMwk. Error: XMLParserError: Non-whitespace before first tag.\nLine: 0\nColumn: 1\nChar: { 502"}

Oct 03 10:36:26 box:tasks update 2700: {"percent":5.166666666666667,"message":"Copied 14 files with error: null"}

Oct 03 10:36:26 box:backuptask copy: copied successfully to 2024-10-02-222642-273/app_calibre.**REDACTED**_v1.4.10. Took 36551.567 seconds

Oct 03 10:36:26 box:backuptask fullBackup: app calibre.**REDACTED** backup finished. Took 36584.151 seconds

Oct 03 10:36:26 box:tasks update 2700: {"percent":5.166666666666667,"message":"Backing up syncthing.**REDACTED** (2/21)"}

Oct 03 10:36:26 box:tasks update 2700: {"percent":9.333333333333334,"message":"Snapshotting app syncthing.**REDACTED**"}

Oct 03 10:36:26 box:services backupAddons

Oct 03 10:36:26 box:services backupAddons: backing up ["localstorage","ldap"]

...

And of course, many backup cleanup tasks are also stuck or queued.
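When a log is dominated by retries like the one above, it can help to reduce it to one entry per object: the highest attempt number reached and the error from that attempt. A sketch of a hypothetical summarizer, assuming only the `Retrying (N) copy of <key>. Error: <message>` wording seen in this log; on this log it would list three objects, one of which reaches attempt 7.

```python
import re

# Matches messages such as:
#   "Retrying (3) copy of +-SqAU.../Odt4iw.... Error: 504: null 504"
RETRY_RE = re.compile(r'Retrying \((?P<n>\d+)\) copy of (?P<key>\S+)\. Error: (?P<err>[^"]*)')


def summarize_retries(lines):
    """Map each retried object key to (highest attempt number seen, error from that attempt)."""
    summary = {}
    for line in lines:
        m = RETRY_RE.search(line)
        if m is None:
            continue
        key, attempt = m.group("key"), int(m.group("n"))
        if key not in summary or attempt >= summary[key][0]:
            summary[key] = (attempt, m.group("err"))
    return summary
```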

The logs are not very helpful for telling which command is blocking or how to troubleshoot it.

-

I've switched from Contabo to Hetzner for storage and backups; I'll let you know if that improves things.

-

Indeed, those errors are coming from the server: HTTP status 504. 5xx errors indicate that the upstream server (in this case Contabo) has issues. There is not much we can do on our side other than keep retrying. But since you moved to Hetzner anyway, I assume things work now.
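On the client side, "keep retrying" usually means retrying only server-side (5xx) failures, with growing, jittered delays and a cap on attempts. The sketch below illustrates that general pattern; it is not Cloudron's code, and `HTTPStatusError` and `retry_on_5xx` are hypothetical names. It does not bound how long each failed attempt takes: in the Contabo log above, consecutive retries of the same object are roughly 90 minutes apart, so a per-request timeout would likely matter as much as the retry count.

```python
import random
import time


class HTTPStatusError(Exception):
    """Hypothetical error type raised by an upload/copy call, carrying the HTTP status."""

    def __init__(self, status):
        super().__init__(f"HTTP {status}")
        self.status = status


def retry_on_5xx(op, max_attempts=5, base_delay=1.0, max_delay=60.0, sleep=time.sleep):
    """Call op(); on a 5xx error wait with capped exponential backoff plus full jitter
    and try again. Non-5xx errors, and the last 5xx error, are re-raised."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return op()
        except HTTPStatusError as err:
            if not 500 <= err.status <= 599 or attempt == max_attempts:
                raise
            # The delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
```

Passing `sleep` as a parameter keeps the function testable without real waiting.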

-

Things work like a charm indeed. I'll likely move the VPS over sooner or later, once I've decided where to go. I'm also looking for a good option for a CI/CD runner, and for a way to avoid most of the issues I faced with Contabo this past month.

-

joseph has marked this topic as solved.