Nextcloud backup crashes

-

Thanks Girish, I think I might have the largest Nextcloud on Cloudron. It has been working flawlessly, except for the present backup hiccup. I always feel so safe with Cloudron.

The support is priceless. As one of the earliest adopters, Cloudron has been my second-best decision ever.

-

@jagan thanks for the nice words

I think using a storage box is probably the best approach for backups instead of these object storage solutions. They are designed to serve reasonably sized objects, not 1.5TB objects.

You mentioned trying out Hetzner Storage Box, but is your server on Hetzner? I don't have much idea how well CIFS works across the "internet".

-

I got a Storage Box on Hetzner, my Cloudron is also on Hetzner.

But somehow I am unable to add the Storage Box to the backup. May I please pass the credentials on to you? If you can help me add it to the backup, I would be most grateful.

Will mail the details as a reply to the support mail.

-

@jagan ah perfect, let's try that then. Are the Hetzner storage and VPS in the same region? I know that other network providers (like AWS) also charge for outbound traffic! So, if we are transferring out TBs of data, then there is a cost.

-

The VPS and Storage are in the same region of Germany.

There is sparse info on the storage.

I have to find out about the traffic. I hope internal traffic within Hetzner is free, but this is something I need to make sure of. Thanks for the pointer!

-

G girish has marked this topic as solved on

-

I will mark this as solved for the moment. CIFS mounting works fine. The (still ongoing) issue is that the data is quite massive and I am looking into seeing if we can throw in some retry logic to make things more "stable".

@girish Yes, please. Thank you for the help.

I triggered a backup earlier today, and it stopped midway. Do the following log entries say anything of interest?

Dec 26 21:28:46 box:backuptask copy: copied to 2023-12-26-092602-586/app_hub.sbvu.ac.in_v4.20.5 errored. error: copy exited with code 1 signal null

Dec 26 21:28:47 box:apptask run: app error for state pending_backup: BoxError: copy exited with code 1 signal null at Object.copy (/home/yellowtent/box/src/storage/filesystem.js:191:26) at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { reason: 'External Error', details: {} }

-

Sorry for not responding sooner. Since my backups are still failing, I would like to try some other storage option. What storage protocol and configuration do you recommend for big backups?

-

@opensourced I have been testing things on the side and tried copying over such an amount of data to a CIFS-mounted Hetzner Storage Box, and just a `cp -r` command failed in the middle. rclone failed as well, since the Hetzner Storage Box mount point disconnects/breaks randomly. I am getting farther with rsync, but currently Cloudron does not have an adapter for rsync. We are still working on this. Fundamentally, backing up 2.5TB of data reliably is a very complicated task.

All this to say, I don't really have a solution, but if someone has ideas, I can try something else.
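One plausible reason rsync gets farther than a plain `cp -r` on a flaky mount is that a re-run can skip whatever already arrived. Below is a minimal Python sketch of that idea, assuming a size-and-mtime comparison is good enough to treat a file as already copied. It is not rsync's algorithm and not Cloudron code; `resumable_copy` is a hypothetical name used only for illustration.

```python
import os
import shutil


def resumable_copy(src_root: str, dst_root: str) -> int:
    """Copy a tree, skipping files whose size and mtime already match.

    Returns the number of files actually copied, so a re-run after an
    interrupted transfer only pays for what is still missing.
    """
    copied = 0
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        dst_dir = os.path.join(dst_root, rel_dir)
        os.makedirs(dst_dir, exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(dst_dir, name)
            src_stat = os.stat(src)
            if os.path.exists(dst):
                dst_stat = os.stat(dst)
                same = (dst_stat.st_size == src_stat.st_size
                        and int(dst_stat.st_mtime) == int(src_stat.st_mtime))
                if same:
                    continue  # already transferred in an earlier run
            # Write under a temporary name first, so a dropped connection
            # never leaves a truncated file under the final name.
            tmp = dst + ".partial"
            shutil.copy2(src, tmp)  # copy2 also preserves the mtime
            os.replace(tmp, dst)
            copied += 1
    return copied
```

Calling `resumable_copy(src, dst)` again after a failure would restart the walk but only re-copy files that are missing or differ, which is the property that makes repeated attempts converge.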

-

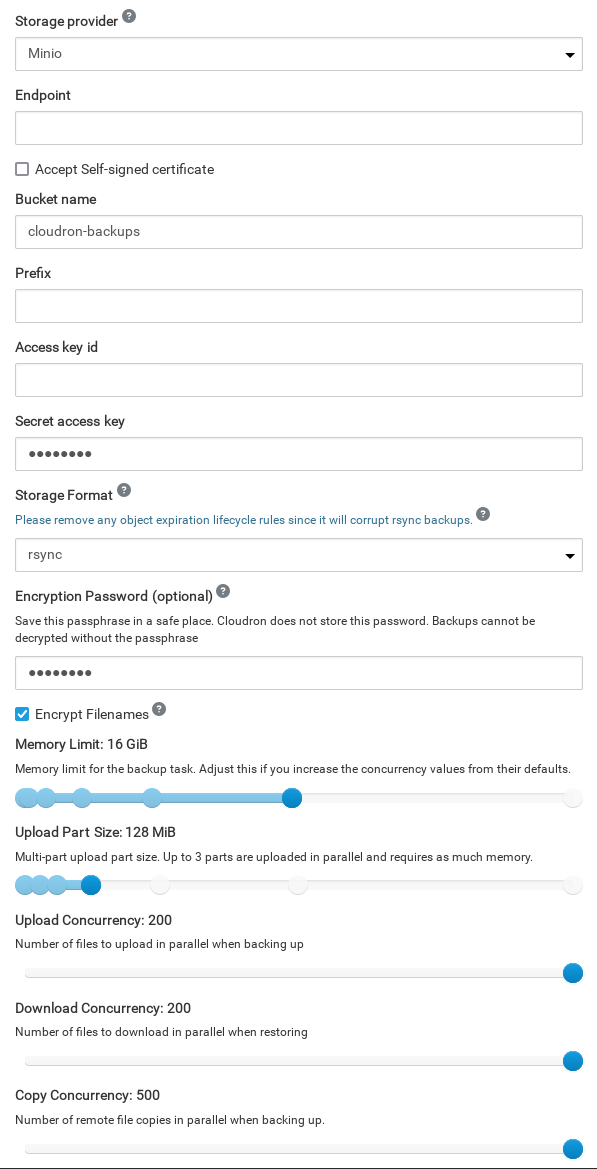

@girish Before I used Minio for backups, I was using an NFS mount. But I moved away from this because the mount was unstable, and if the share was not mounted, the backup was written to the rootfs and eventually filled up the disk until the instance crashed.
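The failure mode described above (a backup silently landing on the root filesystem when the share is not mounted) can be guarded against before any data is written. Below is a minimal Python sketch, assuming the backup target is expected to be its own mount point on a different device than `/`. `assert_backup_target_mounted` and `BackupTargetError` are hypothetical names, not part of Cloudron.

```python
import os


class BackupTargetError(RuntimeError):
    """Raised when the backup target is not a separately mounted filesystem."""


def assert_backup_target_mounted(target: str, root: str = "/") -> None:
    """Refuse to proceed unless `target` is a mount point on another device than `root`."""
    if not os.path.isdir(target):
        raise BackupTargetError(f"{target} does not exist or is not a directory")
    if not os.path.ismount(target):
        raise BackupTargetError(f"{target} is not a mount point")
    # Extra safety net: even a mount point must not share the root device.
    if os.stat(target).st_dev == os.stat(root).st_dev:
        raise BackupTargetError(f"{target} is on the same device as {root}")
```

A backup job would call this once before writing and abort on `BackupTargetError`, so an unmounted share results in a failed backup rather than a full root disk.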

-

@opensourced er, apologies. I confused you and @jagan!

Can you tell a bit more about your network? Is it a home network or public VPS? Just trying to understand the practicalities of backing up so much. Were the Minio and NFS in your local network?

-

@girish A few years ago you helped me out with a similar issue (not on the forums but via a support ticket) with backing up a large Nextcloud instance (2TB+). The issue was with timeouts, and you advised how and where to update to extend the timeout window significantly. I was using a Hetzner Storage Box in Germany and a VPS in Sweden using a CIFS mount. Later I moved it to sshfs and rsync to a local server.

This fixed the issue and allowed me a couple of years of backups of that large Nextcloud instance (with many other apps running concurrently) without problem. I don't have those emails any more or access to that instance but wanted to mention in case a reminder is useful to you here as it seems to be a similar issue.

-

@girish Cloudron is running in a Proxmox cluster. In the same local network there are storage servers connected via 10Gb/s. I guess the ideal would be an NFS mount from a TrueNAS server. This way I could get rid of encryption overhead and use jumbo frames. If Cloudron could handle those NFS mounts stably, I would switch back to this. Other suggestions are very welcome.

-

I would warmly welcome this and change right back to NFS.

-

Somehow, the backup is failing because files from the cache were not found.

Jan 17 06:05:54 box:shell copy (stderr): /bin/cp: cannot create hard link '/mnt/cloudronbackup/2024-01-16-143004-490/app_n8n.sbvu.ac.in_v3.17.2/data/user/.cache/n8n/public/assets/@jsplumb/browser-ui-d5e5c8b4.js' to '/mnt/cloudronbackup/snapshot/app_3b812988-82fc-4270-b8b1-a94efa9fc06a/data/user/.cache/n8n/public/assets/@jsplumb/browser-ui-d5e5c8b4.js': No such file or directory
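The `cp` message above is a hard-link failure while mirroring the snapshot directory into the dated backup directory. Below is a minimal Python sketch of a hard-link copy that records, rather than aborts on, entries that disappear between listing and linking (as short-lived cache files can). It assumes skipping vanished files is acceptable for the data in question. `hardlink_tree` is a hypothetical helper, not Cloudron's implementation.

```python
import os


def hardlink_tree(src_root: str, dst_root: str) -> list[str]:
    """Mirror src_root into dst_root using hard links instead of data copies.

    Returns the relative paths that could not be linked because the source
    entry had vanished by the time it was linked.
    """
    missing = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        os.makedirs(os.path.join(dst_root, rel_dir), exist_ok=True)
        for name in filenames:
            rel = os.path.normpath(os.path.join(rel_dir, name))
            try:
                os.link(os.path.join(src_root, rel), os.path.join(dst_root, rel))
            except FileNotFoundError:
                missing.append(rel)  # source disappeared; note it and move on
    return missing
```

Returning the skipped paths lets the caller decide whether a few missing cache files are tolerable or whether the backup should still be marked as failed.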

-

@girish can you make sure the remount checks for CIFS are applied to NFS as well? At every backup.

Having it be more bulletproof would be great!

-

@girish do you think there will be more stable support for NFS in the future?

-

I am now slowly running into a storage limitation issue with the current setup. Also, the performance of the current setup is really poor at 10-20 Mb/s, considering that I have a 10G link available that I cannot use.
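To put those rates in perspective, a rough back-of-the-envelope calculation helps. The sketch below assumes "Mb/s" means megabits per second and borrows the 2.5TB figure mentioned earlier in the thread purely as an illustrative data size. Real throughput would also depend on protocol overhead and disk speed.

```python
def transfer_hours(size_bytes: float, rate_bits_per_s: float) -> float:
    """Idealised time in hours to move size_bytes at a sustained line rate."""
    return size_bytes * 8 / rate_bits_per_s / 3600


SIZE = 2.5e12  # 2.5 TB (decimal), illustrative only

for label, rate in [("10 Mb/s", 10e6), ("20 Mb/s", 20e6), ("10 Gb/s", 10e9)]:
    print(f"{label:>8}: {transfer_hours(SIZE, rate):8.1f} h")
```

Under these assumptions a full copy at 20 Mb/s takes roughly 278 hours (between eleven and twelve days), while the same copy at 10 Gb/s would ideally take a little over half an hour.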

@girish if I knew that some NFS improvement is scheduled, I could work around it until then. If not, I would need to cope with the current circumstances somehow. I think not even much would be needed.

I just need the NFS connector to:

- Never write to the root filesystem, to prevent a full root disk.

- Check the mount before each backup.

- If there is a connection issue during the backup, try to remount and continue. Retry the remount up to 10 times with a delay of 10s or so.
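The requirements above could be sketched roughly as follows in Python. The `is_mounted` and `remount` callables are placeholders the caller would supply (for example wrapping `os.path.ismount` and a `mount` invocation). Nothing here reflects how Cloudron actually handles mounts, and the 10 attempts with a 10s delay are simply the numbers suggested in the list.

```python
import time
from typing import Callable


def ensure_mounted(is_mounted: Callable[[], bool],
                   remount: Callable[[], None],
                   attempts: int = 10,
                   delay_s: float = 10.0,
                   sleep: Callable[[float], None] = time.sleep) -> bool:
    """Return True once the share is mounted, trying to remount if needed."""
    if is_mounted():
        return True
    for attempt in range(1, attempts + 1):
        try:
            remount()
        except OSError:
            pass  # a failed attempt is expected while the server is unreachable
        if is_mounted():
            return True
        if attempt < attempts:
            sleep(delay_s)
    return False


def run_backup_step(step: Callable[[], None],
                    is_mounted: Callable[[], bool],
                    remount: Callable[[], None],
                    **retry_opts) -> None:
    """Run one backup step, only ever while the share is mounted."""
    if not ensure_mounted(is_mounted, remount, **retry_opts):
        raise RuntimeError("backup target not mounted; refusing to write")
    try:
        step()
    except OSError:
        # Connection dropped mid-step: remount, then retry the step once.
        if not ensure_mounted(is_mounted, remount, **retry_opts):
            raise
        step()
```

Because `run_backup_step` raises instead of writing when the share cannot be brought back, the job fails loudly rather than falling through to the local disk. The injectable `sleep` parameter exists only so the delay can be replaced in tests.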