Bundle with Pipelines?

-

Even if, IMO, it's not appropriate for this package, the approach of bundling Ollama and Open WebUI together in the same package is an interesting one.

I was wondering whether, instead of packaging Ollama with it, we could package Pipelines with Open WebUI.

Pipelines itself is really just a sandboxed runtime for Python code, built for use with Open WebUI. It has no frontend and has the same dependencies as Open WebUI.

I think this'll be worth debating when the time comes to publish Pipelines to the App Store.

-

Can we please consider this?

The virtual servers we run are hardly suited to good Ollama output anyway. Now that token costs for the lower-tier models from OpenAI, Grok, Anthropic, Google, etc. are low, there is really no point in bundling Ollama. Pipelines, however, add a great deal of flexibility and functionality to Open WebUI and can extend it almost without limit!

Thank you

-

I guess since it's not a webapp, we have to bundle it with the app itself. But I am not sure how flexible these pipelines are. Does one have to install many deps, i.e., can users install whatever they want? Maybe @Lanhild knows. I wonder how that would fit into the Cloudron packaging model.

-

@girish The dependencies to install are specified in the frontmatter of each pipeline or filter (the scripts that the actual software ingests).

I've already made the package at https://github.com/Lanhild/pipelines-cloudron, and dependency installation works.
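To illustrate what that frontmatter looks like, here is a minimal sketch of a pipeline script, modeled on the examples in the Pipelines repository as I understand them: the module docstring carries the metadata, and its `requirements` line lists the pip packages to install before the script is loaded. The echo logic, the names, and the `parse_requirements` helper are hypothetical; the helper is a simplified stand-in for whatever parsing Pipelines actually does, not its real code.

```python
"""
title: Example Echo Pipeline
author: example
version: 0.1
requirements: requests
"""
# The docstring above is the frontmatter. Its `requirements` line names the pip
# packages to install (`requests` is listed only to illustrate; it is not used here).

from typing import Generator, Iterator, List, Union


class Pipeline:
    def __init__(self):
        # Display name that would show up in Open WebUI's model list.
        self.name = "Example Echo Pipeline"

    async def on_startup(self):
        # Hook called when the Pipelines server starts.
        pass

    async def on_shutdown(self):
        # Hook called when the Pipelines server stops.
        pass

    def pipe(
        self, user_message: str, model_id: str, messages: List[dict], body: dict
    ) -> Union[str, Generator, Iterator]:
        # Trivial placeholder logic: echo the user's message back.
        return f"echo: {user_message}"


def parse_requirements(frontmatter: str) -> List[str]:
    """Hypothetical helper: return the comma-separated `requirements:` entries."""
    for line in frontmatter.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "requirements":
            return [pkg.strip() for pkg in value.split(",") if pkg.strip()]
    return []
```

For example, `parse_requirements(__doc__)` run inside that module would return `["requests"]`, which is the list a packager would feed to `pip install`.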

-

The Open WebUI docs don't recommend using Pipelines except for advanced use cases.

@jagan said in Bundle with Pipelines?:

> The virtual servers we run are hardly suited to good Ollama output anyway. Now that token costs for the lower-tier models from OpenAI, Grok, Anthropic, Google, etc. are low, there is really no point in bundling Ollama.

If you want to enable other LLMs, you can already do so with any provider that offers an OpenAI-compatible API, which means virtually all of them.

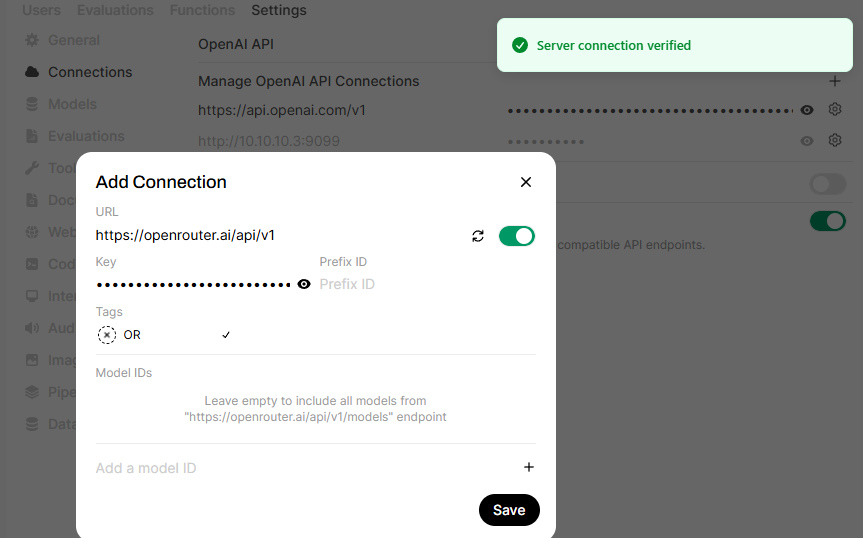

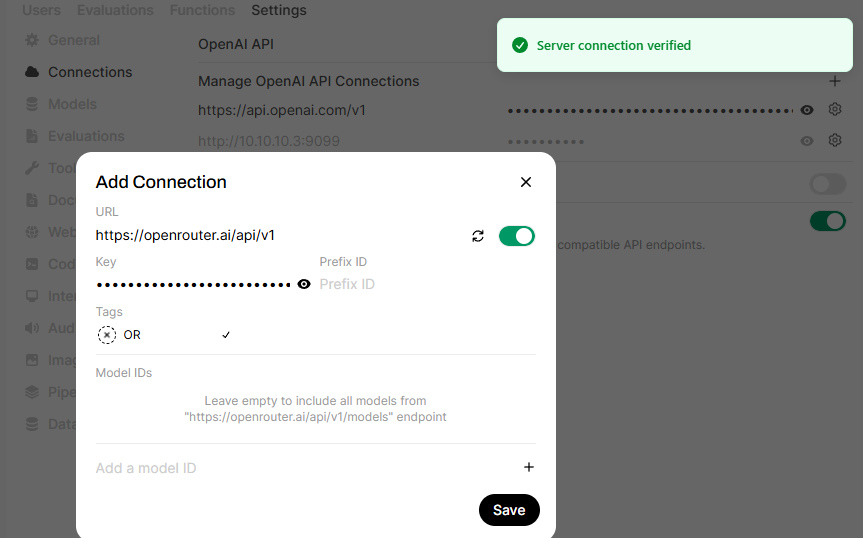

Simply go to Admin Settings -> Connections in your Open WebUI instance and add the API base URL of your favorite provider:

- e.g. https://openrouter.ai/api/v1 for OpenRouter
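Under the hood, an OpenAI-compatible connection boils down to a base URL plus an API key. The sketch below (standard-library Python, function names hypothetical) shows the two requests that matter, assuming the provider follows the usual OpenAI conventions: `GET {base_url}/models` to list models and `POST {base_url}/chat/completions` to chat. It is a way to sanity-check a provider's base URL and key before adding it, not a description of Open WebUI's own code.

```python
import json
import urllib.request
from typing import List


def models_request(base_url: str, api_key: str) -> urllib.request.Request:
    # OpenAI-compatible APIs list their models at GET {base_url}/models.
    url = base_url.rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    # Chat goes to POST {base_url}/chat/completions with a JSON body.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def list_model_ids(base_url: str, api_key: str) -> List[str]:
    # Actually sends the request: needs network access and a valid key.
    with urllib.request.urlopen(models_request(base_url, api_key)) as resp:
        body = json.load(resp)
    # The OpenAI-style response wraps the model objects in a "data" list.
    return [m["id"] for m in body.get("data", [])]
```

For example, `list_model_ids("https://openrouter.ai/api/v1", key)` should return roughly the model IDs you would then expect to see under Settings/Models.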

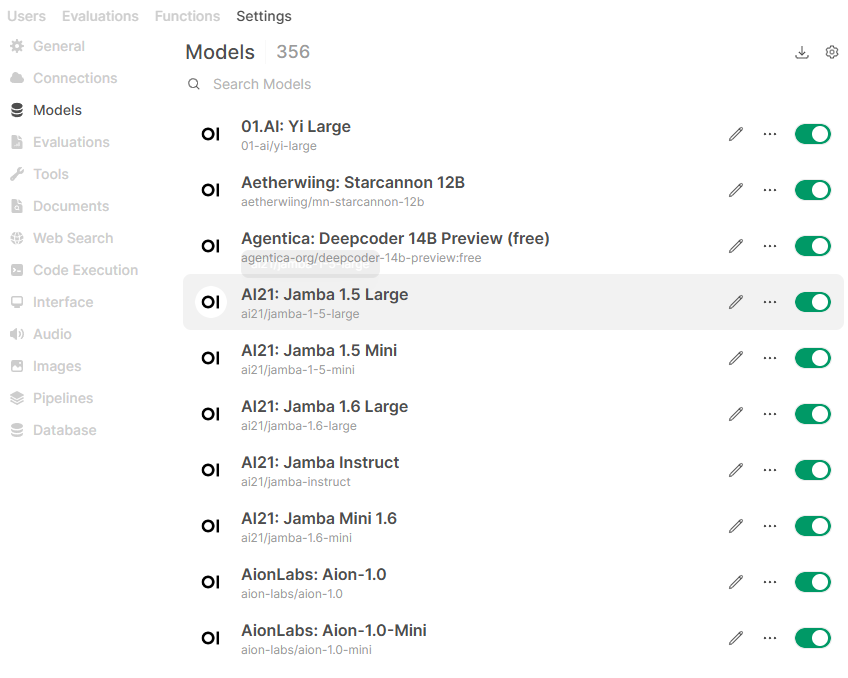

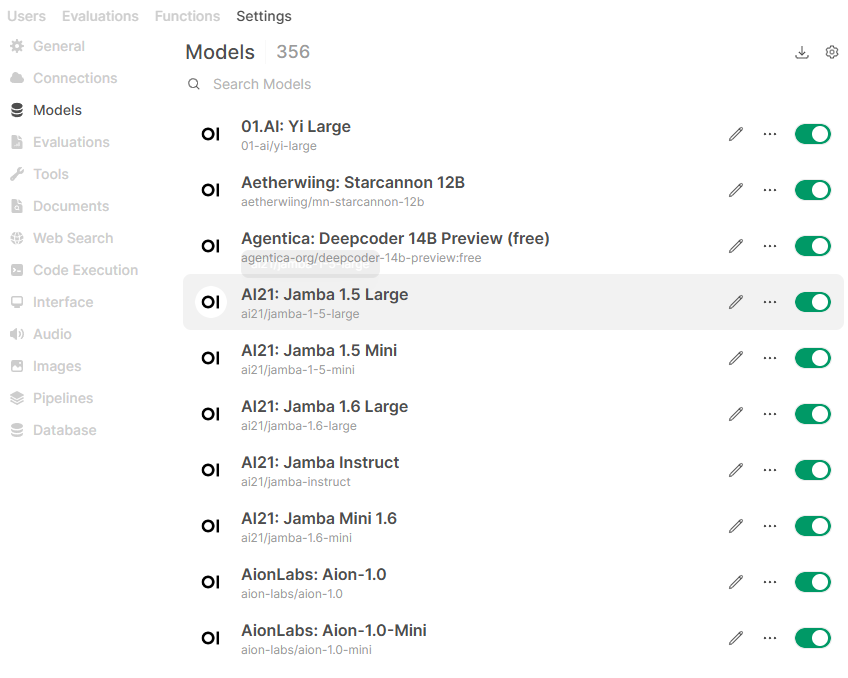

Then in Settings/Models you will have access to all the models provided by OpenRouter.

NOTE: this also works at the user level via Direct Connections.

Following the same logic:

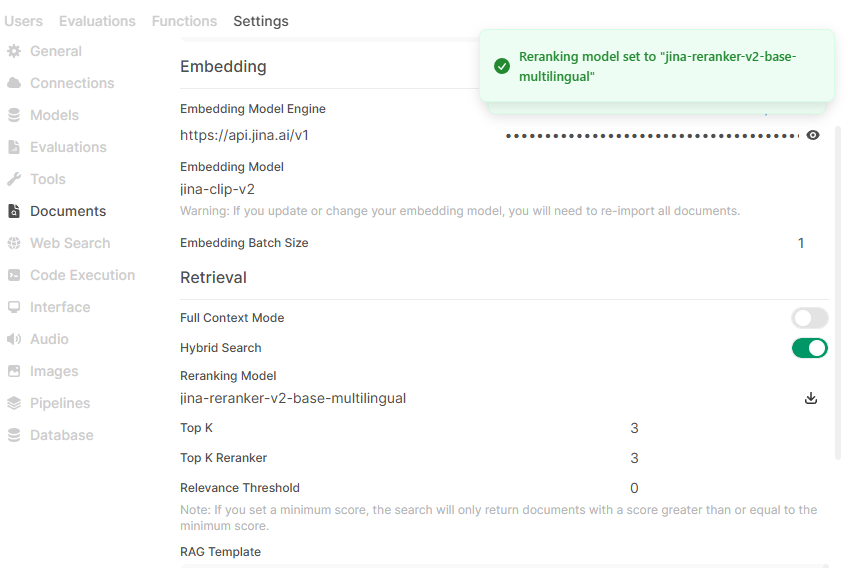

I also managed to use Replicate.AI as a ComfyUI instance, and Jina.ai as the embedder and reranker.

-

docs

-

embedding: https://jina.ai/api-dashboard/embedding

-

reranker: https://jina.ai/api-dashboard/reranker

-

Jina endpoint: https://api.jina.ai/v1
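For reference, a minimal sketch of the two Jina calls behind that setup, assuming the `/embeddings` and `/rerank` paths under the endpoint above and the request bodies as I understand them from Jina's API docs. The default model names (`jina-embeddings-v3`, `jina-reranker-v2-base-multilingual`) are assumptions; check the API dashboard for current ones. The functions only build the requests; pass them to `urllib.request.urlopen` to send them.

```python
import json
import urllib.request
from typing import List

JINA_BASE_URL = "https://api.jina.ai/v1"


def _post(path: str, api_key: str, payload: dict) -> urllib.request.Request:
    # Shared helper: JSON POST to the Jina API with a bearer token.
    return urllib.request.Request(
        JINA_BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def embedding_request(api_key: str, texts: List[str],
                      model: str = "jina-embeddings-v3") -> urllib.request.Request:
    # OpenAI-style embeddings body: a model name plus a list of input texts.
    return _post("/embeddings", api_key, {"model": model, "input": texts})


def rerank_request(api_key: str, query: str, documents: List[str], top_n: int = 3,
                   model: str = "jina-reranker-v2-base-multilingual") -> urllib.request.Request:
    # Rerank body: one query scored against candidate documents, keeping the top_n best.
    payload = {"model": model, "query": query, "documents": documents, "top_n": top_n}
    return _post("/rerank", api_key, payload)
```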

-