Backup formats for object storage - is any one of them more efficient/quicker than the other?

-

So I know rsync is generally better for local (or external) disk storage, as it's super quick and saves disk space. That's my experience, anyway. However, when using object storage (which is what I want to move to from an external disk), backups take longer, which is expected since everything goes over the network, but I'm not sure which format is more efficient in that use case. Is it rsync, as I'd have assumed, or tgz?

If it matters, I have some larger sites (~3 GB), many smaller ones (~200 MB), and some apps that take very little storage, such as Radicale and Bitwarden. The tgz backup is usually about 12 GB, with about 35 GB of disk space used by the Cloudron altogether. Any suggestions on which one to use?

Has anyone had experience with this on object storage themselves? Does either format seem more efficient than the other? In my own testing so far they seem about the same, but I'd love feedback in case one has a technical advantage on object storage. At first I assumed it'd be rsync, but it doesn't seem any faster than tgz. My guess is that rsync takes quite a while to work out what has changed when it has to go across the network (most object storage providers are also quite limited in data transfer, usually less than 8 Mbps in my experience with DigitalOcean and OVH), while tgz uploads a single compressed file instead, so in the end they sort of even out. This is just my very limited testing so far, though, and I'd love to hear what others have experienced.

-

The general take on this is that it depends.

The tarball is generally much better for lots of small files or simply small backups. Especially with object storage, it greatly reduces the number of network requests involved: only a few requests are needed, whereas rsync requires requests per file within the backup. On the other hand, the tarball is not a good fit when there are lots of larger files, for example within Nextcloud. Tarball creation needs a lot of memory and is prone to failing because of that, depending on the available server resources. Rsync, especially with hardlinks, also reduces the overall amount of backup storage required.
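To put rough numbers on the request-count argument, here is a minimal Python sketch. It assumes that the rsync format uploads one object per file and that the tarball goes up as a single S3 multipart upload (one request to create it, one per part, one to complete it), using S3's limits of a 5 MiB minimum part size and at most 10,000 parts. The function names are hypothetical, and listing/HEAD requests and compression are ignored.

```python
import math

S3_MIN_PART = 5 * 1024 * 1024  # minimum part size (all parts except the last)
S3_MAX_PARTS = 10_000          # maximum number of parts per multipart upload


def per_file_requests(file_sizes):
    """Hypothetical rsync-style upload: one PUT per file (listing requests ignored)."""
    return len(file_sizes)


def tarball_requests(total_bytes, part_size=S3_MIN_PART):
    """Hypothetical tarball upload as one multipart upload: create + parts + complete."""
    if part_size < S3_MIN_PART:
        raise ValueError("S3 parts (except the last) must be at least 5 MiB")
    parts = max(1, math.ceil(total_bytes / part_size))
    if parts > S3_MAX_PARTS:
        raise ValueError("more than 10,000 parts; use a larger part_size")
    return 1 + parts + 1


files = [20 * 1024] * 100_000          # 100,000 small files of 20 KiB each
print(per_file_requests(files))        # 100000
print(tarball_requests(sum(files)))    # 393
```

For 100,000 files of 20 KiB each (about 2 GB), that is 100,000 requests in the per-file case versus 393 for one tarball, before compression shrinks the tarball further.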

-

@nebulon this is why being able to define backup format per app would be a nice addition.

-

So in my initial testing yesterday evening, TGZ seems to be the format to use if time is a factor. My full system backup to OVH Object Storage took roughly 20 minutes with TGZ, whereas rsync took well over an hour both the first and the second time (almost 3 hours the first time, but it's expected to take longer initially). So even though I may be using more storage with TGZ, and thus paying a bit more, I think it's worth it: sometimes I want to do a full system backup before an update or similar, and I don't want to wait an hour or more for it to finish when I just want to get on with the maintenance. My main reason for switching to object storage is that I don't want to worry about space again. Using an external disk was much quicker (just a few minutes with rsync), but it was also much more costly and occasionally ran into space limitations that were annoying to fix.

-

@d19dotca It's slow not because of the format but because we set a very low concurrency. Specifically, we only make about 10 requests in parallel at a time, so if you have a lot of files, this can take a while! For AWS S3 alone, we set this concurrency to 500, because AWS never even seems to fail, whereas the other providers (especially DO Spaces back in the day) used to fail and return HTTP 500 errors all the time.

I will look into this for the next release, it's easy to speed things up.
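Why concurrency matters more than format in the per-file case can be shown with a toy asyncio model: an `asyncio.Semaphore` caps how many uploads are in flight, and with a fixed per-request latency the wall time is roughly the number of "waves" times that latency. `fake_upload` is a hypothetical stand-in that only sleeps; bandwidth limits and provider throttling are ignored, and this is not Cloudron's code.

```python
import asyncio
import math


async def fake_upload(name, stats, latency):
    """Hypothetical stand-in for one PUT request; it only sleeps."""
    stats["active"] += 1
    stats["peak"] = max(stats["peak"], stats["active"])
    await asyncio.sleep(latency)
    stats["active"] -= 1
    return name


async def upload_all(names, concurrency, latency=0.01):
    """Upload every name with at most `concurrency` requests in flight."""
    sem = asyncio.Semaphore(concurrency)
    stats = {"active": 0, "peak": 0}

    async def bounded(name):
        async with sem:
            return await fake_upload(name, stats, latency)

    results = await asyncio.gather(*(bounded(n) for n in names))
    return results, stats["peak"]


def estimated_ms(n_files, concurrency, latency_ms):
    """Rough wall time when each request takes latency_ms and bandwidth is not the bottleneck."""
    return math.ceil(n_files / concurrency) * latency_ms
```

With 10,000 files and 100 ms per request, `estimated_ms(10_000, 10, 100)` gives 100,000 ms (about 100 s), while a concurrency of 500 gives 2,000 ms.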

-

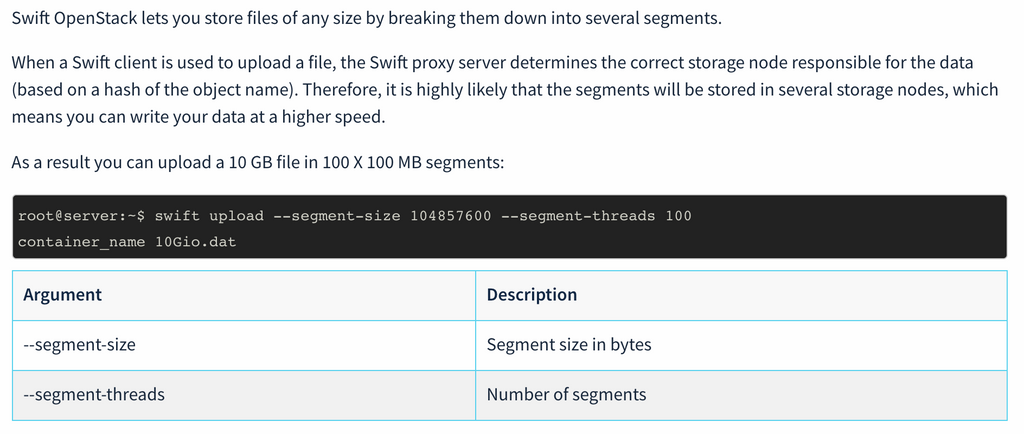

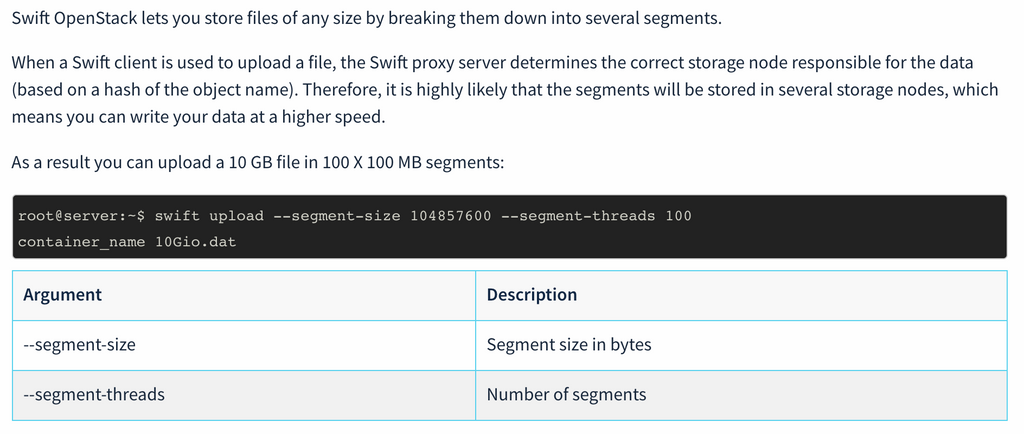

@girish I can open a new thread if you'd like, but figured I'd quickly tack this onto this post... I found a KB article from OVH on an "optimized method for uploading" to their Object Storage for better performance. Is this something that could be incorporated into the Cloudron backup package to improve performance?

https://docs.ovh.com/ca/en/storage/optimised_method_for_uploading_files_to_object_storage/

Ultimately I'm trying to see whether I can improve my backup speeds at all. Right now, using the tgz format to OVH Object Storage takes about 33 minutes. For what it's worth, the snapshot folder is ~15 GB (compressed, I assume, since tgz is used).
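As a quick sanity check on those numbers, assuming decimal gigabytes and that nearly all of the 33 minutes is spent uploading, the effective upload rate works out as follows (if the 15 GB is actually GiB, it comes to roughly 65 Mbit/s instead).

```python
def throughput(size_bytes, seconds):
    """Return (megabytes per second, megabits per second) in decimal units."""
    bytes_per_s = size_bytes / seconds
    return bytes_per_s / 1e6, bytes_per_s * 8 / 1e6


mb_s, mbit_s = throughput(15e9, 33 * 60)  # ~15 GB in ~33 minutes
print(f"{mb_s:.1f} MB/s, {mbit_s:.1f} Mbit/s")  # 7.6 MB/s, 60.6 Mbit/s
```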

-

@d19dotca said in Backup formats for object storage - is any one of them more efficient/quicker than the other?:

> Ultimately I'm trying to see how I can improve my backup speeds, if it's even possible. Right now using tgz format to OVH Object Storage takes me about 33 minutes. For what it's worth, the snapshot folder is ~15 GB in size (compressed I guess since tgz is used).

The tgz in Cloudron is created as a "stream" and is not a real file in the file system (if we made a separate file, it would consume the compressed size, 15 GB in your case, as extra space in the file system, at least temporarily during the backup). Because it's a stream created on the fly, we cannot upload it in "parallel" by breaking it up into parts. Speed optimizations can be done in rsync mode, though; some of the settings, like the concurrency, are exposed in the Advanced section of backups. Finally, I think segment* is Swift-specific. We use S3 APIs, where these are usually called chunk/part size.
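A minimal Python sketch of the streaming idea, assuming an S3-style multipart upload: the tar.gz is produced through a pipe (never written to disk) using `tarfile`'s streaming mode `"w|gz"`, and the reader slices it into numbered parts. Because the bytes for part N+1 only exist after part N has been produced, the parts come out strictly in order, which is the sequential constraint described above. The helper names are hypothetical, the actual upload request per part is left out, and this is not how Cloudron itself implements it.

```python
import io
import os
import tarfile
import threading

S3_MIN_PART = 5 * 1024 * 1024  # S3 minimum size for every part except the last


def read_up_to(stream, n):
    """Read up to n bytes, looping because pipes may return short reads."""
    buf = bytearray()
    while len(buf) < n:
        data = stream.read(n - len(buf))
        if not data:  # EOF
            break
        buf.extend(data)
    return bytes(buf)


def iter_parts(stream, part_size=S3_MIN_PART):
    """Yield (part_number, bytes) strictly in order from a non-seekable stream."""
    part_number = 1
    while True:
        chunk = read_up_to(stream, part_size)
        if not chunk:
            return
        yield part_number, chunk
        part_number += 1


def stream_tgz_parts(files, part_size=S3_MIN_PART):
    """Create a .tar.gz on the fly through a pipe and yield it as upload parts."""
    read_fd, write_fd = os.pipe()

    def writer():
        # "w|gz" writes a gzip-compressed tar as a pure stream (no seeking).
        with os.fdopen(write_fd, "wb") as out, tarfile.open(fileobj=out, mode="w|gz") as tar:
            for name, data in files.items():
                info = tarfile.TarInfo(name)
                info.size = len(data)
                tar.addfile(info, io.BytesIO(data))

    thread = threading.Thread(target=writer, daemon=True)
    thread.start()
    with os.fdopen(read_fd, "rb") as pipe_in:
        yield from iter_parts(pipe_in, part_size)
    thread.join()
```

Each yielded chunk would be sent as one part; since S3 requires every part except the last to be at least 5 MiB, a smaller `part_size` is only useful for quick local experiments.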

-

@girish Ah okay, that makes sense for tgz. Unfortunately, I haven't had any success getting quicker times with rsync in my tests on OVH Object Storage (in fact it often takes close to double the time of tgz, between 1 and 2 hours). But maybe I just haven't found the 'sweet spot' yet. I'll keep testing. Thanks, Girish.