Help with Wasabi mounting

-

@privsec the latter.

verify the mount on my server OS first and then afterwards resume with Cloudron

Other then running

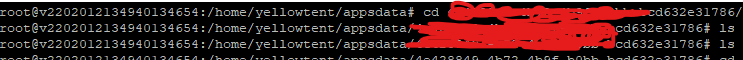

rclone lsd remote:bucketandrclone ls wasabi:nextcloud.myprivsec. I do not know how to test rclones connection.I just created an empty text file called "hello.txt" via touch hello.txt in the mounted folder on my server, and it did not get sent to wasabi.

So then that means that the connection is not working right?

Could this be do to unmounting after a reboot? I believe one occurred after setting the mount up. How do i get this to persist through reboots?

I am going to try out the s3fs once more right now and will report back on that.
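One thing worth ruling out first: if the mount has gone away (for example after a reboot), touch hello.txt simply writes to the local disk under the now-empty mount directory, and nothing would ever reach Wasabi. A minimal sketch for checking this, assuming a Linux host where /proc/mounts lists the active mounts; the function name is_mounted is made up for illustration:

```shell
#!/bin/sh
# Return 0 if directory $1 appears as a mount target in the mounts
# table $2 (defaults to /proc/mounts, which is Linux-specific).
# is_mounted is a hypothetical helper, not part of rclone or s3fs.
is_mounted() {
    dir=$1
    table=${2:-/proc/mounts}
    # Field 2 of each line in the table is the mount target.
    awk -v d="$dir" '$2 == d { found = 1 } END { exit !found }' "$table"
}
```

For example, is_mounted /mnt/volumes/nextcloud && echo mounted || echo "not mounted". The path has to be written exactly as it appears in the mounts table (no trailing slash).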

-

OK, so I went through installing and running s3fs, and now I am getting this:

    root@vxxxx:~# s3fs xxxx.xxxx /mnt/volumes/nextcloud -o passwd_file=/etc/passwd-s3fs -o url=https://s3.eu-central-1.wasabisys.com -o use_path_request_style -o dbglevel=info -f -o curldbg
    [CRT] s3fs.cpp:set_s3fs_log_level(257): change debug level from [CRT] to [INF]
    [INF] s3fs.cpp:set_mountpoint_attribute(4193): PROC(uid=0, gid=0) - MountPoint(uid=0, gid=0, mode=40755)
    [CRT] s3fs.cpp:s3fs_init(3378): init v1.82(commit:unknown) with GnuTLS(gcrypt)
    [INF] s3fs.cpp:s3fs_check_service(3754): check services.
    [INF] curl.cpp:CheckBucket(2914): check a bucket.
    [INF] curl.cpp:prepare_url(4205): URL is https://s3.eu-central-1.wasabisys.com/myprivsec.nextcloud/
    [INF] curl.cpp:prepare_url(4237): URL changed is https://s3.eu-central-1.wasabisys.com/myprivsec.nextcloud/
    [INF] curl.cpp:insertV4Headers(2267): computing signature [GET] [/] [] []
    [INF] curl.cpp:url_to_host(100): url is https://s3.eu-central-1.wasabisys.com
    * Trying 130.117.252.11...
    * TCP_NODELAY set
    * Connected to s3.eu-central-1.wasabisys.com (130.117.252.11) port 443 (#0)
    * found 138 certificates in /etc/ssl/certs/ca-certificates.crt
    * found 414 certificates in /etc/ssl/certs
    * ALPN, offering http/1.1
    * SSL connection using TLS1.2 / ECDHE_RSA_AES_256_GCM_SHA384
    * server certificate verification OK
    * server certificate status verification SKIPPED
    * common name: *.s3.eu-central-1.wasabisys.com (matched)
    * server certificate expiration date OK
    * server certificate activation date OK
    * certificate public key: RSA
    * certificate version: #3
    * subject: OU=Domain Control Validated,OU=EssentialSSL Wildcard,CN=*.s3.eu-central-1.wasabisys.com
    * start date: Thu, 24 Jan 2019 00:00:00 GMT
    * expire date: Sat, 23 Jan 2021 23:59:59 GMT
    * issuer: C=GB,ST=Greater Manchester,L=Salford,O=Sectigo Limited,CN=Sectigo RSA Domain Validation Secure Server CA
    * compression: NULL
    * ALPN, server accepted to use http/1.1
    > GET /myprivsec.nextcloud/ HTTP/1.1
    host: s3.eu-central-1.wasabisys.com
    User-Agent: s3fs/1.82 (commit hash unknown; GnuTLS(gcrypt))
    Accept: */*
    Authorization: AWS4-HMAC-SHA256 Credential=xxxxxx/us-east-1/s3/aws4_request, SignedHeaders=host;x-amz-content-sha256;x-amz-date, Signature=xxxxx
    x-amz-content-sha256: e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855
    x-amz-date: 20201206T014829Z
    < HTTP/1.1 200 OK
    < Content-Type: application/xml
    < Date: Sun, 06 Dec 2020 01:48:29 GMT
    < Server: WasabiS3/6.2.3223-2020-10-14-51cd02c (head02)
    < x-amz-bucket-region: eu-central-1
    < x-amz-id-2: xxxxx
    < x-amz-request-id: xxxxx
    < Transfer-Encoding: chunked
    <
    * Connection #0 to host s3.eu-central-1.wasabisys.com left intact
    [INF] curl.cpp:RequestPerform(1940): HTTP response code 200

However, files are not updating. If I upload directly to Wasabi, they do not show up in the CLI, and vice versa.

-

OK, I never tried moving files with the command in that screenshot above running.

Now that I have tried moving content over, the output looks similar to a packet sniffer as the data transfers.

So does that mean I always have to keep that command running in an SSH session?

I feel like that's way too manual and too much upkeep, especially if I have to keep an SSH session open at all times.

So what am I missing?
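As far as I understand the s3fs options, -f keeps s3fs in the foreground, which is why the debug command above ties up the SSH session; without -f it should detach and keep running after logout. For surviving reboots, the s3fs README describes an /etc/fstab entry of type fuse.s3fs. Below is a sketch that only prints such a line for review rather than editing /etc/fstab itself. The helper name s3fs_fstab_line and the bucket name mybucket are placeholders; the options are taken from the command above, plus _netdev and allow_other as in the README example.

```shell
#!/bin/sh
# Print an /etc/fstab line for an s3fs mount (hypothetical helper).
# $1 = bucket, $2 = mountpoint, $3 = endpoint URL, $4 = passwd file
# Nothing is written to /etc/fstab; the line is only echoed for review.
s3fs_fstab_line() {
    printf '%s %s fuse.s3fs _netdev,allow_other,use_path_request_style,passwd_file=%s,url=%s 0 0\n' \
        "$1" "$2" "$4" "$3"
}
```

For example, s3fs_fstab_line mybucket /mnt/volumes/nextcloud https://s3.eu-central-1.wasabisys.com /etc/passwd-s3fs prints one line; after checking it and appending it to /etc/fstab, mount -a should show whether it mounts cleanly before the next reboot.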

-

OK, well, I just don't get it.

It's almost as if Wasabi and Cloudron are only syncing some information and not all. And honestly, it's irritating the crap out of me to the point where I might just cancel the subscription I bought banking on getting Nextcloud and Wasabi to work.

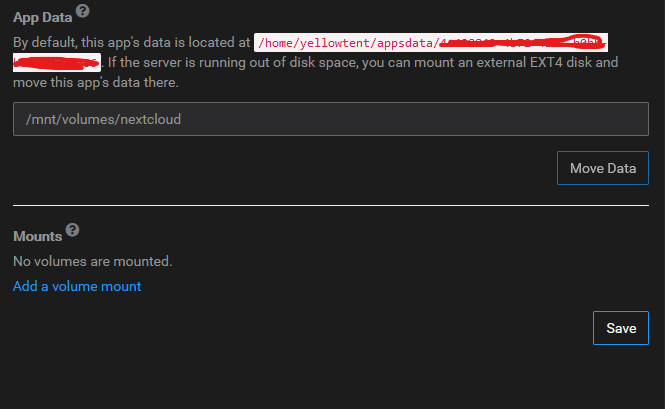

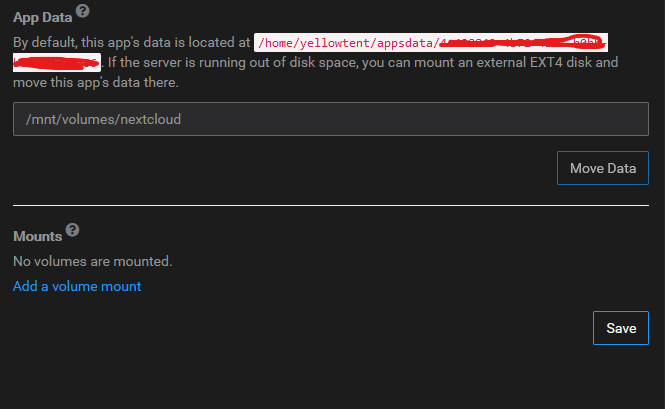

This should not be this difficult or problem-prone.

App settings:

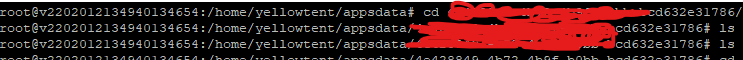

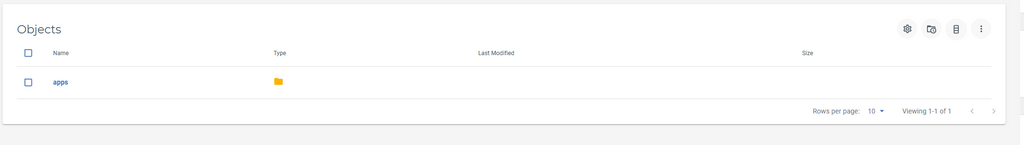

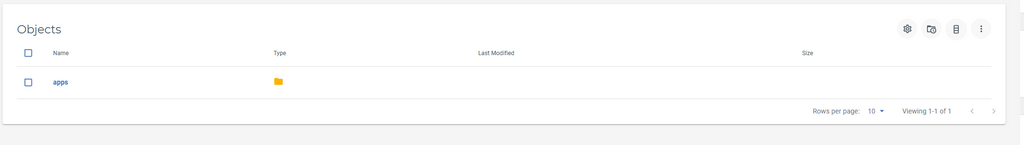

The base directory has no data in it, which is what I told it to do.

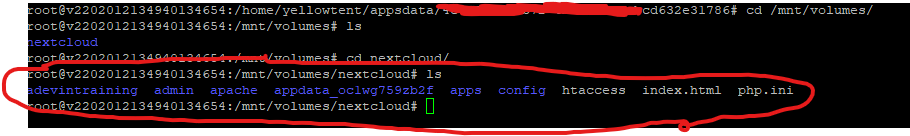

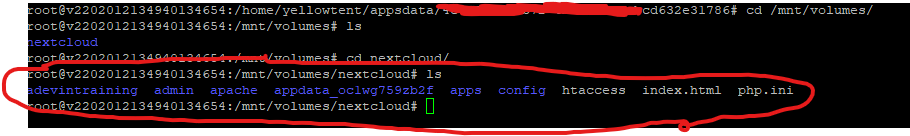

In this photo, it is evident that there is data inside the /mnt/volumes/nextcloud folder.

However, in my Wasabi bucket, there is nowhere near as much info.

Why is Cloudron only syncing a piece of the full app folder?
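To put numbers on "nowhere near as much", one option is to compare a listing of the local folder against a listing of the bucket. A rough sketch, assuming both listings have already been saved as plain text with one relative path per line; the function name only_local and the listing files are invented here. The local list could come from something like (cd /mnt/volumes/nextcloud && find . -type f | sed 's|^\./||'), and the remote one from rclone ls with the size column stripped.

```shell
#!/bin/sh
# Print paths that appear in the local listing ($1) but not in the
# remote listing ($2). Both files hold one path per line.
# only_local is a hypothetical helper for illustration.
only_local() {
    local_sorted=$(mktemp) || return 1
    remote_sorted=$(mktemp) || return 1
    # comm needs both inputs sorted with the same collation.
    LC_ALL=C sort -u "$1" > "$local_sorted"
    LC_ALL=C sort -u "$2" > "$remote_sorted"
    # -23 suppresses lines unique to the second file and common lines,
    # leaving only the lines unique to the first file.
    LC_ALL=C comm -23 "$local_sorted" "$remote_sorted"
    rm -f "$local_sorted" "$remote_sorted"
}
```

If this prints nothing, every local path is also in the bucket; anything it does print is a file that exists locally but never reached Wasabi.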

-

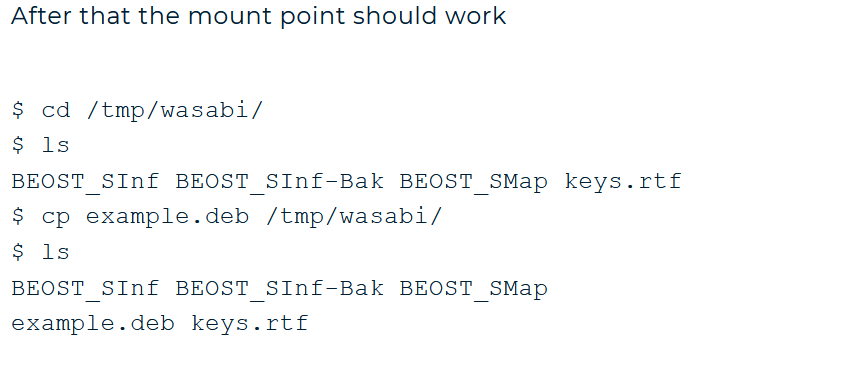

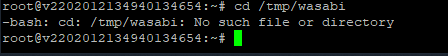

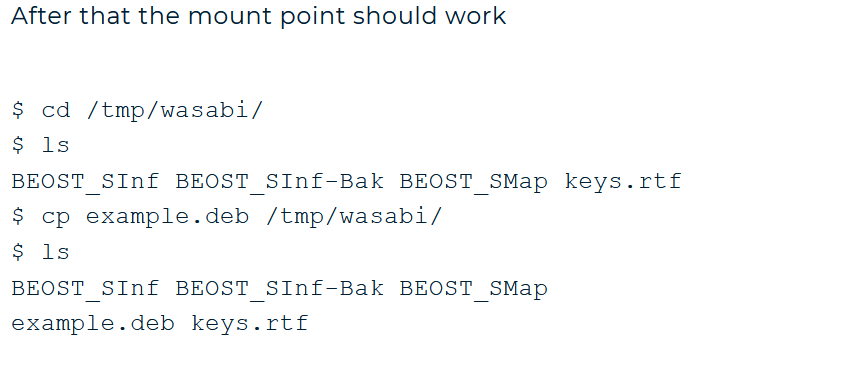

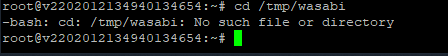

In addition, I thought maybe it was a folder/directory issue, so I created a folder one level deeper called wasabi on my server (full path /mnt/volumes/nextcloud/wasabi) and then ran the command

s3fs xxxx /mnt/volumes/nextcloud/wasabi -o passwd_file=/etc/passwd-s3fs -o url=https://s3.eu-central-1.wasabisys.com

and then I went to the dashboard for Nextcloud and moved the directory.

Then I went to Wasabi to see if anything got moved to it, and there is nothing.

-

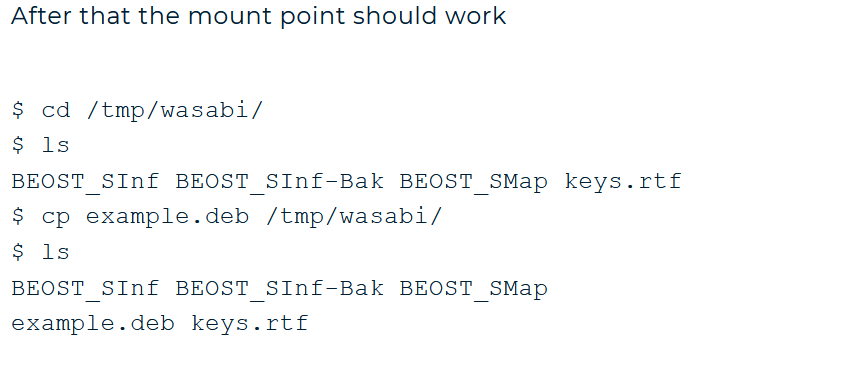

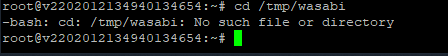

And I can't do the remaining steps either, per

https://wasabi-support.zendesk.com/hc/en-us/articles/115001744651-How-do-I-use-S3FS-with-Wasabi-

When I try to follow these steps, I get this:

-

And probably my final note,

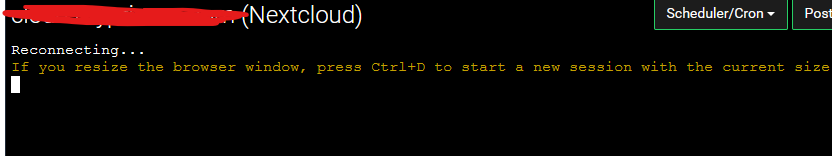

I can't access the console of Nextcloud either. It keeps doing this loop. First this one

And then this one

And then it goes back to

Keep in mind, this is a brand-new, fresh install that I have not even logged into yet, and my cache is cleared out as well.

-

@privsec Does the app say "Running" in the Cloudron dashboard? The Web Terminal won't work until the app is running.

-

@privsec I will probably give s3fs a try tomorrow and see if I can write a guide for it

(I have never tried s3fs or rclone mount either, so let's see if I am successful).

-

@girish Is there another mounting solution?

It is absolutely pivotal that I get the app directories off my servers drive.

@privsec Is the goal here to have a large amount of space external (at correct price) to the server? If you are hosted on a VPS is there an option for you to attach external storage? For example, many VPS providers like time4vps, hetzner etc have quite cheap external disks that can be attached to a server. The reason I ask is even if s3fs or rclone mount does work, it's probably going to be quite slow - for example, I see posts like https://serverfault.com/questions/396100/s3fs-performance-improvements-or-alternative . We have no experience with how well these mounts work and if they work at all. Maybe files go missing, maybe it's unstable, we don't know.

-

So I found some interesting posts:

- https://www.reddit.com/r/aws/comments/dplfoa/why_is_s3fs_such_a_bad_idea/

- https://www.reddit.com/r/aws/comments/a5jdth/what_are_peoples_thoughts_on_using_s3fs_in/

The point of both of them is that S3FS is not a file system and as such file permissions won't work. It's also not consistent (i.e., if you write and read back, it may not be the same). This makes it pretty much unsuitable as an app data directory. What might work is adding it as external storage inside Nextcloud - https://docs.nextcloud.com/server/latest/admin_manual/configuration_files/external_storage_configuration_gui.html. I didn't suggest this earlier because I think you said you didn't want this in your initial post. Can you recheck this? Because I think it will provide what you want. You can just add the Wasabi storage space inside Nextcloud and it will appear as a folder for your users (you can create 1 bucket for each user or something).
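For reference, the same external storage can apparently also be added from the command line with occ files_external:create instead of the GUI. The sketch below only prints a candidate command and does not run anything. The backend identifier amazons3, the auth identifier amazons3::accesskey, and the -c option names are my assumptions from memory of the Nextcloud admin manual, so please confirm them with occ files_external:backends first; the helper name print_external_s3_cmd and the ACCESS_KEY/SECRET_KEY placeholders are invented.

```shell
#!/bin/sh
# Print (not run) an occ command that would add an S3 bucket as
# Nextcloud external storage. Backend and option names are assumptions
# to verify with "occ files_external:backends" before use.
# $1 = mount name shown in Nextcloud, $2 = bucket, $3 = S3 hostname
print_external_s3_cmd() {
    printf 'occ files_external:create %s amazons3 amazons3::accesskey' "$1"
    printf ' -c bucket=%s -c hostname=%s -c use_ssl=true -c use_path_style=true' "$2" "$3"
    printf ' -c key=ACCESS_KEY -c secret=SECRET_KEY\n'
}
```

For example, print_external_s3_cmd /Wasabi mybucket s3.eu-central-1.wasabisys.com prints a single command line that can be reviewed, filled in with real keys, and then run as the web server user.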

@privsec So, I think it's best not to go down this s3fs route, it won't work reliably.

(If you were banking on s3fs somehow, for refunds etc., not a problem, just reach out to us on support@).

-


There may be another "advanced" way via Minio.

Minio can be used as a storage gateway.

See if you can connect Minio to Wasabi in gateway mode. If you can, then see if you can mount the local Minio instance in Linux; even if that is done with s3fs, it would be local.

-

@privsec We tried this once and it was unbearably slow. Personally, I'd look for ways to reduce the Nextcloud storage needs and use a natively mounted drive from the host, and just offer an archive Wasabi S3 bucket where people can move things they no longer need regular access to, which saves space on the main NC.

-

@marcusquinn Cyberduck/Mountain Duck is a very good platform for making Wasabi/S3 storage quite accessible to users if they need it.