Questions on OVH Object Storage with "+segments" (OpenStack) bucket/container

-

I was curious... is the "+segments" container created in OpenStack required to remain in the account in order for Cloudron backups to restore correctly? I presume the answer is "no", but wanted to confirm.

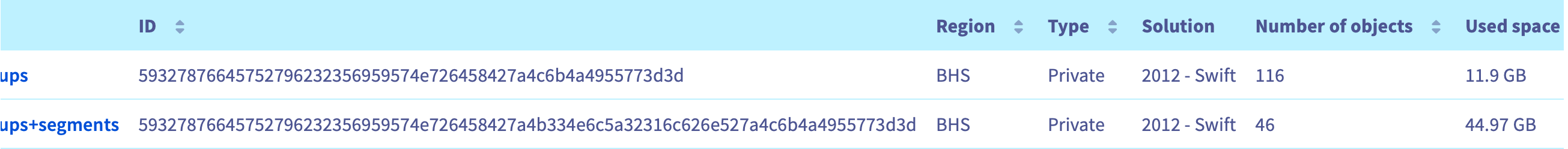

For context: OVH Object Storage uses OpenStack, which has an annoying "<containerName>+segments" container that gets created automatically for multipart uploads. Since Cloudron only allows multipart uploads with a 1 GB max part size and I have a few apps with files a bit larger than this, it creates a lot of these files (around forty-six 1 GB files, I believe). It also seems to happen for the mail backup, though the other apps don't trigger it. Unfortunately OVH still charges for that disk space, so if there's no need to keep it around after the Cloudron backup task completes, I should find a way to auto-remove that container, or at least all the files inside of it, to help with costs.
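As a rough illustration of why the part size matters here, the number of segment objects can be estimated from the object size and the configured part size. This is only a sketch: `part_count` is a hypothetical helper, decimal gigabytes are assumed, and it assumes anything that fits in a single part is uploaded without segments.

```python
GB = 1000 ** 3  # decimal gigabyte (assumption; use 1024 ** 3 if sizes are reported in GiB)
MB = 1000 ** 2


def part_count(object_bytes: int, part_bytes: int = 1 * GB) -> int:
    """Estimate how many multipart parts an upload of object_bytes needs."""
    if object_bytes <= 0:
        return 0
    # Integer ceiling division avoids floating point rounding on large sizes.
    return -(-object_bytes // part_bytes)


# A 23 GB tarball with 1 GB parts versus a much smaller (hypothetical) 64 MB part size.
print(part_count(23 * GB))
print(part_count(23 * GB, 64 * MB))
```

The smaller the part size, the more objects end up in the "+segments" container for the same backup, which matches the "hundreds of files" behaviour described in this thread.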

A second, likely unrelated issue (though maybe it is indeed related?) is why the main container "cloudronbackups" is only several GB in size despite there being one file in there that's over 20 GB (the mail tarball), but that's an issue for another day I think. As you can see, the +segments container is the one with the most size to it, but I think it's not necessary since the necessary backup material should be in the regular container, correct?

@d19dotca Interesting. I have to admit I have no idea what +segments is. So, I can't actually tell if it can be deleted or not. Is it some bookkeeping/temporary stuff that OVH uses? If so, why is all this exposed to the user? Might be worthwhile asking their support since at least in the S3 API we don't have any concepts of segments or cleaning up segments.

Unsure if this is related or not, but I suppose this isn't going to work then? The logs don't seem to suggest an issue here though. I tested by spinning up a new VPS, installing the same version of Cloudron (7.0.2), deleting the "+segments" container from OVH Object Storage, then uploading the backup config to the new Cloudron VPS and setting the secret key and all that. It started to restore, but after maybe 20-25 seconds it just skipped forward to the main dashboard with no data presented on it and instead just the "Cloudron is offline. Reconnecting..." message (which I'd expect since it's not actually restored yet, but what I don't expect is to be directed to the dashboard so soon).

Logs:

    ubuntu@b2-15-bhs3-test-cloudron-restore:~$ sudo tail -f /home/yellowtent/platformdata/logs/box.log
    2021-11-03T04:53:46.207Z box:server Cloudron 7.0.2
    2021-11-03T04:53:46.207Z box:server ==========================================
    2021-11-03T04:53:46.290Z box:settings initCache: pre-load settings
    2021-11-03T04:53:46.325Z box:tasks stopAllTasks: stopping all tasks
    2021-11-03T04:53:46.326Z box:shell stopTask spawn: /usr/bin/sudo -S /home/yellowtent/box/src/scripts/stoptask.sh all
    2021-11-03T04:53:46.371Z box:dockerproxy startDockerProxy: started proxy on port 3003
    Cloudron is up and running. Logs are at /home/yellowtent/platformdata/logs/box.log
    2021-11-03T04:53:46.404Z box:cloudron runStartupTasks: not activated. generating IP based redirection config
    2021-11-03T04:53:46.407Z box:reverseproxy writeDefaultConfig: writing configs for endpoint "setup"
    2021-11-03T04:53:46.408Z box:shell reload spawn: /usr/bin/sudo -S /home/yellowtent/box/src/scripts/restartservice.sh nginx
    2021-11-03T04:56:05.879Z box:provision setProgress: restore - Downloading backup
    2021-11-03T04:56:05.880Z box:backuptask download: Downloading 2021-11-03-030000-931/box_v7.0.2 of format tgz to {"localRoot":"/home/yellowtent/boxdata/box","layout":[]}
    2021-11-03T04:56:05.881Z box:provision setProgress: restore - Downloading backup 2021-11-03-030000-931/box_v7.0.2
    2021-11-03T04:56:06.124Z box:provision setProgress: restore - Downloading 4M@4MBps
    2021-11-03T04:56:06.374Z box:backuptask tarExtract: done.
    2021-11-03T04:56:06.374Z box:backuptask restore: download completed, importing database
    2021-11-03T04:56:06.375Z box:shell importFromFile exec: /usr/bin/mysql -h "127.0.0.1" -u root -ppassword box < /home/yellowtent/boxdata/box/box.mysqldump
    2021-11-03T04:56:12.881Z box:shell importFromFile (stdout): null
    2021-11-03T04:56:12.881Z box:shell importFromFile (stderr): mysql: [Warning] Using a password on the command line interface can be insecure.
    2021-11-03T04:56:12.881Z box:backuptask restore: database imported
    2021-11-03T04:56:12.881Z box:settings initCache: pre-load settings
    2021-11-03T04:56:12.894Z box:cloudron setDashboardDomain: example.com
    2021-11-03T04:56:12.895Z box:reverseproxy writeDashboardConfig: writing admin config for example.com
    2021-11-03T04:56:12.918Z box:shell reload spawn: /usr/bin/sudo -S /home/yellowtent/box/src/scripts/restartservice.sh nginx
    2021-11-03T04:56:13.125Z box:appstore updateCloudron: Cloudron updated with data {"domain":"example.com"}
    2021-11-03T04:56:13.133Z box:cron handleSettingsChanged: recreating all jobs
    2021-11-03T04:56:13.134Z box:shell removeCollectdProfile spawn: /usr/bin/sudo -S /home/yellowtent/box/src/scripts/configurecollectd.sh remove cloudron-backup
    2021-11-03T04:56:13.140Z box:cron startJobs: starting cron jobs
    2021-11-03T04:56:13.162Z box:shell removeCollectdProfile (stdout): Restarting collectd
    2021-11-03T04:56:13.163Z box:cron backupConfigChanged: schedule 00 00 7,20 * * * (America/Vancouver)
    2021-11-03T04:56:13.176Z box:cron autoupdatePatternChanged: pattern - 00 00 21 * * * (America/Vancouver)
    2021-11-03T04:56:13.179Z box:cron Dynamic DNS setting changed to false
    2021-11-03T04:56:13.206Z box:shell removeCollectdProfile (stdout): Removing collectd stats of cloudron-backup
    2021-11-03T04:56:13.222Z box:cloudron onRestored: downloading mail
    2021-11-03T04:56:13.225Z box:cloudron startMail: downloading backup 2021-11-03-030000-931/mail_v7.0.2
    2021-11-03T04:56:13.226Z box:backuptask download: Downloading 2021-11-03-030000-931/mail_v7.0.2 of format tgz to {"localRoot":"/home/yellowtent/boxdata/mail","layout":[]}
    2021-11-03T04:56:13.226Z box:cloudron startMail: Downloading backup 2021-11-03-030000-931/mail_v7.0.2
    2021-11-03T04:56:20.061Z box:apphealthmonitor setHealth: 00895422-a1ff-4196-8bb8-cb4ff8d6eeaa (www.example.com) waiting for 1199.941 to update health
    2021-11-03T04:56:20.062Z box:apphealthmonitor setHealth: 02b2f762-afa4-4278-afaa-5c07ad29715e (www.example.com) waiting for 1199.94 to update health
    2021-11-03T04:56:20.062Z box:apphealthmonitor setHealth: 05db518f-2c45-411b-865f-813dbf85c244 (www.example.com) waiting for 1199.94 to update health
    2021-11-03T04:56:20.063Z box:apphealthmonitor setHealth: 1247a348-c8da-4f30-8a4c-3fa014f092a1 (www.example.com.ca) waiting for 1199.939 to update health
    2021-11-03T04:56:20.063Z box:apphealthmonitor setHealth: 134ef7f1-46f8-403e-8ac5-a3297b37893f (www.example.com.ca) waiting for 1199.939 to update health
    2021-11-03T04:56:20.064Z box:apphealthmonitor setHealth: 1719fcbc-3a73-4b83-aedf-caae1b344838 (www.example.com) waiting for 1199.938 to update health
    2021-11-03T04:56:20.064Z box:apphealthmonitor setHealth: 29354a9c-7e1d-4986-b78e-3510fe73b370 (www.example.com) waiting for 1199.938 to update health

It's almost like the very moment it started to download mail it determined it had finished or something like that.

Is this related, @girish, or is this more of a coincidence with a possible bug in 7.0.2 or something? I don't see any error in the logs, which is strange.

@d19dotca said in Questions on OVH Object Storage with "+segments" (OpenStack) bucket/container:

2021-11-03T04:56:13.226Z box:cloudron startMail: Downloading backup 2021-11-03-030000-931/mail_v7.0.2

This is currently an "issue" when restoring. The mail backup is downloaded on start up and the dashboard becomes available only afterwards. This means that if the mail backup is large, it appears to "hang" when really it's just downloading the mail backup. How long has it been since it's downloading the mail backup?

Generally, from our tests, even downloading ~20 GB can be fairly quick, so we let this be a low priority issue. This issue is only in Cloudron 7 because previously the mail was part of the box backup.

-

@girish said in Questions on OVH Object Storage with "+segments" (OpenStack) bucket/container:

@d19dotca Interesting. I have to admit I have no idea what +segments is. So, I can't actually tell if it can be deleted or not. Is it some bookkeeping/temporary stuff that OVH uses? If so, why is all this exposed to the user? Might be worthwhile asking their support since at least in the S3 API we don't have any concepts of segments or cleaning up segments.

Hi Girish! It's not actually OVH-unique, though they're probably the biggest company using it; it's an OpenStack thing. The following link may be a helpful read for insight into this: https://mindmajix.com/openstack/uploading-large-objects

From the article:

When using cloud storage, such as OpenStack Swift, at some point you might face a situation when you have to upload relatively large files (i.e. a couple of gigabytes or more). For a number of reasons this brings in difficulties for both client and server. From a client point of view, uploading such a large file is quite cumbersome, especially if the connection is not very good. From a storage point of view, handling very large objects is also not trivial.

For that reason, Object Storage (swift) uses segmentation to support the upload of large objects. Using segmentation, uploading a single object is virtually unlimited. The segmentation process works by fragmenting the object, and automatically creating a file that sends the segments together as a single object. This option offers greater upload speed with the possibility of parallel uploads.

Individual objects up to 5 GB in size can be uploaded to OpenStack Object Storage. However, by splitting the objects into segments, the download size of a single object is virtually unlimited. Segments of the larger object are uploaded and a special manifest file is created that, when downloaded, sends all the segments concatenated as a single object. By splitting objects into smaller chunks, you also gain efficiency by allowing parallel uploads.
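To make the manifest idea from the article concrete, here is a toy in-memory model, not Swift's actual implementation. `ToyStore` and its methods are hypothetical names, the segment naming is invented for illustration, and the manifest is assumed to contribute no bytes to the main container's reported size.

```python
class ToyStore:
    """Toy model of a main container plus a "+segments" container."""

    def __init__(self):
        self.main = {}       # object name -> bytes (objects stored whole)
        self.manifests = {}  # object name -> ordered list of segment names
        self.segments = {}   # segment name -> bytes (the "+segments" container)

    def put(self, name: str, data: bytes, segment_size: int) -> None:
        if len(data) <= segment_size:
            self.main[name] = data  # small objects are stored whole
            return
        names = []
        for index, start in enumerate(range(0, len(data), segment_size)):
            segment_name = f"{name}/{index:08d}"  # hypothetical naming scheme
            self.segments[segment_name] = data[start:start + segment_size]
            names.append(segment_name)
        self.manifests[name] = names  # manifest only points at the segments

    def get(self, name: str) -> bytes:
        if name in self.manifests:
            # Concatenate segments in manifest order; a missing segment raises KeyError.
            return b"".join(self.segments[s] for s in self.manifests[name])
        return self.main[name]

    def main_bytes(self) -> int:
        return sum(len(v) for v in self.main.values())

    def segment_bytes(self) -> int:
        return sum(len(v) for v in self.segments.values())


store = ToyStore()
store.put("mail.tar.gz", b"m" * 2500, segment_size=1000)  # gets segmented
store.put("small-app.tar.gz", b"a" * 300, segment_size=1000)  # stored whole
print(store.main_bytes(), store.segment_bytes())
```

In this model the main container reports only the small object's bytes while nearly all the data sits in the segments container, and deleting the segments makes the large object unreadable even though its name is still listed.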

I suspect I could get around this if Cloudron allowed larger max part sizes (currently limited to 1 GB), but that's just a theory. I believe this because the issue is easily reproducible: I have mine set to 1 GB so there aren't many files in the segments folder it creates, but if I set the max part size to the minimum allowed in Cloudron, you'd get hundreds of files in there and the segments folder would be created even earlier in the backup process. You should be able to reproduce this easily, though I can certainly offer my environment for assistance if that's easier for you.

I talked to OVH Support about this last year when I first started testing out their Object Storage offering, and they informed me this is an OpenStack concept that they apparently have no control over, and unfortunately the disk space consumed in the segments folder also counts against our storage costs. While I really want to use OVH because they are one of the few (possibly the only one apart from AWS) with the option to keep the data in Canada, this segments thing is a frustrating limitation which complicates things a bit.

OpenStack uses Swift as its native object storage API rather than S3, so I wonder if perhaps using OpenStack's preferred tool would be helpful here? Not sure if that'd help solve problems, but it could allow for greater functionality too, even if it doesn't fix the segments thing. https://docs.openstack.org/ocata/cli-reference/swift.html

And actually, as I am writing this, I guess I can't really leave the segments folder deleted? I think this is because it uses a manifest file behind the scenes to link everything together: https://docs.openstack.org/swift/ussuri/admin/objectstorage-large-objects.html - so maybe I just found the answer to my own question anyways, lol. The problem is, I think, that when Cloudron cleans up backups, it doesn't touch the segments folder, though I'll see if I can confirm that for sure too.

@girish said in Questions on OVH Object Storage with "+segments" (OpenStack) bucket/container:

This is currently an "issue" when restoring. The mail backup is downloaded on start up and the dashboard becomes available only afterwards. This means that if the mail backup is large, it appears to "hang" when really it's just downloading the mail backup. How long has it been since it's downloading the mail backup?

That's interesting, glad to know it's not related to the original issue then. I suspect something still a bit odd there though... my mail tarball image is around 23 GB in size, and it seemed to redirect after starting the restore within about 25 seconds, but that seems far too early to have been able to download 23 GB of data when it's only pushing around 5 Mbps from what I could tell. I can open a new thread on this topic though, as that may be something that needs fixing in later builds, because right now the behaviour would suggest to a user that it either failed or broke, or hung since it looks like it finished yet isn't connected, etc, which isn't a great user experience IMO.
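The back-of-the-envelope reasoning here can be checked with a few lines. This is a sketch assuming decimal units (1 GB = 10^9 bytes, 1 Mbps = 10^6 bits per second); `download_seconds` is a hypothetical helper, not anything from Cloudron.

```python
def download_seconds(size_gb: float, rate_mbps: float) -> float:
    """Seconds needed to transfer size_gb gigabytes at rate_mbps megabits per second."""
    bits = size_gb * 8 * 1000 ** 3
    return bits / (rate_mbps * 1000 ** 2)


# 23 GB mail tarball at roughly 5 Mbps, as estimated in this post.
hours = download_seconds(23, 5) / 3600
print(f"{hours:.1f} hours")
```

At that rate the transfer would take on the order of ten hours, so a redirect after about 25 seconds cannot mean the mail tarball had finished downloading.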

-

@d19dotca said in Questions on OVH Object Storage with "+segments" (OpenStack) bucket/container:

I suspect something still a bit odd there though... my mail tarball image is around 23 GB in size, and it seemed to redirect after starting the restore within about 25 seconds, but that seems far too early to have been able to download 23 GB of data when it's only pushing around 5 Mbps from what I could tell.

Right, so this is exactly the issue. In previous releases (below Cloudron 6), the box backup and mail backup were together in a single tarball. So, the restore UI did not redirect until both were downloaded. In current release (Cloudron 7), box backup and mail backup are separate. The restore UI only waits for box backup to download. Usually this is very small and thus super quick. The mail backup starts downloading immediately after. There is no obvious indication anywhere that it's downloading mail backup, we will fix this shortly.

-

@girish said in Questions on OVH Object Storage with "+segments" (OpenStack) bucket/container:

Right, so this is exactly the issue. In previous releases (below Cloudron 6), the box backup and mail backup were together in a single tarball. So, the restore UI did not redirect until both were downloaded. In current release (Cloudron 7), box backup and mail backup are separate. The restore UI only waits for box backup to download. Usually this is very small and thus super quick. The mail backup starts downloading immediately after. There is no obvious indication anywhere that it's downloading mail backup, we will fix this shortly.

@girish Oh sorry, I misunderstood. I thought you meant it waits for the mail part to download which is why I was unsure how that part was so quickly done haha, so it's just the box data one then which yes that'd be super quick then and explains that part.

-

Quick update: I cleared out the backups from OVH Object Storage and started a fresh backup. The totals of the buckets:

cloudronbackups = 6.74 GB

cloudronbackups+segments = 45 GB

TOTAL = 51.74 GB

The combined total seems about right, given that the mail alone is over 23 GB (zipped) and I have an app around 4 GB in size too, plus the rest of the smaller ones. If I take my unzipped total on my VPS, it's giving me around 63 GB used; of course a few GB of that will be logs and other system data that's not included in the backup, and it's also the uncompressed size. So the 51.74 GB sounds about right to me, plus it's nearly the size I saw when I tried Backblaze and Wasabi too (though it was closer to 55 GB there, but I think I've deleted a few apps since then), so it's not an "OVH is broken" thing, haha.

So I guess maybe it's actually okay: it's just split oddly, but the total disk usage in OVH Object Storage might be totally fine. I'll have to wait a few days and then I'll maybe try to clean up backups and see if it correctly trims the cloudronbackups+segments container.

What's odd to me is just the discrepancy in the sizing between the containers, but I guess the total is still accurate so it must be fine. For example, if everything is truly in the original cloudronbackups folder as it appears to be if you run through the folder listings then the total should be FAR higher than the 6.74 GB it reports, but I suspect this is because it's simply linking to the cloudronbackups+segments container for the rest of the larger files which is why the total still appears correctly between them even if it doesn't quite match the individual sizes when looking at the files in the cloudronbackups container alone.

So I guess moral of the story... the +segments container seems to be required, and should not be deleted, otherwise restoring from it wouldn't work.

Hopefully that kind of makes sense.

-

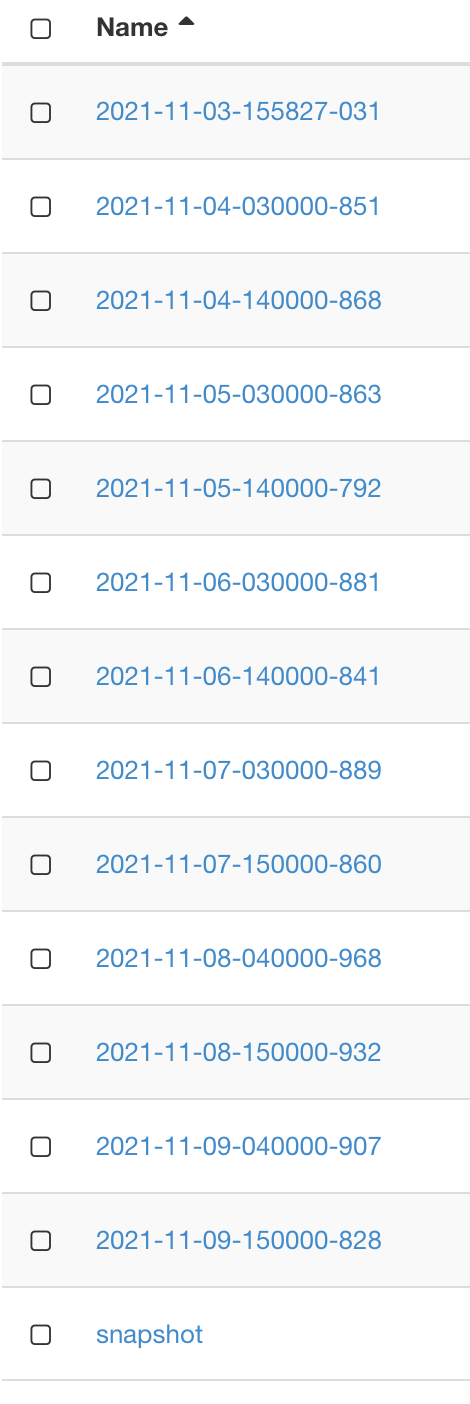

Hi @girish - I think there may be an issue here that Cloudron's scripts will need to account for.

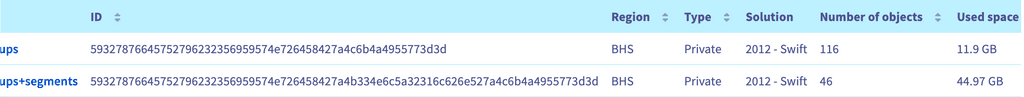

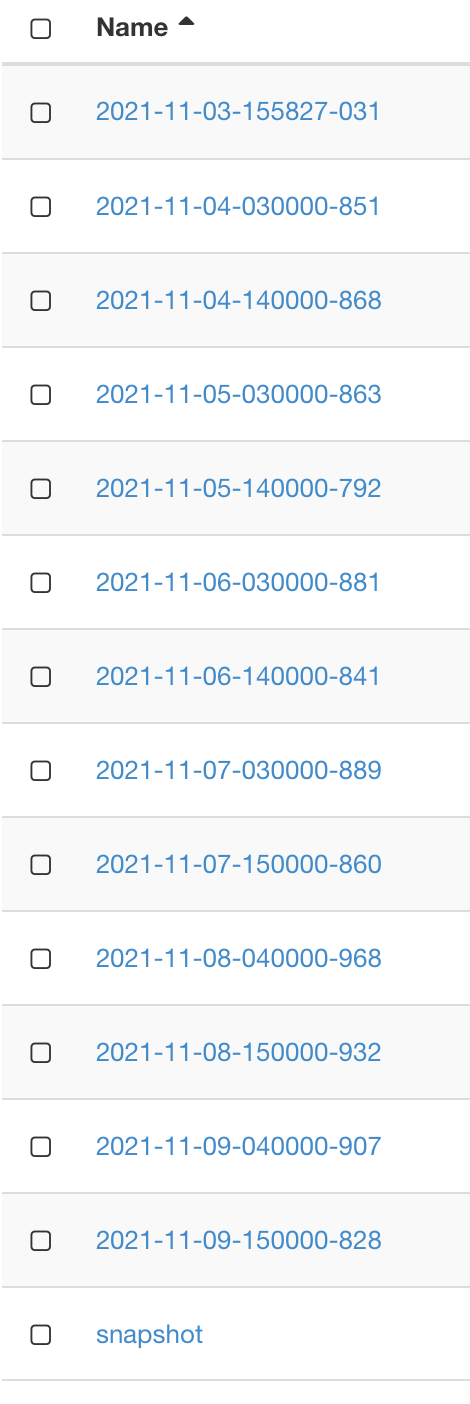

I ran a test with leaving 4 days of backups in OVH Object Storage, and then later changed the retention period to 2 days and ran the cleanup process to ensure the +segments container was also being trimmed properly. What I found is that data was deleted from the main container but not the segments container associated with it. I suspect this is incorrect behaviour as I'd have expected the total GB used between the two containers to be approximately half going from 4 days to 2 days, and that was the case for the main container but the segments container was virtually untouched.

Before cleanup:

cloudronbackups = 432 objects (54.20 GB)

cloudronbackups+segments = 598 objects (585.97 GB)

After cleanup:

cloudronbackups = 204 objects (22.32 GB)

cloudronbackups+segments = 598 objects (585.97 GB)

Notice the cloudronbackups container is down to approximately half, as expected, but the cloudronbackups+segments container is untouched. When I look at the listing of folders in the segments container, I see old references still there too:

What I see in all the older folders is just one file, by the way... the mail file. So since that all changed in 7.x, maybe this is a Cloudron issue and not so much an OVH one? Could it be that it's just not deleting the mail backups regardless of storage type?
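The before/after figures from this test can be compared directly. The numbers below are the ones reported in this post; the `retained_fraction` helper is a hypothetical name used only for this sketch.

```python
# (object count, size in GB) per container, as reported before and after cleanup.
before = {
    "cloudronbackups": (432, 54.20),
    "cloudronbackups+segments": (598, 585.97),
}
after = {
    "cloudronbackups": (204, 22.32),
    "cloudronbackups+segments": (598, 585.97),
}


def retained_fraction(before: dict, after: dict) -> dict:
    """Fraction of objects and of bytes still present in each container."""
    return {
        name: (after[name][0] / before[name][0], after[name][1] / before[name][1])
        for name in before
    }


for name, (objects, size) in retained_fraction(before, after).items():
    print(f"{name}: {objects:.0%} of objects, {size:.0%} of size retained")
```

A retained fraction of exactly 1.0 for the segments container, next to roughly half for the main container, is the signal that the cleanup never touched the segments.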

-

@d19dotca there is a bug in the cleanup of mail backups in 7.0.3. This is fixed in (yet to be released) 7.0.4.

-

Resurrecting an old thread but @samir hit this as well recently. The object storage is growing in size dramatically.

I suspect it is https://bugs.launchpad.net/swift/+bug/1813202. Basically, multipart storage is not cleaned up properly on overwrite/delete. If that's true, I find it a bit shocking, since it incorrectly bills customers.
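One way to gauge how much is affected is to compare listings of the two containers and report segments whose parent object no longer exists. This is only a sketch under a stated assumption: that each segment name starts with the name of the object it belongs to, followed by "/" and further path parts. The listings, object names and upload IDs below are hypothetical, and the function only reports; it deletes nothing.

```python
def orphaned_segments(main_object_names, segment_names):
    """Return segment names with no matching object in the main container."""
    live = set(main_object_names)
    orphans = []
    for segment in segment_names:
        parts = segment.split("/")
        # Every proper "/"-delimited prefix is a candidate parent object name.
        prefixes = {"/".join(parts[:i]) for i in range(1, len(parts))}
        if not (prefixes & live):
            orphans.append(segment)
    return orphans


# Hypothetical listings: one backup still present, one already cleaned up.
main_listing = ["2021-11-03/mail.tar.gz"]
segment_listing = [
    "2021-11-03/mail.tar.gz/UPLOAD1/1",
    "2021-11-03/mail.tar.gz/UPLOAD1/2",
    "2021-11-01/mail.tar.gz/UPLOAD0/1",
]
print(orphaned_segments(main_listing, segment_listing))
```

Note that this would not catch segments left behind when an object is overwritten under the same name, since the parent name still exists; detecting those would require reading the manifest of each live object.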