Expose disk usage / free space to API (preferably with a readonly token)

-

@msbt AFAIK, Linux provides no event for disk usage, so one just has to poll a lot. du-based polling can churn the disk a lot.

I would also like to know how this is done outside Cloudron.

@girish things that don't churn the disk are non-filesystem-based polling methods, such as /proc or other similar kernel-based APIs.

This can also be done in multiple stages: for example, monitor a /proc node for inodes until a calculated threshold is reached, and only then query the disks or filesystem for more specifics.
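A rough sketch of that two-stage idea in bash, for illustration only. It assumes GNU coreutils (`df --output`, `du --max-depth`), and the function names `usage_pct`, `largest_dirs` and `check_disk` are made up for this example. It uses `df` as the cheap first stage rather than a /proc node, since `df` reads the filesystem counters from the kernel without walking the tree.

```shell
#!/usr/bin/env bash

# Stage 1: cheap check. df asks the kernel for filesystem counters,
# so it does not walk the directory tree.
usage_pct() {
    # Prints the used percentage of the filesystem holding $1, e.g. "42"
    df --output=pcent "$1" | tail -n 1 | tr -dc '0-9'
}

# Stage 2: expensive drill-down, only worth running once stage 1 trips.
largest_dirs() {
    # -x stays on one filesystem; the first line is the total for $1 itself
    du -x --max-depth=1 "$1" 2>/dev/null | sort -rn | head -n 5
}

# Returns 1 and prints details when usage on $1 is at or above $2 percent.
check_disk() {
    local mount="$1" threshold="$2" pct
    pct=$(usage_pct "$mount")
    if [ "$pct" -ge "$threshold" ]; then
        echo "ALERT: $mount is at ${pct}% (threshold ${threshold}%)"
        largest_dirs "$mount"
        return 1
    fi
    return 0
}
```

Calling `check_disk / 80` every few minutes would then only trigger the `du` walk once the disk is already at 80% or more.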

-

@girish but we do have the Cloudron notification, it just doesn't send out an email about it. Could we make that a webhook or something so we can trigger alerts? I think that was the case once (email notification), but not anymore it seems.

-

@msbt ah ok, so you just want better notifications. Can do that.

In 7.3.4 at least, we started collecting du information in a cron job (every 24 hours).

@girish yes, I just want to get notified if disk space is running low, either by Cloudron via email (because I'm not always logging in and seeing the notifications) or by some other means (e.g. regularly checking an API endpoint). It's not about the individual space usage of apps, just the df of the main disk.

-

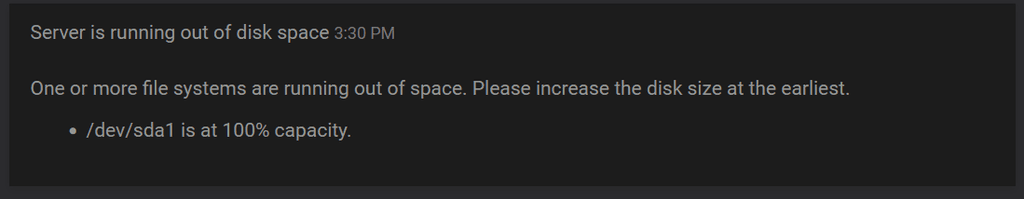

So I just had another machine hitting 100% disk usage without getting notified via email, only the Cloudron notification inside the dashboard (which was pure luck that I opened it up, could have cought it much later):

What's the current status there, was there supposed to be an email or did that get removed with the other notifications (i.e. backup failed). If so, what's the best way to get notified outside of Cloudron if a server is running low on space?

-

There is no email currently. We used to have one, but when there is no disk space you usually cannot send emails from the server itself either. We have to find some other way of sending notifications (e.g. an external webhook).

-

@girish wasn't there a threshold at which that message appears? There should be plenty of space left to send an email at that point (also, my servers are all using Postmark, maybe that would make it easier to send those messages too?)

I would rather get too many emails than have to recover a Cloudron because it ran out of space.

But any kind of notification/webhook/API endpoint to monitor disk space would be much appreciated, that's one of my biggest concerns these days.

-

At least in the past, the situation usually developed very fast rather than gradually over time. For example, a Cloudron with local disk backups enabled where a backup run quickly fills up the disk, or someone syncing large amounts of data via Nextcloud at once.

-

@nebulon true, that is probably the reason in many cases, but I have a current one where the platformdata logs are >15GB now due to some errors in an app. That didn't grow overnight, but still rapidly. Plenty of time to act on it though, if I had a notification about it.

-

I don't understand the issue here.

Simple bash script plus `ntfy` alerting to Telegram

`du` might create disk churn, but I don't think `df` does, or to a much lesser degree.
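To illustrate why a free-space check is cheap, here is a small bash sketch that reads the available space from the filesystem counters in a single call, without touching any files. It assumes GNU `stat` (the `-f -c` options), and `free_mib` and `low_on_space` are names invented for this example.

```shell
#!/usr/bin/env bash

# Free space available to unprivileged users on the filesystem holding $1, in MiB.
free_mib() {
    local avail_blocks block_size
    # %a = free blocks available to non-superuser, %S = fundamental block size
    read -r avail_blocks block_size < <(stat -f -c '%a %S' "$1")
    echo $(( avail_blocks * block_size / 1024 / 1024 ))
}

# Succeeds (exit 0) when free space on $1 is below $2 MiB.
low_on_space() {
    [ "$(free_mib "$1")" -lt "$2" ]
}
```

For example, `low_on_space / 2048 && echo "less than 2 GiB free"`.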

And it's disk FREE space that needs to be alerted, not the results of what's used (that might be a secondary step if disk free is low)

-

@timconsidine of course there are ways to do that outside of Cloudron, but since this affects any and all installations, it would be nice if it was shipped with the platform by default, which will also survive migrations and such.

It would be cool to set a threshold for any disk and mounted volume in `/#/system`, either a fixed number or percentage, for when a notification email is sent out.

-

I get the desire to have it "in Cloudron".

Equally I would have to say that `ntfy` is an app on Cloudron, just as Grafana is.

Seems this is more about wanting Grafana support. Which is fine. No objections to that in itself.

However, given the frequency here in the forum of people needing to recover from full disks and the criticality of such events (server down, multiple apps down, recovery work needed), I'm just suggesting a solution that is 'here and now' rather than waiting.

There's a cron tab on every app in Cloudron.

- Install `ntfy` and set it up to send the alerts how you want (mobile app, Telegram)

- Add this to a cron entry in an app, setting the user name and password, and also the ntfy app location:

*/5 * * * * root_space=$(df -h | grep '/$') && root_usage=$(echo $root_space | awk '{ print int($5) }') && if [ "$root_usage" -gt 80 ]; then echo "Disk usage alert: $root_usage%" | curl -u user:password -X POST --data-binary @- https://ntfy.domain.uk/alerts; fi

Automated no hassle alerts for 5 mins work

No excuse for disk full ever again
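If the one-liner gets hard to maintain, the same idea could be split into a small bash script. This is only a sketch under assumptions: GNU `df` and `curl` are available in the app container, the URL and credentials are placeholders, and the function names are invented here. ntfy uses the request body as the notification text, so the message is simply posted with `curl -d`.

```shell
#!/usr/bin/env bash

# Placeholder values: replace with your own ntfy topic URL and credentials.
NTFY_URL="${NTFY_URL:-https://ntfy.domain.uk/alerts}"
NTFY_AUTH="${NTFY_AUTH:-user:password}"

# Used percentage of the root filesystem as a bare number, e.g. "42".
root_usage() {
    df --output=pcent / | tail -n 1 | tr -dc '0-9'
}

# Prints a message when usage ($1) exceeds the threshold ($2), nothing otherwise.
alert_message() {
    if [ "$1" -gt "$2" ]; then
        echo "Disk usage alert: $1% used"
    fi
}

# Posts the message ($1) to the ntfy topic; -f makes curl fail on HTTP errors.
send_alert() {
    curl -fsS -u "$NTFY_AUTH" -d "$1" "$NTFY_URL"
}

# Checks the root filesystem against a threshold (default 80) and alerts if needed.
disk_alert() {
    local msg
    msg=$(alert_message "$(root_usage)" "${1:-80}")
    if [ -n "$msg" ]; then
        send_alert "$msg"
    fi
}
```

Adding a final line `disk_alert 80` to the script and pointing the cron entry at it would give the same behaviour as the one-liner.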

-

@girish when a Cloudron app is backed up, do the contents of the cron tab get backed up as well?

@timconsidine easy to test by cloning the app.

-

@robi @timconsidine good catch, I don't think it is. Will fix. Opened https://git.cloudron.io/cloudron/box/-/issues/832 to track internally