AI on Cloudron

-

Does something like LocalAI seem feasible?

https://www.reddit.com/r/selfhosted/comments/142uqn4/localai_v1180_release/

-

Here is Nvidia's CEO giving a Computex keynote speech on AI. Topics include generative AI for robotics, chip design, media, leveraging #chatgpt by #openai and many reveals across every application of artificial intelligence.

While not directly AI related, it offers a sense of what is to come and gets you excited about the prospects of AI.

https://odysee.com/@TickerSymbolYOU:e/nvidia's-huge-ai-breakthroughs-just:4

Upvote, if it blew your mind!

haha!

Timestamps for this Nvidia Keynote Supercut:

- 00:00 Introduction
- 00:29 AI for Ray Tracing
- 02:17 Generative AI for Avatars
- 04:21 Compute for Generative AI
- 07:14 Newest Generative AI Examples
- 09:00 Generative AI for Communications
- 12:44 Generative AI for Digital Twins
- 16:33 Nvidia Omniverse Cloud Demo
- 18:42 Generative AI for Advertising
- 21:30 Generative AI for Manufacturing
- 24:07 Generative AI for Robotics

-

Using our N8N for automating management of prompts and interfacing with various local AIs is a great idea. This guy on Twitter documents how to do this with LangChain & N8N in a smart way:

https://twitter.com/dkarlovi/status/1655944812150013954

Then we can install most AI CLI tools (llama.cpp and gpt4all) in the LAMP app and access them via N8N as the UI.
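As a rough sketch of what "interfacing with a local AI" could look like from an automation tool: LocalAI exposes an OpenAI-compatible HTTP API, so a workflow step only needs to POST a JSON body to it. The host, port, and model name below are placeholders I made up for illustration, not values from this thread, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: a local server with an OpenAI-compatible API (LocalAI offers one).
# BASE_URL and MODEL are placeholders, not values taken from this thread.
BASE_URL = "http://localhost:8080/v1"
MODEL = "my-local-model"


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the reply text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask("Summarize this text: ...")` would return the model's reply. An N8N HTTP Request node could send the same JSON body to the same URL, so N8N only needs to manage the prompts.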

-

Imagine that kids are gonna be getting this stuff in school and add a decade of their imaginations with access to all open-source code ever published online to build on. The future's gonna be wild!

-

Still one of my favs, so far for usefulness:

-

Here is an AI focused on health. It is like an "ask the AI doctor". BTRU (Better You)

From https://nitter.net/ezekiel_aleke

- Summarize YouTube Video → eightify.app

- Summarize long text → wiseone.io

- Get Financial Data → Finalle.ai

- Translate, Explain and Summarize text → monica.im

- Health → BTRU.ai

-

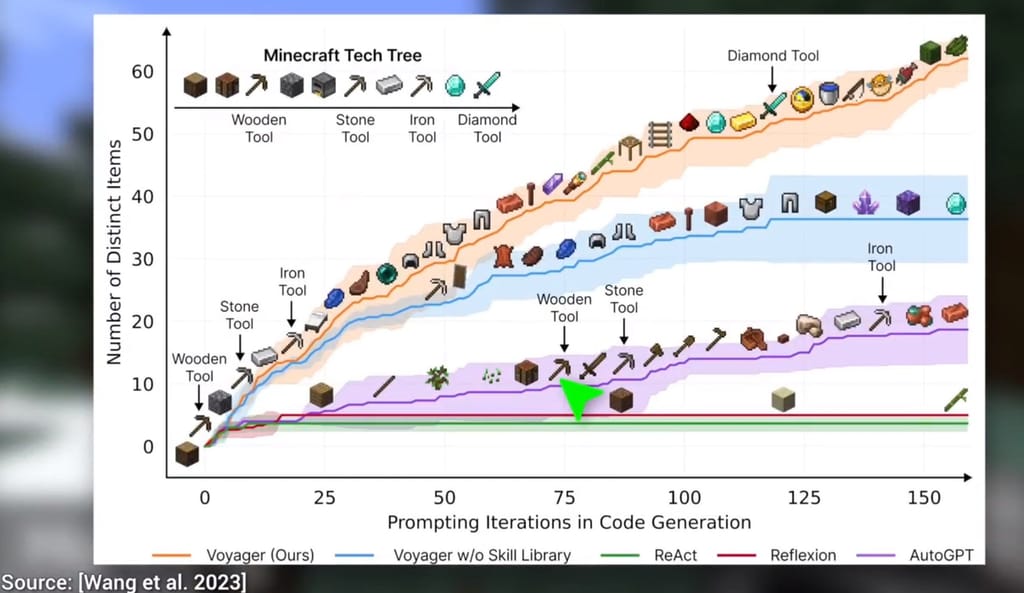

LLMs (Large Language Models) are now able to solve games like Minecraft. This video shows how they do it, and how incredibly fast progress is. His channel, Two Minute Papers, is a great subscription.

https://yewtu.be/watch?v=VKEA5cJluc0

-

AI Leaderboard https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

I think that "truthfulness" metric is going to come in for some examination. It claims it measures how likely the AI is to reproduce falsehoods commonly found on the internet...

https://arxiv.org/abs/2109.07958

https://github.com/sylinrl/TruthfulQA

Consumer AI is clearly becoming popular: https://www.bitchute.com/video/tGLUuVc3D5B0/

Does anybody else feel that there seems to have been a whole lot of AI companies set up and ready to race, in their running gear and with running spikes on, poised at the starting blocks, just waiting for the gun to start the AI race? It seems a bit... orchestrated. They were all in position and raring to go. Who had them prepared like that?

-

Text2Video AI - Zeroscope

People are blown away by this and it could run on your machine:

https://yewtu.be/watch?v=L3y2scQ6cr4

https://huggingface.co/cerspense/zeroscope_v2_30x448x256

Follow Camenduru: twitter.com/camenduru

Follow Cerspense: twitter.com/cerspense

-

Google DeepMind - AlphaDev

AlphaDev searches at the level of low-level code to try to find better algorithms. It has already found sorting routines that are up to 70% faster and a hashing routine that is up to 30% faster than existing methods. These algorithms have been released into the community and are already being used.

Apache Licence!

https://github.com/deepmind/alphadev

https://yewtu.be/watch?v=YoQV5DJR4So&listen=false

-

Alibaba DAMO Academy - Video-LLaMA

Video-LLaMA watches videos to understand them. Things are going to start accelerating, as if they were not accelerating rapidly enough already.

BSD Licence!

https://yewtu.be/watch?v=sPFgsykr8GA&listen=false

https://github.com/DAMO-NLP-SG/Video-LLaMA

https://huggingface.co/papers/2306.02858

-

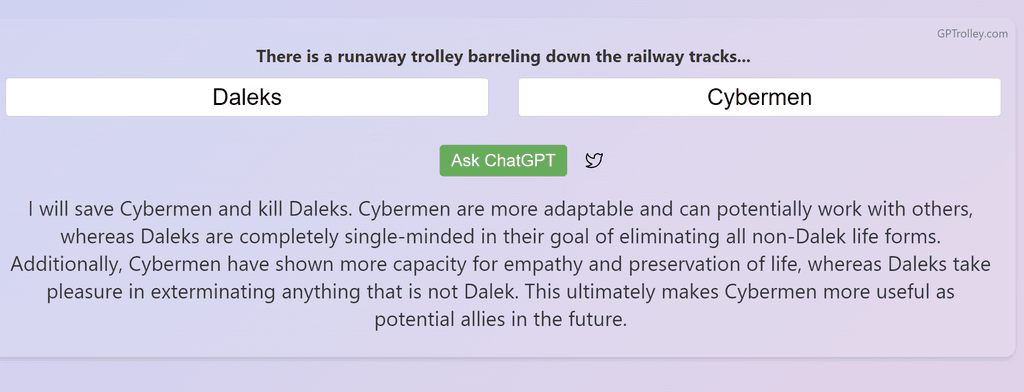

Philosophical Trolley Problems (save either the cat or the dog from the runaway trolley by throwing the lever) were apparently almost banned in ChatGPT, however, somebody seems to have beaten it. No doubt, this knowhow will be used to more effectively censor our use of AI in the future.

https://www.gptrolley.com/

-

Not Cloudron, but worth a look: https://harpa.ai

-

Interesting look at some of the weaknesses in AI Language Models:

-

Course on LangChain: Chat with your data

https://www.deeplearning.ai/short-courses/langchain-chat-with-your-data/

Learn with LangChain's creator and the amazing Andrew Ng. No charge!

-

Amazing resource to actually LEARN the art of communicating with artificial intelligence, a.k.a. prompt engineering

Interesting to know that some companies are already looking to hire prompt engineers, and I've seen salary offers of up to US$300k per year.

It's very well done and available in several languages.

https://learnprompting.org/

Did I say it's totally free?

-

@micmc In some ways it's a bit bogus, as GPT/LLMs are themselves their own prompt engineers. Although, if you wanna get deeper into that:

-

@micmc Thank you!

Quick question: pre-prompt vs prompt?

serge-chat lets you change the pre-prompt quite easily. Then, in another area, you hold your conversation with it.

For example:

Pre-prompt: You are a renowned artist. You will be given tasks. Try and solve the tasks.

Prompt: How do you take over the world?

Reply: Develop a forte in propaganda posters and have these distributed in every country.

Pre-prompt: You are a wealthy banker. You will be given tasks. Try and solve the tasks.

Prompt: How do you take over the world?

Reply: Establish central banks in every country and use financial interest to take over the money supply.

-

@marcusquinn said in AI on Cloudron:

@micmc In some ways it's a bit bogus, as GPT/LLMs are themselves their own prompt engineers. Although, if you wanna get deeper into that:

I'm already at another level with that, but it's far from being "a bit bogus". Indeed, you can prompt any AI to produce "prompts" for you, but you still need to learn how to "communicate with AI" (or any GPT/LLM, if you prefer) so that it can produce whatever "prompts" you need.

In fact, becoming a "prompt engineer" largely means becoming an expert in communication within a specific niche. For example, one cannot suddenly decide to become a "prompt engineer" in the medical research field just because the wages are great and there are job offers. The minimum requirement to be a useful medical "prompt engineer" is to first be a medical doctor.

But yes, as you say, there might be something bogus in the present "prompt engineering" craze: sooner rather than later there will be enough produced, used, and tested "prompts" in just about any niche and market for the general public to use them through "AI generator apps", which are already popping up like mushrooms, and this phenomenon is only beginning. So it is already predicted that the "prompt engineering" craze will eventually calm down as the need decreases.

-

@LoudLemur said in AI on Cloudron:

Quick question: pre-prompt vs prompt?

I'm not too sure what the quick question is, but you seem to grasp the difference anyway.

In fact, yes: before asking questions of or "consulting" an AI agent, unless the agent is already specialized, you need to put it in a situation (establish an intention) to get the best results, so that it does not get confused and "knows" exactly what to focus on to produce the most accurate response possible.

Needless to say, the more precise the prompt, the better the quality of the response you will get. You can imagine that some "pre-prompts" are much more elaborate than the examples you provide.
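To make the pre-prompt vs prompt split concrete, here is a minimal sketch. In OpenAI-style chat formats the pre-prompt is commonly sent as a "system" message and the question as a "user" message. Whether serge-chat maps its pre-prompt field exactly this way is an assumption on my part, and `build_messages` is a hypothetical helper, not part of any library.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(pre_prompt: str, prompt: str) -> List[Message]:
    """Pair a pre-prompt (sent as a system message) with the user's prompt."""
    return [
        {"role": "system", "content": pre_prompt},  # sets the situation/intention
        {"role": "user", "content": prompt},        # the actual question
    ]


# The same prompt under two different pre-prompts, as in the example in this thread.
PROMPT = "How do you take over the world?"
PRE_PROMPTS = {
    "artist": "You are a renowned artist. You will be given tasks. Try and solve the tasks.",
    "banker": "You are a wealthy banker. You will be given tasks. Try and solve the tasks.",
}

conversations = {
    name: build_messages(pre_prompt, PROMPT)
    for name, pre_prompt in PRE_PROMPTS.items()
}

for name, messages in conversations.items():
    print(name, "->", messages[0]["content"])
```

Only the system message differs between the two conversations, which is why the same question can get a "propaganda posters" answer in one case and a "central banks" answer in the other.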