AI on Cloudron

-

GPT-4 is now generally available through the API to all paying API customers. It remains proprietary AI.

https://openai.com/blog/gpt-4-api-general-availability

The Powers That Shouldn't Be don't want people to put "their" values into AI, or to raise doubts. DeepMind is starting to push for 'regulation' of AI.

@LoudLemur said in AI on Cloudron:

The Powers That Shouldn't Be don't want people to put "their" values into AI, or to raise doubts. DeepMind is starting to push for 'regulation' of AI.

Ironically, the title says "We need humans at the top of food chain; says AI pioneer", BUT, startlingly enough, that is actually a FACT!

It's also a paradox, because no AI technology would exist without humans UP there, "at the top of the food chain". It sounds bold, but that's very likely just to make headlines anyway...

Of course, we understand that what he means is that it takes humans constantly overseeing AI apps and processes, so that AI is 'never' left to itself to maintain and enhance itself, or to program even more powerful features into itself without any human supervision. (Yes, supervision is human, not AI's!)

We already have "AI Surveillance Systems" over humans, but, indeed, we also MUST legislate, implement and enforce "Human Surveillance over AI Systems" as well, and forever!

-

@LoudLemur The new Code Interpreter beta is WILD. Try uploading WordPress plugins to it and ask how they work, and for it to create patches and add-ons. Amazing to see it in action!

@marcusquinn said in AI on Cloudron:

@LoudLemur The new Code Interpreter beta is WILD. Try uploading WordPress plugins to it and ask how they work, and for it to create patches and add-ons. Amazing to see it in action!

Can you IMAGINE, man? And this is only the beginning! We'll need to find another expression than "mind-boggling"!

-

Elon Musk announces his new AI company: xAI. It will tell us the truth...

https://x.ai/

https://www.businessinsider.com/elon-musk-new-company-xai-website-2023-7

@LoudLemur said in AI on Cloudron:

Elon Musk announces his new AI company: xAI. It will tell us the truth...

https://x.ai/

-

@micmc said in AI on Cloudron:

The new Code Interpreter beta is WILD. Try uploading WordPress plugins to it and ask how they work, and for it to create patches and add-ons.

Under what licence does it release/create that code? For example, if I had it create a "Hello, world!" browser extension, what would the licence be, or is it just public domain?

-

@LoudLemur I believe their Ts & Cs say they don't claim copyright over the output. With WordPress, it's typical for the GPL to be inherited. Otherwise, I'm sure it can be whatever you want it to be. Ultimately, the value in any code isn't the code itself; it's the usage and the feedback on what the code does. Anything you publish open-source that then gets tried and tested, they'll re-ingest into future LLMs. They're not interested in your license; they're interested in you proving that the code does what it says it does, because then it's valuable to re-ingest to increase their own code understanding and creation capabilities. You work for the robots now

-

@marcusquinn said in AI on Cloudron:

You work for the robots now

Haha! Thanks! I shall be pondering this all day!

-

@LoudLemur said in AI on Cloudron:

@micmc said in AI on Cloudron:

The new Code Interpreter beta is WILD. Try uploading WordPress plugins to it and ask how they work, and for it to create patches and add-ons.

Under what licence does it release/create that code? For example, if I had it create a "Hello, world!" browser extension, what would the licence be, or is it just public domain?

Logically, you own the copyright. Here's why.

AI is a TOOL, just like Word, Excel, WordPress, PHP, Python..., and what you produce with these tools is your WORK, protected by copyright law. The same goes for AI tools.

Moreover, when you think about it, where do you think ALL the DATA used to train LLMs comes from? PLUS, every time we use them, and even tweak the results, we FEED them as well. That's why providers don't care about copyright themselves: it's not their purpose.

-

Plenty of ChatGPT competitors are quickly entering the AI race.

Llama 2: The New Open LLM SOTA

https://www.latent.space/p/llama2

-

@micmc said in AI on Cloudron:

SOTA

Thanks, @micmc!

SOTA (State Of The Art)

People can play with Llama 2 here:

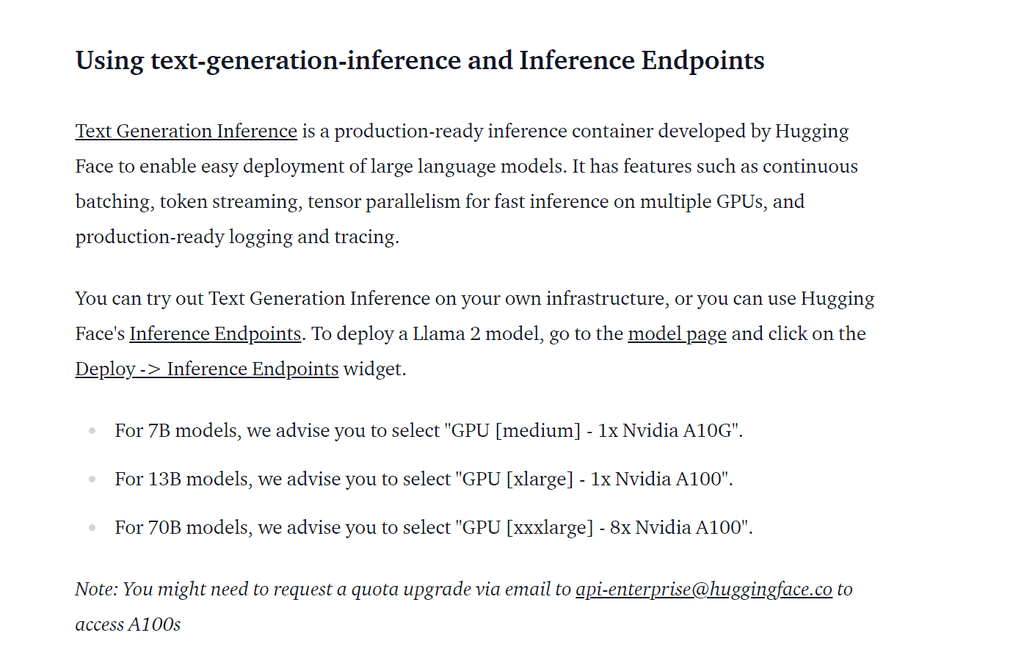

https://huggingface.co/spaces/ysharma/Explore_llamav2_with_TGI

What I notice is that the response time between question and answer is a split second, and I would like to know why! If I try to run this locally, it takes the model half a minute or longer to get going, and then an age to s-l-o-w-l-y print out its reply.

Is it a hardware issue? What would be needed to run it locally at a speed similar to the Hugging Face demo?

What I am hoping is that the answer is not 8x Nvidia A100s!
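Part of the answer is simply memory. A rough rule of thumb (an approximation, not an exact figure) is that the weights alone need parameter count × bytes per parameter; the KV cache, activations and runtime overhead come on top, so treat the numbers as a lower bound. Generation speed also depends on memory bandwidth and the serving software (the Space's name suggests Hugging Face's Text Generation Inference, TGI), which this sketch does not model. The precision labels and the 24 GB / 80 GB card sizes are illustrative assumptions:

```python
import math

# Approximate storage per parameter for common precisions (rule of thumb).
BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantized
    "q4": 0.5,    # 4-bit quantized (ignores per-block scale overhead)
}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate GB (1 GB = 1e9 bytes) needed just to hold the weights.

    KV cache, activations and runtime overhead are NOT included,
    so the result is a lower bound.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def gpus_needed(memory_gb: float, vram_per_gpu_gb: float) -> int:
    """Minimum number of identical GPUs whose combined VRAM fits memory_gb."""
    return math.ceil(memory_gb / vram_per_gpu_gb)


if __name__ == "__main__":
    for size in (7, 13, 70):  # the Llama 2 sizes Meta released
        for precision in ("fp16", "int8", "q4"):
            mem = weight_memory_gb(size, precision)
            print(
                f"{size}B @ {precision}: ~{mem:.1f} GB weights, "
                f">= {gpus_needed(mem, 24)} x 24 GB GPU or "
                f">= {gpus_needed(mem, 80)} x 80 GB GPU"
            )
```

By this estimate, the 70B model at fp16 needs roughly 140 GB just for its weights (at least two 80 GB cards), while a 4-bit quantized 70B (~35 GB) still exceeds a single 24 GB consumer card; the 7B and 13B models quantized fit comfortably on one.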

-

I guess you've come to realize the GPU power required to run a decent ML model

If you want to experiment on a smaller scale, there are already models that work on consumer-grade hardware and that you can install on your local PC. Since LocalAI runs on Docker, it should theoretically run on a VPS as well, and maybe even on Cloudron. I haven't tested this on a VPS yet, but I'm working on it.

Check out https://localai.io/, which does NOT require a GPU and supports several model backends, such as llama.cpp, gpt4all.cpp, whisper.cpp and more. This site is very interesting for AI enthusiasts who want to learn more and test things in depth; it's fascinating.

Enjoy!

-

I'm not sure whether the power users here have realized that AI tools and modules are already being added to Cloudron through several of the apps packaged and maintained by our fearless developer team.

The first one I discovered is in Joplin, since I'm a Joplin power user (this app is amazing). There are several plugins that enhance the app, and one of them, called Jarvis, offers SEVERAL AI features you can use right inside your notes.

Then, in the latest major release of Nextcloud, they added an OpenAI integration module.

And just now, a major release of Chatwoot added OpenAI integration (reply suggestions, summarization, and the ability to improve drafts). This is just the beginning: the app was already very powerful and is getting more so almost by the day.

I guess Rocket.Chat and Mattermost might quickly follow, if they haven't already.

I also know it can be integrated into LibreOffice somehow.

What else, anyone?

-

@micmc said in AI on Cloudron:

What else, anyone?

There is some AI face tagging in the image applications, for example Immich.

I think OCR (Optical Character Recognition) extraction of text from images could count as AI, too.

By the way, @micmc, thank you very much indeed for your brilliant links which are very worthwhile following.

-

We know Automatic1111 and Serge; there is also oobabooga, which is a Serge rival of sorts, I suppose, and which can install Llama 2:

https://github.com/oobabooga/text-generation-webui

There is a video explaining how to do it here:

https://vid.puffyan.us/watch?v=SbuhznykQBg&quality=dash

-

@micmc said in AI on Cloudron:

I guess you've come to realize the GPU power required to run a decent ML model

I sure have! Some people have commented that Meta deliberately didn't release a 30B version of Llama 2, as that would just about be possible to run on consumer-grade hardware.

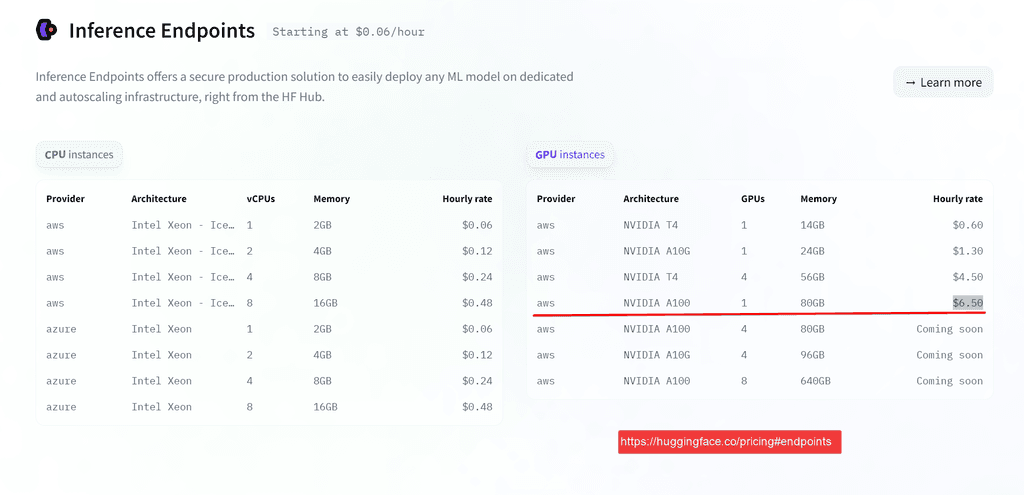

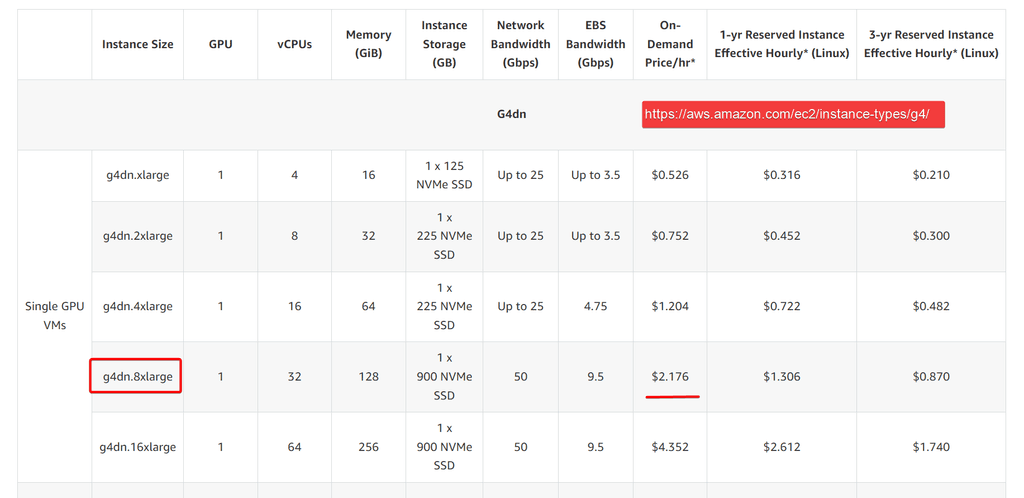

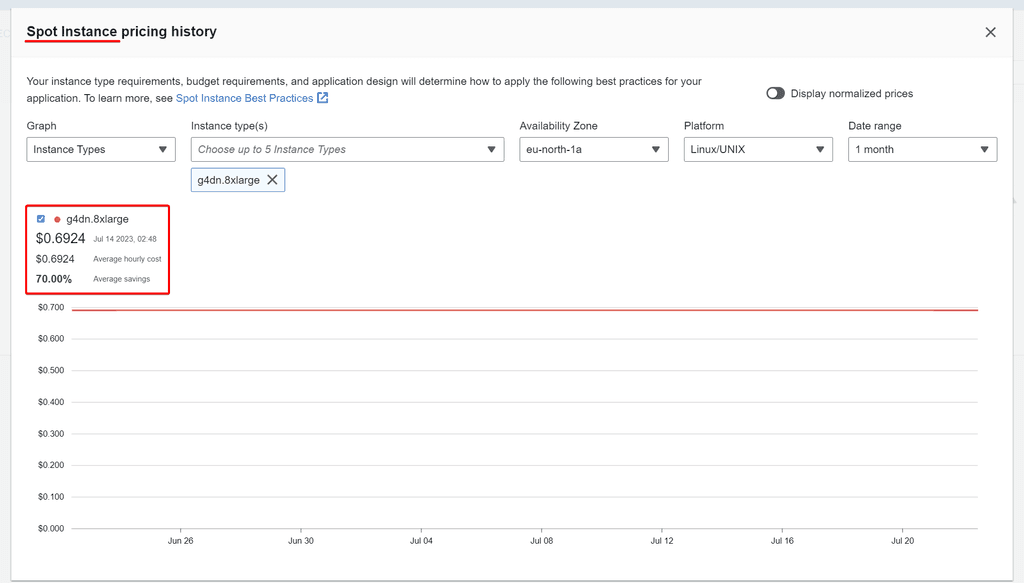

I tried the 70B Llama 2 on Hugging Face Chat and I loved it! I want to try to host it, and I'm trying to figure out how to go about doing that. This is what I am looking at. Maybe others here have better ideas!

https://xethub.com/XetHub/Llama2

Is it relatively easy to just "turn on" a pre-saved instance when you are ready for a session, go full-steam for a couple of hours, and then, when you are finished for the day, "turn off" and just pay the $4? That would be highly efficient.
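Whether "turn off" really leaves you paying only for the session depends on the provider's billing. A minimal sketch of the arithmetic, assuming compute is billed per running hour and that the saved disk keeps being billed while the instance is stopped (common with cloud VMs, but check your provider). Every rate and size below is a hypothetical placeholder; $2/hour for 2 hours is just one way to arrive at the $4 mentioned above:

```python
# All prices below are hypothetical placeholders, not real provider rates.


def session_cost(gpu_hourly_rate: float, hours: float) -> float:
    """Compute charge for one session while the instance is running."""
    return gpu_hourly_rate * hours


def stopped_storage_cost(disk_gb: float, rate_per_gb_month: float, days: float) -> float:
    """Disk charge for keeping the saved instance (a month approximated as 30 days)."""
    return disk_gb * rate_per_gb_month * days / 30


def monthly_total(gpu_hourly_rate: float, hours_per_session: float,
                  sessions: int, disk_gb: float, rate_per_gb_month: float) -> float:
    """Compute for all sessions plus a full month of disk storage."""
    compute = session_cost(gpu_hourly_rate, hours_per_session) * sessions
    storage = stopped_storage_cost(disk_gb, rate_per_gb_month, 30)
    return compute + storage


if __name__ == "__main__":
    # Hypothetical: $2/hour GPU instance, 2-hour sessions, 10 sessions a month,
    # 200 GB disk at $0.10 per GB-month.
    print(f"per session: ${session_cost(2.0, 2):.2f}")
    print(f"per month:   ${monthly_total(2.0, 2, 10, 200, 0.10):.2f}")
```

Under these made-up numbers, the storage of the stopped instance ($20) ends up being a sizeable share of the monthly bill, which is worth checking before assuming a session costs only its compute time.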

-

I was reading through the LocalAI GitHub pages, and they mention a nifty image-upscaling tool, Upscayl. It converted a 640px image to 2560px, and the detail improvement is insane: no more blurriness or pixelation, and it even enhances the colors to make them "pop". I used the ULTRASHARP mode; the first (default) mode in the list does the same job minus the color pop. Here are the images for comparison:

640px ring

2560px Upscayl + compressed (TinyPNG; the forum limits uploads to 4 MB)

-

Talking about LocalAI (localai.io): there is now an OpenAI plugin that can be added to OnlyOffice in Nextcloud.

You can use the OpenAI API by entering your API key, but the plugin can also be used (and this is what they even recommend) with a LocalAI instance running on the same server as Nextcloud. I guess we should start looking into whether a LocalAI instance could run smoothly in a LAMP app on Cloudron, and even be added as an app to Cloudron, since it is already built to run on Docker.
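Swapping OpenAI for a local instance works because LocalAI is designed as a drop-in replacement for the OpenAI REST API, so a client written for OpenAI can usually be pointed at LocalAI by changing only the base URL (and dropping the key). A minimal Python sketch of that idea, not the plugin's actual code; port 8080 and the model name `llama-2-7b-chat` are assumptions, since LocalAI serves whatever models you have configured:

```python
import json
import urllib.request

# Assumed endpoints: LocalAI aims to be OpenAI-compatible.
# Port 8080 and the model name used below are assumptions; adjust to your setup.
OPENAI_BASE_URL = "https://api.openai.com/v1"
LOCALAI_BASE_URL = "http://localhost:8080/v1"


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build an OpenAI-style /chat/completions POST request for either backend."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # OpenAI needs a key; a local LocalAI instance may not
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_chat_request(request, timeout=120):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical model name; only builds the request, no network call here.
    req = build_chat_request(LOCALAI_BASE_URL, "llama-2-7b-chat", "Summarize this document.")
    print(req.get_method(), req.full_url)
```

Calling `send_chat_request(req)` would then only succeed if a LocalAI instance is actually listening at that address; the request-building code is identical for both backends, which is the point of the compatibility layer.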


-

@robi said in AI on Cloudron:

@micmc https://forum.cloudron.io/topic/9399/how-to-run-ai-models-in-lamp-app

Exactly what we need to dig deeper, right!

Thanks, mate.

-

Stack Overflow usage has plummeted as people switch to AI for solutions, so they have now launched OverflowAI:

-

@micmc said in AI on Cloudron:

Then recently in the latest major release of NextCloud they've added an OpenAI integration module.

Nextcloud have an article on this:

https://nextcloud.com/blog/ai-in-nextcloud-what-why-and-how/