OK, I found that the configuration is loaded from the login_source table, which has a JSON column, cfg, holding the OAuth2-specific fields. For reference, here is the relevant row from my login_source table:

SELECT * FROM login_source WHERE type = 6 /*OAuth2*/ AND name = 'cloudron' AND is_active = 1;

| id | type | name | is_sync_enabled | cfg | created_unix | updated_unix | is_active | two_factor_policy |
|----|------|------|-----------------|-----|--------------|--------------|-----------|-------------------|
| 1 | 6 | cloudron | 0 | `{"Provider":"openidConnect","ClientID":"<snip>","ClientSecret":"<snip>","OpenIDConnectAutoDiscoveryURL":"https://my.<cloudron domain>/openid/.well-known/openid-configuration","CustomURLMapping":null,"IconURL":"","Scopes":["openid email profile"],"RequiredClaimName":"","RequiredClaimValue":"","GroupClaimName":"","AdminGroup":"","GroupTeamMap":"","GroupTeamMapRemoval":false,"RestrictedGroup":""}` | 1752293846 | 1752293846 | 1 | |

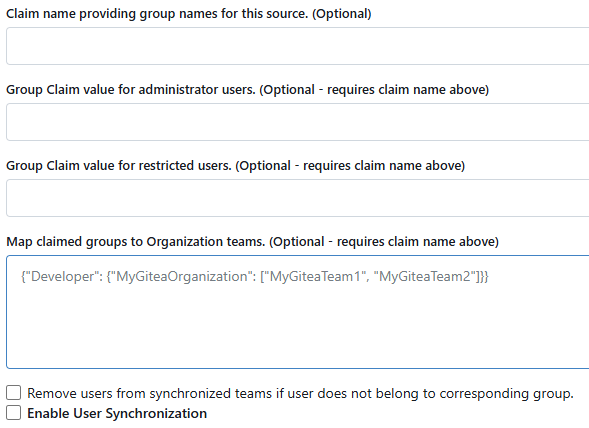

Here you can see the JSON fields GroupClaimName and AdminGroup in the cfg column.

So these configuration settings can be updated today with a query like this:

UPDATE login_source

SET cfg = JSON_SET(cfg, '$.GroupClaimName', '<group claim name>', '$.AdminGroup', '<operators group name>')

WHERE type = 6 /*OAuth2*/ AND name = 'cloudron' AND is_active = 1;

Obviously it would be better if these settings were exposed in app.ini, but this is enough for testing, and we'll have a stronger case for a PR to add them if we come with a live use case.

To me it seems like the next steps are:

- Figure out if Cloudron even exposes the group names and the app's operators group via OAuth2 claims

- Test to see if filling in the GroupClaimName and AdminGroup fields in the UI works

- Test to see if changing it by SQL works

- Decide if this makes sense as a default

- Update the app to use it by default

a. start.sh can launch a background subshell that tries to update these settings by direct query, retrying every 5 seconds until the schema has been populated on first boot and the login_source row has been created from config.

b. Add the settings ENABLE_PASSWORD_SIGNIN_FORM = false and ENABLE_BASIC_AUTHENTICATION = false, and remove the first-time setup task to change the root password.

c. Open an issue with Gitea to expose these settings via app.ini, remove the subshell workaround and use the exposed config options when they are available.
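To make step (a) concrete, here is a rough sketch of what that retry loop could look like. It is untested against a real Cloudron install and rests on several assumptions: the function names, the default claim and group values, and the retry limits are all made up for illustration, and `run_sql` assumes the `mysql` client is available and that the Cloudron MySQL addon variables (`CLOUDRON_MYSQL_HOST` and friends) are set in the app container.

```shell
# Sketch only. The defaults below and the function names are hypothetical.
GROUP_CLAIM_NAME="${GROUP_CLAIM_NAME:-groups}"   # assumed claim name
ADMIN_GROUP="${ADMIN_GROUP:-operators}"          # assumed admin group name
RETRY_INTERVAL="${RETRY_INTERVAL:-5}"            # seconds between attempts
MAX_ATTEMPTS="${MAX_ATTEMPTS:-60}"               # stop after about 5 minutes

# Same row filter as the queries earlier in this post.
ROW_FILTER="type = 6 AND name = 'cloudron' AND is_active = 1"

# Print the UPDATE statement for the given claim name ($1) and admin
# group ($2). Values are interpolated as-is, so they must not contain
# single quotes.
build_update_sql() {
    printf "UPDATE login_source SET cfg = JSON_SET(cfg, '\$.GroupClaimName', '%s', '\$.AdminGroup', '%s') WHERE %s;\n" "$1" "$2" "$ROW_FILTER"
}

# Run one statement ($1) and print the bare result.
# Assumption: connection details come from the Cloudron MySQL addon.
run_sql() {
    mysql --batch --skip-column-names \
        --host="$CLOUDRON_MYSQL_HOST" --port="$CLOUDRON_MYSQL_PORT" \
        --user="$CLOUDRON_MYSQL_USERNAME" --password="$CLOUDRON_MYSQL_PASSWORD" \
        "$CLOUDRON_MYSQL_DATABASE" --execute="$1"
}

# Poll until the login_source row exists, then apply the update once.
# Returns 0 after a successful update, 1 if the attempts run out.
apply_oauth_group_settings() {
    _attempt=1
    while [ "$_attempt" -le "$MAX_ATTEMPTS" ]; do
        # The SELECT fails while the table is missing and prints 0
        # until Gitea has created the row from config.
        _count="$(run_sql "SELECT COUNT(*) FROM login_source WHERE $ROW_FILTER;" 2>/dev/null)" || _count=0
        if [ "${_count:-0}" -ge 1 ] 2>/dev/null; then
            run_sql "$(build_update_sql "$GROUP_CLAIM_NAME" "$ADMIN_GROUP")" && return 0
        fi
        sleep "$RETRY_INTERVAL"
        _attempt=$((_attempt + 1))
    done
    return 1
}
```

start.sh could then kick this off in the background just before starting Gitea, for example with `(apply_oauth_group_settings || echo "could not set OAuth2 group settings") &`. Checking for the row before updating matters because an UPDATE that matches zero rows still exits successfully, so the loop would otherwise stop too early.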