Hi.

When I used Hetzner Storagebox for backups, everything worked just fine. Incremental backups took only around 4 minutes for a 600GB backup. Just great.

I wanted to move to my own server, so I've been doing some tests.

Everything works just fine. I'm using sshfs and rsync with hardlinks.

The first backup, around 700GB now, takes around 10 hours (lots of small photos and stuff, backup server in a different country, etc.), which I consider normal.

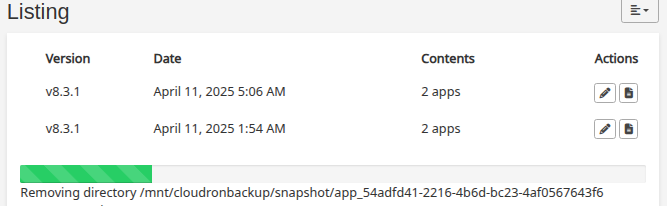

BUT subsequent backups, although (almost) no data is transferred between the servers, take around 3.5 hours.

I think it's because lots of hardlinks are being added/deleted, and that takes a lot of time.

My questions are:

- I tested with ext4, am now testing with xfs. Should I expect xfs to be faster dealing with hardlinks?

- Any tips for mounting the partitions?

- Any ideas to make the whole process faster?

The server is capable enough, no high load on CPU, no IO Wait, so I think that's not the issue.

Also, I'm using just 10 concurrent connections, because with more I didn't notice it getting any faster. And since it's sshfs and there's some latency involved (although just 25ms), I didn't want to saturate the ssh connection.
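
As a rough sanity check on the latency theory, here is a back-of-envelope sketch using the numbers above. It assumes every remote file operation over sshfs (a stat, a hardlink, an unlink) costs one full 25ms round trip and that the 10 connections run perfectly in parallel. Both are simplifying assumptions, not measurements, and the function name is made up for illustration.

```python
def round_trip_budget(duration_min, latency_ms, connections):
    """How many one-round-trip operations fit in the run time.

    Assumes each operation costs exactly one round trip and that all
    connections are kept busy in parallel (a simplification).
    """
    duration_ms = duration_min * 60 * 1000
    return duration_ms // latency_ms * connections


# Numbers from this post: 3.5 hours, 25 ms latency, 10 connections.
budget = round_trip_budget(duration_min=210, latency_ms=25, connections=10)
print(f"{budget:,} round trips fit in 3.5 hours")
```

That gives a budget of roughly 5 million round trips. So if the backup has something like a million files and each one needs a handful of remote operations, latency alone could plausibly explain the 3.5 hours, even with no CPU load or IO wait on either side.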

Any tips are appreciated.

Thanks!