optimizing VPS for backups

-

Hi.

When I used a Hetzner Storage Box for backups, everything worked just fine. Incremental backups took only around 4 minutes for a 600GB backup. Just great.

I wanted to move to my own server, so I've been doing some tests.

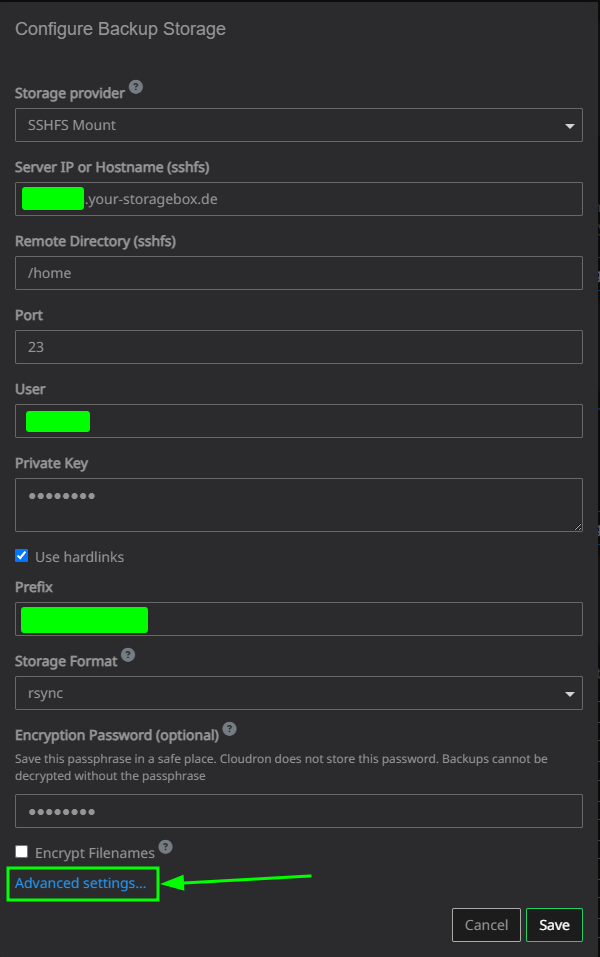

Everything works just fine. I'm using sshfs, rsync with hardlinks.

The first backup, around 700GB now, takes around 10 hours (lots of small photos and stuff, backup server in a different country, etc.), which I consider normal. BUT subsequent backups, although (almost) no data is transferred between the servers, take around 3.5 hours.

I think it's because lots of hardlinks are being added/deleted, and that takes a lot of time.

My questions are:

- I tested with ext4, am now testing with xfs. Should I expect xfs to be faster dealing with hardlinks?

- Any tips for mounting the partitions?

- Any ideas to make the whole process faster?

The server is capable enough, no high load on CPU, no IO Wait, so I think that's not the issue.

Also, I'm using just 10 concurrent connections, because I didn't notice it getting faster with more, and because it's sshfs with some latency involved (although just 25ms) I didn't want to saturate the SSH connection.

Any tips are appreciated.
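One way to check the hardlink theory is to time link creation and removal directly on the target filesystem. The following is a minimal sketch, not anything Cloudron runs: the function name `bench_hardlinks` and the default count are made up for illustration, and it only measures local metadata operations, so running it against an sshfs mount would additionally include network latency.

```python
import os
import sys
import tempfile
import time


def bench_hardlinks(base_dir: str, count: int = 1000) -> dict:
    """Time creating and removing `count` hard links inside base_dir."""
    # Work in a throwaway directory so nothing is left behind.
    with tempfile.TemporaryDirectory(dir=base_dir) as work:
        source = os.path.join(work, "source.bin")
        with open(source, "wb") as fh:
            fh.write(b"x")

        links = [os.path.join(work, f"link-{i}") for i in range(count)]

        start = time.perf_counter()
        for link in links:
            os.link(source, link)  # one metadata operation per hard link
        link_seconds = time.perf_counter() - start

        # st_nlink counts the original name plus every link just created.
        nlink = os.stat(source).st_nlink

        start = time.perf_counter()
        for link in links:
            os.unlink(link)
        unlink_seconds = time.perf_counter() - start

    return {
        "count": count,
        "nlink": nlink,
        "link_seconds": link_seconds,
        "unlink_seconds": unlink_seconds,
    }


if __name__ == "__main__":
    # Pass the directory to test as the first argument (defaults to ".").
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    result = bench_hardlinks(target)
    print(f"{result['count']} links created in {result['link_seconds']:.3f}s, "
          f"removed in {result['unlink_seconds']:.3f}s")
```

Running it once on the backup disk itself and once through the sshfs mount would show how much of the time is the filesystem and how much is the network path.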

Thanks!

-

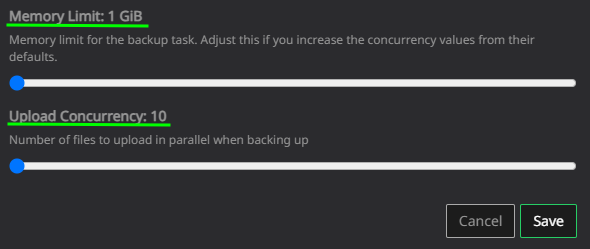

Did you know you can increase the RAM and the concurrency for the backup task? (Maybe this also helps)

opens this:

-

Hi.

Yes, I know. I have it set to 12GB, although I'm only using 10 parallel connections.

Since the backup started (the first one), around 7h ago, it has copied 675GB, but now it's doing "something" that takes forever. iftop shows around 80Kbps of data transfer from the source server.

I'm not sure how much more time it will take.

-

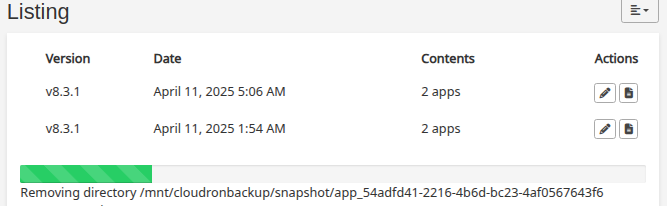

1st backup took around 10h. 745GB.

2nd backup (rsync) took 3h06m, with 10 concurrent connections.

Now running the 3rd backup (rsync) with 100 concurrent connections, to check if it's faster than the 2nd.

I think all this time is spent just deleting and adding hardlinks. Is there any way I can optimize it? Maybe on the server side?

On the Hetzner Storage Box this was way faster, just a few minutes.

Many thanks to you all.
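As a rough sanity check on the latency angle, the numbers from this thread (25ms latency, 10 connections, 3h06m) give an upper bound on how many sequential round trips fit into the second backup. This is a back-of-the-envelope sketch that assumes every remote file operation costs at least one full round trip and that the connections work independently; the function name is made up for illustration.

```python
RTT_MS = 25                      # latency mentioned in the thread
CONNECTIONS = 10                 # concurrency used for the 2nd backup
DURATION_S = 3 * 3600 + 6 * 60   # 3h06m expressed in seconds


def round_trip_budget(duration_s: int, rtt_ms: int, connections: int) -> int:
    """Upper bound on sequential round trips that fit in the given duration."""
    per_connection = duration_s * 1000 // rtt_ms
    return per_connection * connections


if __name__ == "__main__":
    total = round_trip_budget(DURATION_S, RTT_MS, CONNECTIONS)
    print(f"At most {total:,} round trips fit into the backup window")
```

If the backup set has on the order of a million files and each one needs several remote operations (stat, link, unlink), a run time of hours is plausible from latency alone, even with an idle disk and CPU.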

-


@pitadavespa said in optimizing VPS for backups:

with 100 concurrent connections

Keep in mind https://www.hetzner.com/storage/storage-box/

Concurrent connections 10

@pitadavespa said in optimizing VPS for backups:

On Hetzner Storagebox this was way faster, just a few minutes.

Because of a fix @girish implemented that runs some operations directly via SSH on the Storage Box, instead of over FUSE sshfs > network > mount, yada yada.
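To illustrate the difference, here is a hedged sketch of what "running the operation on the remote side" could look like: instead of creating each hardlink through the sshfs mount (at least one round trip per file), a single SSH command asks the remote machine to make a hardlinked copy of the previous snapshot locally. This is not Cloudron's actual implementation, which the thread does not show; the helper name, user, host and paths are hypothetical, and it assumes the remote shell provides GNU `cp` with the `-a` and `-l` options.

```python
import shlex


def remote_hardlink_copy_cmd(user: str, host: str, src: str, dst: str,
                             port: int = 22) -> list:
    """Build an ssh argv that hardlink-copies src to dst on the remote host."""
    # cp -a preserves attributes and recurses; -l creates hard links
    # instead of copying file data.
    remote = f"cp -al {shlex.quote(src)} {shlex.quote(dst)}"
    return ["ssh", "-p", str(port), f"{user}@{host}", remote]


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    cmd = remote_hardlink_copy_cmd("backup", "backup.example.com",
                                   "snapshot", "2024-01-01")
    print(" ".join(shlex.quote(part) for part in cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

The whole link pass then costs one SSH session plus local disk time on the backup server, rather than millions of small requests over the network.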

-

@BrutalBirdie said in optimizing VPS for backups:

Because of a fix @girish implemented that runs some operations directly via SSH on the Storage Box, instead of over FUSE sshfs > network > mount, yada yada.

Thanks.

@girish Could this fix be used in my use case?

-

Since yesterday, I've been testing backups with an XFS partition.

I think something's different, compared to EXT4.

On EXT4, after the first backup almost no data was transferred. It was just a matter of "taking care of" the hardlinks, although it took a few hours.

On XFS, if I'm seeing it right, the hardlinks in the snapshot folder were deleted, and now data is being transferred again from the Cloudron server.

Can this be related to the filesystem? Or maybe the way the partition is mounted?

Thanks for your help.

PS: this is not a problem to me, things are working just fine. I'm just trying to understand, so I can optimize it, if possible.

-

EXT4 and XFS are treated basically the same way from the Cloudron side, so there is no difference in that respect.

Just to be on the same page: you are still using the same sshfs config (rsync with hardlinks) as before, and the only change is that the remote is no longer the Hetzner Storage Box but your own server with some disks mounted?

If so, there is no difference at all from a Cloudron perspective. Maybe the disk I/O in your setup is just not as fast as with Hetzner?

-


@nebulon said in optimizing VPS for backups:

EXT4 and XFS are treated basically the same way from the Cloudron side, so there is no difference in that respect.

Just to be on the same page: you are still using the same sshfs config (rsync with hardlinks) as before, and the only change is that the remote is no longer the Hetzner Storage Box but your own server with some disks mounted?

If so, there is no difference at all from a Cloudron perspective. Maybe the disk I/O in your setup is just not as fast as with Hetzner?

Hi, @nebulon. Thank you for your time.

Yes, the config is the same, except that backups are now being sent to my server. It has an NVMe boot disk and an HDD storage disk for Cloudron backups.

The first backup, 764GB, takes a few hours, just like with the Hetzner Storage Box. Disk I/O is (at least) good enough; I manage 500Mbps easily. With lots of small files it's less, of course.

What differs are the subsequent backups, where (almost) no data is transferred and only hard links are "updated". This takes around 4h. I tested with an XFS partition and am now testing with an EXT4 one. I think this one will handle this operation a bit better. Let's see.

The partition is mounted like this:

UUID=xxxxxxxxxx /mnt/backups ext4 defaults,noatime,nodiratime,data=ordered,commit=15 0 2
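For reference, the fields of that entry can be broken down with a small script. This is only an illustrative sketch with made-up helper names; the two notes it produces rely on documented Linux behaviour (`noatime` already implies `nodiratime`, and `data=ordered` is the ext4 default), and it makes no claim about whether changing any option would speed up the hardlink pass.

```python
FSTAB_LINE = ("UUID=xxxxxxxxxx /mnt/backups ext4 "
              "defaults,noatime,nodiratime,data=ordered,commit=15 0 2")


def parse_fstab_line(line: str) -> dict:
    """Split one fstab entry into its six whitespace-separated fields."""
    device, mount_point, fs_type, options, dump, fsck_pass = line.split()
    opts = {}
    for item in options.split(","):
        key, _, value = item.partition("=")
        opts[key] = value or True  # flags without a value become True
    return {
        "device": device,
        "mount_point": mount_point,
        "fs_type": fs_type,
        "options": opts,
        "dump": int(dump),
        "fsck_pass": int(fsck_pass),
    }


def redundant_options(entry: dict) -> list:
    """List options that only restate what is already in effect."""
    opts = entry["options"]
    notes = []
    if "noatime" in opts and "nodiratime" in opts:
        notes.append("nodiratime")    # implied by noatime
    if entry["fs_type"] == "ext4" and opts.get("data") == "ordered":
        notes.append("data=ordered")  # ext4 default journaling mode
    return notes


if __name__ == "__main__":
    entry = parse_fstab_line(FSTAB_LINE)
    print(entry["options"])
    print("redundant:", redundant_options(entry))
```

The only option here that changes behaviour relative to the defaults, besides `noatime`, is `commit=15`, which raises the journal commit interval from the ext4 default of 5 seconds.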

Any tips to make it better?

Thanks

-

So if this is a locally mounted disk, it mostly comes down to the I/O speed of that disk. Since we just run basic filesystem commands for the backups, I'm not sure I have any further recommendations. Usually the Linux defaults for such things are already good.

One thing though: if you have very precious data there, it is probably not advisable to keep the live and backup data in the same physical location (which I presume is the case, given a local mountpoint).

-

@nebulon I appreciate your advice. The backup server is not in the same location as the Cloudron server. It isn't even in the same country (which is how I handle all my backups).

Maybe I wasn't clear: the server has an OS disk (NVMe) and an HDD for backups. The mount I showed is the HDD mount. I just wanted to check whether any filesystem mount options were needed to improve performance.

Thank you all.
