Thanks. Works.

tobiasb

Posts

-


Hetzner-Cloud-Api: Zone not found

I believe the current implementation of the API does not support paging. The response below only contains page 1 of 2 (25 of 29 zones), so a zone that is listed on page 2 is never found.

Zone not found: Hetzner DNS error 200
{ "meta": { "pagination": { "last_page": 2, "next_page": 2, "page": 1, "per_page": 25, "previous_page": null, "total_entries": 29 } }, "zones": ["25x zones"] }

-
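A minimal Python sketch of paging through all zones by following `meta.pagination` from the response above. `fetch_page` is a hypothetical stand-in for the real HTTP call (endpoint and auth header are not part of this post), and the sketch assumes `next_page` is null on the last page, mirroring `previous_page` on page 1.

```python
from typing import Callable, List, Optional

# Hypothetical: fetch_page(page) performs the HTTP request for one page of
# zones and returns the decoded JSON body, shaped like the response above.
FetchPage = Callable[[int], dict]


def list_all_zones(fetch_page: FetchPage) -> List[dict]:
    """Collect the zones from every page, not only from page 1."""
    zones: List[dict] = []
    page: Optional[int] = 1
    while page is not None:
        body = fetch_page(page)
        zones.extend(body.get("zones", []))
        pagination = body.get("meta", {}).get("pagination", {})
        next_page = pagination.get("next_page")
        last_page = pagination.get("last_page", page)
        # Stop when next_page is null or the current page is already the last one.
        page = next_page if next_page is not None and page < last_page else None
    return zones


def find_zone(fetch_page: FetchPage, name: str) -> Optional[dict]:
    """Search all pages for a zone with the given name."""
    for zone in list_all_zones(fetch_page):
        if zone.get("name") == name:
            return zone
    return None
```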

Today we received a Top20 award for OpenCulturas

To download and install OpenCulturas, just run:

composer create-project --remove-vcs drupal/openculturas_project example.org

Then create a symlink named public that points to example.org/web. I believe you need to remove the existing public directory before you can do that. Then install it via the browser, using the database credentials from credentials.txt. After installation, it is better to replace the hard-coded strings in web/sites/default/settings.php with getenv() calls such as getenv('CLOUDRON_MYSQL_HOST'). That way, if you create a copy of the app, the copy uses its own new credentials and not the credentials of the original app.
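The symlink step could be sketched in Python as follows, assuming the app's writable root (for example /app/data on the Cloudron LAMP app) contains both the public directory and the example.org project created by composer. `link_public_to_web` is a hypothetical helper; it does the same as `rm -rf public && ln -s example.org/web public` run from that root.

```python
import shutil
from pathlib import Path


def link_public_to_web(app_root: Path, project_dir: str = "example.org") -> Path:
    """Replace app_root/public with a relative symlink to <project_dir>/web."""
    public = app_root / "public"
    # Relative target: resolved against app_root, so the link survives a move or copy.
    target = Path(project_dir) / "web"
    if public.is_symlink():
        public.unlink()  # an old link can simply be replaced
    elif public.is_dir():
        shutil.rmtree(public)  # the existing public directory must be removed first
    public.symlink_to(target, target_is_directory=True)
    return public
```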

-

After update to version 2.0.0 and dedicated auth (gitlab)

Yes. When it is used together with the GitLab app, the user management is not OpenID, as documented in the Cloudron docs.

-

After update to version 2.0.0 and dedicated auth (gitlab)

I upgraded the app to the latest version 2.0.0, but when I try to use the registry, I see the following pattern in the logs:

failed to verify token: token signed by untrusted key with ID

On the GitLab side:

Error response from daemon: login attempt to https://dr.example.org:443/v2/ failed with status: 401 Unauthorized

-

After Ubuntu 22/24 Upgrade syslog getting spammed and grows way to much clogging up the diskspace

FYI: The fix was not part of 8.3.2, so now we need to patch it again.

-

After Ubuntu 22/24 Upgrade syslog getting spammed and grows way to much clogging up the diskspace

I would like to see this bugfix in 8.3.x already. Please <3.

-

Backup cleanup task starts but do not finish the job

I would say let's close this here. I do not see any failed cleanup jobs, even though multiple jobs try to do the same work.

-

Backup cleanup task starts but do not finish the job

@nebulon Hetzner Storage Box (Finland) with sshfs, rsync and encrypted filenames.

The host filesystem (Cloudron) is not on an SSD (netcup).

-

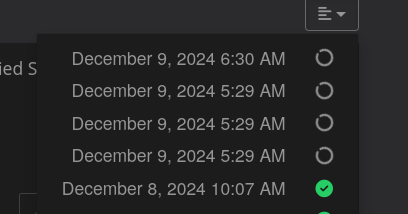

Backup cleanup task starts but do not finish the job

The jobs did not start at the same time: 23:30, 01:30, 03:30 and 05:30.

At the moment only one cleanup is running.

-

Backup cleanup task starts but do not finish the job

The four jobs from above all finished successfully.

So what I take from it: a job just starts and does not care whether another job is already running; perhaps they try to clean up the same thing.

And perhaps it is even faster that way, because job A deletes file A while job B can delete file B if it gets there before job A.

I did not read the entire log to verify that this was the case.

Just an idea:

A cleanup job should claim what it is working on, so that a following job does not try the same thing and waste time only to find out that the files in its in-memory list were already deleted.
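The claiming idea above could look like this as a Python sketch. It is not how box's backupcleaner works; `try_claim`, `release`, `cleanup` and the claims directory are hypothetical. A claim is a marker file created with `O_CREAT | O_EXCL`, which is atomic on a local filesystem, so the claims directory is assumed to live on the Cloudron host rather than on the sshfs mount, where that guarantee may not hold.

```python
import os
from pathlib import Path
from typing import Callable, Iterable, List


def _marker(claims_dir: Path, item: str) -> Path:
    # One marker per backup path, e.g. "2024-11-26-030148-145_app_x.claim"
    return claims_dir / (item.replace("/", "_") + ".claim")


def try_claim(claims_dir: Path, item: str) -> bool:
    """Atomically claim an item; False means another job already holds it."""
    try:
        # O_EXCL makes os.open fail if the marker exists, so only one job wins.
        fd = os.open(_marker(claims_dir, item), os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as f:
        f.write(str(os.getpid()))  # record the owning process for debugging
    return True


def release(claims_dir: Path, item: str) -> None:
    _marker(claims_dir, item).unlink(missing_ok=True)


def cleanup(claims_dir: Path, items: Iterable[str],
            remove: Callable[[str], None]) -> List[str]:
    """Remove every item this job can claim; skip items other jobs are handling."""
    done: List[str] = []
    for item in items:
        if not try_claim(claims_dir, item):
            continue  # another job is already removing it, do not race it
        try:
            remove(item)
            done.append(item)
        finally:
            release(claims_dir, item)
    return done
```

Because a claim is released after removal, a later job with a stale list may still claim the same path, so `remove` should treat an already-missing path as success. Stale markers left by a crashed job would also need to expire, which this sketch does not handle.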

-

Backup cleanup task starts but do not finish the job

The tasks are still running (box-task-21538.service started Sun 2024-12-08 23:30:02 UTC), so I need to wait before I can say which tasks succeeded and which did not.

The directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138 from my first post is gone.

-

Backup cleanup task starts but do not finish the job

If I trust systemctl status box-task-12345.service, all 4 tasks try to remove the same directory:

rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0

I attached strace to the rm -rf process (pid 130049), which is still issuing newfstatat/unlinkat syscalls:

newfstatat(4, "tpgnVSb3898pzsvVCOpQQ-IwDccdLmSNWN1n-DYUdlo", {st_mode=S_IFREG|0644, st_size=2180, ...}, AT_SYMLINK_NOFOLLOW) = 0
unlinkat(4, "tpgnVSb3898pzsvVCOpQQ-IwDccdLmSNWN1n-DYUdlo", 0) = 0

All other tasks try the same and get ENOENT:

newfstatat(4, "FRWM67Hwddxd+j4LlMw0eNkJtl1TB-NY4hupbahkGyTyWLn0Kduf8YTNra3FrvU3", 0x55fcd3be73e8, AT_SYMLINK_NOFOLLOW) = -1 ENOENT (No such file or directory)
unlinkat(4, "FRWM67Hwddxd+j4LlMw0eNkJtl1TB-NY4hupbahkGyTyWLn0Kduf8YTNra3FrvU3", 0) = -1 ENOENT (No such file or directory)

Summary:

box-task-21539.service 130049 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0 # still running
box-task-21538.service 130047 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0
box-task-21543.service 130046 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0
box-task-21545.service 130048 rm -rf /mnt/cloudronbackup/myhost/2024-11-26-030148-145/app_n8n.example.org_v3.57.0

I will wait and see what happens when task 1 is finished.

-

Backup cleanup task starts but do not finish the job

Alongside some ENOTEMPTY errors I see a new error:

box:backupcleaner removeBackup: unable to prune backup directory /mnt/cloudronbackup/myhost/2024-11-26-030148-145: ENOTEMPTY: directory not empty, rmdir '/mnt/cloudronbackup/myhost/2024-11-26-030148-145'
Dec 09 05:28:23 box:backupcleaner removeBackup: error removing app_cef6575d-2b80-4e31-bf0f-01b61e1ca149_v1.4.3_344f2264 from database. BoxError: Backup not found
    at Object.del (/home/yellowtent/box/src/backups.js:281:42)
    at process.processTicksAndRejections (node:internal/process/task_queues:95:5) { reason: 'Not found', details: {} }
Dec 09 05:28:23

-

Backup cleanup task starts but do not finish the job

Yes, internally these are 2 different issues, but both had ~5 running backup cleanup jobs.

I do not see case 1 at the moment, but I still see case 2.

My bet: only one job should be started. This can happen when job 2 tries to do something that job 1 is already doing.

-

Backup cleanup task starts but do not finish the job

Use case 1: On one instance I see multiple backup cleaner jobs that started but never finished, all with the same log messages:

box:taskworker Starting task 3335. Logs are at /home/yellowtent/platformdata/logs/tasks/3335.log
box:backupcleaner run: retention is {"keepWithinSecs":604800}
box:shell mounts: mountpoint -q -- /mnt/cloudronbackup
box:database Connection 422 error: Packets out of order. Got: 0 Expected: 7 PROTOCOL_PACKETS_OUT_OF_ORDER

Use case 2: On another instance there is a different error, but the jobs also started and never finished:

retention is {"keepDaily":3,"keepWeekly":4,"keepMonthly":6}
...
2024-11-29T03:30:29.878Z box:tasks update 21410: {"message":"Removing app backup (b178e0a6-ffe8-4ede-976c-c382f998a50d): app_b178e0a6-ffe8-4ede-976c-c382f998a50d_v3.55.0_97d403e1"}
2024-11-29T03:30:30.451Z box:tasks update 21410: {"message":"2024-11-14-030035-138/app_n8n.example.org_v3.55.0: Removing directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138/app_n8n.example.org_v3.55.0"}
2024-11-29T03:30:30.523Z box:tasks update 21410: {"message":"Removing directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138/app_n8n.example.org_v3.55.0"}
2024-11-29T03:30:30.524Z box:shell filesystem: rm -rf /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138/app_n8n.example.org_v3.55.0
2024-11-29T03:38:00.508Z box:backupcleaner removeBackup: unable to prune backup directory /mnt/cloudronbackup/my_example_org/2024-11-14-030035-138: ENOTEMPTY: directory not empty, rmdir '/mnt/cloudronbackup/my_example_org/2024-11-14-030035-138'
2024-11-29T03:38:00.521Z box:backupcleaner removeBackup: removed 2024-11-14-030035-138/app_n8n.example.org_v3.55.0
...

Use case 2: The jobs have now finished, with an error. Either it is some magic, or there is a hidden timeout.

-

Compress database dump when configured to use rsync as storage format

Please change the title to "Compress database dump when ..."

-

Compress database dump when configured to use rsync as storage format

When rsync is configured, the database dump does not (really) benefit from rsync, so it should always be compressed to save storage and speed up the backup process.
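A minimal sketch of the compression step in Python, assuming the dump has already been written to a plain file (the name dump.sql and the helper `compress_dump` are illustrative, not Cloudron's actual names). It streams the file through gzip in chunks so a large dump is never loaded into memory at once.

```python
import gzip
import shutil
from pathlib import Path


def compress_dump(dump: Path, level: int = 6) -> Path:
    """Write <dump>.gz next to the dump, then delete the uncompressed file."""
    target = dump.with_name(dump.name + ".gz")
    with dump.open("rb") as src, gzip.open(target, "wb", compresslevel=level) as dst:
        shutil.copyfileobj(src, dst)  # copies in chunks, not all at once
    dump.unlink()  # only reached if compression finished without an exception
    return target
```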

-

Disc usage display per app

@girish This alone would already help to rule out that something hidden in the OS is taking up the storage.
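Until such a display exists, per-app usage can be estimated with a sketch like this one. It assumes each app's data sits in its own subdirectory of a common root (/home/yellowtent/appsdata is assumed here for Cloudron); `tree_size` and `usage_per_app` are hypothetical helpers. It sums apparent file sizes, which can differ from the on-disk usage reported by du, and data stored outside that root is not counted.

```python
import os
from pathlib import Path
from typing import Dict


def tree_size(root: Path) -> int:
    """Apparent size in bytes of all regular files below root (symlinks not followed)."""
    total = 0
    stack = [root]
    while stack:
        current = stack.pop()
        try:
            with os.scandir(current) as it:
                entries = list(it)
        except (PermissionError, FileNotFoundError):
            continue  # skip unreadable or vanished directories
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                stack.append(Path(entry.path))
            elif entry.is_file(follow_symlinks=False):
                total += entry.stat(follow_symlinks=False).st_size
    return total


def usage_per_app(apps_root: Path) -> Dict[str, int]:
    """Map each app directory name to its size in bytes, largest first."""
    sizes = {
        d.name: tree_size(d)
        for d in apps_root.iterdir()
        if d.is_dir() and not d.is_symlink()
    }
    return dict(sorted(sizes.items(), key=lambda kv: kv[1], reverse=True))
```

For example, `usage_per_app(Path("/home/yellowtent/appsdata"))` would list the app directories ordered by size.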