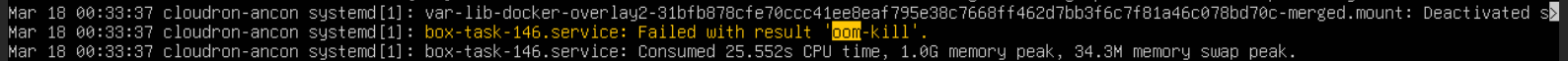

Task 'mvvolume.sh' dies from oom-kill during move of data-directory from local drive to NFS share

-

Looking at the source of the `.sh` file here: https://git.cloudron.io/platform/box/-/blob/master/src/scripts/mvvolume.sh?ref_type=heads

I'd guess that replacing these lines:

```shell
find "${source_dir}" -maxdepth 1 -mindepth 1 -not -wholename "${target_dir}" -exec cp -ar '{}' "${target_dir}" \;
find "${source_dir}" -maxdepth 1 -mindepth 1 -not -wholename "${target_dir}" -exec rm -rf '{}' \;
```

with something along the lines of:

```shell
rsync -a --remove-source-files --exclude "${target_dir}" "${source_dir}/" "${target_dir}/"
```

may end up being less of a resource hog.
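A rough sketch of how that rsync idea could be wrapped up, assuming bash and that the target is either outside the source or a direct child of it. Two details the one-liner glosses over: rsync matches `--exclude` patterns relative to the transfer root, so an absolute `${target_dir}` won't match the target directory, and `--remove-source-files` deletes files but leaves the emptied directory tree behind. The function name `move_dir_contents` is made up for illustration; this is not the actual mvvolume.sh code, and I haven't measured whether it uses less memory than `cp`.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not the actual mvvolume.sh code:
#   move_dir_contents SOURCE_DIR TARGET_DIR
# Assumes TARGET_DIR is either outside SOURCE_DIR or a direct child of it.
move_dir_contents() {
    local source_dir="${1%/}" target_dir="${2%/}"
    local exclude_args=()

    # rsync matches --exclude patterns relative to the transfer root, so the
    # absolute "${target_dir}" would not match. When the target is a direct
    # child of the source, exclude it by name, anchored with a leading "/".
    if [[ "$(dirname "$target_dir")" == "$source_dir" ]]; then
        exclude_args=(--exclude "/$(basename "$target_dir")")
    fi

    mkdir -p "$target_dir"
    # -a keeps permissions, ownership, timestamps and symlinks (hard links
    # need -H as well). --remove-source-files deletes each file once sent.
    rsync -a --remove-source-files "${exclude_args[@]}" "$source_dir/" "$target_dir/" || return

    # --remove-source-files leaves the emptied directories behind; delete them,
    # but never touch the target directory or anything inside it.
    find "$source_dir" -mindepth 1 -type d -empty \
        -not -path "$target_dir" -not -path "$target_dir/*" -delete
}

# Example (placeholder paths):
#   move_dir_contents /home/yellowtent/appsdata /mnt/nfs/appsdata
```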

I'll schedule a RAM increase for the VM this weekend and reboot everything to see if that helps.

-

girish marked this topic as a question on

-

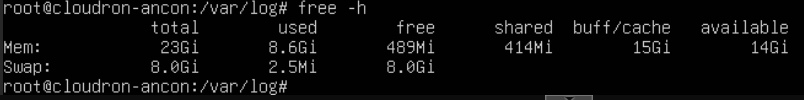

I upped the VM memory allocation from 24GB to 30GB (the whole box has 32GB; I wanted to leave a little for the hypervisor).

Same problem. Partway through the file copy, the oom-kill steps in and spoils the party. The total-vm size reported by the OOM killer was a little larger, so maybe something is using a lot of RAM during that process, but I cannot for the life of me think what it would be.

Have ordered more physical RAM for the server, will add that in the next service window and re-try this to see if it helps.

What's odd is that outside the VM there is no evidence of that amount of memory usage. The Proxmox host itself tracks memory usage, and it doesn't change significantly during that time, and the system monitoring inside the Ubuntu VM shows a peak memory usage of less than 10GB overall.
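One way to see what the OOM killer actually measured: its kernel log line includes the victim's pid, name, `total-vm` (virtual size) and `anon-rss` (resident anonymous memory). The helper below, a hypothetical `oom_victims`, pulls those fields out of `dmesg` or `journalctl -k` output. It assumes the common "Killed process PID (NAME) total-vm:NkB, anon-rss:NkB" wording, which may differ between kernel versions. A large `total-vm` next to a small `anon-rss` would suggest the victim itself wasn't holding much RAM.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise OOM-killer victims from kernel log lines on
# stdin, e.g.  journalctl -k | oom_victims   or   dmesg | oom_victims
oom_victims() {
    # Match "Killed process <pid> (<name>) total-vm:<n>kB, anon-rss:<n>kB"
    # and print only those four fields; all other lines are dropped (-n).
    sed -n -E 's/.*Killed process ([0-9]+) \(([^)]*)\) total-vm:([0-9]+)kB, anon-rss:([0-9]+)kB.*/pid=\1 name=\2 total_vm_kB=\3 anon_rss_kB=\4/p'
}
```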

-

joseph has marked this topic as solved on

-

Perhaps it was in another context than just RAM, like a namespace, or a temporary I/O log that is usually short-lived, except when it isn't.

This reminds me of the old days when copying floppies was inordinately slow because only a few blocks were copied at a time, with a lot of context switching, until someone introduced the xcopy algorithm: read as much of the source into memory as possible at once, then write it all to the destination at once, minimizing block copies and back-and-forth context switching. Later it was improved further to use less memory and copy in nice large chunks, optimizing drive reads and writes (heat, errors) and time.

If the code could copy in predefined chunks, memory use would be bounded and would not fill up on larger runs.
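To make the chunked-copy idea concrete, a minimal bash sketch using `dd`, which reads and writes one block of `bs` bytes at a time, so its buffer stays the same size however large the file is. The helper name `copy_file_in_chunks` and the 4M default are assumptions for illustration. It only bounds the data buffer for a single file; it doesn't address any per-file bookkeeping that a recursive copy of a whole tree might accumulate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy one file in fixed-size blocks.
#   copy_file_in_chunks SRC DST [BLOCK_SIZE]   (BLOCK_SIZE defaults to 4M)
copy_file_in_chunks() {
    local src="$1" dst="$2" chunk="${3:-4M}"
    # bs= sets the read/write block size, so dd holds one block in memory;
    # conv=fsync flushes the output (e.g. on an NFS mount) before dd exits;
    # status=none suppresses the transfer statistics.
    dd if="$src" of="$dst" bs="$chunk" conv=fsync status=none
}
```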

-

@robi said in Task 'mvvolume.sh' dies from oom-kill during move of data-directory from local drive to NFS share:

If the code could copy in predefined chunks, memory use would be bounded and would not fill up on larger runs.

In this situation at least, the code is executing the `cp` tool. Strange behavior really.

-

@girish said in Task 'mvvolume.sh' dies from oom-kill during move of data-directory from local drive to NFS share:

In this situation at least, the code is executing the `cp` tool. Strange behavior really.

That's the issue. See here: https://serverfault.com/questions/156431/copying-large-directory-with-cp-fills-memory

-

@robi interesting link. I haven't found why it takes so much memory though, do you happen to have any information on this?

Do you think it just loads all the files into memory and works off a massive list one by one? If that's the case, yeah... this is a problem. No easy fix other than rolling our own cp, it seems.
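A minimal sketch of what "rolling our own cp" could look like, assuming bash and GNU find: the file list is streamed from `find -print0` one path at a time instead of handing the whole tree to one long-running `cp -a`, so each `cp` process only ever deals with a single file. The function name `stream_copy_tree` is hypothetical. Known trade-offs: directories are created with `mkdir -p`, so their permissions, ownership and timestamps are not preserved; hard-linked files become independent copies; and starting one `cp` per file will be slow on trees with millions of files.

```shell
#!/usr/bin/env bash
# Hypothetical sketch, not Cloudron code:
#   stream_copy_tree SRC DST   (DST must not be inside SRC)
stream_copy_tree() {
    local src="${1%/}" dst="${2%/}"
    mkdir -p "$dst"
    # find lists each directory before its contents, so parent directories
    # exist by the time files inside them are copied. -print0 / read -d ''
    # keeps names with spaces or newlines intact.
    find "$src" -mindepth 1 -print0 |
    while IFS= read -r -d '' path; do
        rel="${path#"$src"/}"
        if [[ -d "$path" && ! -L "$path" ]]; then
            mkdir -p "$dst/$rel"
        else
            # One short-lived cp per regular file or symlink; -a copies
            # symlinks as symlinks and keeps the file's mode and timestamps.
            cp -a "$path" "$dst/$rel"
        fi
    done
}
```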

-

@girish Here's what I found:

https://www.qwant.com/?q=long+run+copies+via+cp+causing+oom+memory

Various OOM workarounds:

https://github.com/rfjakob/earlyoom

https://github.com/hakavlad/nohang

https://github.com/facebookincubator/oomd

https://gitlab.freedesktop.org/hadess/low-memory-monitor/

https://github.com/endlessm/eos-boot-helper/tree/master/psi-monitor

Understanding OOM Score adjustment:

https://last9.io/blog/understanding-the-linux-oom-killer/

Possible LowFree issue:

https://bugzilla.redhat.com/show_bug.cgi?id=536734

Parallel vs sequential copy:

https://askubuntu.com/questions/1471139/fuse-zip-using-cp-reported-running-out-of-virtual-memory

And lastly, since you use stdio as output, perhaps it fills that up somehow. If it were redirected to disk, it might be different.

That might also enable resuming a failed restore.
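Combining the OOM-score and redirect-to-disk suggestions, a hedged sketch of a wrapper (hypothetical name `run_copy_logged`) that sends a long copy's output to a log file and sets the job's `oom_score_adj` (range -1000 to 1000) before `exec`-ing it. A negative value makes the kernel less likely to pick the copy as its victim but needs root (CAP_SYS_RESOURCE). A positive value makes the copy the preferred victim, which protects the rest of the system instead. Which one fits here is a judgement call, and neither reduces the copy's memory use.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper, not part of mvvolume.sh:
#   run_copy_logged LOGFILE OOM_SCORE_ADJ COMMAND [ARGS...]
# Runs COMMAND with stdout/stderr redirected to LOGFILE and the given
# oom_score_adj. Negative values need root (CAP_SYS_RESOURCE).
run_copy_logged() {
    local log="$1" adj="$2"
    shift 2
    (
        # The builtin echo runs in this subshell, so /proc/self is the subshell;
        # the value is inherited by the command exec'd below. A failed write
        # (e.g. no privilege) is silenced and the command still runs.
        { echo "$adj" > /proc/self/oom_score_adj; } 2>/dev/null
        exec "$@"
    ) >"$log" 2>&1
}

# Example (placeholder paths):
#   run_copy_logged /var/log/copy.log -500 cp -a /src/. /mnt/nfs/dst/
```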

-

I lost data due to the odd find commands in the shell script; I wonder why it doesn't use rsync.
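I don't know what caused the loss reported above, but two properties of the original find/cp/rm pair are worth noting. `-not -wholename "${target_dir}"` is a plain string match, so a target passed with a trailing slash (or as a relative path) would not be skipped. Also, as far as I know, a failing `cp` under `-exec ... \;` doesn't stop find or change its exit status, so the `rm -rf` pass runs either way. A hypothetical, more defensive variant (`move_entries_safely`, assuming bash and GNU `realpath -m`) normalises both paths first and deletes each top-level entry only after its own copy succeeded:

```shell
#!/usr/bin/env bash
# Hypothetical safer variant of the find/cp/rm pair, not the actual mvvolume.sh:
#   move_entries_safely SOURCE_DIR TARGET_DIR
move_entries_safely() {
    local source_dir target_dir entry
    # realpath -m canonicalises even missing paths: strips trailing slashes,
    # resolves "." / ".." and symlinks, so the comparison below is reliable.
    source_dir="$(realpath -m "$1")"
    target_dir="$(realpath -m "$2")"
    mkdir -p "$target_dir"
    # Top-level entries, including dotfiles (but not "." or "..").
    for entry in "$source_dir"/* "$source_dir"/.[!.]* "$source_dir"/..?*; do
        [[ -e "$entry" || -L "$entry" ]] || continue   # skip unmatched globs
        [[ "$entry" == "$target_dir" ]] && continue    # never copy the target into itself
        if cp -a -- "$entry" "$target_dir/"; then
            rm -rf -- "$entry"                         # delete only after a good copy
        else
            echo "copy failed, keeping source: $entry" >&2
            return 1
        fi
    done
}
```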

-

@joseph said in Task 'mvvolume.sh' dies from oom-kill during move of data-directory from local drive to NFS share:

I think rsync requires a server, no?

No, not even over SSH. There's a daemon mode, and it can work as a server, but it's not required.

-

@necrevistonnezr I guess you mean the rsync binary. Sure that will work "locally" but the real benefit of rsync (protocol) comes from having a server component. This server component will not be used with nfs mounts (the topic of this thread). If you want to use rsync just locally, there are also other tools you can use.

I don't have any more context than that one sentence, though.

I was just replying in passing.